This morning at Code School we released JavaScript.com, a free community resource that serves two purposes: to provide a starting point to learn JavaScript, and to keep JavaScript developers up to date with the latest news, frameworks, and libraries.

Since the purpose of JavaScript.com is to teach everyone about web development, I couldn’t resist this opportunity to spread a little knowledge about the site’s deployment process. In this article, I’ll give you a peek behind the curtain: the stack we’re using and why we’re hosting with DigitalOcean.

How Does JavaScript.com Work?

JavaScript.com is developed in JavaScript, of course. We chose Node.js for its speed, ease of use, and community support. But that’s not the whole story.

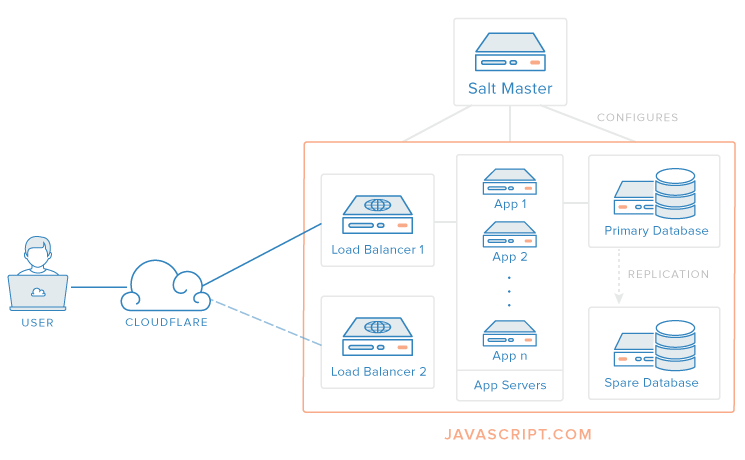

We don’t want every web request to make it all the way to our application servers, since that would strain them unnecessarily. So before a request reaches the application, it passes through several layers that offload traffic and balance it across multiple servers.

Content Delivery & Caching

The first stop on your request’s journey is CloudFlare. We’ve been experimenting with CloudFlare for some time at Code School, as both a security layer and an extra caching layer. If CloudFlare sees a request for a static page that was generated recently, it returns the cached copy from one of its data centers rather than asking our application servers to regenerate the page. This sort of page and asset caching works extremely well for a read-heavy site like JavaScript.com. After all, the best cached request is one that never even hits your server.
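To make the caching idea concrete, here is a minimal TypeScript sketch of a time-to-live (TTL) cache sitting in front of an origin. It is not how CloudFlare is implemented or configured; `EdgeCache`, `OriginFetch`, and the 60-second TTL are hypothetical names and values chosen for illustration, and the origin function simply stands in for our application servers.

```typescript
// Hypothetical stand-in for the application servers that render pages.
type OriginFetch = (path: string) => string;

interface CacheEntry {
  body: string;
  storedAt: number; // time the page was cached, in milliseconds
}

// Minimal TTL cache: a recently generated page is served from memory, and the
// origin is only called on a miss or once the stored copy is older than ttlMs.
class EdgeCache {
  private entries: { [path: string]: CacheEntry } = Object.create(null);
  private readonly origin: OriginFetch;
  private readonly ttlMs: number;
  originHits = 0;

  constructor(origin: OriginFetch, ttlMs: number) {
    this.origin = origin;
    this.ttlMs = ttlMs;
  }

  // `now` is passed in explicitly so the example is deterministic.
  get(path: string, now: number): string {
    const cached = this.entries[path];
    if (cached && now - cached.storedAt < this.ttlMs) {
      return cached.body; // hit: the origin never sees this request
    }
    this.originHits += 1;
    const body = this.origin(path);
    this.entries[path] = { body, storedAt: now };
    return body;
  }
}

const cache = new EdgeCache((path: string) => "<html>" + path + "</html>", 60000);
cache.get("/news", 0); // miss: generated by the origin
cache.get("/news", 30000); // hit: served from the cache
cache.get("/news", 90000); // stale after 60 s: regenerated by the origin
console.log(cache.originHits); // 2
```

Three requests cost the origin only two renders; on a read-heavy site with many visitors per page, the ratio gets much better than that.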

Load Balancing

After CloudFlare, any uncached request goes through one of two load balancers running HAProxy. These load balancers let us quickly add new application servers to the mix as load increases. A single HAProxy process can handle many millions of requests per day, so we run only two: one serves requests from the Internet, and the other waits on standby, ready to take over if the active server fails. This is called a “hot standby” configuration.
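The two jobs of this tier can be modeled in a few lines. This TypeScript sketch is hypothetical: it is not HAProxy configuration, and `pickBalancer`, `BackendPool`, `lb-1`, and `app-1` are invented names. It assumes a simple health flag decides between the active balancer and its standby (the mechanism that actually moves traffic over is not covered here) and uses round-robin, one of the balancing algorithms HAProxy offers, to spread requests across application servers.

```typescript
// Hypothetical model of the load-balancing tier: an active/standby pair of
// balancers in front of a pool of application servers that can grow.
interface Balancer {
  name: string;
  healthy: boolean;
}

// Hot standby: the active balancer takes all traffic while healthy; the
// standby is already running and is used only when the active one fails.
function pickBalancer(active: Balancer, standby: Balancer): Balancer {
  if (active.healthy) return active;
  if (standby.healthy) return standby;
  throw new Error("no healthy load balancer");
}

// Round-robin across application servers; servers can be added at any time.
class BackendPool {
  private servers: string[] = [];
  private next = 0;

  add(server: string): void {
    this.servers.push(server);
  }

  route(): string {
    if (this.servers.length === 0) throw new Error("no application servers");
    const server = this.servers[this.next % this.servers.length];
    this.next += 1;
    return server;
  }
}

const lbA: Balancer = { name: "lb-1", healthy: true };
const lbB: Balancer = { name: "lb-2", healthy: true };
console.log(pickBalancer(lbA, lbB).name); // lb-1
lbA.healthy = false; // simulate the active balancer failing
console.log(pickBalancer(lbA, lbB).name); // lb-2

const pool = new BackendPool();
pool.add("app-1");
pool.add("app-2");
console.log(pool.route(), pool.route(), pool.route()); // app-1 app-2 app-1
pool.add("app-3"); // scaling out: the new server joins the rotation
```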

Application Servers

Finally, HAProxy routes the request to one of our application servers, which run NGINX and Phusion Passenger. NGINX is a web server that handles static requests (like images), while Phusion Passenger runs inside NGINX and manages our Node processes and dynamic requests (like the comments page). We really enjoy Passenger on the DevOps side of things, since it makes Node process management dead simple.
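As a rough sketch of that split, the function below decides which half of an application server would answer a request. It assumes a hard-coded list of static file extensions; `routeRequest` and `STATIC_EXTENSIONS` are hypothetical, and real NGINX setups usually make this decision with `location` blocks or by checking whether a matching file exists on disk.

```typescript
// Hypothetical split: NGINX answers static assets directly, and everything
// else goes to a Node process managed by Passenger.
type RequestHandlerKind = "nginx-static" | "passenger-node";

// Illustrative extension list; not JavaScript.com's real configuration.
const STATIC_EXTENSIONS = ["css", "js", "png", "jpg", "gif", "svg", "ico"];

function routeRequest(path: string): RequestHandlerKind {
  const clean = path.split("?")[0]; // ignore any query string
  const lastSegment = clean.substring(clean.lastIndexOf("/") + 1);
  const dot = lastSegment.lastIndexOf(".");
  const ext = dot >= 0 ? lastSegment.substring(dot + 1).toLowerCase() : "";
  return STATIC_EXTENSIONS.indexOf(ext) >= 0 ? "nginx-static" : "passenger-node";
}

console.log(routeRequest("/images/logo.png")); // nginx-static
console.log(routeRequest("/news/42/comments")); // passenger-node
```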

All of these application servers are designed to run independently of one another, so we can fire up as many as we need to handle whatever traffic JavaScript.com sees.
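Because the servers are independent, sizing the tier comes down to simple arithmetic: divide the expected peak load by what one server can comfortably handle. The sketch below uses hypothetical throughput numbers and 25% headroom; `serversNeeded` is an illustrative helper, and the floor of two servers mirrors the minimum of two application servers described below.

```typescript
// Back-of-the-envelope sizing for a tier of independent application servers.
// All numbers are hypothetical; measure your own per-server throughput.
function serversNeeded(
  peakRequestsPerSecond: number,
  perServerRequestsPerSecond: number,
  headroom: number, // fraction of capacity kept spare, e.g. 0.25
  minimum: number // never run fewer servers than this
): number {
  const usablePerServer = perServerRequestsPerSecond * (1 - headroom);
  return Math.max(minimum, Math.ceil(peakRequestsPerSecond / usablePerServer));
}

console.log(serversNeeded(100, 200, 0.25, 2)); // 2 (the minimum wins)
console.log(serversNeeded(1000, 200, 0.25, 2)); // 7
```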

Database Servers

To store all those stories and comments, JavaScript.com uses PostgreSQL, an extremely fast and reliable database that we use across Code School for a variety of applications.

The database always gets its own server, along with a spare in case the main server ever goes down. As a bonus, the spare runs as a PostgreSQL hot standby, so the JavaScript.com application can use it as a read-only database if the main server comes under too much load.
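Here is a minimal sketch of how an application might route queries between the two database servers, assuming a simple load threshold decides when reads spill over to the standby. `chooseDatabase`, `DbNode`, and the 0.8 threshold are hypothetical. The sketch does not model promoting the standby after a failure, and because PostgreSQL streaming replication is asynchronous by default, reads served by the standby may lag slightly behind the primary.

```typescript
// Hypothetical query router for a primary plus a read-only hot standby.
interface DbNode {
  name: string;
  up: boolean;
  load: number; // hypothetical utilization metric between 0 and 1
}

type QueryKind = "read" | "write";

function chooseDatabase(
  kind: QueryKind,
  primary: DbNode,
  standby: DbNode,
  loadThreshold: number
): DbNode {
  if (kind === "write") {
    // A hot standby accepts only read-only queries, so writes need the primary.
    if (!primary.up) throw new Error("primary unavailable; writes cannot go to the standby");
    return primary;
  }
  if (primary.up && primary.load <= loadThreshold) return primary;
  if (standby.up) return standby; // the read-only standby absorbs overflow reads
  if (primary.up) return primary; // standby down: keep using the busy primary
  throw new Error("no database available for reads");
}

const primary: DbNode = { name: "db-primary", up: true, load: 0.95 };
const standby: DbNode = { name: "db-standby", up: true, load: 0.2 };
console.log(chooseDatabase("write", primary, standby, 0.8).name); // db-primary
console.log(chooseDatabase("read", primary, standby, 0.8).name); // db-standby
```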

One Command to Deploy Them All

JavaScript.com is made up of a minimum of six servers: two load balancers, two application servers, and two database servers. Since we want to be able to scale out at a moment’s notice, we need a way to spin up new servers quickly and automatically. Enter SaltStack and DigitalOcean.

SaltStack is a configuration management system for servers that lets us automate the following steps:

- Deploy a new DigitalOcean server

- Configure the server to run JavaScript.com

- Grant access to the database servers

- Add the new server to the load balancer so it can start handling requests

SaltStack finishes this provisioning process for a new server in just a few minutes. Code deployments work exactly the same way, since SaltStack also knows how to ship new code to existing servers.
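The four steps above can be sketched as an idempotent pipeline: each step checks the current state before acting, so running it against a brand-new server provisions it, while running it again with a new version only ships the code. This is a hypothetical in-memory model in TypeScript, not Salt’s API (in SaltStack the equivalent work would be expressed as states and cloud profiles); `provision`, `Infra`, `app-3`, and the version strings are invented for illustration.

```typescript
// Hypothetical in-memory model of the provisioning steps. Each step checks
// current state first, so re-running the pipeline only fixes what is out of date.
interface Infra {
  servers: { [name: string]: { codeVersion: string | null } };
  dbAllowList: string[];
  loadBalancerPool: string[];
}

function provision(infra: Infra, name: string, codeVersion: string): string[] {
  const actions: string[] = [];

  // 1. Create the server (stand-in for asking the provider for a new Droplet).
  if (!Object.prototype.hasOwnProperty.call(infra.servers, name)) {
    infra.servers[name] = { codeVersion: null };
    actions.push("create " + name);
  }
  // 2. Configure it to run the application at the desired code version.
  if (infra.servers[name].codeVersion !== codeVersion) {
    infra.servers[name].codeVersion = codeVersion;
    actions.push("deploy " + codeVersion + " to " + name);
  }
  // 3. Grant it access to the database servers.
  if (infra.dbAllowList.indexOf(name) < 0) {
    infra.dbAllowList.push(name);
    actions.push("grant db access to " + name);
  }
  // 4. Register it with the load balancer so it starts handling requests.
  if (infra.loadBalancerPool.indexOf(name) < 0) {
    infra.loadBalancerPool.push(name);
    actions.push("add " + name + " to load balancer");
  }
  return actions;
}

const infra: Infra = { servers: {}, dbAllowList: [], loadBalancerPool: [] };
console.log(provision(infra, "app-3", "v1").length); // 4 (brand-new server)
console.log(provision(infra, "app-3", "v1").length); // 0 (already up to date)
console.log(provision(infra, "app-3", "v2").join(", ")); // deploy v2 to app-3
```

Treating a code deploy as "provision again with a newer version" is what lets one command cover both jobs.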

To scale out horizontally, we needed a provider that can spin up servers not only very quickly but also programmatically. DigitalOcean works very well on both fronts, in multiple locations around the world. If we end up with more traffic than expected, more capacity is just a salt-cloud command away.

Conclusion

To create a website that’s ready to handle a great deal of traffic, you need a detailed plan. Not only do you need a caching layer, but you must also be able to spin up new servers quickly as more uncacheable requests flow in. The first step in this process is separating your stack into layers so that each can scale independently. The second is making use of tools like HAProxy, SaltStack, and DigitalOcean to bring it all together.

Now that you’ve read about JavaScript.com’s stack, go try it — and feel free to promote your own stack, framework, or library on our community news page.

by Thomas Meeks