Announcing cost-efficient storage with usage-based backups, cold storage, and Network file storage


As data footprints grow, businesses need cost-efficient storage for infrequently accessed data, high-performance file systems for collaborative work, and more aggressive data protection policies to meet strict recovery objectives. We’re introducing several significant enhancements to our storage portfolio to help you address the challenges of data management, protection, and scaling.

TL;DR

Usage-based backups are now generally available to meet aggressive RPOs. Check out our documentation to learn more, and head over to the DigitalOcean console to enable backups for your Droplets or GPU Droplets.

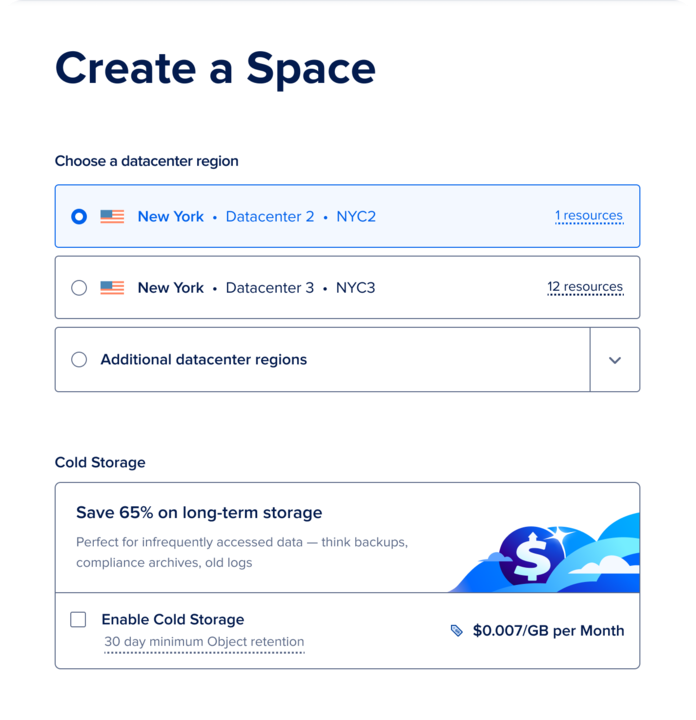

Spaces cold storage for infrequently accessed data is now generally available. Visit our documentation to learn more, and head over to the DigitalOcean console to set up Spaces cold storage.

Our Network file storage solution, designed specifically for high-performance AI workloads, is now generally available in the DigitalOcean console. To learn more, attend our webinar or visit the product documentation page.

Usage-based backups to meet aggressive RPOs

Our new usage-based backup service is now generally available. It lets users with strict Recovery Point Objectives (RPOs) schedule backups every 4, 6, or 12 hours, with retention policies for Droplets configurable from 3 days to 6 months.
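As a rough illustration of how schedule and retention interact, the sketch below counts how many restore points a policy keeps and what its worst-case RPO is. It assumes backups run exactly on schedule and that every backup younger than the retention window is kept; `backup_plan` and the interval list are hypothetical helpers, not part of any DigitalOcean API.

```python
from datetime import timedelta

# Backup intervals mentioned in this post, in hours (4h, 6h, 12h, daily, weekly).
SUPPORTED_INTERVALS_HOURS = (4, 6, 12, 24, 168)


def backup_plan(interval_hours: int, retention: timedelta) -> dict:
    """Estimate retained restore points and worst-case RPO for a schedule.

    Hypothetical helper: assumes backups run exactly every `interval_hours`
    and that every backup younger than `retention` is kept.
    """
    if interval_hours not in SUPPORTED_INTERVALS_HOURS:
        raise ValueError(f"unsupported interval: {interval_hours}h")
    interval = timedelta(hours=interval_hours)
    return {
        # timedelta // timedelta yields an int: whole intervals in the window.
        "restore_points": retention // interval,
        # Worst case: an incident strikes just before the next backup runs.
        "worst_case_rpo": interval,
    }


plan = backup_plan(4, timedelta(days=3))
print(plan["restore_points"])  # 18
```

A 4-hour schedule with the minimum 3-day retention therefore keeps roughly 18 restore points, and at most about 4 hours of changes are at risk.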

Usage-based backups are paired with a transparent, consumption-based billing model: you are charged only for the amount of restorable data stored, at a per-GiB rate that depends on backup frequency.

- Weekly: $0.04/GiB-Month
- Daily: $0.03/GiB-Month
- 12 Hour: $0.02/GiB-Month
- 6 Hour: $0.015/GiB-Month
- 4 Hour: $0.01/GiB-Month

This means you pay only for what you use, with no hidden fees for snapshot operations. The result is the flexibility to build a recovery plan that is both technically sound and financially viable, especially for high-change environments in regulated industries or for development environments.
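For a back-of-envelope estimate, a month's charge is the restorable data multiplied by the rate for the chosen frequency. The sketch below assumes `restorable_gib` is the month's average restorable data and ignores proration; `monthly_backup_cost` is a hypothetical helper, and how much restorable data a given schedule and retention produce depends on your workload.

```python
# USD per GiB-month of restorable data, copied from the pricing list above.
RATES_PER_GIB_MONTH = {
    "weekly": 0.04,
    "daily": 0.03,
    "12h": 0.02,
    "6h": 0.015,
    "4h": 0.01,
}


def monthly_backup_cost(restorable_gib: float, frequency: str) -> float:
    """Rough monthly cost: restorable data times the rate for the frequency.

    Assumes `restorable_gib` is the average restorable data over the month;
    proration and rounding on an actual invoice may differ.
    """
    if restorable_gib < 0:
        raise ValueError("restorable_gib must be non-negative")
    return round(restorable_gib * RATES_PER_GIB_MONTH[frequency], 2)


for freq in ("weekly", "4h"):
    print(freq, monthly_backup_cost(500, freq))
```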

Use cases:

- Compliance-driven organizations: Support workloads subject to stringent compliance standards such as HIPAA and SOC 2 with frequent backups that can be retained for longer periods, providing a granular audit trail for sensitive data.
- Gaming and AI startups: Protect rapidly changing user data and AI models on GPU Droplets with high-frequency backups, allowing for quick rollbacks in case of an issue.
- Development environments: Shorten RPOs in continuous integration/continuous deployment (CI/CD) pipelines and other dev workflows by using 4- or 6-hour backups to protect code and data changes.
- SaaS environments: Safeguard rapidly changing user data in customer support platforms and SaaS tools with more frequent, reliable data protection.

Benefits:

- Enhanced data integrity: Restore from a more recent point in time, reducing data loss in the event of an incident.
- Transparent billing: A clear, predictable cost model based on actual stored data.
- Compliance-ready: Configure granular RPO and retention policies to help meet internal and external compliance standards.

Check out our documentation to learn more, and head over to the DigitalOcean console to enable backups for your Droplets or GPU Droplets.

Spaces cold storage for infrequently accessed data

The rapid growth of AI has led digital-native enterprises to store vast quantities of data, much of which is rarely accessed. DigitalOcean’s Spaces cold storage is now generally available for storing such infrequently accessed objects at $0.007/GiB per month. This price includes retrieval of all cold data stored in a bucket up to once per month at no additional cost; retrievals beyond that are charged at $0.01 per GiB. This new cold storage bucket type provides a low-cost, S3-compatible solution for petabyte-scale datasets where data is accessed infrequently, must be retained for at least 30 days, and must be retrievable instantly. Spaces cold storage offers 99.5% monthly uptime, as defined in its service level agreement (SLA).
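To put the 99.5% monthly uptime figure in concrete terms, the sketch below converts an uptime percentage into the downtime it permits per month. It assumes a 30-day month and simple minute counting; the SLA itself defines how downtime is measured and credited, so treat this as arithmetic rather than a reading of the agreement. `allowed_downtime_minutes` is a hypothetical helper.

```python
def allowed_downtime_minutes(uptime_pct: float, days_in_month: int = 30) -> float:
    """Minutes of downtime per month that still meet an uptime percentage.

    Back-of-envelope only: assumes downtime is counted in whole-service
    minutes over a month of `days_in_month` days.
    """
    minutes_in_month = days_in_month * 24 * 60
    return round(minutes_in_month * (1 - uptime_pct / 100), 1)


print(allowed_downtime_minutes(99.5))  # 216.0
print(allowed_downtime_minutes(99.5, 31))
```

In a 30-day month, 0.5% of 43,200 minutes is 216 minutes, or about 3.6 hours.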

Use cases:

- Backups and disaster recovery: Store secondary copies of data that are rarely accessed but must be available instantly.
- Application logs and diagnostics: Keep data that occasionally must be retrieved for incident investigation, security events, or regulatory needs.
- Archives: Store user-generated content, scientific data, and older project files.
- AI/ML training and inference archives: Archive large, infrequently accessed datasets or model checkpoints that can be retrieved on demand.

Benefits:

- Cost-effective scaling: Store petabytes of data at a fraction of the cost of standard storage tiers.
- Predictable pricing: Our pricing includes one retrieval per month, up to your average stored volume, at no additional cost, plus transparent per-GiB pricing for additional retrievals, so you avoid the high, unpredictable fees charged by some other providers.
- Instant retrieval: Access your data within seconds, even when it’s stored in a cold tier.
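Putting the two quoted rates together, a month's bill can be estimated from the average stored volume and the volume retrieved. This sketch assumes the free retrieval allowance equals the month's average stored volume and that anything beyond it is billed per GiB retrieved; it models only the two rates quoted in this post, and `cold_storage_bill` is a hypothetical helper.

```python
STORAGE_RATE = 0.007  # USD per GiB-month stored (quoted above)
OVERAGE_RATE = 0.01   # USD per GiB retrieved beyond the free allowance (quoted above)


def cold_storage_bill(avg_stored_gib: float, retrieved_gib: float) -> float:
    """Estimate one month's Spaces cold storage charges.

    Assumption: the free retrieval allowance equals the month's average
    stored volume, and retrieval beyond it is billed per GiB.
    """
    if avg_stored_gib < 0 or retrieved_gib < 0:
        raise ValueError("volumes must be non-negative")
    storage_cost = avg_stored_gib * STORAGE_RATE
    # Only retrieval exceeding the allowance incurs an overage charge.
    overage_gib = max(0.0, retrieved_gib - avg_stored_gib)
    return round(storage_cost + overage_gib * OVERAGE_RATE, 2)


# 10 TiB stored: one full read-back is covered; reading back 1.5x adds overage.
print(cold_storage_bill(10240, 10240))
print(cold_storage_bill(10240, 15360))
```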

Visit our documentation to learn more, and head over to the DigitalOcean console to set up Spaces cold storage.

Network file storage (NFS) for high-performance AI workloads

Data-intensive applications, particularly in AI and machine learning, require shared, high-performance file storage that is easy to provision and manage. Our Network file storage service is now generally available in our ATL1 and NYC2 data centers. This fully managed, high-performance solution is specifically designed to meet the demands of AI/ML startups and data-centric businesses by enabling concurrent shared dataset access for multi-node workloads.

It offers key functionality including share provisioning and support for NFSv3 and NFSv4 with POSIX compliance. It operates within your Virtual Private Cloud (VPC), allowing a single share to be mounted across multiple GPU and CPU Droplets, and is optimized for high throughput and low latency, making it well suited to AI workloads.
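Because a share is exported over NFS inside the VPC, each Droplet that needs it can mount it like any other NFS export. The sketch below builds a standard Linux `/etc/fstab` entry for either protocol version. The server IP, export path, mount point, and the `nfs_fstab_entry` helper are all hypothetical; the product documentation is the authority on the actual address and any recommended mount options.

```python
def nfs_fstab_entry(server_ip: str, export_path: str, mount_point: str,
                    nfs_version: int = 4) -> str:
    """Build an /etc/fstab line for mounting a shared NFS export.

    All arguments are hypothetical placeholders; use the address and
    export path shown for your share.
    """
    if nfs_version == 4:
        fstype, options = "nfs4", "defaults,_netdev"
    elif nfs_version == 3:
        # Generic Linux "nfs" type, pinned to protocol version 3.
        fstype, options = "nfs", "defaults,_netdev,vers=3"
    else:
        raise ValueError("nfs_version must be 3 or 4")
    # _netdev defers the mount until networking is up.
    # Fields: device, mount point, type, options, dump, fsck pass.
    return f"{server_ip}:{export_path} {mount_point} {fstype} {options} 0 0"


# The same line can be added on every Droplet that should see the share.
print(nfs_fstab_entry("10.124.0.5", "/example-share", "/mnt/shared"))
```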

The service also provides snapshots for point-in-time restores and offers allocation-based pricing, with discounts for GPU-committed customers. Provisioning is simple, and unlike some competitors that start at 1 TB+ increments with high minimums and complex pricing, our solution offers a more cost-effective entry point, with increments as small as 50 GiB.
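With allocation-based pricing, what you pay tracks the size you provision, so the increment size determines how much unused headroom you buy. The sketch compares rounding a requirement up to 50 GiB steps with a 1,024 GiB step; it assumes the smallest share is also 50 GiB (confirm this in the documentation) and does not model per-GiB prices. `provisioned_size_gib` is a hypothetical helper.

```python
import math


def provisioned_size_gib(required_gib: float, increment_gib: int = 50) -> int:
    """Round a capacity requirement up to the next allocation increment."""
    if required_gib <= 0:
        raise ValueError("required_gib must be positive")
    return math.ceil(required_gib / increment_gib) * increment_gib


need = 120  # GiB of training data (hypothetical)
print(provisioned_size_gib(need))        # 150
print(provisioned_size_gib(need, 1024))  # 1024
```

For a 120 GiB dataset, 50 GiB steps leave 30 GiB of headroom, while a 1,024 GiB step would leave over 900 GiB unused.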

Use cases:

- AI: A data science team can use a single NFS share to manage and preprocess large datasets for model training across a cluster of GPU Droplets, shortening training times.
- Media and content: A video production agency can use shared storage to allow multiple designers to work on the same project files without having to move data.

Benefits:

- Simplified operations: Eliminate the overhead of self-managing a shared file system.
- Performance optimized: Get high throughput and low latency tailored for AI/ML workloads.
- Cost-effective scaling: Start small with 50 GiB increments and scale more affordably as your data grows.

To learn more about Network file storage, visit the product documentation page or access it directly in the DigitalOcean console. You can also attend the webinar to see a live demo and get your questions answered by experts.

With these new capabilities, we are providing more robust, flexible, and cost-effective infrastructure to help you scale your business while keeping costs under control.

Ready to get started?

- Try these features by heading to the DigitalOcean console.
- Learn more by visiting our product documentation and regional availability pages.
- Get expert guidance for free to strengthen your cloud architecture, optimize costs, scale your infrastructure, and improve backup and disaster recovery.

About the author(s)

Building Products @ DigitalOcean
