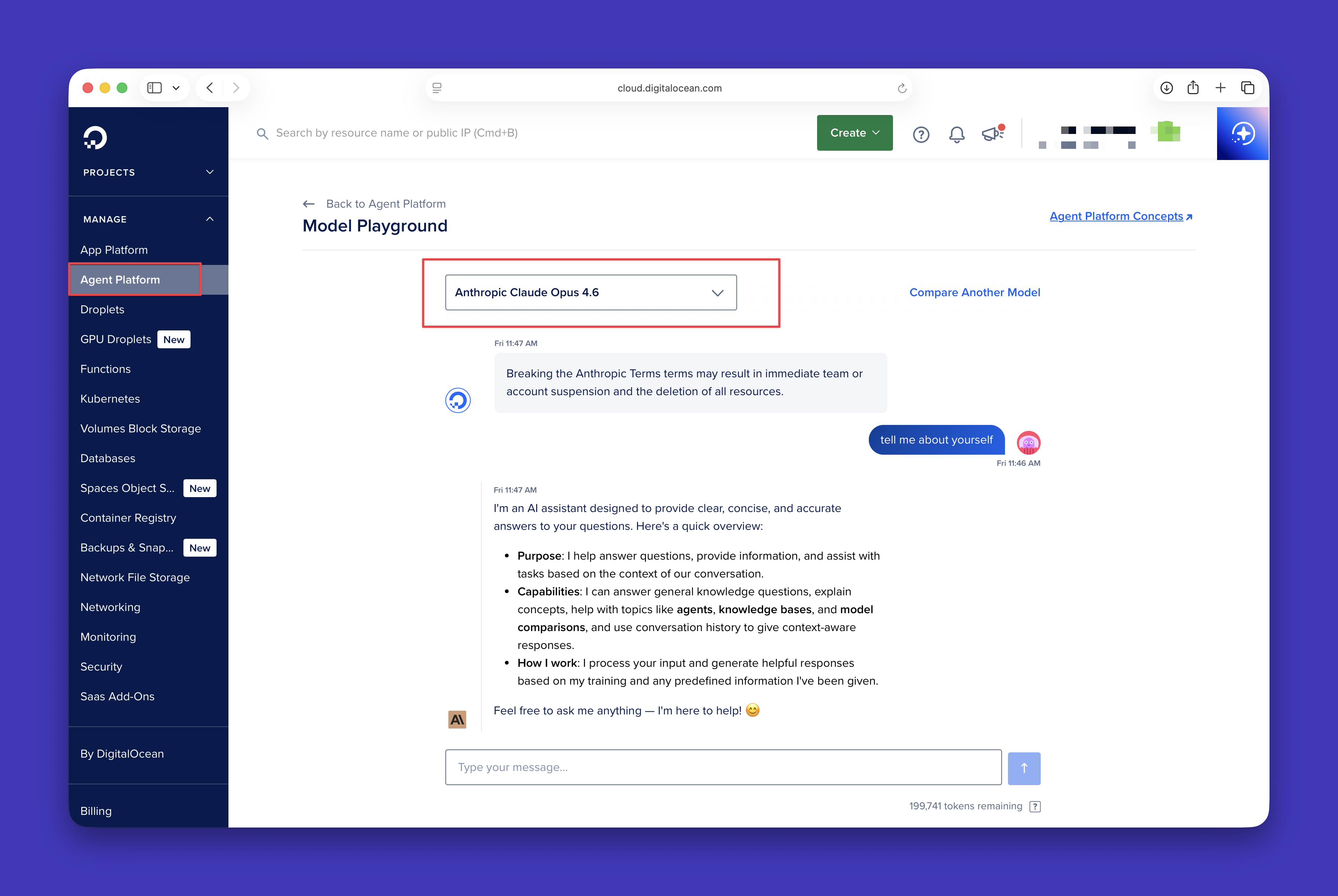

Now Available: Anthropic Claude Opus 4.6 on DigitalOcean’s Agentic Inference Cloud

By DigitalOcean
