Technical Writer

Introduction

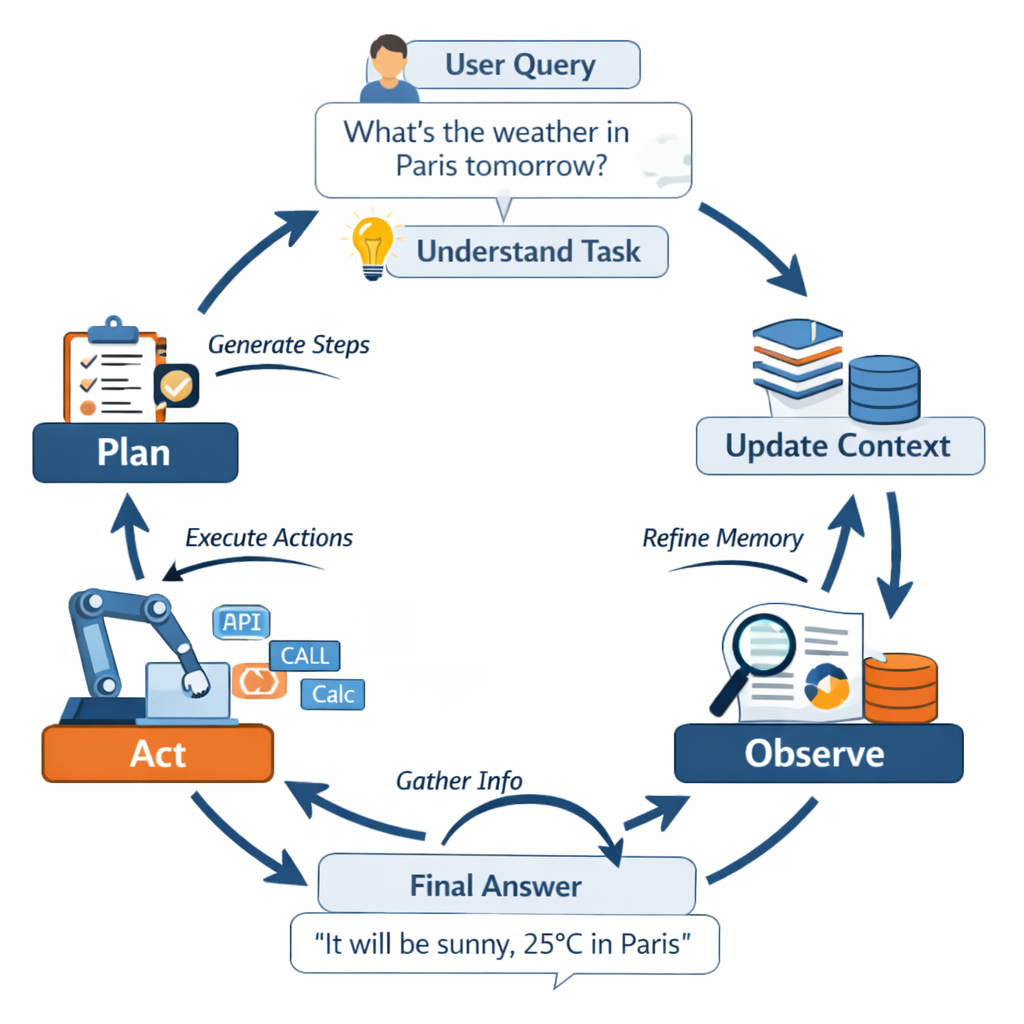

AI agents are becoming more powerful and widely used, from chatbots and research assistants to automated workflows and customer support systems. However, many AI systems still struggle to give consistent, accurate, and useful responses. One major reason is poor handling of context.

Context engineering is the practice of carefully designing, organizing, and managing everything an AI model sees before it responds. This includes system prompts, user instructions, memory, tools, retrieved data, and conversation history. When done well, context engineering helps AI agents behave more intelligently, reliably, and efficiently.

This article explains context engineering in simple terms and shows how you can use it to build better AI systems.

Key Takeaways

- Context engineering is more than writing good prompts: It involves managing system instructions, memory, tools, retrieved data, and conversation history together. When these elements are structured properly, AI agents can understand tasks better and give more consistent results.

- Too much information can be as harmful as too little: Overloading an AI with irrelevant or repeated content makes it harder for the model to focus. Clean, focused context improves accuracy and reduces confusion.

- Well-designed system prompts are the foundation of reliable agents: A clear system prompt defines the role, tone, and boundaries of the agent. This helps prevent unexpected behavior and keeps responses aligned with your goals.

- Retrieval and ranking strategies are essential for scalable systems: Instead of stuffing all data into prompts, good systems retrieve only the most relevant information. Reranking ensures the best content reaches the model first.

- Effective agents balance automation and control: While automation reduces manual work, strong workflows, state machines, and fallback rules ensure the system stays stable and trustworthy.

What Is Context Engineering?

AI agents often struggle because they do not fully understand what matters in a given situation. They may receive unclear instructions, too much irrelevant information, or poorly structured inputs. As a result, they may give generic answers, misunderstand tasks, or behave inconsistently.

Another common issue is memory overload. When too many past messages and documents are included, important details get lost. This makes it harder for the agent to reason properly and deliver high-quality outputs. These problems usually come from weak context design rather than weak models.

Context engineering is the process of designing, selecting, and organizing all information that an AI model receives before generating a response.

This includes:

- System prompts

- User instructions

- Conversation history

- Retrieved documents

- Tool outputs

- Memory data

The goal is to give the AI exactly what it needs, no more and no less, to complete its task effectively.

Prompt Engineering to Context Engineering

In the early days of using large language models, most developers focused mainly on prompt engineering. This meant carefully writing instructions so that the model would give better answers. People experimented with wording, formatting, examples, and special phrases to guide the model’s behavior. A well-written prompt could often turn a weak response into a strong one.

Prompt engineering works well for simple and short tasks, such as summarizing a paragraph, answering a question, or generating a short piece of text. In these cases, all the required information fits into one prompt, and the model does not need long-term memory or external tools.

However, as AI systems became more advanced, developers started building multi-step agents that could search, analyze, plan, and act. These systems needed much more than a single instruction. They had to remember previous conversations, use external tools, follow long-term goals, and handle complex workflows. At this point, prompt engineering alone was no longer enough.

This is where context engineering becomes important.

Context engineering treats the prompt as only one part of a larger system. Instead of focusing only on how instructions are written, it focuses on everything that the model sees before generating a response. This includes system messages, developer rules, user input, memory, retrieved documents, tool outputs, and conversation history.

In a context-engineered system, information is organized into clear layers. The system prompt defines the role and behavior of the agent. The user prompt describes the current task. Retrieved data provides external knowledge. Memory stores important past interactions. Tool outputs provide real-time information. All of these pieces are carefully arranged so that the model can understand their purpose.

Another key difference is that prompt engineering is often static, while context engineering is dynamic. A prompt is usually written once and reused. Context, on the other hand, changes continuously based on the conversation, available data, and agent actions. The system decides what to include and what to remove at every step.

For example, in a simple prompt-based system, you might write:

“Summarize this document in simple language.”

In a context-engineered system, the model might receive:

- A system rule about tone and accuracy

- The user’s task

- A summarized version of previous conversations

- The most relevant parts of the document

- Guidelines on output format

All of this together forms the context.
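As a rough illustration of how these layers could be combined, here is a minimal Python sketch. The `ContextLayer` class, the `build_context` function, and the section labels are hypothetical names chosen for this example; they are not part of any particular framework.

```python
from dataclasses import dataclass


@dataclass
class ContextLayer:
    """One labeled piece of context (hypothetical structure for illustration)."""
    label: str
    content: str


def build_context(layers: list[ContextLayer]) -> str:
    """Join non-empty layers into one prompt string with labeled sections."""
    sections = []
    for layer in layers:
        text = layer.content.strip()
        if text:  # skip empty layers so they add no noise
            sections.append(f"## {layer.label}\n{text}")
    return "\n\n".join(sections)


layers = [
    ContextLayer("System rules", "Use plain language. Do not invent facts."),
    ContextLayer("Task", "Summarize this document in simple language."),
    ContextLayer("Conversation summary", ""),  # nothing to carry over yet
    ContextLayer("Relevant excerpts", "Context engineering manages what the model sees."),
    ContextLayer("Output format", "Three bullet points."),
]
prompt = build_context(layers)
print(prompt)
```

Labeling each section is one simple way to help the model tell rules, task, and reference material apart. Real systems may use separate message roles instead of a single string.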

Context engineering also improves reliability and scalability. With only prompt engineering, small changes in wording can cause large changes in behavior. This makes systems fragile. Context engineering reduces this risk by adding structure, validation rules, and consistent formatting. As a result, agents behave more predictably across different situations.

In addition, context engineering supports long-term reasoning. Agents can track goals, remember user preferences, and build knowledge over time. This is difficult to achieve with standalone prompts. In simple terms, prompt engineering teaches the AI what to say, while context engineering teaches the AI how to think within a system. Both are important, but for modern AI agents, context engineering is essential. By moving from isolated prompts to well-designed context systems, developers can build agents that are more intelligent, stable, and useful in real-world applications.

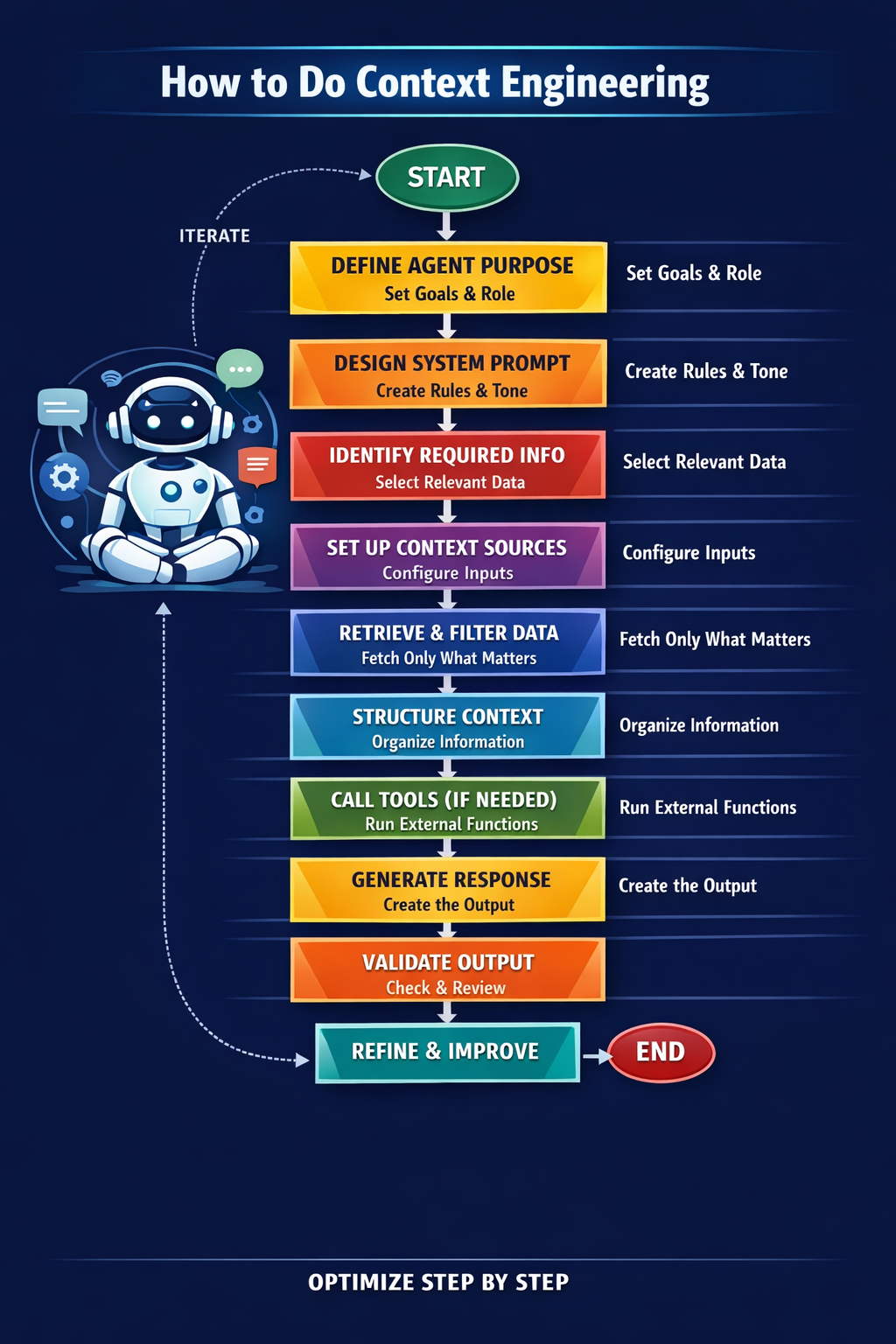

How to Do Effective Context Engineering

Context engineering is not a one-time activity. It is a continuous process of designing, testing, improving, and organizing everything that an AI agent sees before responding. The goal is to give the model the right information, in the right format, at the right time.

Instead of randomly adding instructions and documents, good context engineering follows a structured workflow.

Let’s break it down step by step. Before jumping into agent creation, create a plan and be clear about the workflow that you are trying to build.

Define the Agent’s Purpose

The first step is to decide clearly what your AI agent is supposed to do and what problem it is trying to solve. You should be able to answer questions such as:

- Is it a chatbot, researcher, assistant, or automation tool?

- Should it be formal, friendly, or technical?

- What problems should it solve?

- What should it never do?

This step shapes the entire system. Without a clear purpose, the agent will behave inconsistently.

Example: “Act as a technical assistant who explains AI concepts in simple language.”

This becomes the foundation of your system prompt.

Design the System Prompt

The system prompt controls the agent’s personality, tone, and boundaries. It is the most important layer of context.

A good system prompt includes:

- Role definition

- Output style

- Safety rules

- Formatting rules

- Priorities

It should be short, direct, and stable. Bad system prompts are vague and overly long. Good system prompts are clear and focused.
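One way to keep a system prompt short and stable is to store its parts as structured data and render the text from it. The sketch below assumes a hypothetical `SystemPromptSpec` class whose fields mirror the checklist above. The field names and the rendered layout are illustrative choices, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class SystemPromptSpec:
    """Hypothetical container for the parts of a system prompt."""
    role: str
    output_style: str
    safety_rules: list[str] = field(default_factory=list)
    formatting_rules: list[str] = field(default_factory=list)
    priorities: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Render the spec as plain text, skipping empty sections."""
        lines = [f"Role: {self.role}", f"Style: {self.output_style}"]
        for title, items in (
            ("Safety rules", self.safety_rules),
            ("Formatting rules", self.formatting_rules),
            ("Priorities", self.priorities),
        ):
            if items:
                lines.append(f"{title}:")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


spec = SystemPromptSpec(
    role="Technical assistant who explains AI concepts in simple language.",
    output_style="Friendly and concise.",
    safety_rules=["Say so when you are unsure instead of guessing."],
    formatting_rules=["Use short paragraphs."],
    priorities=["Accuracy first, then brevity."],
)
system_prompt = spec.render()
print(system_prompt)
```

Keeping the prompt as data makes it easier to review changes and to test them like any other configuration.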

Identify Required Information

Next, you decide what information the agent needs to perform well.

This may include:

- User instructions

- Past conversations

- Knowledge base content

- Company documents

- FAQs

- User preferences

- External data

Not all information should be included at once. Only useful and relevant data should be selected. This step prevents context overload.

Set Up Context Sources

Now you organize where the context will come from.

Common sources are:

- User input

- Memory system

- Vector database (RAG)

- Tool outputs

- Logs and history

- Configuration files

Each source should have a clear purpose.

For example:

- Memory → stores preferences

- RAG → stores knowledge

- Tools → fetch live data

This separation improves clarity.
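The separation of sources can be sketched as a small registry in which each source is a function with one job. Everything here is a stand-in: the dictionaries replace a real memory store and vector database, the sample texts are made up, and `tool_source` returns a fixed string where a real system would call a live API.

```python
from typing import Callable

# Hypothetical in-memory stand-ins for real backends (sample data is made up).
USER_PREFERENCES = {"alice": "Prefers short answers."}
KNOWLEDGE_BASE = {"refunds": "Refunds are processed within 5 days."}


def memory_source(user_id: str, query: str) -> str:
    """Memory: stores preferences."""
    return USER_PREFERENCES.get(user_id, "")


def knowledge_source(user_id: str, query: str) -> str:
    """RAG: stores knowledge. A naive topic match stands in for vector search."""
    hits = [text for topic, text in KNOWLEDGE_BASE.items() if topic in query.lower()]
    return "\n".join(hits)


def tool_source(user_id: str, query: str) -> str:
    """Tools: fetch live data. Placeholder for a real API call."""
    return "Server status: OK"


SOURCES: dict[str, Callable[[str, str], str]] = {
    "memory": memory_source,
    "knowledge": knowledge_source,
    "tools": tool_source,
}


def gather(user_id: str, query: str) -> dict[str, str]:
    """Ask every source, then keep only the ones that returned something."""
    results = {name: fetch(user_id, query) for name, fetch in SOURCES.items()}
    return {name: text for name, text in results.items() if text}


print(gather("alice", "How do refunds work?"))
```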

Retrieve and Filter Context

Before sending data to the model, the system must filter and rank it.

This step answers:

- Which documents are relevant?

- Which memories matter now?

- Which messages can be removed?

Techniques used here include:

- Similarity search

- Keyword filtering

- Reranking

- Summarization

Only high-value information is passed forward.
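The filter-and-rank step can be sketched with the standard library alone. This example scores documents with a bag-of-words cosine similarity, which is a simplified stand-in for the embedding-based similarity search a vector database would perform. The `retrieve` function, the `min_score` threshold, and the sample documents are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], top_k: int = 2, min_score: float = 0.1) -> list[str]:
    """Score every document, drop weak matches, and return the best top_k."""
    q = tokenize(query)
    scored = [(cosine(q, tokenize(d)), d) for d in docs]
    kept = [(s, d) for s, d in scored if s >= min_score]  # filter
    kept.sort(key=lambda pair: pair[0], reverse=True)     # rank
    return [d for _, d in kept[:top_k]]


docs = [
    "Reset your password from the account settings page.",
    "Our office is closed on public holidays.",
    "Password rules: at least 12 characters.",
]
results = retrieve("how do I reset my password", docs)
print(results)
```

Here the unrelated document about holidays scores zero and is dropped, and the two password documents are returned with the closest match first.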

Execute Tools (If Needed)

If the task requires external information, the agent may call tools.

Examples:

- Search engines

- Databases

- Calculators

- APIs

Tool results should be:

- Clean

- Short

- Relevant

- Structured

Long raw outputs should be summarized before adding to the context.
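As a minimal sketch of cleaning a tool result, the function below keeps only selected fields from a JSON response and caps its length. Note that it truncates rather than summarizes; a real summarization step would usually need another model call. The function name, the field names, and the sample payload are hypothetical.

```python
import json


def compact_tool_result(raw_json: str, keep_fields: list[str], max_chars: int = 200) -> str:
    """Keep only selected fields from a JSON tool result and cap the length."""
    try:
        data = json.loads(raw_json)
    except json.JSONDecodeError:
        # Report failures briefly instead of pasting raw error pages into the context.
        return "tool_error: response was not valid JSON"
    if not isinstance(data, dict):
        return "tool_error: expected a JSON object"
    trimmed = {key: data[key] for key in keep_fields if key in data}
    text = json.dumps(trimmed, sort_keys=True)
    if len(text) > max_chars:
        # Hard cut; the result may no longer be valid JSON after this point.
        text = text[: max_chars - 3] + "..."
    return text


raw = '{"city": "Berlin", "temp_c": 21, "debug": {"trace": "abc"}, "humidity": 40}'
summary = compact_tool_result(raw, keep_fields=["city", "temp_c"])
print(summary)
```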

Validate and Monitor Output

After generating responses, you must evaluate them.

Check for:

- Incorrect facts

- Policy violations

- Hallucinations

- Tone mismatch

- Missing details

This feedback helps improve future context design. Monitoring is essential for production systems.
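Some of these checks can be automated with simple rules. The sketch below covers only missing details, banned phrases, and length. Detecting incorrect facts or hallucinations generally requires comparing against source data or human review, which this example does not attempt. The `validate_response` function and its parameters are hypothetical.

```python
def validate_response(
    response: str,
    required_terms: list[str],
    banned_terms: list[str],
    max_words: int = 150,
) -> list[str]:
    """Return a list of rule violations; an empty list means all checks passed."""
    issues = []
    lowered = response.lower()
    for term in required_terms:
        if term.lower() not in lowered:
            issues.append(f"missing detail: {term}")
    for term in banned_terms:
        if term.lower() in lowered:
            issues.append(f"policy violation: {term}")
    if len(response.split()) > max_words:
        issues.append("too long")
    return issues


answer = "Your refund was approved and is guaranteed to arrive tomorrow."
issues = validate_response(answer, required_terms=["refund"], banned_terms=["guaranteed"])
print(issues)
```

Logging these issues over time shows which parts of the context need attention.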

START → Define Agent Purpose → Design System Prompt → Identify Required Information → Set Up Context Sources → Retrieve & Filter Data → Structure Context → Call Tools (If Needed) → Generate Response → Validate Output → Refine & Improve → (Repeat Cycle)

A Few Key Points One Should Keep in Mind

Building an effective AI system is not only about choosing a powerful model. It is mainly about designing the system in a way that allows the model to understand tasks clearly, access the right information, and respond consistently. Here are a few key points one should consider to build a better AI system.

- Context Window: The context window is the maximum amount of text an AI model can process at once. If your input exceeds this limit, the request may be rejected or the excess content, often the oldest part, may be cut off, depending on the system. This makes it important to prioritize. Instead of adding everything, select only the most useful content. Summaries and structured data can help reduce space while preserving meaning.

- Tool Calls: Modern AI agents often use tools such as search engines, databases, calculators, or APIs. Tool calls allow agents to fetch real-time or specialized information. However, tool outputs also become part of the context. If they are too long or poorly formatted, they can confuse the model. Clean and summarized tool responses work best.

- If Too Much Is Stuffed: When too much content is added to the prompt, performance usually drops. The model may miss key instructions or focus on irrelevant details. This problem is called context bloat. It increases costs, slows responses, and reduces accuracy. Good context engineering avoids unnecessary repetition and trims unused data.

- Needle in a Haystack Problem: The “needle in a haystack” problem happens when important information is buried inside large amounts of text. Even powerful models can fail to locate critical details. To solve this, important points should be highlighted, summarized, or placed near the top. Ranking and filtering techniques also help reduce noise.

- Effective System Prompt: A good system prompt clearly defines the agent’s identity and behavior. It should explain what the agent is, what it can do, and what it must avoid. The tone, format, and priorities should be stated upfront. Simple and direct language works better than long and complex rules. It is also useful to update system prompts based on real usage and feedback.

- Taking Prompts Seriously: Many developers treat prompts as temporary text instead of core system components. This leads to rushed designs and poor testing. Prompts and context deserve the same attention as code. Well-written instructions can dramatically improve system performance without changing the model.

- Analyzing the Prompts: Prompt analysis involves checking how each part affects the output. You should test variations and observe changes in behavior. This helps identify weak instructions, unnecessary content, and missing constraints. Over time, this leads to more robust systems.

- Reranking Strategies: Reranking helps select the most useful retrieved documents. Instead of sending everything, the system sorts content by relevance. This ensures that the most important information appears first in the context, improving answer quality.
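The context window and context bloat points above can be illustrated with a small budgeting sketch. It always keeps the system prompt and then adds the newest messages that still fit. The word-count token estimate is a rough assumption; production systems would use the tokenizer of the model in question. The function names and the sample messages are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def fit_to_budget(system_prompt: str, messages: list[str], budget: int) -> list[str]:
    """Always keep the system prompt, then add the newest messages that fit."""
    remaining = budget - estimate_tokens(system_prompt)
    kept = []
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if cost > remaining:
            break  # stop at the first message that does not fit
        kept.append(message)
        remaining -= cost
    return [system_prompt] + list(reversed(kept))  # restore chronological order


history = [
    "My name is Sam.",
    "I like short answers.",
    "What is context engineering?",
]
context = fit_to_budget("You are a helpful assistant.", history, budget=14)
print(context)
```

With a budget of 14, the oldest message is dropped. In practice, dropped messages are often replaced by a short summary so that details such as the user's name are not lost.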

Context Challenges

Context engineering plays a central role in building reliable AI systems. However, it is easy to get a few steps wrong, and even small mistakes in context design can lead to large drops in performance. Understanding the challenges helps developers build more stable and trustworthy AI agents.

One of the biggest challenges in context engineering is working within limited context windows. Every AI model has a maximum amount of text it can process at one time. When conversations grow longer or when large documents are added, important instructions and knowledge may be pushed out. This causes the model to forget rules, lose track of goals, or misunderstand user requests. Deciding what information should stay and what should be removed requires careful prioritization and frequent adjustment.

Too many tool integrations add further complexity. Many context pipelines include outputs from external tools such as search engines, databases, and APIs. These outputs are often long, noisy, or poorly formatted. They may contain irrelevant details or technical errors. Including such raw data in the context can confuse the model. Converting tool outputs into clean, summarized, and structured text is difficult but necessary.

Another challenge is maintaining consistency across updates. AI systems evolve over time. Models are upgraded, prompts are changed, and new tools are added. Each change can affect how context behaves. Without proper testing, updates may break existing workflows.
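One possible way to catch such breakage is a small regression suite that is rerun after every change to a prompt, model, or tool. The sketch below assumes a hypothetical `run_regression` helper. `fake_agent` is a stand-in for a real model call, and the test cases are made up for illustration.

```python
from typing import Callable


def run_regression(generate: Callable[[str], str], cases: list[tuple[str, str]]) -> list[str]:
    """Return the inputs whose output no longer contains the expected phrase."""
    failures = []
    for user_input, expected_phrase in cases:
        output = generate(user_input)
        if expected_phrase.lower() not in output.lower():
            failures.append(user_input)
    return failures


def fake_agent(user_input: str) -> str:
    """Stand-in for a real model call so the example runs offline."""
    if "refund" in user_input.lower():
        return "Refunds go through the billing page."
    return "I am not sure."


cases = [
    ("How do I get a refund?", "billing page"),
    ("What is your name?", "assistant"),
]
failures = run_regression(fake_agent, cases)
print(failures)
```

Substring checks are crude, but even a handful of fixed cases can reveal when an update changes behavior that used to work.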

Finally, context engineering requires continuous maintenance. It is not a one-time setup, and sometimes a human in the loop is also required for improvement.

FAQs

What is the difference between prompt engineering and context engineering?

Prompt engineering focuses on creating clear instructions for the model to follow. Context engineering goes beyond that by managing everything the model sees, including memory, retrieved data, and tool outputs. It ensures the model has the right information at the right time.

Why do AI agents give inconsistent answers?

AI agents can produce inconsistent responses when prompts are unclear or when the context contains too much or conflicting information. If the input changes slightly or includes noise, the model may interpret it differently. Proper context management helps reduce this issue.

How much context should I provide?

You should include only the information that is directly relevant to the task. Adding too much context can confuse the model and reduce performance. Keeping the input clean and focused leads to better and more consistent outputs.

Is RAG always necessary?

RAG is useful when the model needs access to external or up-to-date information, especially private or domain-specific data. However, for simple or general tasks, it may add unnecessary complexity. It should be used only when there is a clear need.

Can context engineering replace better models?

Context engineering cannot replace strong models, but it can significantly improve their performance. Even smaller or cheaper models can perform well if given the right context. It is often a cost-effective way to enhance results.

What are the major challenges for context engineering?

Context engineering faces challenges like limited context windows and varying data quality. Tool outputs can be noisy or inconsistent, which affects performance. Keeping the context clean, relevant, and updated requires continuous effort, especially as systems scale.

Conclusion

Context engineering is the foundation of reliable and effective AI agents. It goes beyond writing good prompts and focuses on managing system rules, memory, tools, and retrieved data in a structured way.

By keeping context clear, focused, and relevant, developers can build agents that are more accurate, scalable, and trustworthy.

Continuous maintenance remains a long-term challenge. AI systems are not “build once and forget” products. Models change, user needs evolve, and data grows over time. Prompts, retrieval methods, workflows, and memory rules must be updated regularly. Without ongoing improvement, even well-designed systems gradually lose effectiveness.

As AI systems grow more complex, strong context engineering will become even more important for long-term success.


About the author

With a strong background in data science and over six years of experience, I am passionate about creating in-depth content on technologies. Currently focused on AI, machine learning, and GPU computing, working on topics ranging from deep learning frameworks to optimizing GPU-based workloads.
