- Log in to:

- Community

- DigitalOcean

- Sign up for:

- Community

- DigitalOcean

Technical Evangelist // AI Arcanist

We have extensively covered the popularization of open-source large language modeling on this blog, and for good reason. Over the past few years, ever since the release of [GPT 3.5 https://platform.openai.com/docs/models/gpt-3-5) in our opinion, LLMs have become one of the most powerful, capable, and useful results of the AI revolution. This is because their capabilities most closely mimic those of an actual human, making their interactivity and integration potential second to none in the world of AI.

But, LLMs have one glaring problem: they only operate in a single mode. Specifically, this means they only take in language and output language. While language/text data is versatile in ways that other data types can not be, this limitation prevents LLMs from capitalizing on all the available data sources for training.

From tasks like OCR to video understanding, this capability to take in image data has far reaching implications for AI tasks. In the past few months, we have looked at vision-language LLMs closely, trying to see how this newer domain of LLM development will go. Models like BAGEL and OmniGen2 show awesome capabilities for vision understanding tasks, for example, but were not as highly performant as proper LLMs. The problem really is: how do we create a vision language model that is equally robust across both domains?

In this article, we will look at the latest model seeking to tackle this problem with regards to vision, GLM 4.1V. The GLM 4.1 Vision Language Thinking model series from KEG & THUDM/KEG is a new family of LLMs available to the open-source community that excel at reasoning on both language and image related tasks. In this article, we will discuss the rationale behind the development of the model family, look at the architecture that makes the model possible, and demonstrate how to run the model on a AMD powered MI300X GPU Droplet.

Key Takeaways

- GLM 4.1V is a SOTA vision language model capable of high quality image-text-to-text transcription

- GLM 4.1V researchers found that multi-domain reinforcement learning leads to robust cross-domain generalization

- Running GLM 4.1V on a DigitalOcean Gradient GPU Droplet takes only the time to download the model

GLM 4.1V Breakdown

GLM 4.1V is the latest in a long series of projects in the GLM series from KEG/THUDM. Starting with the original GLM, they have successfully iterated on their design and data sources to create more and more robust versions of their flagship LLM. GLM 4.1V Base and GLM 4.1V Thinking are their next tangible step forward, extending and expanding the capabilities of their models to cover new modalities and reasoning tasks.

In order to successfully create GLM 4.1V, the authors made several notable contributions to reinforcement learning for LLMs:

- Multi-domain reinforcement learning demonstrates robust cross-domain generalization and mutual facilitation. They determined that training on one domain boosts performance in others, and joint training across domains yields even greater improvements in each.

- Dynamically selecting the most informative rollout problems is essential for both efficiency and performance. Therefore, they propose strategies including Reinforcement Learning with Curriculum Sampling (RLCS) and dynamic sampling expansion via ratio-based Exponential Moving Average (EMA).

- A robust and precise reward system is critical for multi-domain RL. When training a unified VLM across diverse skills, even a slight weakness in the reward signal for one capability can collapse the entire process (Source)

Cumulatively, these contributions lead to GLM 4.1V Base and GLM 4.1V Thinking. Let’s take a deeper look at what goes on under the model’s hood.

GLM 4.1V Model Architecture & Pipeline

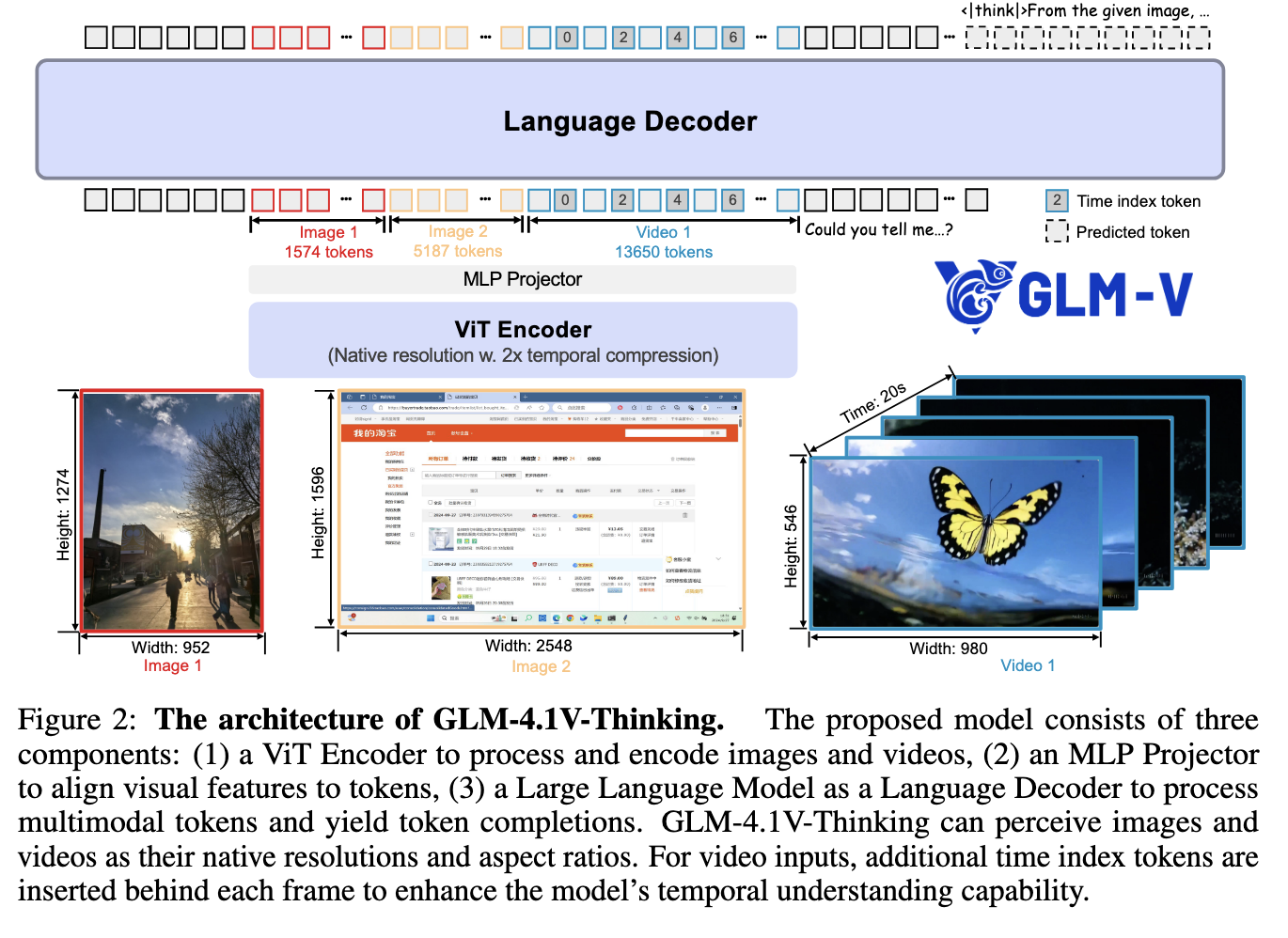

GLM-4.1V-Thinking is composed of three core components: a vision encoder, an MLP adapter, and a large language model (LLM) as the decoder. They use AIMv2-Huge as the vision encoder, and GLM 4.1 as the LLM. Within the vision encoder, they adopt a strategy similar to Qwen2-VL where they replace the original 2D convolutions with 3D convolutions. This enables temporal downsampling by a factor of two for video inputs, thereby improving model efficiency. For single-image inputs, the image is duplicated to maintain consistency.

To support arbitrary image resolutions and aspect ratios, they introduce two adaptations. First, they integrated 2D-RoPE, Rotary Position Embedding for Vision Transformer, enabling the model to effectively process images with extreme aspect ratios (over 200:1) or high resolutions (beyond 4K). Second, to preserve the foundational capabilities of the pre-trained ViT, they opted to retain its original learnable absolute position embedding.During training, these embeddings are dynamically adapted to variable-resolution inputs via bicubic interpolation. Source

Running GLM 4.1V on GPU Droplet

Running GLM 4.1V on a GPU Droplet is simple, and it’s easy to use either an AMD or NVIDIA powered GPU Droplet. Consider which machine type may be better for you when choosing your machine for this project, but we recommend at least an NVIDIA H100 or AMD MI300X. These will provide sufficient GPU memory to load and run the models quickly. An A6000 may also be sufficient, but will be much slower.

Setting up the environment

Follow the instructions laid out in our environment set-up tutorial to get your machine env started. We will need to follow each step, since we are going to use a Jupyter Lab environment for this demo. Once you have finished setting up your Python environment for the demo, launch Jupyter Lab with the following command:

pip3 install jupyter

jupyter lab --allow-root

Use the outputted link with your local VS Code or Cursor application’s simple browser feature to access the Notebook in your browser.

Using the Model for Vision-Language Tasks

Now that our environment is set up, create a new IPython Notebook using the Jupyter Lab window. Once that’s done, open it and click into the first code cell. Paste the following Python code into the cell.

from transformers import AutoProcessor, Glm4vForConditionalGeneration

import torch

MODEL_PATH = "THUDM/GLM-4.1V-9B-Thinking"

messages = [

{

"role": "user",

"content": [

{

"type": "image",

"url": "https://upload.wikimedia.org/wikipedia/commons/f/fa/Grayscale_8bits_palette_sample_image.png"

},

{

"type": "text",

"text": "describe this image"

}

],

}

]

processor = AutoProcessor.from_pretrained(MODEL_PATH, use_fast=True)

model = Glm4vForConditionalGeneration.from_pretrained(

pretrained_model_name_or_path=MODEL_PATH,

torch_dtype=torch.bfloat16,

device_map="auto",

)

inputs = processor.apply_chat_template(

messages,

tokenize=True,

add_generation_prompt=True,

return_dict=True,

return_tensors="pt"

).to(model.device)

generated_ids = model.generate(**inputs, max_new_tokens=8192)

output_text = processor.decode(generated_ids[0][inputs["input_ids"].shape[1]:], skip_special_tokens=False)

print(output_text)

What this does is, first instantiate and load in our model from HuggingFace. Then we take the input image URL and text into a formatted response, and enter them into the model. The model then returns the response which is decoded into plain text for us.

In our experience, GLM 4.1 is a stronger Vision-Language model than any open-source competition we have found so far. It excels at tasks like OCR, Object description, and image captioning. We encourage everyone to consider adding GLM 4.1 V to their deep learning pipeline, especially if you are working with image rich data.

Closing Thoughts

GLM 4.1V is an exceptional Vision Language model. Not only is it powerful, but it is very simple to get started using it on a DigitalOcean GPU Droplet. We encourage you to try this model out in your workflow, and consider it for tasks like OCR for RAG.

Thanks for learning with the DigitalOcean Community. Check out our offerings for compute, storage, networking, and managed databases.

About the author

Still looking for an answer?

This textbox defaults to using Markdown to format your answer.

You can type !ref in this text area to quickly search our full set of tutorials, documentation & marketplace offerings and insert the link!

Deploy on DigitalOcean

Click below to sign up for DigitalOcean's virtual machines, Databases, and AIML products.

Become a contributor for community

Get paid to write technical tutorials and select a tech-focused charity to receive a matching donation.

DigitalOcean Documentation

Full documentation for every DigitalOcean product.

Resources for startups and AI-native businesses

The Wave has everything you need to know about building a business, from raising funding to marketing your product.

Get our newsletter

Stay up to date by signing up for DigitalOcean’s Infrastructure as a Newsletter.

New accounts only. By submitting your email you agree to our Privacy Policy

The developer cloud

Scale up as you grow — whether you're running one virtual machine or ten thousand.

Get started for free

Sign up and get $200 in credit for your first 60 days with DigitalOcean.*

*This promotional offer applies to new accounts only.