By Rajesh Nair and Anish Singh Walia

Introduction

Technology is changing rapidly, and it is driving new patterns across industries and businesses. These changes push applications to move data between their layers in real time. Combining Kafka and MongoDB is one approach organizations are adopting to make their applications faster and more responsive.

Why does this combination make the perfect duo? It addresses a long-standing gap in integrated systems: streaming millions of events per second, along with having the capacity to handle complex querying, together with long-term storage.

This combination supports a wide range of workloads, including event-driven architectures for financial transaction processing, IoT sensor ingestion, real-time user activity tracking, and dynamic inventory management.

MongoDB and Kafka are the catalyst for immediate, reactive, and persistent data solutions that require real-time data processing with historical context.

Key takeaways

- Kafka handles high-throughput event streaming and acts as the central event backbone; MongoDB stores and queries data durably for real-time and historical use.

- Combining both gives you real-time data pipelines plus durable storage, so you can stream events and still run complex queries and analytics.

- Use producers to publish events to Kafka topics, consumers to process streams, and MongoDB collections to persist results.

- This pattern fits e-commerce order flows, AI agents, IoT ingestion, and any system that needs live events plus long-term data.

- For production, use Kafka’s idempotent producer, schema validation, and monitoring; pair with DigitalOcean Managed Kafka and Managed MongoDB for managed scaling and operations.

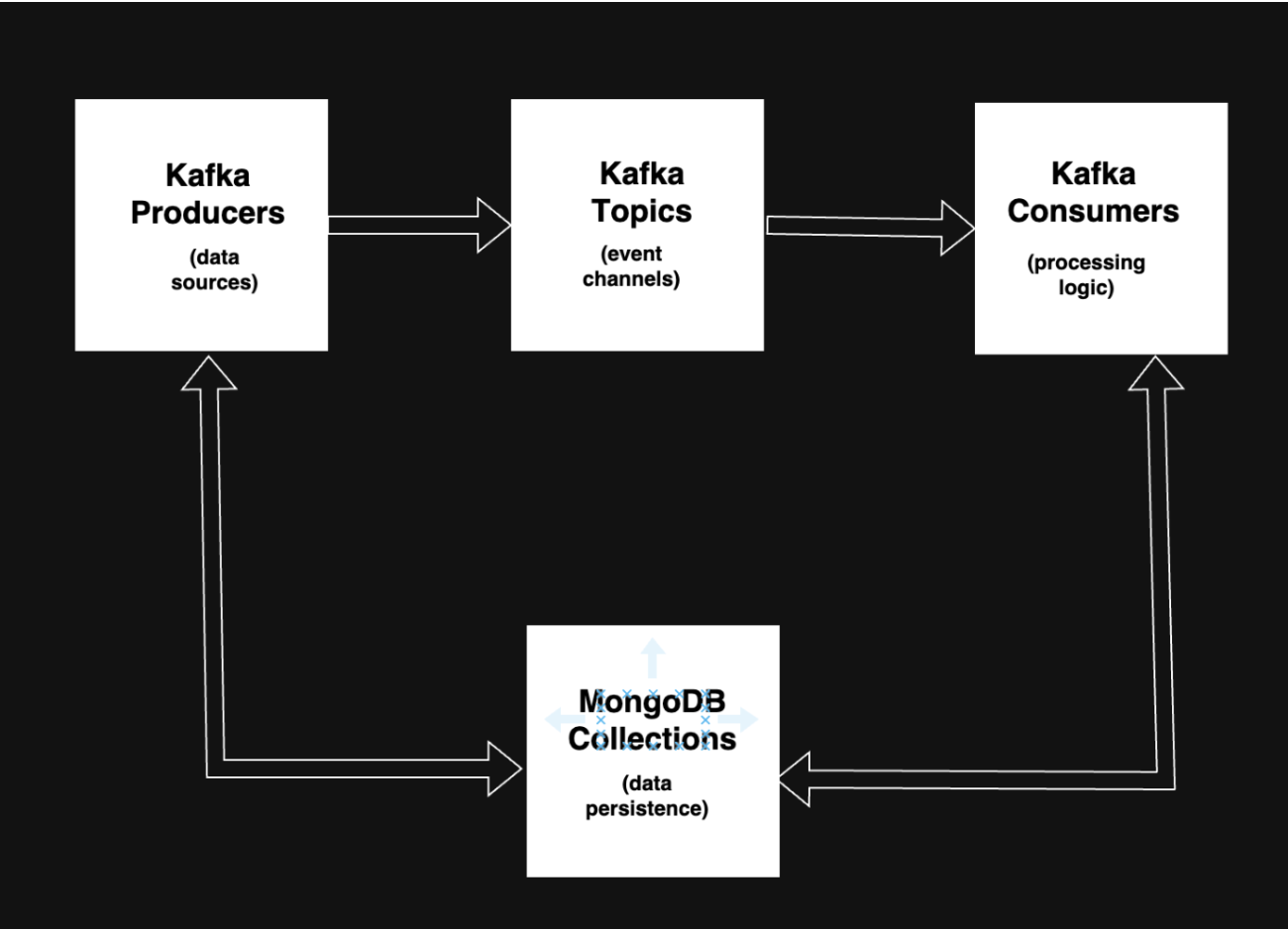

Architectural overview

The integrated architecture of Kafka and MongoDB exemplifies how modern systems achieve both real-time responsiveness and durable data persistence. At its core, Kafka functions as the central nervous system of the entire data ecosystem, capturing events as they occur, buffering them efficiently, and distributing them across different consumers that need to act upon this data in real time. This dynamic flow enables continuous streams of information to move through the pipeline without dropping events, so signals and insights are not lost in transit.

Each component plays an essential part in keeping the system alive, responsive, and consistent.

1. Kafka producers (data sources)

Producers are like the reporters in a busy newsroom. They gather live feeds of information (transactions, sensor readings, user interactions, and log events) and deliver them to Kafka. These producers can be applications, services, or devices that continuously publish data streams. Their job is simply to send information in its raw form to Kafka without worrying about who needs it or how it will be used next, ensuring a steady flow of updates into the system. For more on how Kafka works and how to produce events, see our Introduction to Kafka and How to Set Up a Kafka Producer to Source Data Through CLI.

2. Kafka topics (event channels)

Once produced, the data doesn’t go directly to a specific destination. Instead, it lands in Kafka topics, which function much like categorized folders or broadcast channels. Each topic groups similar types of events together: for example, one may hold payment events, another user activity, and another IoT telemetry data. This categorization allows multiple teams or systems to tune in to the events they care about, just as television channels cater to different audiences without interfering with one another.

3. Kafka consumers (processing logic)

Consumers are the analysts or decision-makers of this ecosystem. They subscribe to relevant Kafka topics and process the incoming streams in real time. Depending on the need, consumers may clean, transform, or enrich the data before acting on it or sending the processed results forward. You can think of them as chefs who take raw ingredients from the producers, use their own recipe (business logic), and prepare a finished dish that’s meaningful and ready to be served.

4. MongoDB collections (data persistence)

Finally, the processed results reach MongoDB, which plays the role of a digital archive or knowledge base. Within MongoDB, data is stored in collections, the equivalent of organized shelves that hold every piece of information produced and processed so far. These collections ensure durability and make it easy to retrieve historical data to analyze trends, generate reports, or offer users context-aware experiences. MongoDB’s flexible document model allows it to store both structured and semi-structured data, which complements Kafka’s free-flowing event streams. To work with MongoDB data in depth, see How To Perform CRUD Operations in MongoDB and How To Use the MongoDB Shell.

In practice, this architectural synergy allows businesses to harness the immediacy of streaming data while maintaining the reliability of durable storage. Following best practices, high-throughput communication between Kafka and MongoDB can be achieved using Kafka connectors with built-in fault tolerance, replication for both ingestion and storage layers, and thoughtful schema evolution strategies to ensure consistency across evolving data models. Together, Kafka and MongoDB establish an architecture that is reactive, resilient, and ready for the demanding data landscapes of the modern enterprise.
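To make the producer, topic, and consumer roles described above more concrete, here is a minimal in-memory sketch in plain Java. It is not Kafka: the `InMemoryTopic` class and its `publish` and `poll` methods are hypothetical names, there is a single partition, and nothing is durable. It only illustrates that a topic behaves like an append-only log and that each consumer group tracks its own position in it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy, single-partition stand-in for a topic: an append-only log plus per-group offsets. */
final class InMemoryTopic {
    private final List<String> log = new ArrayList<>();
    private final Map<String, Integer> committedOffsets = new HashMap<>();

    /** Producer side: append an event and return its offset in the log. */
    synchronized int publish(String event) {
        log.add(event);
        return log.size() - 1;
    }

    /** Consumer side: return events this group has not seen yet and advance its offset. */
    synchronized List<String> poll(String groupId) {
        int from = committedOffsets.getOrDefault(groupId, 0);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        committedOffsets.put(groupId, log.size());
        return batch;
    }

    public static void main(String[] args) {
        InMemoryTopic orderEvents = new InMemoryTopic();
        orderEvents.publish("{\"orderId\":\"1001\"}");
        orderEvents.publish("{\"orderId\":\"1002\"}");

        // Two independent consumer groups each receive every event.
        System.out.println("persistence group: " + orderEvents.poll("persistence"));
        System.out.println("analytics group:   " + orderEvents.poll("analytics"));
        // A group that is caught up gets an empty batch on its next poll.
        System.out.println("persistence again: " + orderEvents.poll("persistence"));
    }
}
```

Because offsets are kept per group, adding a new consumer group does not affect existing ones, which is the property that lets several teams read the same topic independently.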

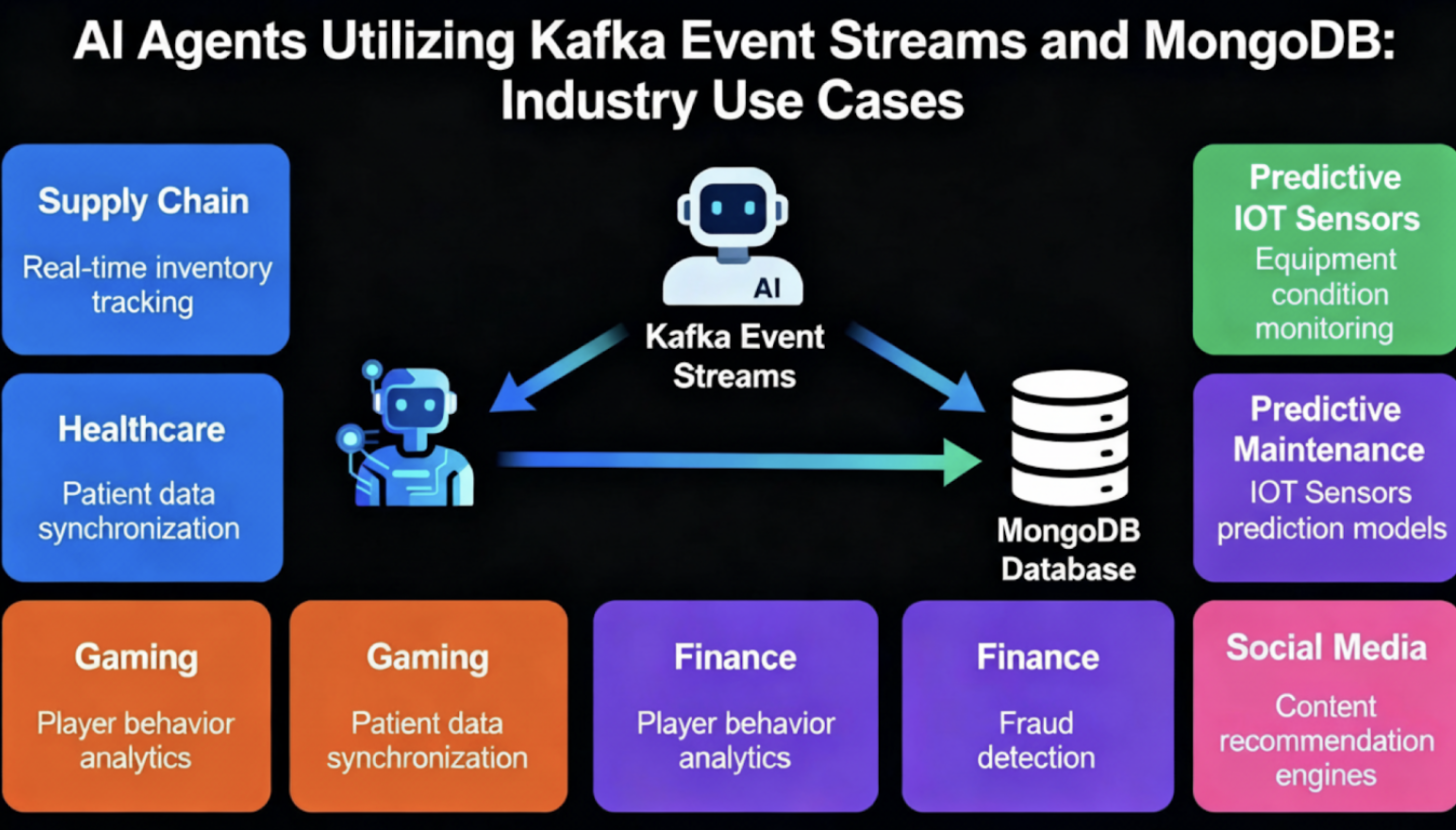

Key use cases of MongoDB & Apache Kafka - AI agents and beyond

The integration of Kafka and MongoDB expands what’s possible for modern, intelligent applications beyond merely processing static data. It creates a responsive digital ecosystem that comes alive with the constant flow of real-world events.

This combination powers AI agents by turning them into real-time decision engines that digest live event streams and persist state for continuous learning. AI agents can respond to user queries by using both live and historical data from MongoDB, which helps them excel in autonomous customer support.

When a customer sends a message, that event lands instantly in Kafka, where an AI agent picks it up, makes a contextual decision based on live and historical data from MongoDB, and responds within seconds. Every interaction and every decision is logged in MongoDB for both immediate context and for learning over time, which allows the agents to continuously improve.

There are also use cases for AI agents in recommendation systems that adapt instantly to evolving user behavior. Other use cases involve fraud detection systems analyzing transactional patterns on the fly and predictive maintenance applications that monitor IoT sensor data to preempt failures.

Beyond AI agents, the Kafka and MongoDB synergy unlocks diverse industry applications where immediate reaction to streaming data and complex historical data analysis is essential:

- Supply chain management: AI-driven event streams help coordinate logistics dynamically, rerouting shipments based on weather conditions and traffic disruptions while referencing past delivery data.

- Healthcare: Providers benefit from real-time patient monitoring and comprehensive longitudinal health record analysis for personalized care.

- Gaming platforms: Event streams are used to adjust gameplay and offers instantly, supported by persistent player data.

- Financial institutions: AI agents are deployed to trade with split-second timing, maintaining trade histories for compliance.

- Social media platforms: Streaming analytics is used for content moderation and personalized recommendations, tuned to both live trends and long-term user behavior.

In essence, while AI agents illustrate the pinnacle of Kafka and MongoDB’s power, the broader ecosystem includes scalable, reliable solutions for event-driven architectures across industries. This integration supports both split-second insights and in-depth retrospective analytics, enabling organizations to stay responsive today while building intelligence for tomorrow.

Let’s see a real-world example of a real-time pipeline using Kafka and MongoDB in the context of an e-commerce order event.

For this example, we will use Java as the coding language and a MongoDB Atlas cluster, with a Kafka broker running on DigitalOcean.

Prerequisites

• MongoDB cluster (cloud-based): A MongoDB Atlas cluster provides a fully managed, scalable, and secure database environment. You will need the connection string from your Atlas cluster for your Java application.

• A Kafka broker set up (DigitalOcean): A Kafka broker runs on a DigitalOcean Droplet, and ideally, it’s deployed via Docker containers for simplicity and ease of management.

• A Java Development Environment: Use a Java 11+ environment with build tools such as Maven or Gradle to manage dependencies.

• Necessary client libraries: The dependencies for Kafka and MongoDB Java drivers are org.apache.kafka:kafka-clients and org.mongodb:mongodb-driver-sync or mongodb-driver-reactivestreams.

Step-by-step installation and setup

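Note that this guide uses ZooKeeper for simplicity, but production systems should use Kafka’s KRaft mode (ZooKeeper-free), which has been production-ready since Kafka 3.3. ZooKeeper mode was removed entirely in Kafka 4.0, so the ZooKeeper-based commands in this guide need a Kafka image from an earlier release line.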

MongoDB Atlas setup

- Sign up for or log in to MongoDB Atlas.

- Create a free-tier cluster with your preferred cloud provider.

- Whitelist your IP to allow your Java app to access the cluster.

- Copy the connection string (URI) provided by MongoDB Atlas; replace <password> and <dbname> accordingly.
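Passwords that contain characters such as `@` or `:` need to be percent-encoded before they go into the connection string. The sketch below shows one way to do that with the JDK alone. The `AtlasUri` class, the example host, and the credentials are illustrative assumptions, not values that Atlas provides.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

final class AtlasUri {
    /** Percent-encodes a credential so characters such as '@' or ':' do not break the URI. */
    static String encode(String raw) {
        // URLEncoder produces form encoding, which writes spaces as '+'; use %20 instead.
        return URLEncoder.encode(raw, StandardCharsets.UTF_8).replace("+", "%20");
    }

    /** Assembles a mongodb+srv URI; the host passed in is a placeholder chosen by the caller. */
    static String build(String user, String password, String host, String dbName) {
        return "mongodb+srv://" + encode(user) + ":" + encode(password)
                + "@" + host + "/" + dbName + "?retryWrites=true&w=majority";
    }

    public static void main(String[] args) {
        // Hypothetical values; load real credentials from the environment, not source code.
        System.out.println(build("appUser", "p@ss:word", "cluster0.example.mongodb.net", "shop"));
    }
}
```

The resulting string can be supplied as the MongoDB URI in your application configuration.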

Kafka broker on DigitalOcean

- Create a DigitalOcean Droplet running Ubuntu or your preferred Linux distro.

- Install Docker, if not already installed:

    sudo apt update && sudo apt install docker.io

- Run Kafka and ZooKeeper containers on the Droplet:

    docker network create kafka-net
    docker run -d --name zookeeper --network kafka-net -p 2181:2181 zookeeper
    docker run -d --name kafka --network kafka-net -p 9092:9092 \
      -e KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 \
      -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://<your_droplet_ip>:9092 \
      -e KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1 confluentinc/cp-kafka:latest

- Create the order-events topic on the Kafka instance:

    docker exec -it kafka kafka-topics --create --topic order-events --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1

- Configure the DigitalOcean firewall to allow inbound traffic on ports 2181 and 9092.

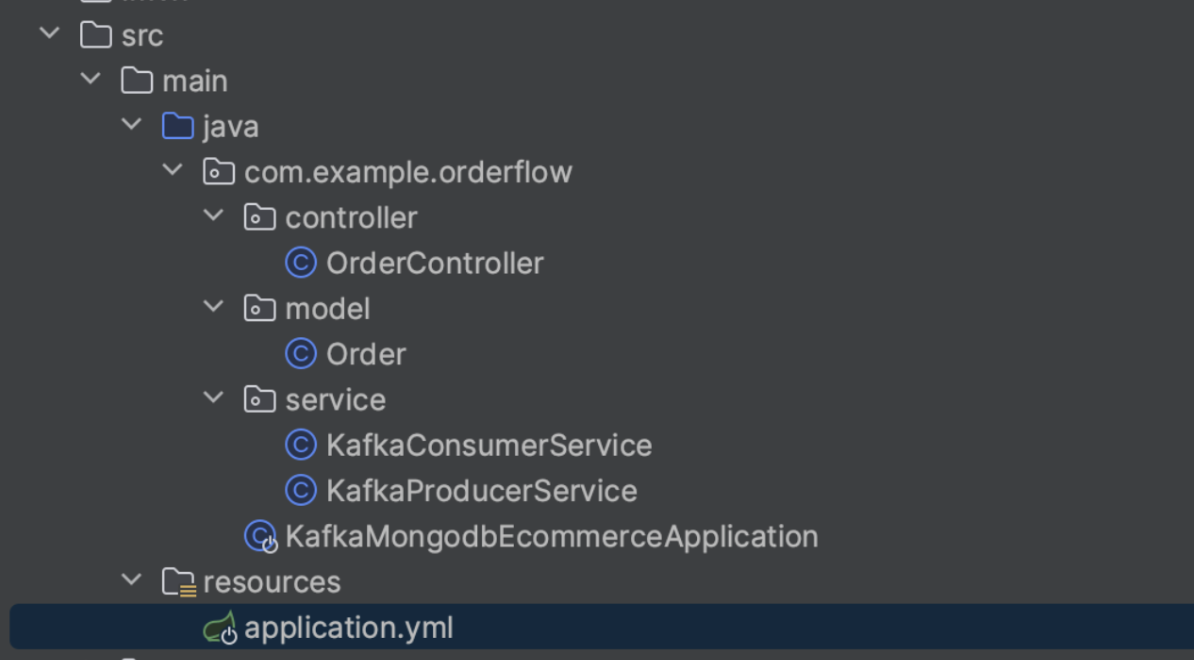

Java project

- Create a Spring Boot Maven Java project locally with the following structure:

- Add dependencies to your pom.xml (Maven) for Kafka clients and MongoDB drivers:

    <dependency>
      <groupId>org.springframework.kafka</groupId>
      <artifactId>spring-kafka</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-data-mongodb</artifactId>
    </dependency>

- Point your consumer application at MongoDB with the Atlas connection string, and set the Kafka bootstrap servers to your DigitalOcean Kafka broker IP and port. Add the following to your Java project configuration (e.g., application.yml):

    spring:
      kafka:
        bootstrap-servers: <droplet_ip_address>:9092
        consumer:
          group-id: order-consumer-group
          auto-offset-reset: earliest
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
        producer:
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer
      data:
        mongodb:
          uri: mongodb+srv://<database_user>:<database_pass>@clusterdo.pcpso25.mongodb.net/<database_name>?retryWrites=true&w=majority
          auto-index-creation: true
    order:
      topic: order-events
    logging:
      level:
        org.springframework.data.mongodb: DEBUG
        com.mongodb: DEBUG

Core implementation: Building a real-time pipeline

Now, let’s dig into the core implementation. We will use the MongoDB Atlas cluster and the Kafka broker on DigitalOcean, each created in a few clicks, to carry an e-commerce order event from a Kafka producer to a Kafka consumer and store it in MongoDB Atlas.

1. Producer: Capturing new orders for real-time processing

In an e-commerce application, every time a customer places an order, that event needs to be captured and communicated instantly to other parts of the system for processing (inventory update, payment verification, shipping, and so on).

Our Kafka producer acts as this first step: When the REST API receives a new order via the /orders endpoint, the producer serializes the order details into a JSON message and publishes it to a Kafka topic named order-events.

This decouples order intake from downstream processes, enabling asynchronous, scalable, and fault-tolerant event handling.

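import org.springframework.beans.factory.annotation.Value;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Service;

@Service
public class KafkaProducerService {

    // Topic name injected from the order.topic property in application.yml
    @Value("${order.topic}")
    private String topic;

    private final KafkaTemplate<String, String> kafkaTemplate;

    public KafkaProducerService(KafkaTemplate<String, String> kafkaTemplate) {
        this.kafkaTemplate = kafkaTemplate;
    }

    // Publishes the serialized order to the topic (no key is set on the record)
    public void sendOrderEvent(String orderJson) {
        kafkaTemplate.send(topic, orderJson);
    }
}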

2. Consumer: Processing and persisting orders efficiently

Downstream services interested in orders subscribe as Kafka consumers on the same topic.

Our Kafka consumer listens to order-events and receives every order message in real time. Upon receipt, it deserializes the JSON back into an Order object and writes it into MongoDB Atlas, making the order durable and queryable.

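The consumer is also idempotent: the orders collection has a unique index on the order ID, so if the same order arrives again, MongoDB rejects the write with a duplicate-key error. The consumer catches that error and skips the message, which preserves data integrity despite retries or duplicate events.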

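import com.fasterxml.jackson.databind.ObjectMapper;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.dao.DuplicateKeyException;
import org.springframework.data.mongodb.core.MongoTemplate;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Service;

@Service
public class KafkaConsumerService {

    @Autowired
    private MongoTemplate mongoTemplate;

    private final ObjectMapper objectMapper = new ObjectMapper();

    @KafkaListener(topics = "${order.topic}", groupId = "${spring.kafka.consumer.group-id}")
    public void consumeOrderEvent(String orderJson) throws Exception {
        // Deserialize the JSON payload back into an Order
        Order order = objectMapper.readValue(orderJson, Order.class);
        try {
            mongoTemplate.save(order);
            System.out.println("Order saved: " + order.getOrderId());
        } catch (DuplicateKeyException ex) {
            // Unique index on orderId rejected the write; safely skip the duplicate
            System.out.println("Duplicate Order skipped (DB constraint): " + order.getOrderId());
        }
    }
}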
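The duplicate-skipping behavior can be illustrated without a database. In the sketch below, a `ConcurrentHashMap` keyed by order ID stands in for the orders collection and its unique index. `IdempotentOrderStore` and `saveIfAbsent` are hypothetical names for this illustration, not Spring Data or MongoDB APIs.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** In-memory stand-in for a collection with a unique index on orderId. */
final class IdempotentOrderStore {
    private final Map<String, String> ordersById = new ConcurrentHashMap<>();

    /**
     * Stores the order only if its ID is new.
     * Returns true when inserted and false when the ID already existed,
     * which mirrors skipping a duplicate-key error from the database.
     */
    boolean saveIfAbsent(String orderId, String orderJson) {
        return ordersById.putIfAbsent(orderId, orderJson) == null;
    }

    int size() {
        return ordersById.size();
    }

    public static void main(String[] args) {
        IdempotentOrderStore store = new IdempotentOrderStore();
        String event = "{\"orderId\":\"1001\",\"amount\":150.2}";

        // The same event delivered twice, as can happen after a consumer restart.
        System.out.println("first delivery inserted:  " + store.saveIfAbsent("1001", event));
        System.out.println("second delivery inserted: " + store.saveIfAbsent("1001", event));
        System.out.println("orders stored: " + store.size());
    }
}
```

The point of the pattern is that processing the same event twice leaves the stored state unchanged, so at-least-once delivery from Kafka does not produce duplicate orders.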

3. Defining schema model

Let’s now set up the model for our data, which is produced into the Kafka topic and also used to persist the data into our MongoDB cluster.

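import org.bson.types.ObjectId;
import org.springframework.data.annotation.Id;
import org.springframework.data.mongodb.core.index.Indexed;
import org.springframework.data.mongodb.core.mapping.Document;

@Document(collection = "orders")
public class Order {

    @Id
    private ObjectId id;

    // Unique index lets MongoDB reject a second document with the same orderId
    @Indexed(unique = true)
    private String orderId;

    private String customerId;

    private double amount;

    // Getters and setters for all fields are needed by Jackson and by
    // order.getOrderId() in the consumer; they are omitted here for brevity.
}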

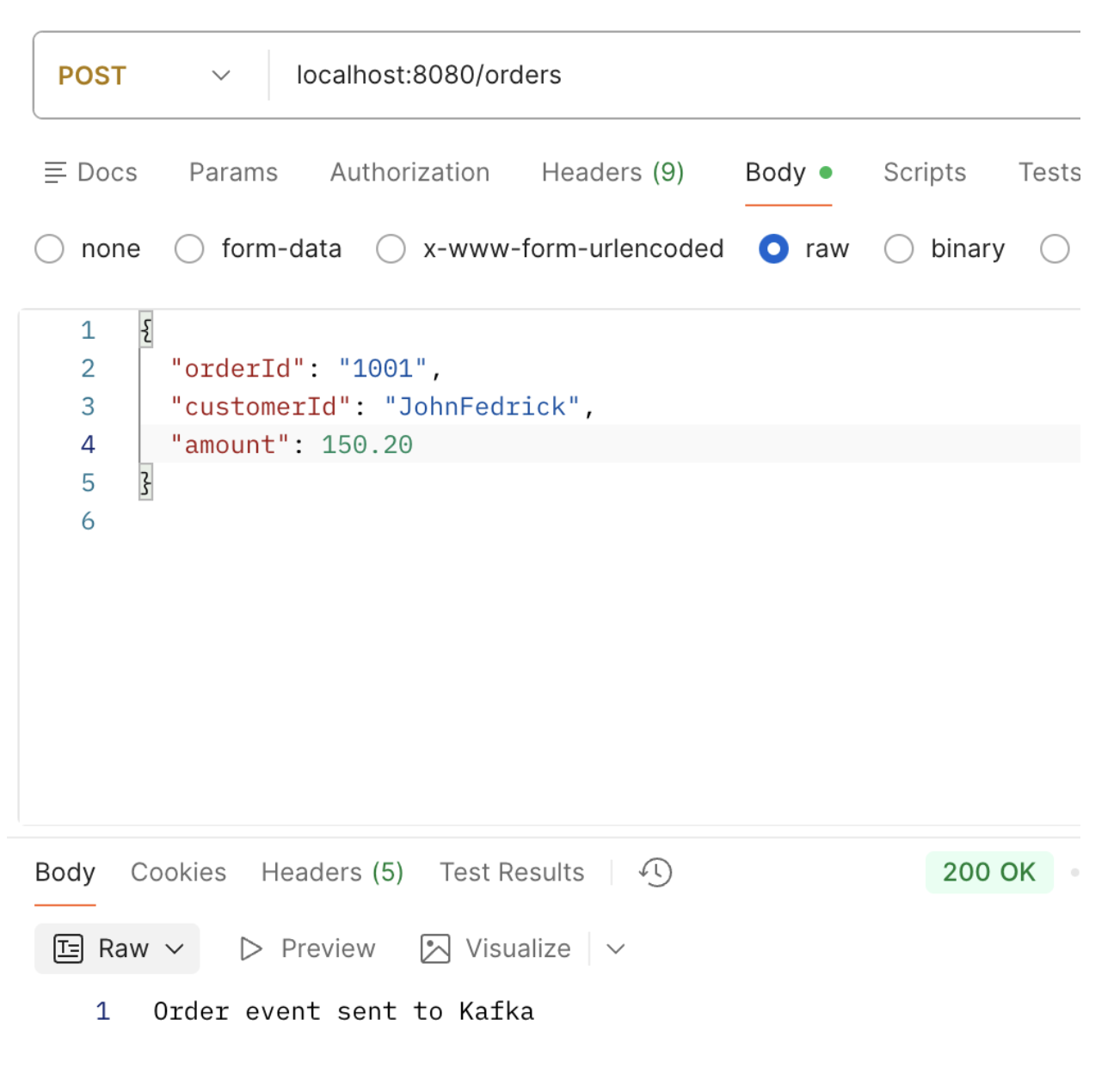

4. Exposing API for producing order events

Now, we will expose an endpoint through the controller so that we can test placing new orders and see the data flow to the Kafka topic and MongoDB.

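import com.fasterxml.jackson.databind.ObjectMapper;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/orders")
public class OrderController {

    private final KafkaProducerService producerService;

    private final ObjectMapper objectMapper = new ObjectMapper();

    public OrderController(KafkaProducerService producerService) {
        this.producerService = producerService;
    }

    @PostMapping
    public ResponseEntity<String> createOrder(@RequestBody Order order) {
        try {
            // Serialize the incoming order and hand it to the producer
            String orderJson = objectMapper.writeValueAsString(order);
            producerService.sendOrderEvent(orderJson);
            return ResponseEntity.ok("Order event sent to Kafka");
        } catch (Exception e) {
            // In case of failed order event, set up a dead letter queue for retry of failed events.
            return ResponseEntity.status(500).body("Failed to send order event");
        }
    }
}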
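The comment in the catch block mentions a dead letter queue. As a rough sketch of that idea in plain Java, a handler can be retried a fixed number of times and the event set aside if every attempt fails. The `DeadLetterRetry` class is hypothetical, and an in-memory list stands in for what would normally be a separate Kafka topic.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Retries a handler a fixed number of times, then parks the event in a dead-letter list. */
final class DeadLetterRetry {
    private final int maxAttempts;
    private final List<String> deadLetters = new ArrayList<>();

    DeadLetterRetry(int maxAttempts) {
        this.maxAttempts = maxAttempts;
    }

    /** Returns true if the handler succeeded within maxAttempts, false if dead-lettered. */
    boolean process(String event, Consumer<String> handler) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                handler.accept(event);
                return true;
            } catch (RuntimeException ex) {
                // A real pipeline would log the failure and back off before retrying.
            }
        }
        deadLetters.add(event);
        return false;
    }

    /** Read-only snapshot of the events that exhausted their retries. */
    List<String> deadLetters() {
        return List.copyOf(deadLetters);
    }

    public static void main(String[] args) {
        DeadLetterRetry retry = new DeadLetterRetry(3);
        boolean delivered = retry.process("{\"orderId\":\"1001\"}", event -> { });
        boolean rejected = !retry.process("not-json", event -> {
            throw new IllegalArgumentException("cannot parse " + event);
        });
        System.out.println("delivered=" + delivered + ", rejected=" + rejected);
        System.out.println("dead letters: " + retry.deadLetters());
    }
}
```

Keeping failed events in a separate place lets the main flow continue while the failures are inspected or replayed later.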

5. Testing the pipeline flow

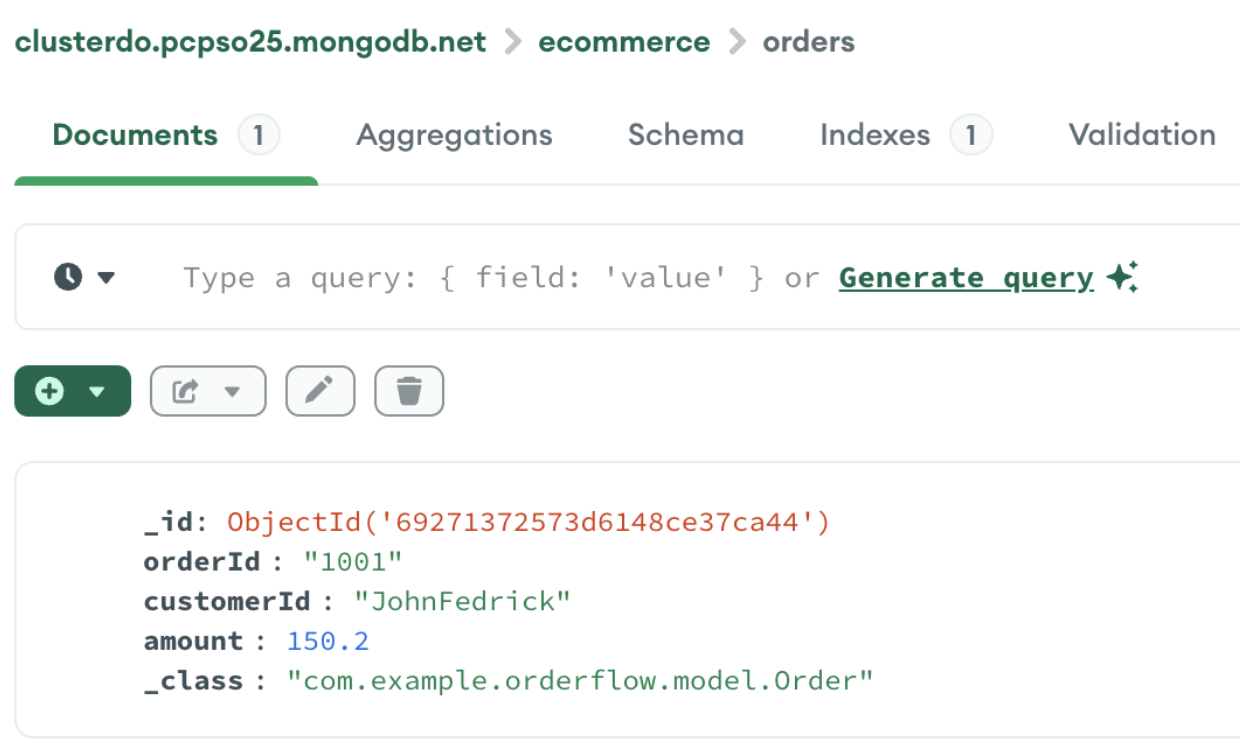

If we start our application and test posting an order using the endpoint, we can see the data being propagated to the Kafka topic. Then, the consumer service that is listening on the topic will consume that data and persist it into our orders collection in the MongoDB Atlas cluster.

We can now check the message in the droplet Kafka topic:

docker exec -it kafka kafka-console-consumer --bootstrap-server localhost:9092 --topic order-events --from-beginning

Example output:

{"id":1,"orderId":"1001","customerId":"JohnFedrick","amount":150.2}

The consumer has also processed this message from the topic and persisted it to the MongoDB cluster in real time.

This architecture connects real-time event streaming with durable storage. It scales effortlessly by allowing multiple consumers to independently process order events. Leveraging Kafka’s fault-tolerance and MongoDB’s flexible document model helps the e-commerce platform stay responsive and reliable. The producer-consumer flow ensures that each order is captured immediately and persisted reliably, while enabling the system to evolve with new processing needs without tight coupling.

You can check the codebase on GitHub.

Advanced topics and best practices

In production systems integrating Kafka and MongoDB for e-commerce or similar real-time applications, several critical considerations are essential to ensure data reliability, consistency, and efficient processing:

Ensuring exactly-once processing

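- Enable Kafka’s idempotent producer (enable.idempotence=true) to prevent duplicate messages at the Kafka level.

- Use Kafka’s transactional APIs to package produce operations and offset commits into a single atomic operation, so that messages are processed exactly once within Kafka. Writes to MongoDB happen outside the Kafka transaction, so the consumer’s database write should stay idempotent, as the unique index on the order ID makes it in this example. This is especially critical in financial or order processing systems.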
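As a hedged sketch of the settings involved, the class below collects the relevant client configuration keys into `java.util.Properties` objects. The keys are standard Kafka client settings. The `ExactlyOnceConfig` class, the broker address, and the transactional ID are illustrative assumptions, and the Spring Boot project in this tutorial would set the equivalent values in its own configuration instead.

```java
import java.util.Properties;

final class ExactlyOnceConfig {
    /** Producer settings for idempotent, transactional writes. */
    static Properties producerProps(String bootstrapServers, String transactionalId) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("enable.idempotence", "true");        // broker de-duplicates producer retries
        props.setProperty("acks", "all");                       // required by the idempotent producer
        props.setProperty("transactional.id", transactionalId); // enables the transactional API
        return props;
    }

    /** Consumer settings that hide records from aborted or still-open transactions. */
    static Properties consumerProps(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("group.id", groupId);
        props.setProperty("isolation.level", "read_committed");
        props.setProperty("enable.auto.commit", "false"); // offsets are committed by the application
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical broker address and IDs, for illustration only.
        System.out.println(producerProps("localhost:9092", "order-producer-1"));
        System.out.println(consumerProps("localhost:9092", "order-consumer-group"));
    }
}
```

With the plain Kafka Java client, objects like these are passed to the `KafkaProducer` and `KafkaConsumer` constructors, and the producer then wraps its sends between `beginTransaction()` and `commitTransaction()`.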

Implementing schema validation

- Adopt schema registries (e.g., Confluent Schema Registry) with formats like Avro or JSON Schema to enforce message schemas, prevent corruption, and enable evolution.

- Consumers should validate incoming messages against schemas, decreasing errors and increasing system resilience.
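A schema registry handles validation for you, but the underlying idea can be sketched in a few lines. The example below checks an already-parsed order event for the three fields used in this tutorial. `OrderEventValidator` is a hypothetical helper, and the rule that the amount must not be negative is an assumption added for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class OrderEventValidator {
    /** Returns a list of violations; an empty list means the event passed. */
    static List<String> validate(Map<String, Object> event) {
        List<String> errors = new ArrayList<>();
        requireType(event, "orderId", String.class, errors);
        requireType(event, "customerId", String.class, errors);
        requireType(event, "amount", Number.class, errors);
        Object amount = event.get("amount");
        if (amount instanceof Number && ((Number) amount).doubleValue() < 0) {
            errors.add("amount must not be negative"); // illustrative business rule
        }
        return errors;
    }

    /** Records an error if the field is absent or has the wrong Java type. */
    private static void requireType(Map<String, Object> event, String field,
                                    Class<?> type, List<String> errors) {
        Object value = event.get(field);
        if (value == null) {
            errors.add(field + " is missing");
        } else if (!type.isInstance(value)) {
            errors.add(field + " must be a " + type.getSimpleName());
        }
    }

    public static void main(String[] args) {
        Map<String, Object> event =
                Map.of("orderId", "1001", "customerId", "JohnFedrick", "amount", 150.2);
        System.out.println("violations: " + validate(event));
    }
}
```

A consumer could run a check like this before writing to the database and route any event with violations to a dead letter topic instead.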

Distributed tracing and monitoring

- Incorporate distributed tracing (e.g., OpenTelemetry or Jaeger) to track message flow across Kafka, consumers, and databases.

- Monitor Kafka metrics (lag, throughput, retries) and MongoDB metrics (connections, query performance) via Prometheus and Grafana dashboards. For programmatic Kafka management, see How To Manage Kafka Programmatically.
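Consumer lag, the first metric listed above, is the gap between the newest offset in each partition and the offset the consumer group has committed. The sketch below computes it from two maps. `ConsumerLag` is a hypothetical helper, the offsets are made-up numbers, and treating a partition with no committed offset as starting from zero is a simplifying assumption.

```java
import java.util.Map;
import java.util.TreeMap;

final class ConsumerLag {
    /** Lag per partition = latest offset in the log minus the group's committed offset. */
    static Map<Integer, Long> perPartition(Map<Integer, Long> endOffsets,
                                           Map<Integer, Long> committedOffsets) {
        Map<Integer, Long> lag = new TreeMap<>();
        for (Map.Entry<Integer, Long> entry : endOffsets.entrySet()) {
            // Assumption: a partition without a committed offset is read from offset 0.
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            lag.put(entry.getKey(), Math.max(0L, entry.getValue() - committed));
        }
        return lag;
    }

    /** Sum of the lag across all partitions. */
    static long total(Map<Integer, Long> lagByPartition) {
        return lagByPartition.values().stream().mapToLong(Long::longValue).sum();
    }

    public static void main(String[] args) {
        Map<Integer, Long> endOffsets = Map.of(0, 120L, 1, 80L, 2, 50L);
        Map<Integer, Long> committed = Map.of(0, 100L, 1, 80L);
        Map<Integer, Long> lag = perPartition(endOffsets, committed);
        System.out.println("lag by partition: " + lag + ", total: " + total(lag));
    }
}
```

A lag that keeps growing usually means consumers cannot keep up with producers, which is a signal to add consumers or partitions.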

Partitioning and scaling strategies

- Carefully select partition keys to ensure even load distribution and preserve order for related messages.

- Scale consumers horizontally, balancing consumption across partitions for high throughput.
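To show why the choice of key matters, here is a simplified sketch of key-based partition assignment. It uses `String.hashCode()` for brevity, whereas Kafka’s default partitioner hashes the serialized key bytes with murmur2, so the partition numbers will differ from a real cluster. `KeyPartitioner` and the sample keys are hypothetical.

```java
final class KeyPartitioner {
    /** Maps a key to a partition index in the range [0, partitionCount). */
    static int partitionFor(String key, int partitionCount) {
        if (partitionCount <= 0) {
            throw new IllegalArgumentException("partitionCount must be positive");
        }
        // floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    public static void main(String[] args) {
        String[] customerIds = {"JohnFedrick", "customer-42", "customer-43"};
        for (String customerId : customerIds) {
            System.out.println(customerId + " -> partition " + partitionFor(customerId, 3));
        }
    }
}
```

Because the same key always maps to the same partition, keying order events by customer ID would keep each customer’s orders in sequence. The producer in this tutorial sends records without a key; `KafkaTemplate` also offers a `send(topic, key, value)` overload for keyed records.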

Security and compliance

- Encrypt data in transit and at rest.

- Use appropriate access controls, authentication, and authorization (e.g., Kafka ACLs, MongoDB roles).

FAQs

1. What is the MongoDB Kafka Connector?

The MongoDB Connector for Apache Kafka is a Confluent-verified connector that can act as both a sink and a source. As a sink, it writes data from Kafka topics into MongoDB. As a source, it publishes changes from MongoDB (for example, via change streams) into Kafka topics. This guide uses a custom Java consumer to write from Kafka to MongoDB; for a fully connector-based setup, see MongoDB’s Kafka Connector documentation.

2. How do you connect Kafka to a database like MongoDB?

You connect Kafka to MongoDB either by using the official MongoDB Kafka Connector (sink and source) or by writing a consumer application that reads from Kafka topics and writes to MongoDB, as in the e-commerce example in this tutorial. Kafka Connect provides source connectors (database to Kafka) and sink connectors (Kafka to database). For programmatic patterns, see our Introduction to Kafka and How to Set Up a Kafka Producer to Source Data Through CLI.

3. Can Apache Kafka replace a database?

No. Kafka is an event streaming platform, not a replacement for databases like MongoDB, MySQL, or Elasticsearch. Kafka stores streams of events with retention and replay, while databases offer querying, indexing, and durable storage with different guarantees. In real-time pipelines, Kafka and MongoDB are used together: Kafka for streaming and buffering, MongoDB for persistent storage and querying.

4. Is Kafka used for real-time data streaming?

Yes. Apache Kafka is widely used to build real-time streaming data pipelines and applications. It supports high-throughput, fault-tolerant event streaming and works with stream processing tools such as Kafka Streams. Combining Kafka with MongoDB gives you real-time event flow plus durable, queryable storage.

5. When should I use Kafka with MongoDB?

Use Kafka with MongoDB when you need to ingest or process high-volume event streams and also store results for the long term, run complex queries, or support both real-time and historical analytics. Common scenarios include e-commerce order processing, change data capture, event-driven microservices, and AI agents that need live events and persistent state.

Conclusion

The steady rhythm of Kafka’s event streams paired with the flexible memory of MongoDB’s document store forms a powerful asset for various use cases, including e-commerce, as you have seen in the above illustration. Building your own pipeline is like assembling a high-performance engine: Once the parts are connected correctly, it can drive the most demanding applications smoothly and efficiently. Of course, actual performance will vary based on hardware, network conditions, and configurations.

Now, it’s your turn to bring this engine to life. Experiment by creating your own Kafka-MongoDB integrations, tailor them to your unique data flows, and explore how these can be extended further with next-generation AI agents like those powered by the GradientAI™ Platform, which add a layer of intelligence and autonomy to your data streams.

You can always tap into vibrant community resources, share your experiments, and learn from others who are also racing along this innovative path. The journey of mastering Kafka and MongoDB is ongoing, ever-evolving, and filled with exciting possibilities.

Next steps and related resources

- Try managed Kafka and MongoDB on DigitalOcean: Use Managed Databases for Apache Kafka and Managed MongoDB to run production-ready clusters without managing servers. See Getting started with Kafka in our docs.

- Go deeper on Kafka: Introduction to Kafka, How To Manage Kafka Programmatically, and How to Set Up a Kafka Producer to Source Data Through CLI.

- How to Set Up a Multi-Node Kafka Cluster Using KRaft

- How to Set Up a Kafka Schema Registry

- Go deeper on MongoDB: MongoDB Tutorial, How To Perform CRUD Operations in MongoDB, and How To Use the MongoDB Shell.

- Check out the codebase: The full e-commerce pipeline is available on GitHub.


About the author(s)

13+ years of experience in working in multiple multinational companies in a wide variety of domains, donning the role of Database administrator for a brief period and now working as a senior software engineer at Tommy Hilfiger and Calvin Klein. Have been part of the MongoDB Champions program for 5 years, have created multiple tech courses, vlogs & blogs on MongoDB and other tech topics.

I help Businesses scale with AI x SEO x (authentic) Content that revives traffic and keeps leads flowing | 3,000,000+ Average monthly readers on Medium | Sr Technical Writer @ DigitalOcean | Ex-Cloud Consultant @ AMEX | Ex-Site Reliability Engineer(DevOps)@Nutanix
