Introduction

In a previous article, we went over Kimi K2. We talked about its MoE architecture, innovative optimizer (MuonClip), and performance optimizations. What we didn’t talk about, at least not in enough depth, was post-training. And that, as it turns out, might be the most interesting part.

Notably, Kimi K2, being an agentic model, has been trained with tool use in mind. Post-training is pivotal in what has been called the era of experience. As the Kimi team explains in their Kimi K2 launch post, “LLMs learn from their own self-generated interactions – receiving rewards that free them from the limits of human data and surpass human capabilities.” This aligns with the discourse shifting from human-level task performance (AGI) to superintelligence.

This article takes a look at Kimi K2’s post-training methodology: how it synthesizes agentic data, aligns behaviour via verifiable and self-critic rewards, and scales reinforcement learning infrastructure.

We encourage you to read the Kimi K2 and K1.5 tech reports, as well as the paper on scaling LLM training with Muon, alongside this article for more information and context. If you haven’t read our previous article on Kimi K2, read that first before proceeding.

Feel free to skip any sections that aren’t of use to you.

Key Takeaways

- Post-training is crucial for agentic models like Kimi K2: It refines the model’s behaviour to be useful and safe, especially in the “Era of Experience” where LLMs learn from self-generated interactions to surpass human capabilities.

- Kimi K2’s post-training combines synthetic data generation for SFT and RL: It leverages large-scale synthetic tool-use data for Supervised Fine-Tuning (SFT) and a Reinforcement Learning (RL) framework with both verifiable and non-verifiable rewards.

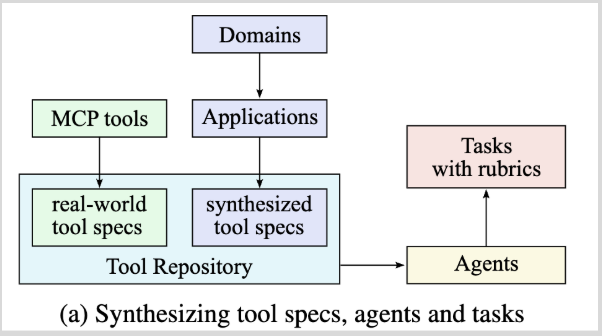

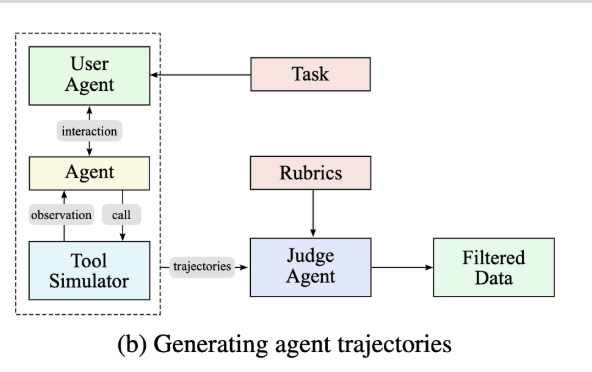

- Data synthesis for tool-use involves three stages: Creating a repository of tool specifications (from real-world and synthetic tools), generating diverse agents and tasks, and then generating successful multi-turn trajectories in simulated environments.

- Verifiable Rewards Gym is a key component of K2’s RL: It uses simple, rule-based functions with a binary reward system (1 for correct, 0 for incorrect) across domains like Math, STEM, Logic, Complex Instruction Following, Faithfulness, Coding, and Safety.

- Non-verifiable rewards use a self-critic approach: For subjective tasks like creative writing, K2 performs pairwise comparisons on its own outputs, guided by rubrics encompassing core values (clarity, conversational fluency, objective interaction) and prescriptive rules (no initial praise, no justification).

- On-policy rollouts improve the critic’s judgment on complex tasks that lack explicit reward signals: Verifiable rewards are used in rollouts to iteratively update the critic. This transfer learning from verifiable tasks enhances accuracy on non-verifiable reward tasks.

The linked papers may have accompanying code repositories. Be sure to check those out if you’re interested in looking at and potentially using the code.

For those that aren’t familiar, let’s begin by distinguishing between pre-training and post-training.

Pre-training vs Post-training

Pre-training refers to the initial phase of training an LLM on massive amounts of data, typically scraped from the internet, books, and other sources. During this stage, the model learns to predict the next token in a sequence through self-supervised learning, developing language or multimodal understanding, factual knowledge, and reasoning capabilities. This process requires enormous amounts of compute and results in a base model that can generate text but may not follow instructions well or align with human preferences.
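
To make the next-token objective concrete, here is a minimal sketch in plain Python. The three-word vocabulary and the hand-written probability table `TOY_MODEL` are made up for illustration and have nothing to do with Kimi K2; a real LLM computes these probabilities with a neural network and conditions on the whole preceding context, not just the previous token.

```python
import math

# Hypothetical toy "model": for each previous token, a probability
# distribution over the next token. Conditions only on one token for simplicity.
TOY_MODEL = {
    "the": {"cat": 0.5, "dog": 0.4, "sat": 0.1},
    "cat": {"sat": 0.8, "the": 0.1, "dog": 0.1},
    "sat": {"the": 0.6, "cat": 0.2, "dog": 0.2},
}


def next_token_loss(tokens):
    """Average negative log-likelihood of each token given the previous one."""
    losses = []
    for prev, target in zip(tokens, tokens[1:]):
        prob = TOY_MODEL[prev].get(target, 1e-9)  # tiny floor avoids log(0)
        losses.append(-math.log(prob))
    return sum(losses) / len(losses)


likely = next_token_loss(["the", "cat", "sat"])
unlikely = next_token_loss(["the", "sat", "cat"])
print(f"likely sequence loss:   {likely:.3f}")
print(f"unlikely sequence loss: {unlikely:.3f}")
```

The sequence the toy model considers more probable gets the lower loss. Pre-training adjusts the model's parameters so that real text ends up with low loss in the same sense.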

Post-training encompasses the techniques applied after pre-training to refine the model’s behaviour to be more useful and safe. This includes supervised fine-tuning (SFT) on high-quality instruction-following datasets, and reinforcement learning from human feedback (RLHF) to align outputs with human values. Post-training transforms the raw pre-trained model into one that can follow instructions, engage in dialogue, and behave appropriately according to human expectations.

As mentioned earlier, we’re going to focus on Kimi K2’s post-training, which combines large-scale synthetic tool-use data for SFT with a unified RL framework that leverages both verifiable and self-critic rewards.

We will begin with a discussion of supervised fine-tuning and then transition into reinforcement learning.

Supervised Fine-Tuning

Supervised fine-tuning (SFT) adapts pre-trained models to specific use cases by training them on labeled data. This enhances the model’s performance on tasks such as question answering, summarization, and conversation.

Recall the token-efficient Muon optimizer from our previous Kimi K2 article. In addition to K2’s pre-training, the Muon optimizer is also used for SFT, and the researchers suggest its use for those who want to further fine-tune the model.

The researchers also initialized the critic capability of K2 in the SFT stage (K2, section 3.2.2) for judging non-verifiable rewards.

We’ll describe how the dataset for SFT was developed in the next section.

Data Synthesis for Tool Use

The researchers synthesize tool-use data in three stages:

| Stage | Details |
|---|---|
| (1) Create a repository of tool specs from real-world tools and LLM tools. | To create this repository, tools were sourced in two ways: (1) They fetched 3000+ MCP tools from GitHub repositories. (2) They used methods presented in WizardLM (“creating large amounts of instruction data with varying levels of complexity using LLM instead of humans”) to “evolve” synthetic tools. |
| (2) Generate an agent for each tool-set sampled from the tool repository for some task. | Researchers create thousands of diverse agents by combining system prompts with various tools. Tasks and evaluation rubrics were created for each agent configuration. |
| (3) Generate trajectories for each agent and task. | The researchers created simulated environments where tool calls were executed and persistent state was maintained. They logged interactions between synthetic user agents and tool-using agents as multi-turn trajectories, retaining only those interactions that were successful according to predefined rubrics. |
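
The shape of the third stage can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: the `NotesEnv` environment, the two scripted "agents", and the `rubric_passes` check are invented for illustration and are not taken from the Kimi K2 report. The sketch only shows the pattern of executing tool calls against an environment with persistent state, logging the interaction as a trajectory, and keeping it only if a rubric judges it successful.

```python
from dataclasses import dataclass, field


@dataclass
class NotesEnv:
    """Hypothetical simulated environment with state that persists across calls."""
    notes: dict = field(default_factory=dict)

    def call(self, tool, **args):
        if tool == "add_note":
            self.notes[args["title"]] = args["body"]
            return "ok"
        if tool == "read_note":
            return self.notes.get(args["title"], "not found")
        return f"unknown tool: {tool}"


def run_episode(planned_calls):
    """Execute a scripted list of tool calls and log a multi-turn trajectory."""
    env = NotesEnv()
    trajectory = []
    for tool, args in planned_calls:
        observation = env.call(tool, **args)
        trajectory.append({"tool": tool, "args": args, "observation": observation})
    return trajectory, env


def rubric_passes(trajectory, env):
    """Hypothetical rubric: the note must be stored and must have been read back."""
    stored = env.notes.get("groceries") == "milk, eggs"
    read_back = any(
        step["tool"] == "read_note" and step["observation"] == "milk, eggs"
        for step in trajectory
    )
    return stored and read_back


# Two scripted "agents" attempting the same task. In practice an LLM picks the calls.
candidates = {
    "careful_agent": [
        ("add_note", {"title": "groceries", "body": "milk, eggs"}),
        ("read_note", {"title": "groceries"}),
    ],
    "sloppy_agent": [
        ("read_note", {"title": "groceries"}),  # reads before anything was written
    ],
}

kept = []
for name, calls in candidates.items():
    trajectory, env = run_episode(calls)
    if rubric_passes(trajectory, env):
        kept.append(name)

print(kept)  # ['careful_agent']
```

Only the trajectory that satisfies the rubric survives the filter, which is the property that makes the retained data usable as SFT targets.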

Reinforcement Learning

The Kimi K1.5 paper, Kimi K1.5: Scaling Reinforcement Learning with LLMs, demonstrated effective approaches to scaling RL. RL is believed to offer better token efficiency and generalization than SFT, which makes it a worthwhile area of optimization. In this section, we’re going to look at K2’s Verifiable Rewards Gym (Section 3.2.1 of the Tech Report) and rubrics for non-verifiable rewards.

Verifiable Rewards

Reinforcement Learning with Verifiable Rewards (RLVR) employs simple, rule-based functions to evaluate the correctness of a model’s responses. It uses a binary reward system, assigning 1 for correct outputs and 0 for incorrect ones. In the case of Kimi K2, the predefined criteria can be as straightforward as passing the test cases for a coding problem.
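
As a minimal sketch of such a rule-based reward, the function below returns 1 only when a candidate solution passes every test case. The `binary_reward` helper, the toy task, and both candidate solutions are hypothetical. A production system would also run untrusted model-generated code in an isolated sandbox rather than calling it in-process as done here.

```python
def binary_reward(candidate_fn, test_cases):
    """Return 1 if the candidate passes every test case, otherwise 0."""
    for args, expected in test_cases:
        try:
            if candidate_fn(*args) != expected:
                return 0
        except Exception:
            return 0  # crashes count as failures
    return 1


# Hypothetical coding task: return the sum of a list of integers.
test_cases = [(([1, 2, 3],), 6), (([],), 0), (([-1, 1],), 0)]


def correct_solution(xs):
    return sum(xs)


def buggy_solution(xs):
    return xs[0] + xs[-1]  # wrong for most inputs, crashes on []


print(binary_reward(correct_solution, test_cases))  # 1
print(binary_reward(buggy_solution, test_cases))    # 0
```

There is no partial credit: a solution that passes two of three tests earns the same reward as one that passes none, which keeps the signal simple and hard to game.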

Moonshot extended this idea into a Verifiable Rewards Gym: an extensible collection of task templates with well-defined evaluation logic, made up of datasets in the domains summarized in the table below:

| Domain | Techniques / Data Sources | Focus Areas | Evaluation Methods |
|---|---|---|---|
| Math, STEM, and Logic | Expert annotations, internal QA extraction pipelines, open datasets (e.g., NuminaMath, AIMO-2) | Multi-hop tabular reasoning, logic puzzles (24-game, Sudoku, riddles, cryptarithms, Morse code decoding), all of moderate task difficulty | Tags to increase coverage of under-covered domains; difficulty filtering using the SFT model’s pass@k accuracy |
| Complex Instruction Following | Two verification mechanisms: (1) a code interpreter for instructions with verifiable outputs (e.g., length, style constraints); (2) LLM-as-judge for more nuanced evaluation. An additional “hack-check” layer ensures the model isn’t pretending to have followed instructions. Training data comes from three sources: expert-crafted prompts, automated instruction augmentation (inspired by AutoIF), and a model fine-tuned to generate edge cases. | Instruction following, edge case robustness, consistency over dialogues | Rubric-based scoring, “hack-check” layer for deceptive completions |
| Faithfulness | Sentence-level faithfulness judge trained using the FACTS Grounding framework, verifying factual grounding of self-generated reasoning chains, automated detection of unsupported claims in output | Factual accuracy, grounding verification, claim validation | Automated faithfulness scoring, unsupported claim detection |
| Coding & Software Engineering | Open-source coding datasets (e.g., OpenCoder, KodCode), human-written unit tests from pre-training data, GitHub PRs and issues | Competitive programming, pull request generation, multi-file reasoning | Unit test pass rates, execution in real sandboxes (Kubernetes-based) (K1.5, section 2.6.4) |
| Safety | Human-curated seed prompts; prompt evolution pipeline with an attack model, a target model, and a judge model | Jailbreak detection, toxic or harmful outputs | The attack model crafts adversarial prompts to test the target model’s limits, while the judge model assesses the response, awarding a binary reward (success/failure) based on a task-specific rubric |
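
One of the evaluation methods listed for Math, STEM, and Logic is difficulty filtering with the SFT model’s pass@k accuracy. The sketch below shows one way such a filter could look. The `pass_at_k` function is the standard unbiased pass@k estimator widely used for code-generation benchmarks; the `keep_moderate` helper, its thresholds, and the sample counts are assumptions made for illustration, since the exact filtering rule is not described here.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate from n samples of which c were correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def keep_moderate(problems, k=4, low=0.2, high=0.9):
    """Keep problems whose pass@k falls inside a (hypothetical) moderate band."""
    kept = []
    for name, n, c in problems:
        score = pass_at_k(n, c, k)
        if low <= score <= high:
            kept.append(name)
    return kept


# (problem id, samples drawn, samples correct) -- made-up numbers
problems = [
    ("too_easy", 16, 16),
    ("moderate", 16, 3),
    ("too_hard", 16, 0),
]

print(keep_moderate(problems))  # ['moderate']
```

Problems the model always solves or never solves carry little learning signal under a binary reward, which is the intuition behind keeping only the moderate band.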

Non-verifiable Rewards

A self-critic reward is used for tasks that rely on subjective preferences, such as creative writing and open-ended question answering. Here, K2 performs pairwise comparisons on its own outputs, guided by the rubrics below.

| Category | Rubric | Description |
|---|---|---|
| Core: to encompass Kimi’s fundamental values as a helpful AI assistant | Clarity & Relevance | Be concise, stay on-topic, avoid unnecessary details |
| | Conversational Fluency | Natural dialogue, appropriate engagement, judicious follow-ups |
| | Objective Interaction | Stay grounded, avoid metacommentary and excessive praise |
| Prescriptive: aim to eliminate reward hacking | No Initial Praise | Don’t start with “Great question!” or similar compliments |
| | No Justification | Don’t explain why your response is good or successful |
| Human Annotated: for specific instructional contexts | varies | varies |
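
To illustrate how pairwise comparisons can become a scalar reward, here is a small sketch. It is not Moonshot’s implementation: `toy_critic` is a hand-written heuristic standing in for the model’s own judgment, and scoring each response by its win fraction in `pairwise_rewards` is one assumed way to aggregate the comparisons.

```python
from itertools import combinations


def toy_critic(response_a, response_b):
    """Hypothetical stand-in for the self-critic. Returns the preferred response.

    Applies two rubric-like rules: penalize opening praise, then prefer brevity.
    In K2 the model itself makes this judgment; this heuristic is made up.
    """
    def penalty(text):
        starts_with_praise = text.lower().startswith("great question")
        return (1 if starts_with_praise else 0, len(text))

    return response_a if penalty(response_a) <= penalty(response_b) else response_b


def pairwise_rewards(responses, critic):
    """Score each response by the fraction of pairwise comparisons it wins."""
    wins = {r: 0 for r in responses}
    for a, b in combinations(responses, 2):
        wins[critic(a, b)] += 1
    comparisons_each = len(responses) - 1
    return {r: wins[r] / comparisons_each for r in responses}


responses = [
    "Great question! Paris is the capital of France.",
    "Paris is the capital of France.",
    "The capital of France is Paris, a city with a long and storied history.",
]

for text, reward in pairwise_rewards(responses, toy_critic).items():
    print(f"{reward:.2f}  {text}")
```

The concise answer wins both of its comparisons, the longer answer wins one, and the answer that opens with praise wins none, mirroring the “No Initial Praise” and “Clarity & Relevance” rubrics.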

Rollouts

In reinforcement learning and agent development, rollouts refer to the process of running an agent through episodes or sequences of interactions with an environment to collect experience data.

During a rollout, the agent follows its current policy to take actions in the environment, receives observations and rewards, and continues until the episode terminates (either naturally or after a maximum number of steps). This generates a trajectory or sequence of state-action-reward tuples that can be used for learning.
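
A rollout loop can be sketched as follows. The `CoinGuessEnv` environment and the `flip_after_wrong` policy are toy inventions for illustration; only the loop structure (act, observe, record, stop on termination) reflects the description above.

```python
import random


class CoinGuessEnv:
    """Hypothetical toy environment: guess a hidden bit within max_steps tries."""

    def __init__(self, max_steps=5, seed=0):
        self.rng = random.Random(seed)
        self.max_steps = max_steps

    def reset(self):
        self.secret = self.rng.randint(0, 1)
        self.steps = 0
        return "start"  # initial observation

    def step(self, action):
        self.steps += 1
        correct = action == self.secret
        reward = 1.0 if correct else 0.0
        # Episode ends naturally on a correct guess, or at the step limit.
        done = correct or self.steps >= self.max_steps
        observation = "correct" if correct else "wrong"
        return observation, reward, done


def flip_after_wrong(state):
    """Toy policy: guess 0 first, then switch to 1 after a wrong guess."""
    return 0 if state == "start" else 1


def rollout(env, policy):
    """Run one episode with the given policy; return (state, action, reward) tuples."""
    trajectory = []
    state = env.reset()
    done = False
    while not done:
        action = policy(state)
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward))
        state = next_state
    return trajectory


trajectory = rollout(CoinGuessEnv(seed=0), flip_after_wrong)
for step in trajectory:
    print(step)
```

The returned list is the trajectory a learning algorithm would consume. Because the same policy that is being trained also generates the data, this is an on-policy rollout.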

Here, on-policy rollouts with verifiable rewards were used to iteratively update the critic, improving its evaluation accuracy on the latest policy. In other words, verifiable rewards were used to improve the estimation of non-verifiable rewards.
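
One plausible reading of this idea, sketched below, is that verifiable rewards supply ground-truth preference labels: for a given prompt, any verified-correct response should be preferred over any verified-incorrect one. The helper names, the sample data, and the deliberately flawed `length_biased_critic` are all hypothetical, and the actual update procedure used for K2 may differ.

```python
from itertools import product


def preference_pairs(rollouts):
    """Pair each verified-correct response with each verified-incorrect one.

    `rollouts` is a list of (response, verifiable_reward) for one prompt.
    Each pair is (preferred, rejected), a label the critic can be trained on.
    """
    correct = [resp for resp, reward in rollouts if reward == 1]
    incorrect = [resp for resp, reward in rollouts if reward == 0]
    return list(product(correct, incorrect))


def critic_accuracy(critic, pairs):
    """Fraction of pairs where the critic prefers the verified-correct response."""
    if not pairs:
        return 0.0
    hits = sum(1 for good, bad in pairs if critic(good, bad) == good)
    return hits / len(pairs)


# Made-up on-policy samples for the prompt "What is 12 * 12?"
rollouts = [
    ("144", 1),
    ("The answer is 144.", 1),
    ("124", 0),
    ("It is definitely 142, trust me.", 0),
]


def length_biased_critic(a, b):
    """Hypothetical flawed critic that always prefers the longer response."""
    return a if len(a) >= len(b) else b


pairs = preference_pairs(rollouts)
print(len(pairs), critic_accuracy(length_biased_critic, pairs))  # 4 0.5
```

The pairs the critic gets wrong are exactly the examples that would correct its bias. Because the samples come from the current policy, the critic stays calibrated on the kind of outputs it will actually have to judge.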

For those who want to improve their intuition around Reinforcement Learning, we’re fans of the Hugging Face Deep Reinforcement Learning Course.

Note: We didn’t cover RL infrastructure in this article (this article will be updated soon). In the meantime, we encourage those curious to read the Kimi papers (K1.5, section 2.6; K2, section 3.3 and Appendix G).

Conclusion

By combining large-scale synthetic tool-use data for SFT with both verifiable and self-critic rewards for RL, Kimi K2 demonstrates a robust methodology for aligning model behaviour. This focus on post-training with agentic models, particularly within the “Era of Experience,” positions Kimi K2 as a notable model in the pursuit of more intelligent and adaptable AI systems.


About the author

Melani is a Technical Writer at DigitalOcean based in Toronto. She has experience in teaching, data quality, consulting, and writing. Melani graduated with a BSc and Master’s from Queen's University.
