
Technical Evangelist // AI Arcanist

The capabilities of image editing models cannot be overstated. They make it easy to modify existing images in many ways, such as improving photo quality for artists and designers, creating marketing materials, and developing game assets. They can go even further than that, too, with AI-enabled capabilities like style transfer.

After seeing how capable these models were in our review of image editing models, we wanted to build a functional example of how they can be applied to real-world use cases.

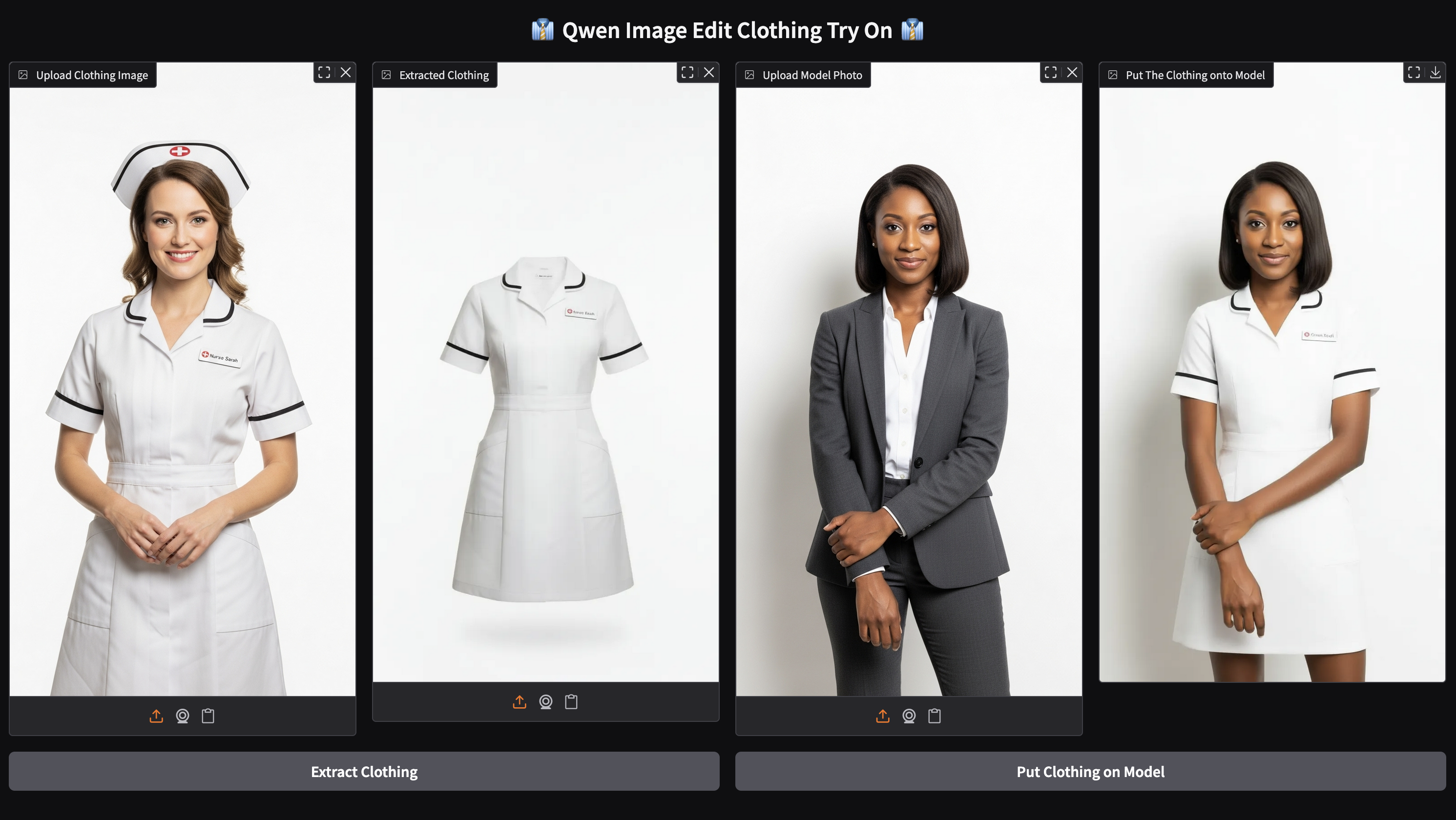

In this article, we introduce the Try On Clothes application for Qwen Image Edit 2509. The application chains two steps, each powered by its own Qwen Image Edit 2509 LoRA. The first step extracts the clothing from a photo of a person, producing the outfit on a white backdrop. The second step puts that outfit onto a chosen subject. Together, these steps give a quick and easy way to see how clothing will look on different people.

Follow along for a breakdown of the application and instructions for running the application on a DigitalOcean GPU Droplet.

Key Takeaways

- Qwen Image Edit 2509 combined with LoRAs can power a capable clothing try-on application

- Running the application on a DigitalOcean GPU Droplet is easy!

- The application is also available as a Hugging Face Space, and the LoRA models are on Hugging Face as `JamesDigitalOcean/Qwen_Image_Edit_Extract_Clothing` for extracting clothing and `JamesDigitalOcean/Qwen_Image_Edit_Try_On_Clothes` for trying on the clothes

The Clothing Try On Web Application

Let’s start by breaking down the web application. We have added comments throughout the code below to explain each part.

```python
# Load in the packages
import spaces
import torch
from PIL import Image
from diffusers import QwenImageEditPlusPipeline
import gradio as gr

# Instantiate the model once; both functions reuse this pipeline
pipe = QwenImageEditPlusPipeline.from_pretrained(
    "Qwen/Qwen-Image-Edit-2509", torch_dtype=torch.bfloat16
).to("cuda")


# extract_clothes: load the LoRA weights into the pipeline, convert the input
# image, and run the pipeline. Then remove the weights and return the image.
@spaces.GPU()
def extract_clothes(img1):
    # diffusers expects the singular keywords weight_name and adapter_name
    pipe.load_lora_weights(
        "JamesDigitalOcean/Qwen_Image_Edit_Extract_Clothing",
        weight_name="qwen_image_edit_remove_body.safetensors",
        adapter_name="removebody",
    )
    # gr.Image passes a NumPy array by default; convert it to a PIL image
    pil_image = Image.fromarray(img1, 'RGB')
    image = pipe(
        image=[pil_image],
        prompt=(
            "removebody remove the person from this image, but leave the outfit. "
            "the clothes should remain after deleting the person's body, skin, and hair. "
            "leave the clothes in front of a white background"
        ),
        num_inference_steps=50,
    ).images[0]
    pipe.delete_adapters("removebody")
    return image


# tryon_clothes: load the LoRA weights into the pipeline, convert the two input
# images, and run the pipeline. Then remove the weights and return the image.
@spaces.GPU()
def tryon_clothes(img2, img3):
    pipe.load_lora_weights(
        "JamesDigitalOcean/Qwen_Image_Edit_Try_On_Clothes",
        weight_name="qwen_image_edit_tryon.safetensors",
        adapter_name="tryonclothes",
    )
    pil_image2 = Image.fromarray(img2, 'RGB')  # extracted clothing
    pil_image3 = Image.fromarray(img3, 'RGB')  # subject photo
    image = pipe(
        image=[pil_image2, pil_image3],
        prompt="tryon_clothes dress the clothing onto the person",
        num_inference_steps=50,
    ).images[0]
    pipe.delete_adapters("tryonclothes")
    return image


# Gradio application code
with gr.Blocks() as demo:
    gr.Markdown("<div style='display:flex;justify-content:center;align-items:center;gap:.5rem;font-size:24px;'>👔 <strong>Qwen Image Edit Clothing Try On</strong> 👔</div>")
    with gr.Column():
        with gr.Row():
            img1 = gr.Image(label='Upload Clothing Image')
            img2 = gr.Image(label='Extracted Clothing')
            img3 = gr.Image(label='Upload Model Photo')
            img4 = gr.Image(label='Put The Clothing onto Model')
    with gr.Column():
        with gr.Row():
            remove_button = gr.Button('Extract Clothing')
            tryon_button = gr.Button('Put Clothing on Model')

    # Step 1: clothing photo (img1) -> extracted clothing (img2)
    gr.on(
        triggers=[remove_button.click],
        fn=extract_clothes,
        inputs=[img1],
        outputs=[img2],
    )

    # Step 2: extracted clothing (img2) + subject photo (img3) -> result (img4)
    gr.on(
        triggers=[tryon_button.click],
        fn=tryon_clothes,
        inputs=[img2, img3],
        outputs=[img4],
    )

if __name__ == "__main__":
    demo.launch(share=True)
```

As we can see, the application code is relatively straightforward. The base model is loaded once when the app starts, and two short functions use Diffusers to load their respective LoRA into that pipeline. One runs clothing extraction on a single image; the other runs clothing try-on using two images. Each generates a single output image, which can then be viewed or downloaded in .webp format.
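Both functions follow the same load, run, delete pattern for their LoRA. One possible refinement, sketched below, is to wrap that pattern in a context manager. The `lora_adapter` helper is hypothetical and not part of the original app; it assumes `pipe` exposes the diffusers methods `load_lora_weights(..., weight_name=..., adapter_name=...)` and `delete_adapters(...)`. Its benefit is that the adapter is removed even if generation raises, so a failed request does not leave it attached for the next call.

```python
from contextlib import contextmanager


@contextmanager
def lora_adapter(pipe, repo_id, weight_name, adapter_name):
    """Attach a LoRA to `pipe` for the duration of a `with` block (hypothetical helper).

    Assumes `pipe` provides the diffusers-style methods
    `load_lora_weights(repo_id, weight_name=..., adapter_name=...)` and
    `delete_adapters(adapter_name)`.
    """
    pipe.load_lora_weights(repo_id, weight_name=weight_name, adapter_name=adapter_name)
    try:
        yield pipe
    finally:
        # Runs whether or not the body raised, so the adapter never lingers.
        pipe.delete_adapters(adapter_name)
```

Inside `extract_clothes`, for instance, the `pipe(...)` call would sit under `with lora_adapter(pipe, "JamesDigitalOcean/Qwen_Image_Edit_Extract_Clothing", "qwen_image_edit_remove_body.safetensors", "removebody"):`, replacing the separate load and delete calls.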

The heavy lifting is done by the Low-Rank Adaptation (LoRA) models. Each was trained for 3,000 steps at a batch size of 4 on a set of twenty images, using an NVIDIA H200 on a DigitalOcean GPU Droplet. The models were trained with Ostris’s AI-Toolkit.
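Those training numbers imply each LoRA saw its small dataset many times over. A minimal sketch of the arithmetic, assuming one optimizer step consumes exactly one batch (no gradient accumulation; the article does not state the accumulation setting):

```python
def training_exposure(steps, batch_size, dataset_size):
    """Return (samples_seen, epochs) for a step-based training run.

    Assumes each optimizer step consumes exactly one batch, i.e. no
    gradient accumulation.
    """
    samples_seen = steps * batch_size
    epochs = samples_seen / dataset_size
    return samples_seen, epochs


samples, epochs = training_exposure(steps=3000, batch_size=4, dataset_size=20)
print(f"{samples} samples, {epochs:.0f} epochs")  # 12000 samples, 600 epochs
```

Under that assumption, 3,000 steps × 4 images per batch is 12,000 samples, or about 600 passes over the twenty training images.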

Put together, these pieces form the web application shown at the top of this article. That example shows a full run of the pipeline, from clothing extraction to try-on: the model first extracts the clothing onto a white backdrop, and then it places the clothing onto the second subject.
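Because the extracted outfit is an ordinary image, the first stage only needs to run once per outfit, and its output can be reused for any number of subjects. A minimal sketch of that chaining outside the Gradio UI; `try_on_many` is a hypothetical helper, and the two stages are passed in as callables so the sketch does not depend on loading the model:

```python
from typing import Any, Callable, List, Sequence


def try_on_many(
    clothing_photo: Any,
    subject_photos: Sequence[Any],
    extract: Callable[[Any], Any],
    dress: Callable[[Any, Any], Any],
) -> List[Any]:
    """Extract the outfit once, then dress each subject with it (hypothetical helper)."""
    outfit = extract(clothing_photo)  # stage 1: outfit on a white backdrop
    return [dress(outfit, subject) for subject in subject_photos]  # stage 2 per subject
```

With the functions from `app.py`, one option is `try_on_many(clothing_array, [subject_a, subject_b], extract_clothes, lambda outfit, s: tryon_clothes(np.asarray(outfit), s))`, where converting with `np.asarray` keeps the inputs in the NumPy form the functions receive from Gradio.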

Running the Clothing Try On Application

To get started with the Clothing Try On Application, we first need sufficient compute. We recommend using an NVIDIA H100-powered GPU Droplet on the Gradient Platform from DigitalOcean. Sign in to your account and spin up the GPU Droplet. You can find step-by-step instructions for setting up the GPU Droplet in this article.

Once your GPU Droplet has spun up, we can set up the environment. First, clone the Hugging Face Space repository onto your machine. Then, create and activate a virtual environment for the packages. Finally, install the required packages. You can do all of this with the snippet below.

```bash
git clone https://huggingface.co/spaces/JamesDigitalOcean/Qwen_Image_Edit_Try_On_Clothes
cd Qwen_Image_Edit_Try_On_Clothes/
python3 -m venv venv
source venv/bin/activate
pip3 install -r requirements.txt
```
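Before launching, it can help to confirm that the packages `app.py` imports actually installed into the virtual environment. A minimal sketch using only the standard library; the distribution names are inferred from the imports in `app.py` (`pillow` provides `PIL`) and are an assumption, not the exact contents of `requirements.txt`:

```python
from importlib.metadata import PackageNotFoundError, version

# Distributions providing the modules imported by app.py (assumed list).
REQUIRED = ["torch", "diffusers", "gradio", "spaces", "pillow"]


def installed_versions(names):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED).items():
        print(f"{name:10s} {ver or 'MISSING'}")
```

Saved as a script and run inside the activated `venv`, any line reporting `MISSING` points to a package that `pip3 install -r requirements.txt` did not install.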

With that done, we are ready to run the web application. Run the following command, then click the public share link that Gradio prints to open the application in any web browser.

```bash
python app.py
```

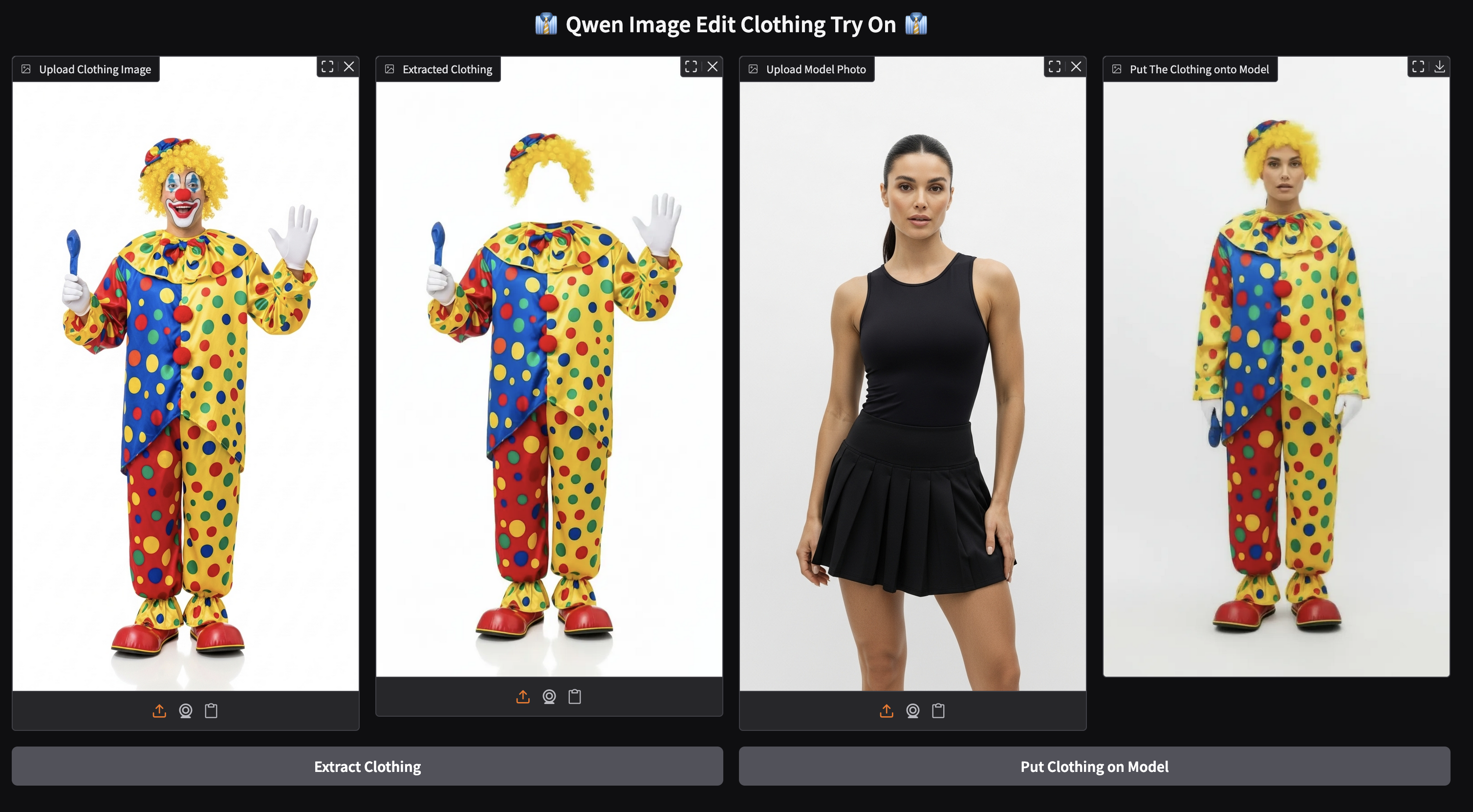

Here is another example run. As we can see, the pipeline performs well at both stages: clothing extraction and try-on. We recommend trying it with your own photos to see how you look in various outfits!

Closing Thoughts

This web app was created in response to the long-standing success of the Kolors Clothing Try On application on Hugging Face. We set out to improve on this design with more modern models, and to ensure that this kind of technology is publicly accessible to anyone who wants to use it. Qwen Image Edit 2509 is an exceptionally powerful image editing model, and we are very impressed with its results even without LoRAs. Nonetheless, the LoRAs are essential to the pipeline and remain the main contribution of this project. The model files are available on Hugging Face as `JamesDigitalOcean/Qwen_Image_Edit_Extract_Clothing` for clothing extraction and `JamesDigitalOcean/Qwen_Image_Edit_Try_On_Clothes` for clothing try-on.

Thanks for learning with the DigitalOcean Community. Check out our offerings for compute, storage, networking, and managed databases.
