Note: Pricing and product information are correct as of September 23, 2025, and are subject to change.

The AI space is crowded with major players, but two research labs stand out as industry leaders: OpenAI and Anthropic. Interestingly, these competing giants share a founding connection. Anthropic’s co-founders Dario and Daniela Amodei were senior leaders at OpenAI before leaving to found their own AI safety-focused company in 2021. Both organizations have also developed AI assistants that have become household names: ChatGPT and Claude.

These platforms have evolved beyond simple chatbots into powerful tools that are transforming how we work, learn, and solve complex problems. ChatGPT has reportedly helped identify rare conditions after doctors were stumped, from tethered cord syndrome in a child who had seen 17 physicians to a hidden thyroid cancer that required immediate surgery. Meanwhile, Claude placed in the top 3% globally in elite cybersecurity competitions against human experts. These are just two of many emerging use cases.

This article compares the features, pricing, and models of Claude and ChatGPT to help you decide which one fits your workflow or business needs. Spoiler alert: many users keep accounts on both platforms, for example using ChatGPT’s Deep Research for market analysis and literature reviews while turning to Claude Code for complex software development and debugging. It is often not a question of either/or, but of which tool suits which task.

Key takeaways:

- ChatGPT launched earlier, in late 2022, as a text-only chatbot and now supports multimodal input, web browsing, custom GPTs, and automation through agents and connectors. Claude launched as an AI assistant in 2023 and has since expanded into a safety-focused productivity tool.

- Claude excels at handling long inputs, structured reasoning, and cautious, principle-guided outputs, while ChatGPT is known for app integrations, task automation, multimodal capabilities like voice and image, and Deep Research.

- Both platforms offer a free tier and paid plans that scale up to enterprise tiers.

What is Claude?

Claude is a family of LLMs built by Anthropic, and the AI assistant built on them, that serves as a ‘thinking partner’ to help users with tasks like learning, writing, coding, analysis, and research. Anthropic began testing Claude in early 2023 through a closed alpha (a limited early test with invited users) with select partners such as Notion, Quora, and DuckDuckGo. In March 2023, Anthropic publicly introduced Claude as a next-generation AI assistant grounded in its research on helpful, honest, and harmless systems. It initially released two variants: the high-performance “Claude” and the lighter, faster “Claude Instant”.

Claude has since evolved through multiple generations. Claude Sonnet 4.5, released in September 2025, is Anthropic’s strongest model for coding and agentic work; Anthropic reports that it can sustain autonomous work on complex tasks for more than 30 hours and achieves state-of-the-art results on coding benchmarks.

Claude is available on web, desktop, and mobile, and through the API. It is also designed to integrate with documents, tools, and external knowledge sources to provide contextual, conversational assistance.

Highlights:

- Upload files (PDF, Word, Excel) or images for Claude to analyze, then ask questions or draw insights based on that content.

- Create “Artifacts”: shareable, interactive visualizations, tools, diagrams, or reports that turn raw ideas or data into a more structured format or visual.

- Switch between typing and speaking (voice input/output), and organize work by topic with Projects, so related conversations and context are grouped for future reference.

- Pull in external knowledge via web search, and integrate with tools like Google Workspace.

- Built under Anthropic’s security program and backed by compliance certifications such as SOC 2 Type II and ISO 27001.


Claude features

Claude is well suited to very long context, structured outputs, and safety-aligned reasoning.

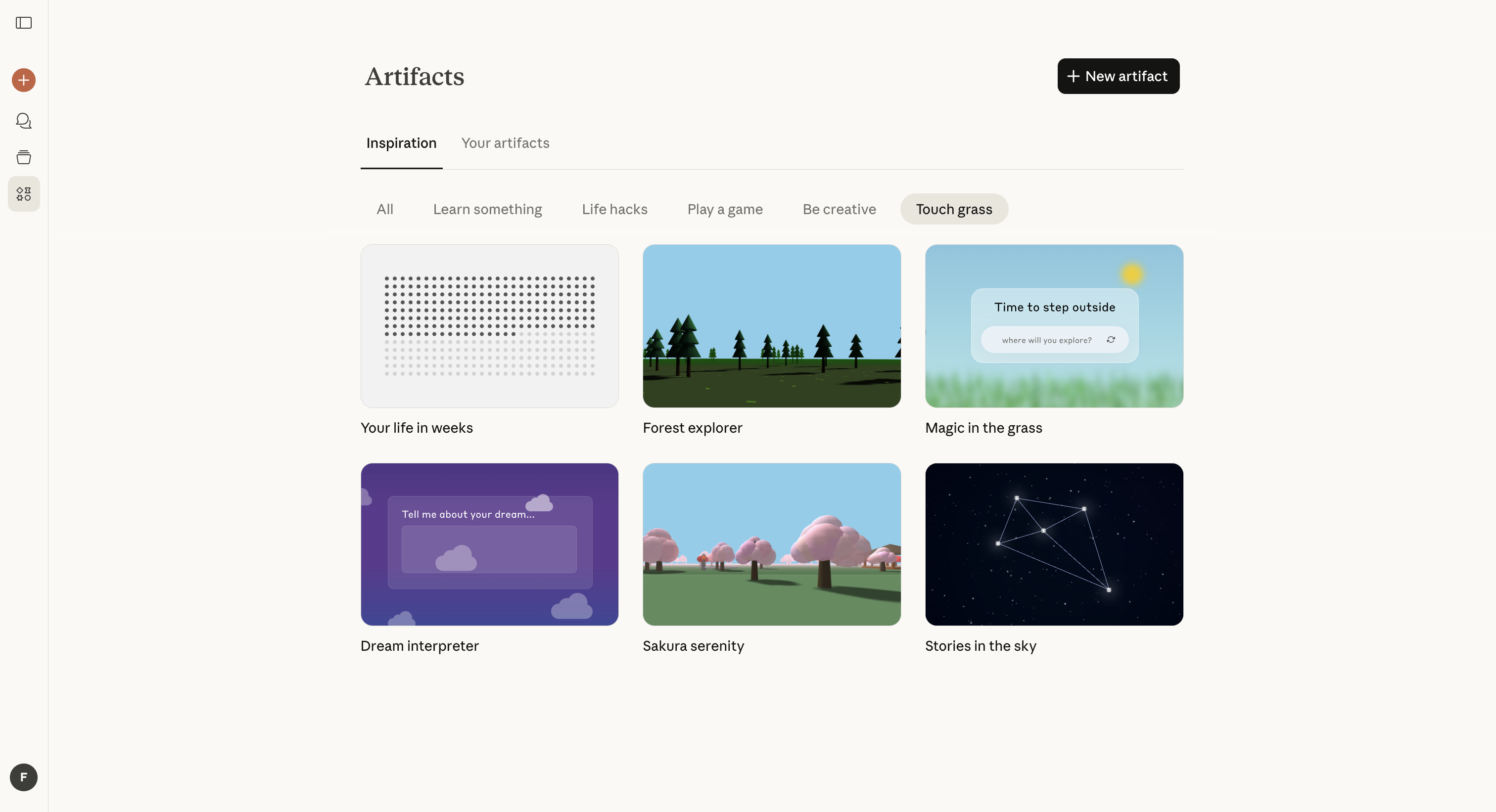

1. Artifacts

Artifacts give you a dedicated workspace beside the chat to view, iterate on, and organize what Claude creates: anything from code snippets and flowcharts to websites and interactive dashboards. Anthropic has since added a dedicated artifacts space and the ability to build AI-powered apps directly in that view, available on the web and in the iOS and Android apps for Free, Pro, and Team users. The goal is to turn raw ideas into tangible, shareable work products quickly.

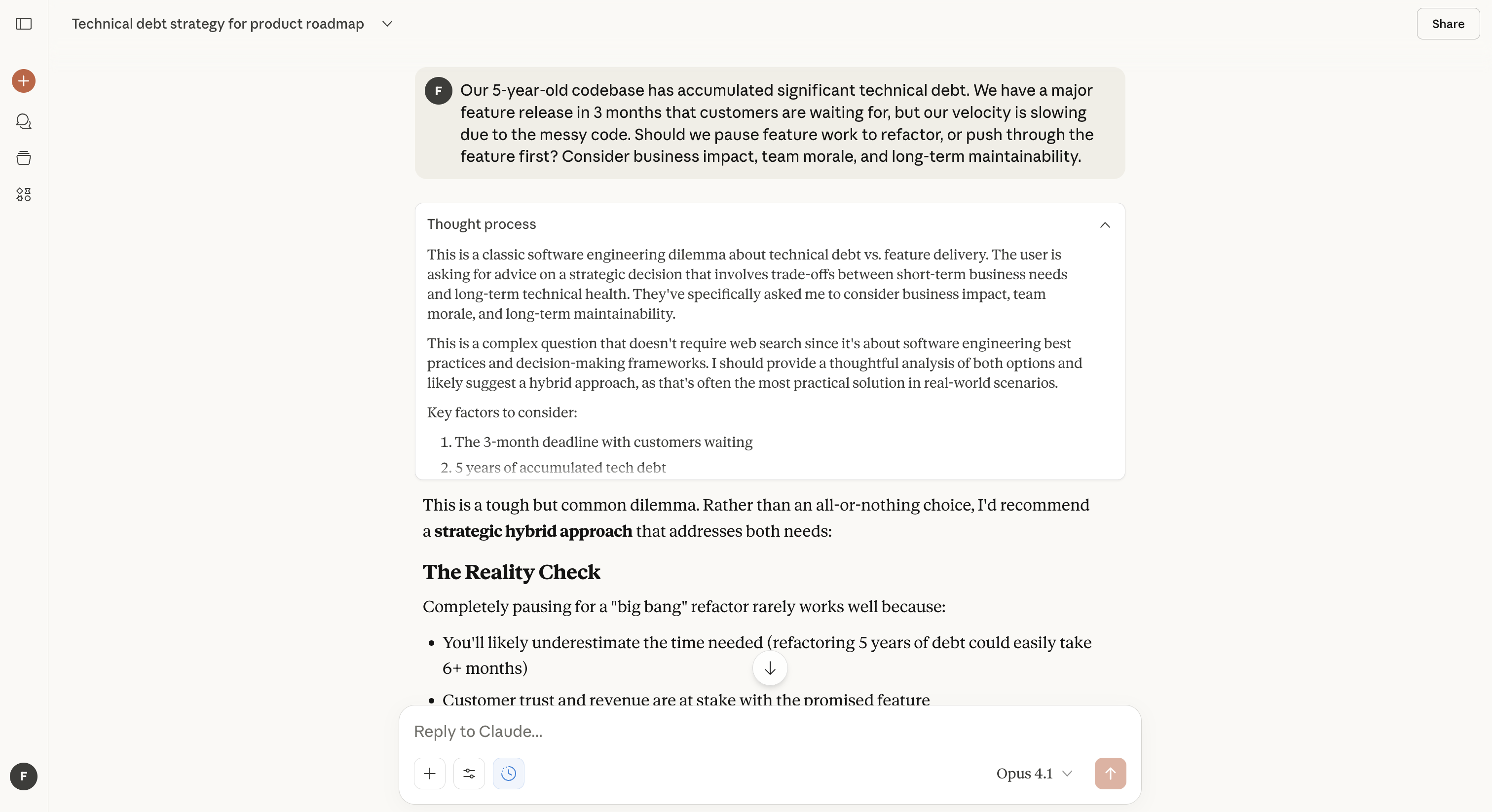

2. Extended thinking

With “extended thinking” you can toggle deeper reasoning on or off (first shipped with Claude 3.7 Sonnet) and even set a “thinking budget” so the model spends more or less time on hard problems. Anthropic also experimented with making parts of the thought process visible, which can help users inspect reasoning but comes with trade-offs and is treated as a research preview. The feature aims to boost performance on tougher tasks without switching models.
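In the Anthropic API, the thinking budget is set per request. The sketch below only builds the JSON request body for the Messages API and makes no network call. The `thinking` object with `type` and `budget_tokens` follows Anthropic’s documented shape, and the two validation rules reflect its documentation (a minimum budget of 1,024 tokens, and a budget smaller than `max_tokens`); the model alias and prompt are assumptions, so check the current docs before relying on them.

```python
import json

# Assumption: model alias for illustration; confirm current IDs in Anthropic's docs.
MODEL_ID = "claude-sonnet-4-5"
MIN_THINKING_BUDGET = 1024  # documented minimum thinking budget, in tokens


def build_thinking_request(prompt: str, budget_tokens: int, max_tokens: int) -> dict:
    """Build a Messages API request body with extended thinking enabled."""
    if budget_tokens < MIN_THINKING_BUDGET:
        raise ValueError("thinking budget must be at least 1,024 tokens")
    if budget_tokens >= max_tokens:
        # Thinking tokens count toward max_tokens, so the budget must be smaller.
        raise ValueError("budget_tokens must be less than max_tokens")
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_thinking_request("Prove that sqrt(2) is irrational.", 8000, 16000)
    print(json.dumps(body, indent=2))
```

Raising the budget lets the model spend more tokens reasoning on hard problems; lowering it trades depth for speed and cost.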

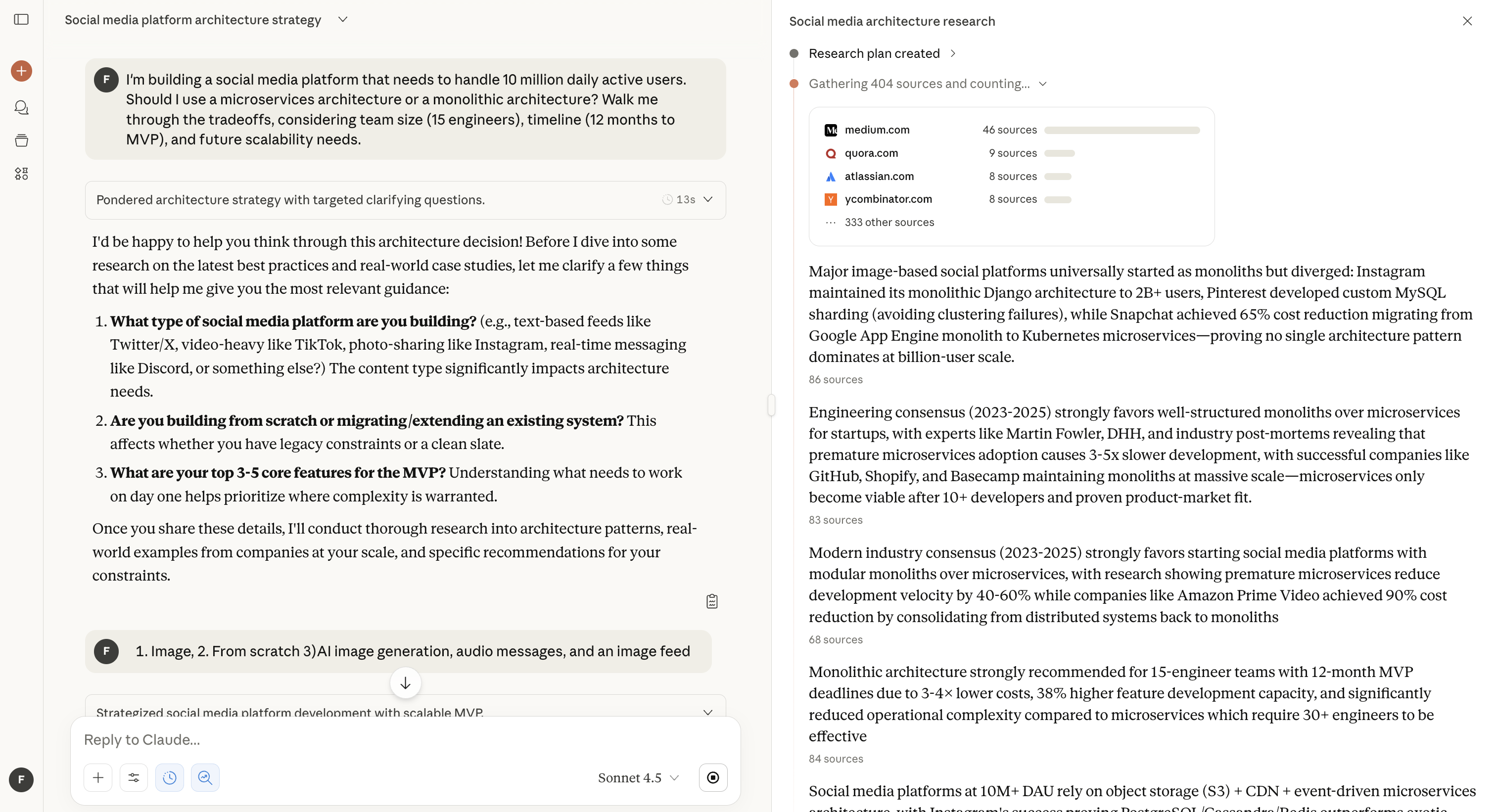

3. Research

Research is Claude’s agentic, citation-first workflow for investigations that draw from the web and your connected work context. It runs multi-step searches, explores alternative angles automatically, and returns a structured report with sources you can verify. Research also ties into Google Workspace, so Claude can search Gmail, Calendar, and Docs to ground findings in your own materials.

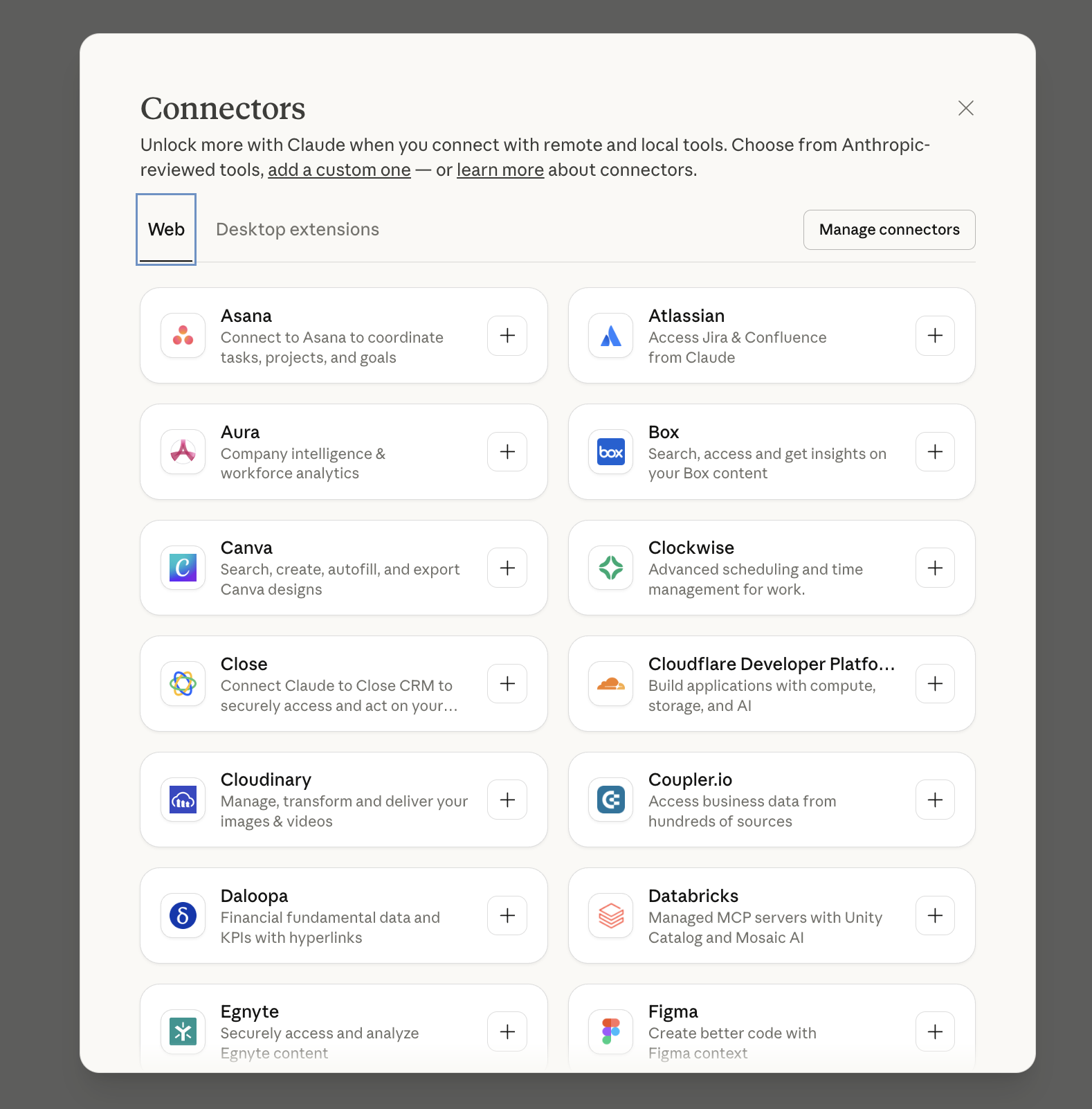

4. Connectors

The Connectors directory is a one-click catalog of tools Claude can use, including remote services like Notion, Canva, and Stripe as well as local desktop apps such as Figma, Socket, and Prisma. Connecting tools gives Claude direct access to the same context you have, so prompts can reference live project data instead of pasted summaries. The directory is available on web and desktop; remote app connectors require a paid plan.

5. Integrations and MCP

Anthropic’s Model Context Protocol (MCP) is an open standard for secure, two-way connections between AI assistants and data/tools. It provides a single protocol, SDKs and open-source servers.

Integrations extend this by letting Claude connect to remote MCP servers (not just local servers running on the desktop), so it can act across services like Jira, Confluence, Zapier, Intercom, Asana, Square, Sentry, PayPal, Linear, and Plaid, and fold those sources into Research with citations. The result is a more informed assistant that can both retrieve context and take actions across your stack.
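Under the hood, MCP messages use JSON-RPC 2.0. Here is a minimal, standard-library-only sketch of the two requests a client sends to discover and invoke a server’s tools (`tools/list` and `tools/call`). The tool name `search_issues` and its arguments are hypothetical, and a real client would first complete MCP’s `initialize` handshake and would normally use an official SDK to handle transport.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC request IDs must be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name; "search_issues" is a hypothetical tool for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_issues", "arguments": {"query": "login bug", "limit": 5}},
)

print(list_tools)
print(call_tool)
```

Because every server speaks the same protocol, the client code does not change when you swap a Jira server for a Sentry one; only the tool names and arguments do.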


6. Imagine

With Claude Sonnet 4.5, Anthropic released a short-lived research preview called “Imagine with Claude”: an interactive experience in which Claude generates software on the fly, without prewritten code, so you can watch it build and adapt in real time. Access was limited (for example, to Max subscribers for a few days), so it was a temporary experiment rather than a permanent feature.

💡Looking for ChatGPT alternatives? You have nine options to consider across features, price, and specialization.

7. Agents

With the Anthropic API, users can build and deploy autonomous or semi-autonomous agents powered by Claude. These agents plan multi-step workflows, integrate with other tools and systems, and maintain conversational context. They are suited to structured tasks like routing requests, coordinating across applications, or automating repetitive steps, while keeping a human in control.
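When you build agents on the Anthropic API, the model requests a tool by returning a `tool_use` content block. Your code runs the tool and replies with a `tool_result` block that references the same ID. The sketch below shows that dispatch step with a human-approval hook. The `route_request` tool, the `approve` callback, and the sample IDs are hypothetical, and a full agent would loop, sending the returned message back to the API until the model stops requesting tools.

```python
from typing import Callable, Dict, List


# Hypothetical local tool the agent may call; a real deployment might hit a ticketing system.
def route_request(department: str) -> str:
    return f"Request routed to {department}"


TOOLS: Dict[str, Callable[..., str]] = {"route_request": route_request}


def handle_tool_calls(
    content: List[dict],
    approve: Callable[[str, dict], bool] = lambda name, args: True,
) -> dict:
    """Run tool_use blocks from a model response and build the next user turn.

    `approve` is a hook that lets a human confirm each action before it runs.
    """
    results = []
    for block in content:
        if block.get("type") != "tool_use":
            continue  # text blocks need no action
        name, args = block["name"], block["input"]
        if name not in TOOLS:
            output, is_error = f"Unknown tool: {name}", True
        elif not approve(name, args):
            output, is_error = "Action declined by the user", True
        else:
            output, is_error = TOOLS[name](**args), False
        results.append(
            {"type": "tool_result", "tool_use_id": block["id"],
             "content": output, "is_error": is_error}
        )
    return {"role": "user", "content": results}


# Content blocks as they might appear in a model response (IDs are made up).
response_content = [
    {"type": "text", "text": "I'll route this to billing."},
    {"type": "tool_use", "id": "toolu_01", "name": "route_request",
     "input": {"department": "billing"}},
]
print(handle_tool_calls(response_content))
```

Keeping the approval check in your own code, rather than in the prompt, is what keeps a human in control of which actions actually run.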

Claude models

Claude is a family of LLMs with several model variants optimized for different needs.

- Claude Sonnet 4.5: The most recent model and Anthropic’s strongest for coding, released under AI Safety Level 3 (ASL-3) protections, with better performance in software development workflows, refactoring, and maintenance, plus improved agent capabilities.

- Claude Opus 4.1: The top-end model for complex reasoning, advanced coding, and combined vision and text tasks; supports a 200,000-token context window and up to 32,000 output tokens. Trained on data through March 2025.

- Claude Opus 4: Strong reasoning, multilingual, and vision capabilities, with a 200K-token context window and up to 32,000 output tokens. Trained on data through March 2025.

- Claude Sonnet 4: High performance with very good reasoning and efficiency, balancing capability against speed and cost; 200K-token context window (with a 1M-token beta for some use cases). Trained on data through March 2025.

- Claude Sonnet 3.7: An earlier model in the Sonnet line that supports many of Sonnet 4’s high-quality features, with an older snapshot date. It was trained on publicly available internet data through November 2024, and its reliable knowledge cut-off is the end of October 2024.

- Claude Haiku 3: A compact, fast, and accurate model for targeted tasks, with a 200K-token context window and up to 4,096 output tokens. Trained on data through August 2023.
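Context window and output limits both constrain a request. For Claude’s API, the prompt tokens plus the requested output must fit within the context window, and the output alone cannot exceed the model’s maximum. Below is a rough pre-flight check that uses the figures listed above. The dictionary keys are descriptive labels, not official API model IDs, and the four-characters-per-token ratio is only an approximation for English text, so use a provider’s token-counting tools for real budgeting.

```python
import math

# Limits as listed above; keys are descriptive labels, not official API model IDs.
MODEL_LIMITS = {
    "opus-4.1": {"context": 200_000, "max_output": 32_000},
    "opus-4": {"context": 200_000, "max_output": 32_000},
    "haiku-3": {"context": 200_000, "max_output": 4_096},
}

# Rough heuristic for English text; real tokenizers vary by model and language.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits(model: str, prompt: str, max_output: int) -> bool:
    """True if the estimated prompt plus requested output fits the model's limits."""
    limits = MODEL_LIMITS[model]
    if max_output > limits["max_output"]:
        return False
    return estimate_tokens(prompt) + max_output <= limits["context"]


report = "word " * 150_000  # 750,000 characters, roughly 187,500 tokens
print(fits("opus-4.1", report, 8_000))   # True: about 195,500 tokens in total
print(fits("opus-4.1", report, 20_000))  # False: about 207,500 tokens in total
```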

💡Build a tutorial generator with Claude 3.5 Sonnet and React, and see how to scaffold interactive guides programmatically.

Claude plans

Claude offers pricing tiers ranging from a free basic plan to an Enterprise plan with custom pricing for organizations that need advanced compliance and administration features.

| Plan | Cost | Description |
|---|---|---|
| Free | $0 / month | Basic access: chat on web, iOS, Android, and desktop; write and edit content; generate code; visualize data; analyze images and text; web search; desktop extensions. |
| Pro | $17 / month billed annually ($200 / year), or $20 / month billed monthly | Everything in Free, plus more usage; Claude Code directly in the terminal; unlimited Projects for organizing chats and documents; Research; Google Workspace connection (email, calendar, docs); connections to other everyday tools; extended thinking for more complex work; more model options. |
| Max | From $100 / person / month | Everything in Pro, with much higher usage per session; higher output limits; early or priority access to new features; priority during high traffic. Two tiers: about 5× more usage than Pro (lower tier) or about 20× more (upper tier). |
| Team | $25 / person / month billed annually, or $30 billed monthly; minimum 5 members. Premium seat with Claude Code: $150 / person / month. | Adds collaboration: central billing and organization-level administration; more usage; early access to collaboration features; Claude Code access (premium seat). |
| Enterprise | Custom pricing | All Team plan features, plus an expanded context window; single sign-on (SSO); domain capture; role-based permissions; SCIM; audit logs; Google Docs cataloging; compliance and monitoring (e.g., via the Compliance API); Claude Code access via premium seat. |

💡Learn how to integrate Claude Code with DigitalOcean’s MCP server to control infrastructure via natural language commands.

What is ChatGPT?

ChatGPT is a conversational AI assistant developed by OpenAI. It uses models such as GPT-5 and related variants to help users answer questions, brainstorm, write, code, research, analyze data, and complete creative tasks. ChatGPT was released in November 2022 and gained over 100 million users within its first two months. After launching on a GPT-3.5-based model, ChatGPT expanded to support images, voice, web browsing, custom GPTs, and enterprise-ready tools like connectors and agent mode. In August 2025, OpenAI released GPT-5 as the new default model for all users; it unifies OpenAI’s model capabilities into a single system that automatically switches between fast responses and extended reasoning depending on task complexity.

It is available via web, mobile, and desktop platforms, and supports multiple modes of interaction (text, voice, image) with integrations to external tools.

Highlights:

- Link tools like Google Drive, Notion, Microsoft Teams, and GitHub, so ChatGPT can access files, templates, and calendar or email data.

- Use “Agent” mode to have ChatGPT perform multi-step tasks on your behalf, such as researching, booking appointments, gathering data, or combining work across web tools.

- Use voice and images as inputs, upload files, analyze data, and create charts, so you can interact in whatever format suits your needs.

- Offers business features such as data privacy guarantees, admin controls, connectors, dedicated workspaces, and compliance with standards like GDPR and SOC 2.

💡Confused between Auto-GPT and ChatGPT? Understand how Auto-GPT tackles tasks autonomously across multiple steps, while ChatGPT stays conversational and user-guided, helping you decide which fits your workflow.

ChatGPT features

ChatGPT is valued for its agent capabilities, cited sources, broad integrations, and versatility across tasks.

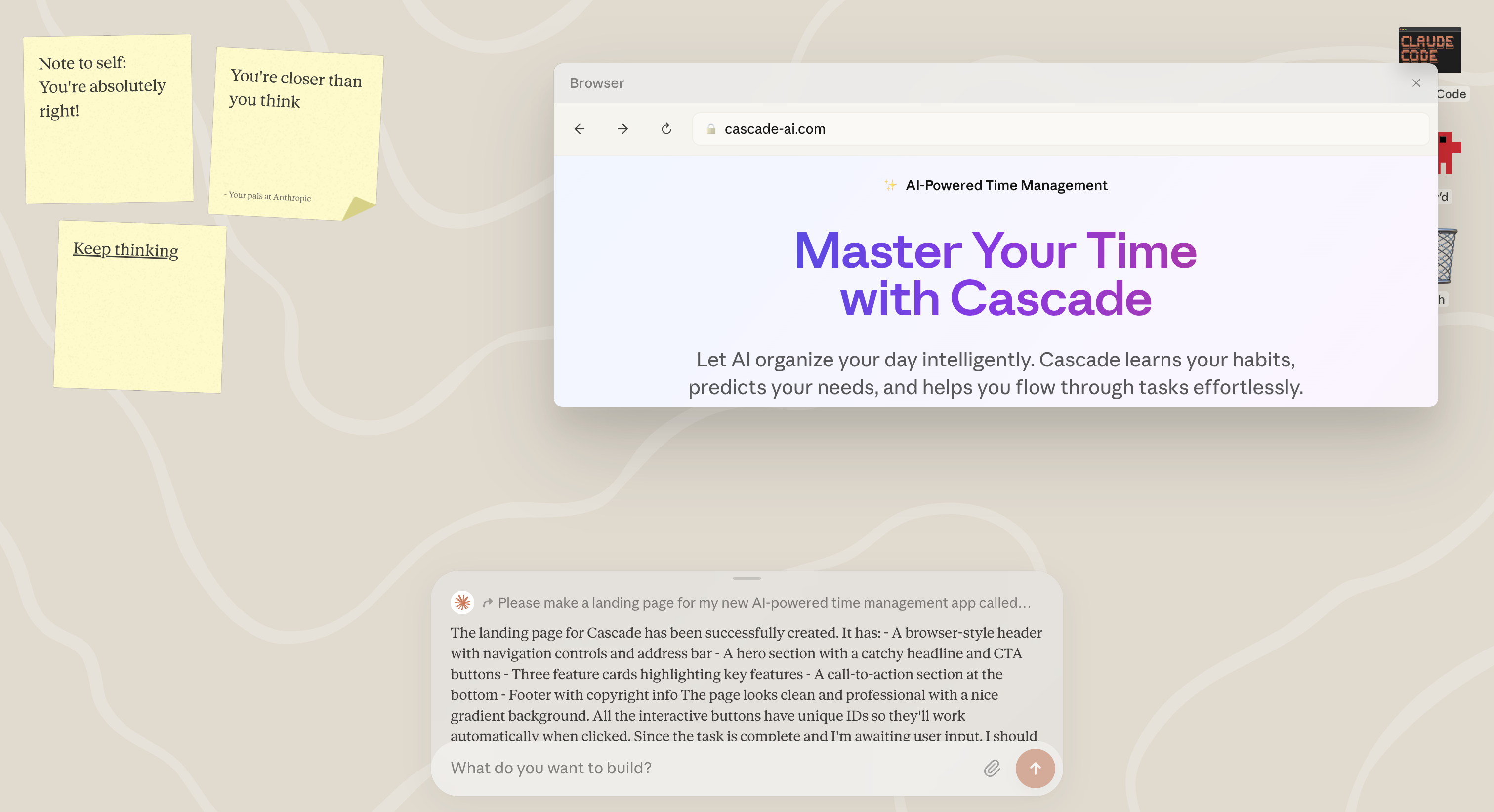

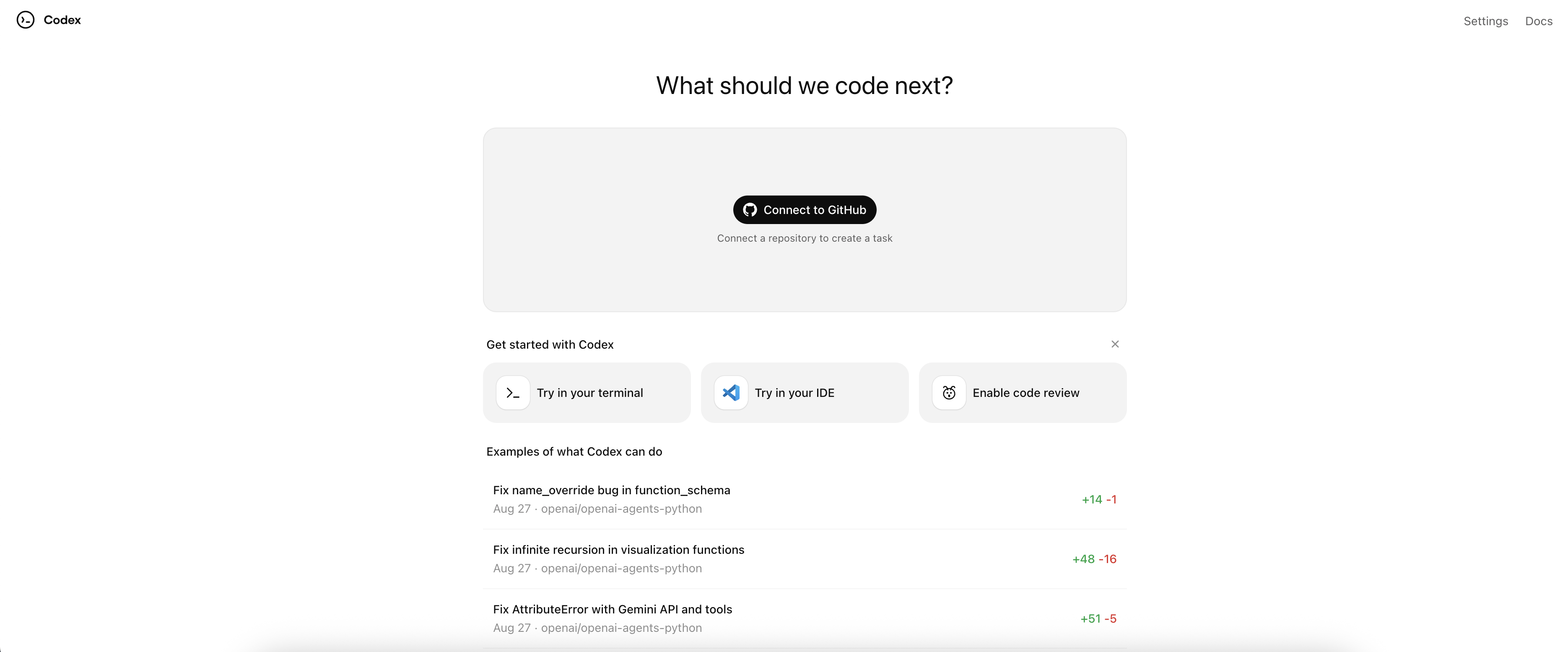

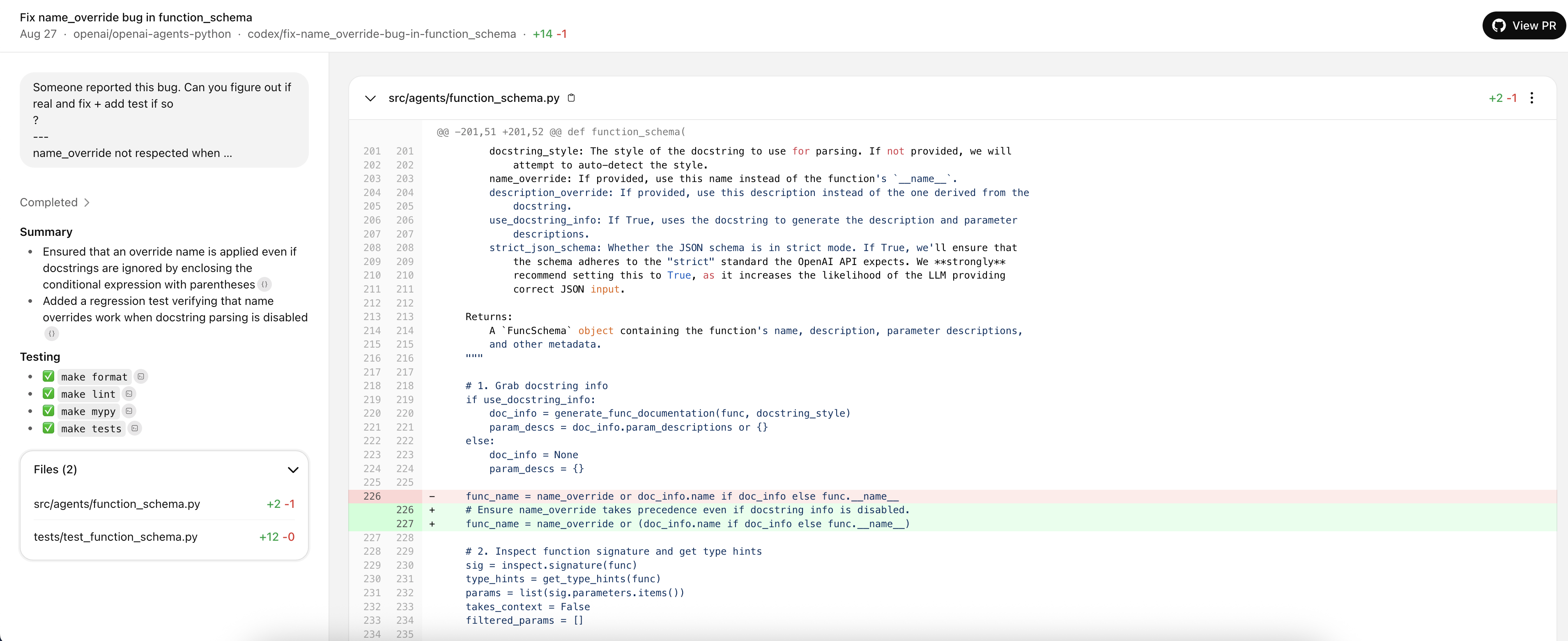

1. Codex

Codex is a cloud-based software engineering agent offered via ChatGPT. It lets users ask questions about their codebase, run code, and draft pull requests. It is aimed at developers who want help understanding, refactoring, or expanding existing code, especially in larger or more complex projects.

2. Sora

Sora is OpenAI’s video generation model. Its Explore feed lets you browse videos that other users have created, for inspiration or to remix. With Sora 2, users can create realistic videos from text, remix existing content, and add “cameos” (their own likeness), which adds a visual and social layer to generation.

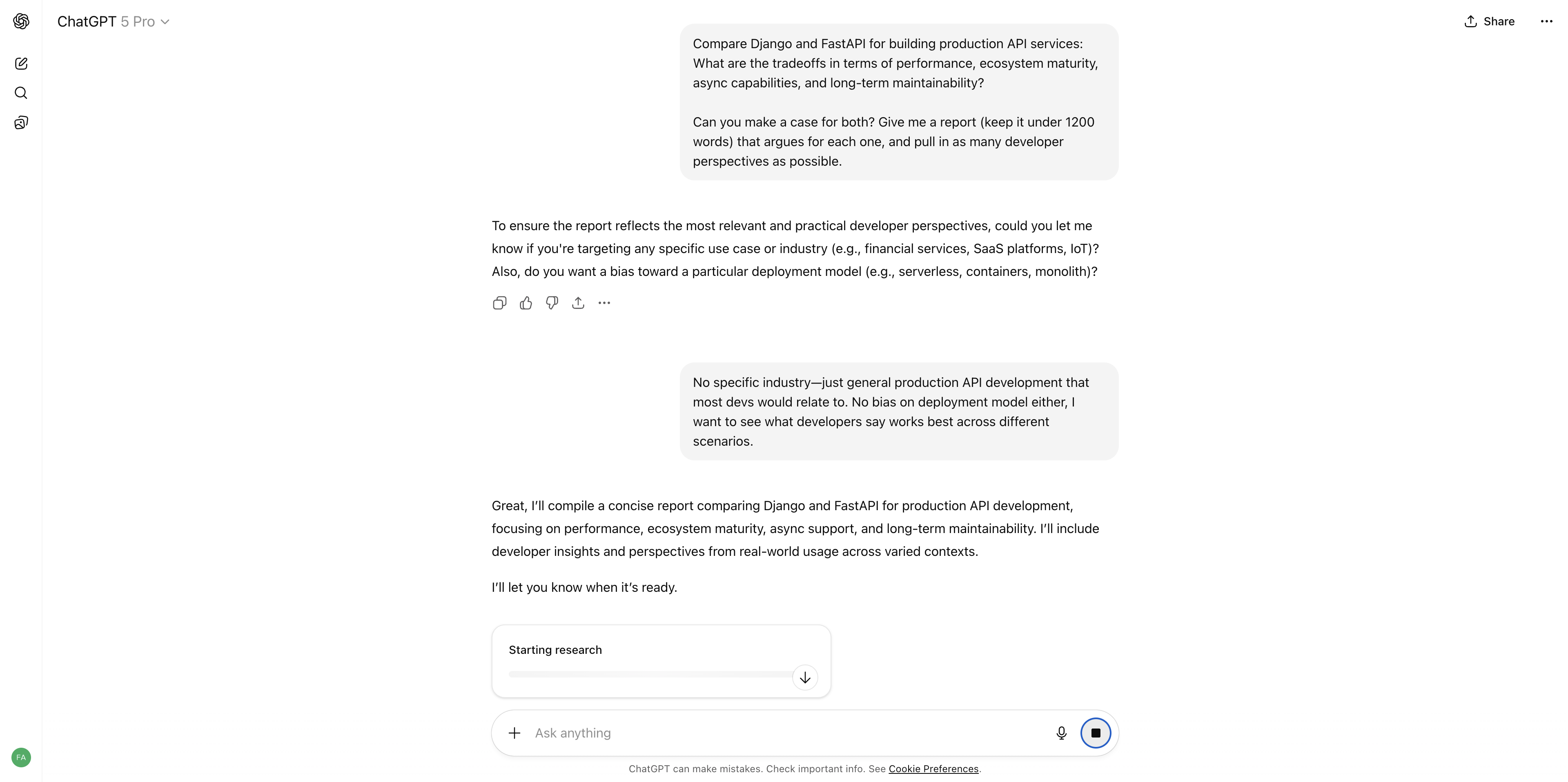

3. Deep research

Deep Research by ChatGPT conducts in-depth explorations on complex topics by gathering and comparing information from many external sources. It produces comprehensive, well-cited reports (e.g. with summaries, comparisons), useful for decisions, analysis, or topics which need broad coverage and precision.

4. Agent mode

With the Agent mode, the model responds to queries and takes actions on your behalf, like browsing websites, filling out forms, editing documents, or running code. It uses an internal virtual browser and tools to execute multi-step workflows while keeping the user in charge with permissions. The feature merges capabilities like Deep Research (for analytic depth) and Operator (for action execution) into a unified system.

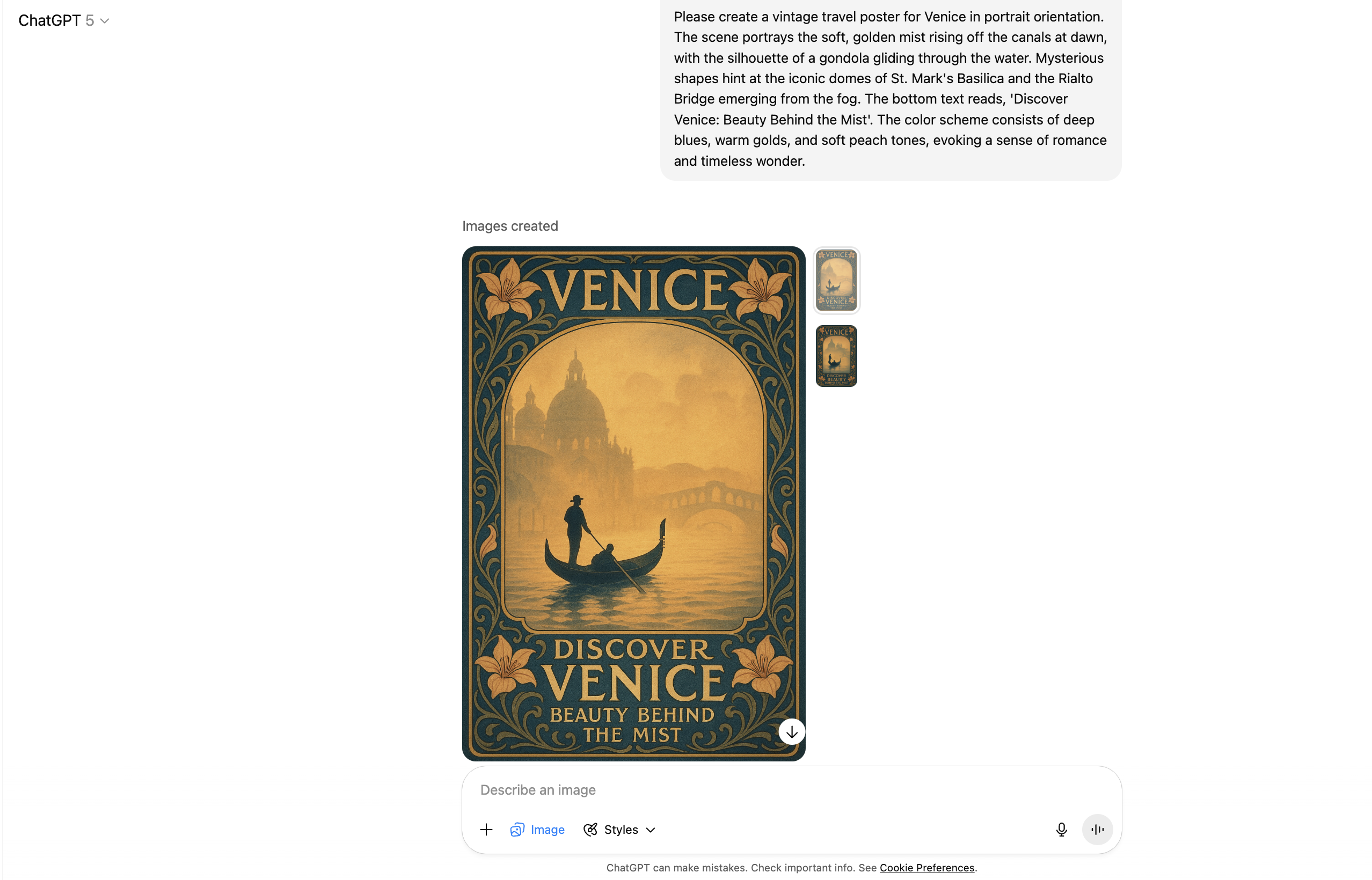

5. Image generation

Image generation lets users create images from scratch with text prompts or modify existing ones. In ChatGPT, image generation is now built natively into GPT-4o, which replaced DALL·E 3 as the default image model in March 2025. It is better at interpreting and following complex prompts, generates more accurate and detailed visuals, and supports editing selected parts of an image. You can specify styles, details, or moods for a polished, professional-quality result.

6. Study mode

Study mode is for people who want to learn, not just find answers. It gives step-by-step guidance, offers personalized explanations, quizzes users to check their understanding, and tracks progress.

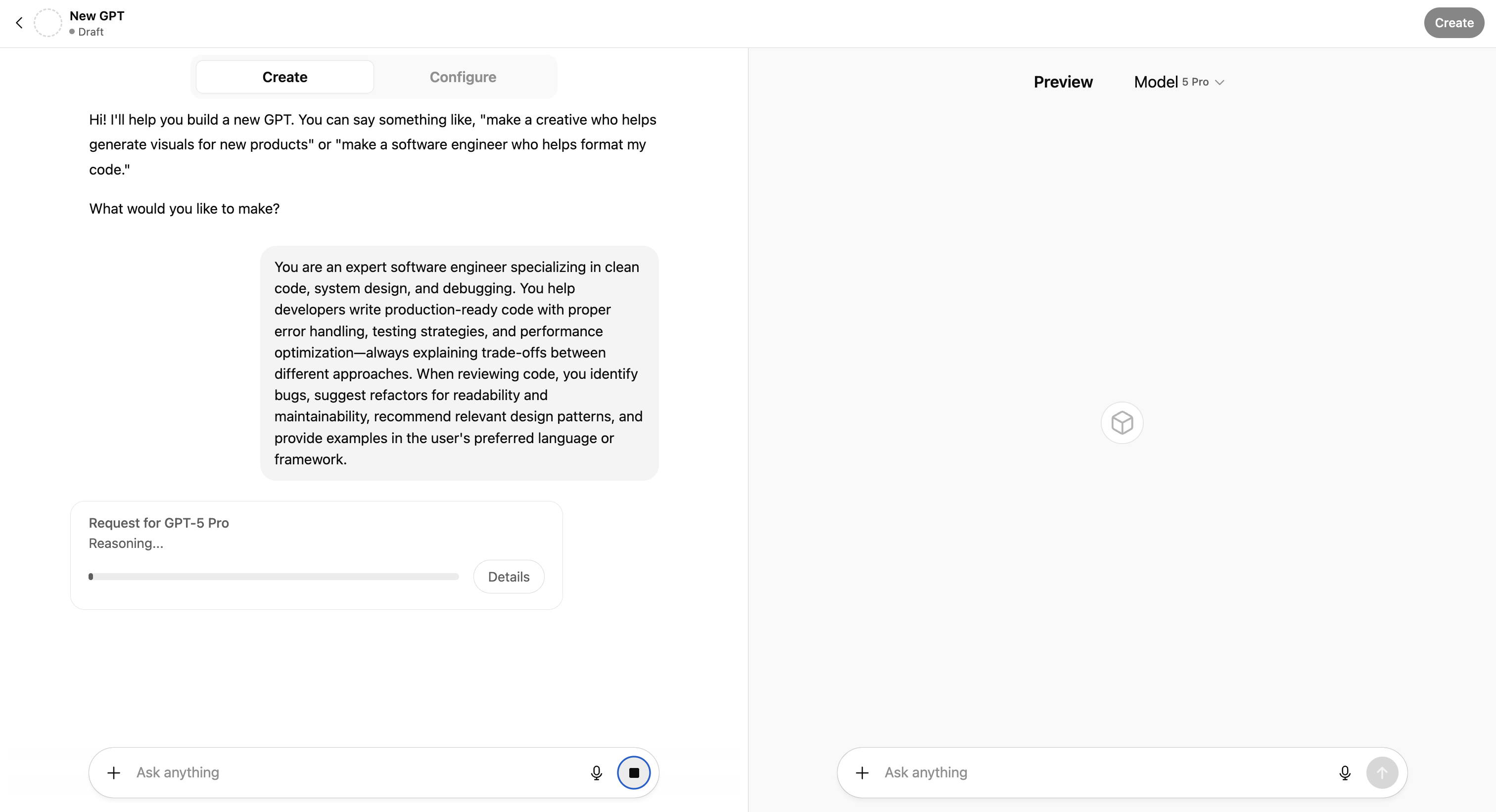

7. GPTs

The “Explore GPTs” feature lets users find, use, and create specialized GPT-based assistants. These are customized versions of GPT with specific capabilities, configurations, or domain focus, built either by OpenAI or by other users. They let you use ChatGPT as more than a general conversational AI by choosing assistants tailored to particular tasks or workflows.

💡Explore 15 custom GPTs built for ChatGPT showing how people are tailoring AI assistants to specific tasks, niches, and workflows.

ChatGPT models

OpenAI offers many models through its API, each with different trade-offs in capability, speed, cost, and context size. Users choose models based on their requirements, whether they need stronger reasoning, code generation, or multimodal capabilities.

- GPT-5: A unified system that combines a fast model and a deeper reasoning model, with a real-time router deciding which to use based on prompt complexity and context. OpenAI reports improved performance in writing, coding, health, and vision tasks, along with fewer hallucinations and a more refined style.

- Whisper: An automatic speech recognition (ASR) model that converts spoken audio into text. It is not a chat engine; use it when you need voice transcription.

- Embedding models: Models that map text (or other data) into vectors for similarity search, retrieval, and clustering. They are typically used in the processing stages of application pipelines, such as semantic search or retrieval-augmented generation.
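Once an embedding model has turned text into vectors, similarity search usually comes down to cosine similarity. Below is a minimal, dependency-free sketch. The three-dimensional vectors are made-up stand-ins for real embeddings, which typically have hundreds or thousands of dimensions and would come from an embedding model’s API.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def best_match(query: Sequence[float], documents: Dict[str, Sequence[float]]) -> str:
    """Return the name of the document whose embedding is most similar to the query."""
    return max(documents, key=lambda name: cosine_similarity(query, documents[name]))


# Toy "embeddings"; a real pipeline would get these from an embedding model.
docs = {
    "refund policy": [0.9, 0.1, 0.0],
    "GPU pricing": [0.1, 0.8, 0.3],
}
query = [0.85, 0.15, 0.05]
print(best_match(query, docs))  # refund policy
```

At scale, a vector database replaces the linear scan in `best_match`, but the similarity measure stays the same.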


ChatGPT plans

ChatGPT offers flexible plans, from a free tier for casual use to advanced Pro, Business, and Enterprise options with stronger models, collaboration tools, security controls, and larger context windows for complex work.

| Plan | Price | Description |
|---|---|---|
| Free | $0 / month | Access to GPT-5; real-time web search; limited file uploads, data analysis, image generation, and voice; code edits on macOS; create and use projects and custom GPTs. |
| Plus | $20 / month | Everything in Free, plus extended access to GPT-5; higher limits for messaging, file uploads, data analysis, and image generation; standard and advanced voice mode (including video and screen sharing); access to ChatGPT agent; limited Sora video generation; early access to previews and new features (such as the Codex agent). |
| Pro | $200 / month | Everything in Plus, with “unlimited” GPT-5 access; more compute (“GPT-5 Pro”) for harder queries; higher or unlimited voice, video, and screen-sharing usage; extended ChatGPT agent usage; expanded access to Sora video generation. |
| Business | $25 / user / month billed annually, or $30 / user / month billed monthly | Secure workspace; admin controls (SAML SSO, MFA); data excluded from training by default; compliance with GDPR and CCPA, plus certifications (SOC 2, ISO, etc.); connectors to internal apps (Drive, SharePoint, GitHub, etc.); features like deep research, custom workspace GPTs, tasks, and projects; includes Codex and agent functionality. |
| Enterprise | Contact sales | Everything in Business, plus larger context windows (longer inputs, big files); enterprise-grade security and controls (role-based access, etc.); data residency options; 24/7 priority support and SLAs; custom legal terms; built for scale with volume discounts. |

When to use Claude vs ChatGPT

Claude and ChatGPT each have strengths that cater to different needs. The best choice depends on your specific use case, whether that’s deep document analysis, workflow automation, or day-to-day productivity.

Coding

Many developers appreciate Claude Code for its deep awareness of large codebases, multi-file editing, and terminal integration; it can feel like a pair programmer embedded directly in your workflow. ChatGPT’s Codex and GPT-5 are also strong for coding and have a mature plugin and IDE ecosystem, but some developers find Claude better for coding projects. If you want tight local development integration and long context, Claude has an edge; if you want broader automation and existing tool support, ChatGPT is more flexible.

Cost

Both have a free tier for casual use. Claude Pro is slightly cheaper than ChatGPT Plus when billed annually ($17/month, or $200/year, versus $20/month); billed monthly, both cost $20. Claude’s Max plan starts at $100/month, while ChatGPT’s Pro tier costs $200/month. For someone watching costs but needing advanced features, Claude Pro offers better value. ChatGPT Pro is the more expensive option, built for heavy users who need the highest available performance: faster responses, more compute for complex coding or data tasks, and priority access to OpenAI’s newest capabilities.
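To compare plans on a yearly basis, the list prices from the two plan tables can be annualized with simple arithmetic. This is a sketch only: the prices are copied from this article and will change, and the five-seat team figures assume the minimum seat count and the annual-billing rate.

```python
# List prices in USD, copied from the plan tables in this article (subject to change).
CLAUDE_PRO_ANNUAL_PLAN = 200  # Claude Pro billed annually is a flat yearly price


def annual_cost(monthly_price: float, seats: int = 1) -> float:
    """Cost of paying a per-seat monthly price for a full year."""
    return monthly_price * seats * 12


yearly = {
    "Claude Pro (billed annually)": CLAUDE_PRO_ANNUAL_PLAN,
    "Claude Pro (billed monthly)": annual_cost(20),
    "ChatGPT Plus": annual_cost(20),
    "Claude Max (lower tier)": annual_cost(100),
    "ChatGPT Pro": annual_cost(200),
    "Claude Team, 5 seats (annual rate)": annual_cost(25, seats=5),
    "ChatGPT Business, 5 seats (annual rate)": annual_cost(25, seats=5),
}

# Print plans from cheapest to most expensive per year.
for plan, cost in sorted(yearly.items(), key=lambda kv: kv[1]):
    print(f"{plan:<42} ${cost:>8,.2f}")
```

At these list prices, Claude Pro billed annually saves $40 a year compared with either $20 monthly plan, and the five-seat team tiers come out identical at $1,500 a year.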

Enterprise

Both offer enterprise-grade security (SOC 2, GDPR, SSO, admin controls), but the focus differs.

- Claude Enterprise emphasizes data control, compliance APIs, and very large context windows for document-heavy teams.

- ChatGPT Business/Enterprise integrates deeply with apps (Drive, SharePoint, GitHub) and offers workspace admin tools and agent automation at scale.

Pricing models are similar (both around $25–30/user/month for business tiers), but ChatGPT adds a high-compute Pro plan ($200/month) while Claude has Max tiers for heavier individual workloads.

Research

ChatGPT’s Deep Research is currently more polished. It can browse the web, pull from connected apps, and produce well-cited, structured reports. Claude’s research is capable (and benefits from long context), but many users find ChatGPT better at surfacing diverse sources and formatting results. If deep, citation-rich analysis matters, ChatGPT tends to lead right now.

Agent mode

ChatGPT’s Agent mode has more mature automation tools, connectors, and multi-step execution, making it great for integrating with everyday work apps and automating workflows. Claude’s agents are strong for structured, multi-step technical tasks (especially when working with code or complex docs), but its ecosystem is newer. For business workflows or cross-tool automation, ChatGPT’s agent is ahead; for developer-centric or document-driven tasks, Claude’s agent is promising.


Claude vs ChatGPT FAQ

What is the difference between Claude and ChatGPT?

Claude (by Anthropic) and ChatGPT (by OpenAI) are both LLM AI assistants, but they differ in design and focus. Claude emphasizes long context handling (up to 200K tokens and a 1M-token beta), safety-aligned reasoning through Constitutional AI, and features like Artifacts and deep file/document support. ChatGPT offers broader tool integrations (connectors to apps and data), Agent Mode for task automation, and a wider range of model and pricing tiers, including Deep Research and multimodal (voice, image, video) features.

Which is better in 2025: Claude or ChatGPT?

Neither is universally “better.” Claude excels at long document analysis, natural writing, structured outputs, and safety-focused reasoning, while ChatGPT is stronger for workflow automation, integrations, and multimodal interactions. The right choice depends on your use case.

Which AI is safer: Claude or ChatGPT?

Both prioritize safety but approach it differently. Claude uses Constitutional AI to guide responses toward safe, ethical output and reduce harmful content, while OpenAI uses RLHF, system prompts, and layered safety filters.

Can Claude and ChatGPT be used for enterprise applications?

Yes. Both provide enterprise-grade security, compliance (e.g., SOC 2, GDPR), single sign-on (SSO), and admin controls. ChatGPT Enterprise plans offer app connectors and workspace management, while Claude Enterprise focuses on data control, compliance APIs, and large context windows for document-heavy workflows.

Does Claude outperform ChatGPT in creative writing?

Claude is often preferred for natural, nuanced, and stylistically rich writing, making it a popular choice for creative storytelling and long-form essays. ChatGPT also produces strong creative content and is preferred when you need to combine creativity with structured workflows or tool use. In the end, it comes down to the tone and style you prefer.

Accelerate your AI projects with DigitalOcean Gradient™ AI Droplets

Unlock the power of GPUs for your AI and machine learning projects. DigitalOcean GPU Droplets offer on-demand access to high-performance computing resources, enabling developers, startups, and innovators to train models, process large datasets, and scale AI projects without complexity or upfront investments.

Key features:

- Flexible configurations from single-GPU to 8-GPU setups

- Pre-installed Python and Deep Learning software packages

- High-performance local boot and scratch disks included

Sign up today and unlock the possibilities of GPU Droplets. For custom solutions, larger GPU allocations, or reserved instances, contact our sales team to learn how DigitalOcean can power your most demanding AI/ML workloads.

About the author

Sujatha R is a Technical Writer at DigitalOcean. She has more than 10 years of experience creating clear and engaging technical documentation, specializing in cloud computing, artificial intelligence, and machine learning. ✍️ She combines her technical expertise with a passion for technology to help developers and tech enthusiasts make sense of the cloud’s complexity.
