Technical Evangelist // AI Arcanist

In this tutorial, we walk step by step through the process of creating 3D assets from 2D images using Hunyuan3D 2.1 on a DigitalOcean GPU Droplet. Readers can expect to leave with a full understanding of how to create these assets and use them in 3D projects.

We have extensively covered the capabilities of image and video generation on this blog, including models like Flux, HiDream, Wan, and many more. In our opinion, one of the most incredible promises of AI is taking pure ideas and making them reality. More than any other kind of generative AI, image and video modeling comes closest to that promise for the average user.

But two-dimensional images and videos are just that: 2D. Bringing these concepts into our three-dimensional world requires further steps and processing. Until recently, this meant people hand-crafting these assets, and creating 3D assets by hand is undoubtedly a time-consuming process. Like any discipline, it takes careful patience to get right. But now, creators have a new avenue to take.

Image-to-3D modeling is one of the pioneering new sub-disciplines of computer vision, and it is taking off quickly. Already, we have models capable of generating 3D assets with overlays for coloration, and these workflows are proving to be incredible time savers for 3D asset creation.

In this tutorial, we introduce our pipeline for creating 3D assets using a DigitalOcean Gradient GPU Droplet. Readers get a full breakdown of our process: generating images with Flux, optionally removing backgrounds, and transforming the results into 3D assets.

Key Takeaways

- Creating 3D assets from simple images is easy with Hunyuan3D 2.1. First, generate images with your favorite image generator, then submit them to the web application.

- Hunyuan3D 2.1 can generate 3D meshes from images and export them in several formats, including .glb and .ply.

- Gradient GPU Droplets with at least 40 GB of VRAM can run this pipeline.

Hunyuan3D 2.1 Model Breakdown

Until recently, there was no open-source foundation model for image-to-3D modeling. Researchers at Tencent noticed this gap and set out to close it. To that end, they released the first Hunyuan3D and Hunyuan3D 2.0 models this year. These models immediately made waves across the industry, with engineers at all levels rushing to take advantage of the new technology.

Seeking to take their achievements even further, they have recently introduced Hunyuan3D 2.1. Hunyuan3D 2.1 is “a comprehensive 3D asset creation system to generate a textured mesh from a single image input” (Source). It is built mainly from two fully public foundation models: Hunyuan3D-DiT, “a shape-generation model combining a flow-based diffusion architecture with a high-fidelity mesh autoencoder (Hunyuan3D-ShapeVAE), and Hunyuan3D-Paint: a mesh-conditioned multi-view diffusion model for PBR material generation, producing high-quality, multi-channel-aligned, and view-consistent textures” (Source).

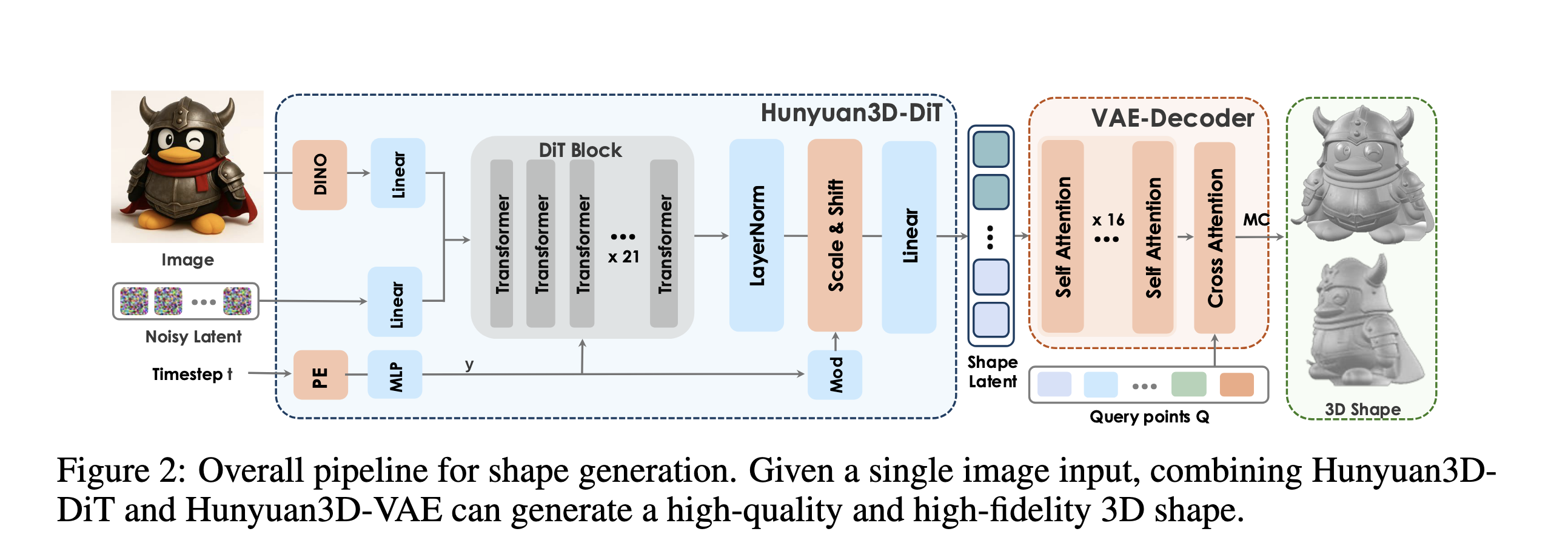

To simplify: for shape generation, the authors use Hunyuan3D-ShapeVAE and Hunyuan3D-DiT together to produce high-quality, high-fidelity shapes. Specifically, Hunyuan3D-ShapeVAE employs mesh surface importance sampling to enhance sharp edges and variational token length to improve intricate geometric details. Hunyuan3D-DiT builds on recent flow matching models to construct a scalable and flexible diffusion model.

Above, we can see how the pipeline fits together. We start with a single input image of the subject. First, Hunyuan3D-DiT takes the image and generates a high-quality shape representation of the object. Next, Hunyuan3D-ShapeVAE, which relies on mesh surface importance sampling to preserve edges and fine geometric detail, turns that representation into the output 3D shape.

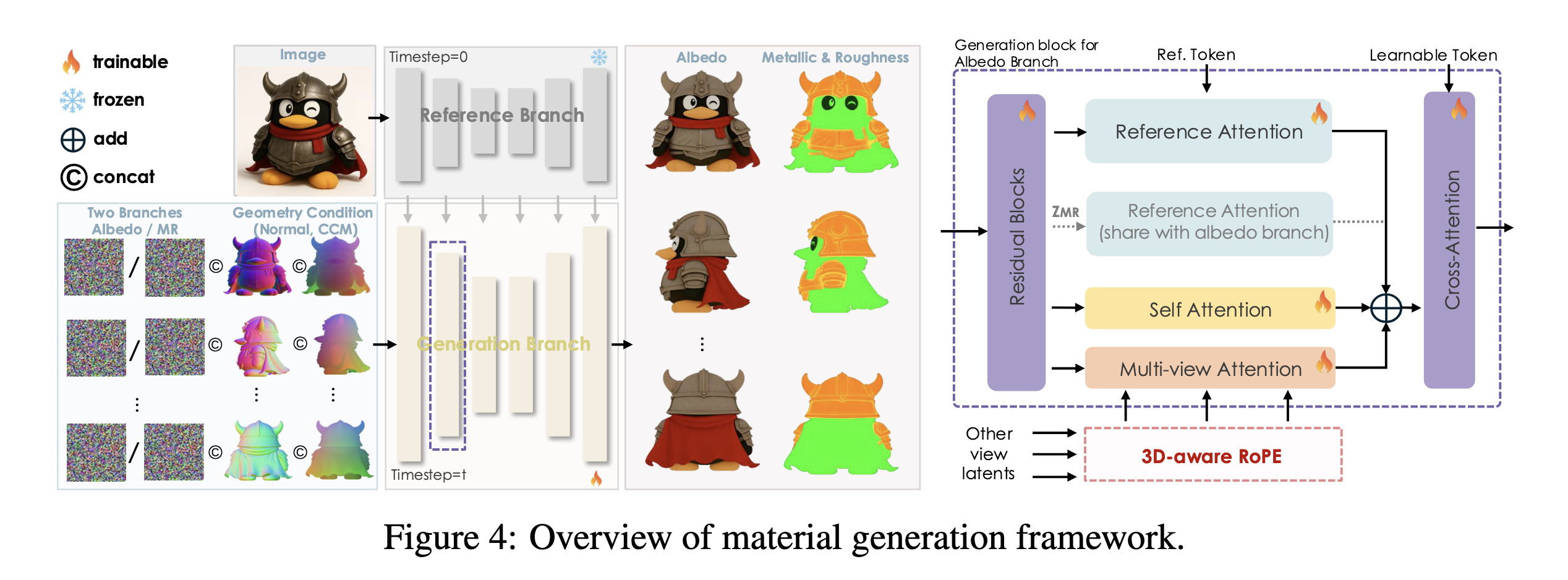

For texture synthesis, Hunyuan3D-Paint introduces a multi-view PBR diffusion model that generates albedo, metallic, and roughness maps for meshes. Notably, Hunyuan3D-Paint incorporates a spatially aligned multi-attention module to align the albedo and metallic-roughness (MR) maps, 3D-aware RoPE to enhance cross-view consistency, and an illumination-invariant training strategy to produce light-free albedo maps that are robust to varying lighting conditions. Hunyuan3D 2.1 separates shape and texture generation into distinct stages, a strategy that has proven effective relative to previous large reconstruction models. This modularity allows users to generate untextured meshes only or to apply textures to custom assets, enhancing flexibility for industrial applications (Source).

Creating 3D assets from images with Hunyuan3D 2.1

Setting up the GPU Droplet

To get started with the pipeline, we need a GPU-powered machine with sufficient VRAM to run both the 3D modeling and painting stages. As such, we recommend an NVIDIA GPU Droplet on DigitalOcean Gradient with at least 40 GB of VRAM, such as the NVIDIA L40, A6000, H100, or H200 GPUs. To get started with your GPU Droplet and environment, we suggest following the setup instructions shown in this tutorial.
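Before installing anything, it can help to confirm that the Droplet's GPU actually meets the 40 GB recommendation. The following is a minimal sketch and not part of the Hunyuan3D repository: it assumes `nvidia-smi` is on the PATH, and the function names and the deliberately loose 40,000 MiB floor are our own illustrative choices.

```python
from __future__ import annotations

import subprocess


def parse_vram_mib(nvidia_smi_output: str) -> list[int]:
    """Parse one memory.total value (in MiB) per GPU from nvidia-smi CSV output."""
    return [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]


def query_vram_mib() -> list[int]:
    """Ask nvidia-smi for the total memory of each visible GPU, in MiB."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_vram_mib(result.stdout)


def meets_vram_floor(vram_mib: list[int], min_gb: float = 40.0) -> bool:
    """Return True if at least one GPU reports min_gb or more of memory.

    Assumption: "40 GB" is treated as 40 * 1000 MiB, a loose floor chosen
    because drivers report somewhat less than the marketed capacity.
    """
    return any(mib >= min_gb * 1000 for mib in vram_mib)
```

On the Droplet, calling `meets_vram_floor(query_vram_mib())` should return `True` for the GPUs recommended above. If it returns `False`, expect the texture stage in particular to run out of memory.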

Creating the GPU Droplet Environment for Hunyuan3D 2.1

Installing the libraries required to run Hunyuan3D 2.1 takes just a few moments. To get started, we will clone the repository, install the packages (ideally inside a fresh virtual environment), build the two compiled extensions, and finally download the upscale model. To run the installation and downloads, paste the following into the command line of your remote machine.

git clone https://github.com/Tencent-Hunyuan/Hunyuan3D-2.1

cd Hunyuan3D-2.1

vim requirements.txt # comment out the lines for numpy, pymeshlab, ninja, open3d, onnxruntime, and bpy

pip install -r requirements.txt

pip install pymeshlab open3d onnxruntime ninja numpy

pip install fake-bpy-module-2.80

cd hy3dpaint/custom_rasterizer

python3 setup.py install

cd ../..

cd hy3dpaint/DifferentiableRenderer

bash compile_mesh_painter.sh

cd ../..

wget https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth -P hy3dpaint/ckpt
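The manual `requirements.txt` edit in the steps above can also be scripted, which is handy when rebuilding the environment repeatedly. This is a minimal sketch under our own assumptions: the helper names are hypothetical, and a requirement's package name is taken to be the leading run of letters, digits, dots, hyphens, and underscores on its line.

```python
import re
from pathlib import Path

# Package names taken from the manual editing step in this tutorial.
PACKAGES_TO_SKIP = ["numpy", "pymeshlab", "ninja", "open3d", "onnxruntime", "bpy"]


def comment_out_packages(requirements_text, packages=PACKAGES_TO_SKIP):
    """Return requirements_text with the lines for the given packages commented out."""
    wanted = {name.lower() for name in packages}
    out = []
    for line in requirements_text.splitlines():
        # The package name is whatever precedes the first version specifier,
        # extras bracket, or whitespace. Comment lines never match.
        match = re.match(r"\s*([A-Za-z0-9_.\-]+)", line)
        name = match.group(1).lower() if match else ""
        out.append("# " + line if name in wanted else line)
    return "\n".join(out) + ("\n" if out else "")


def rewrite_requirements(path="requirements.txt"):
    """Apply comment_out_packages to a requirements file in place."""
    target = Path(path)
    target.write_text(comment_out_packages(target.read_text()))
```

Running `rewrite_requirements()` from inside the cloned repository would stand in for the `vim` step. Because already-commented lines are left untouched, running it a second time changes nothing.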

Once that is completed, we can begin making assets.
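Since a failed download or a skipped step only surfaces later as an error inside the web app, a quick file check can save a restart. The sketch below is our own illustration rather than anything shipped with the repository. It assumes the repository's lowercase `hy3dpaint` directory name and only looks for files this tutorial itself touches.

```python
from pathlib import Path

# Files referenced by the setup and launch commands in this tutorial.
EXPECTED_FILES = [
    "requirements.txt",
    "gradio_app.py",
    "hy3dpaint/ckpt/RealESRGAN_x4plus.pth",
]


def missing_files(repo_root, expected=EXPECTED_FILES):
    """Return the expected paths (relative to repo_root) that are not regular files."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).is_file()]
```

Calling `missing_files(".")` from the repository root returns an empty list when everything is in place. Any path it does return points at the step to rerun.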

Running Hunyuan3D 2.1 on a DigitalOcean GPU Droplet to create 3D assets

To get started with making 3D assets, we first need to launch the Gradio application provided by the authors. Since we have already set up our environment, we just need to paste in the launch command for the app.

python3 gradio_app.py \

--model_path tencent/Hunyuan3D-2.1 \

--subfolder hunyuan3d-dit-v2-1 \

--texgen_model_path tencent/Hunyuan3D-2.1 \

--low_vram_mode

Then take the link printed in the output and use the Simple Browser feature of VS Code or Cursor to open it from your local machine.
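The link is only useful once the app has finished starting, which can take a while on a first launch. One way to avoid refreshing a blank page is to poll the port until it accepts connections. This is a minimal sketch with a hypothetical helper name. The host and port are whatever appears in the link your own launch prints, so any value passed in is a placeholder.

```python
import socket
import time


def wait_for_port(host, port, timeout=300.0, interval=2.0):
    """Poll until a TCP connection to host:port succeeds or timeout seconds elapse.

    Returns True as soon as a connection is accepted, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("127.0.0.1", port)` with the port number copied from the printed link blocks until the app is reachable, or gives up after five minutes.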

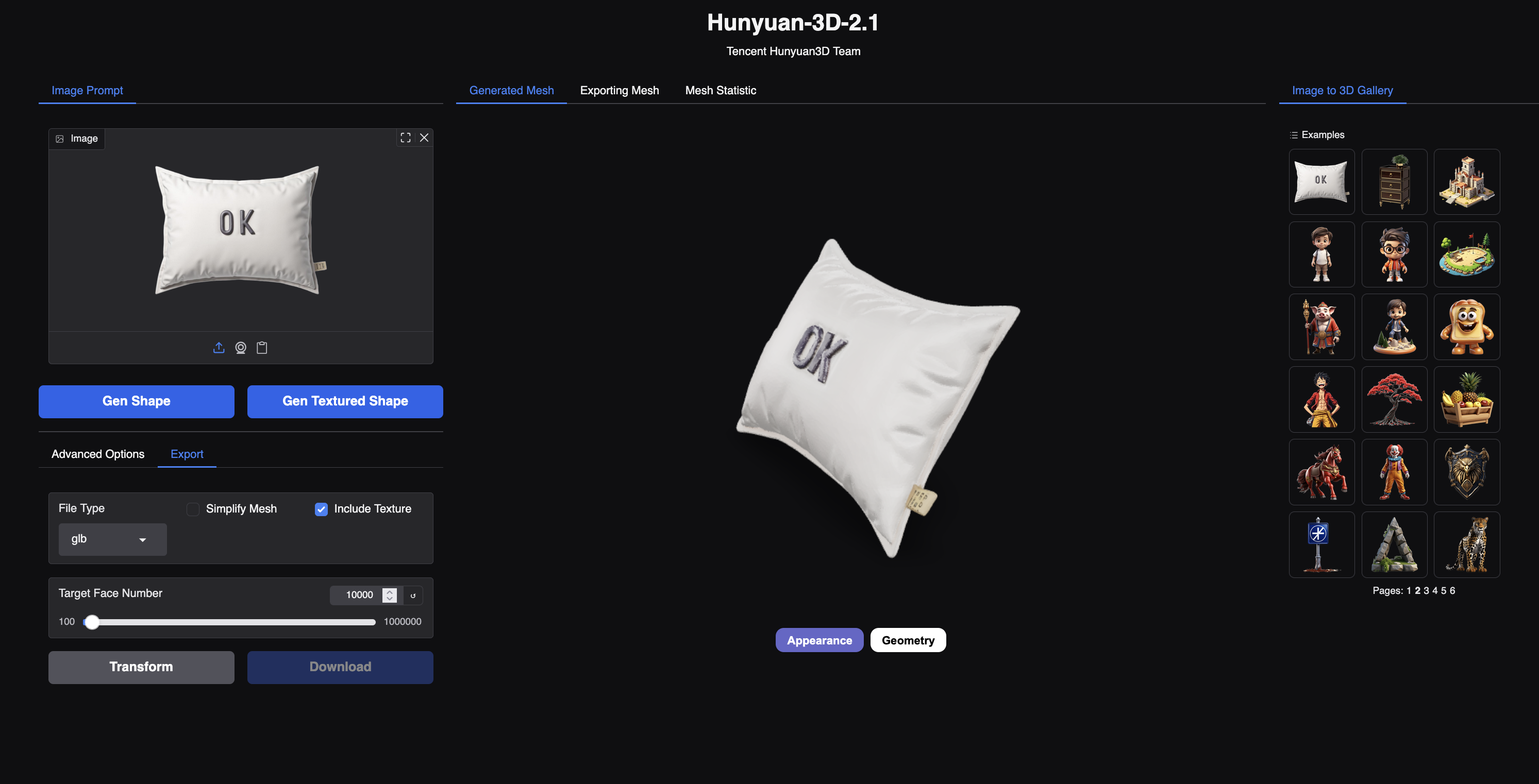

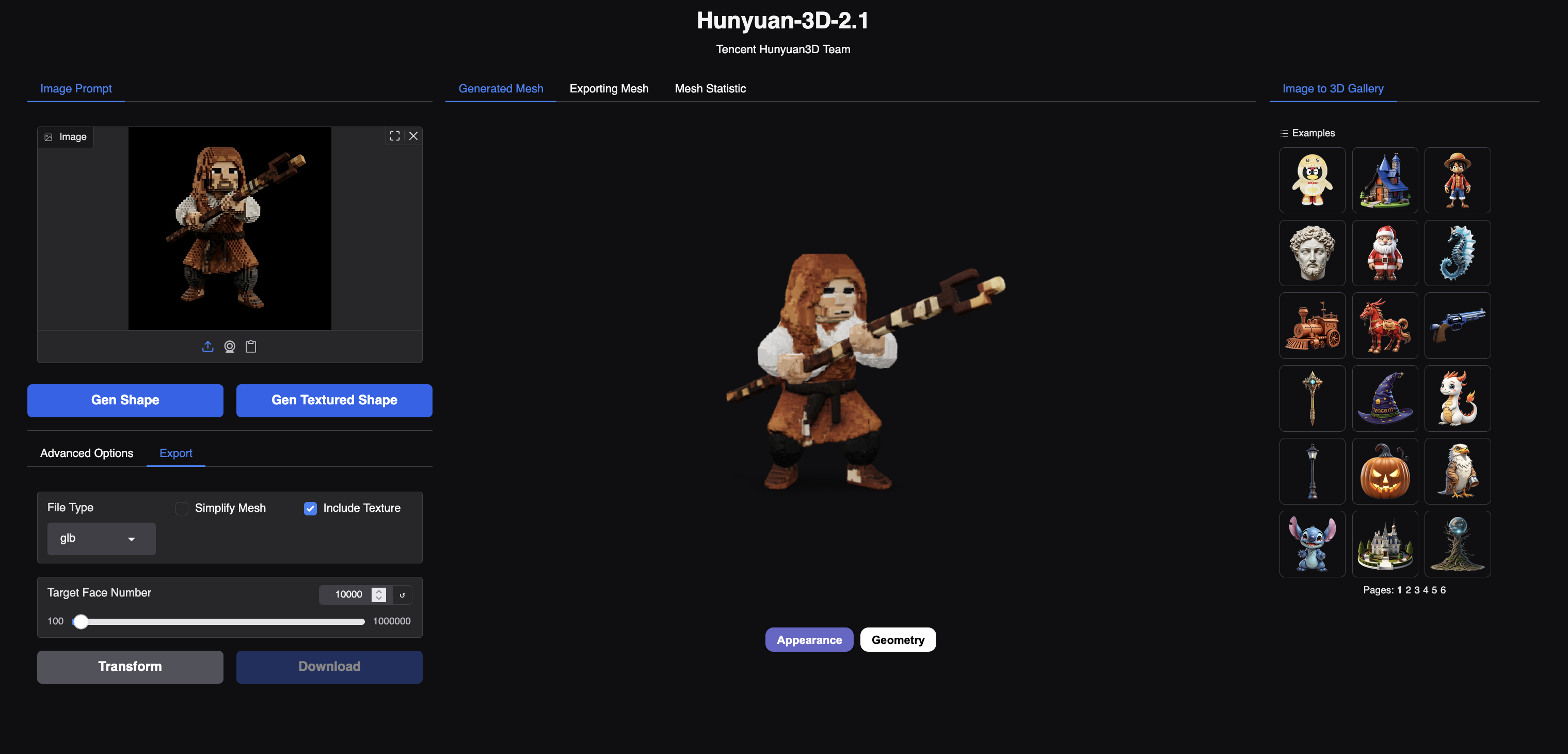

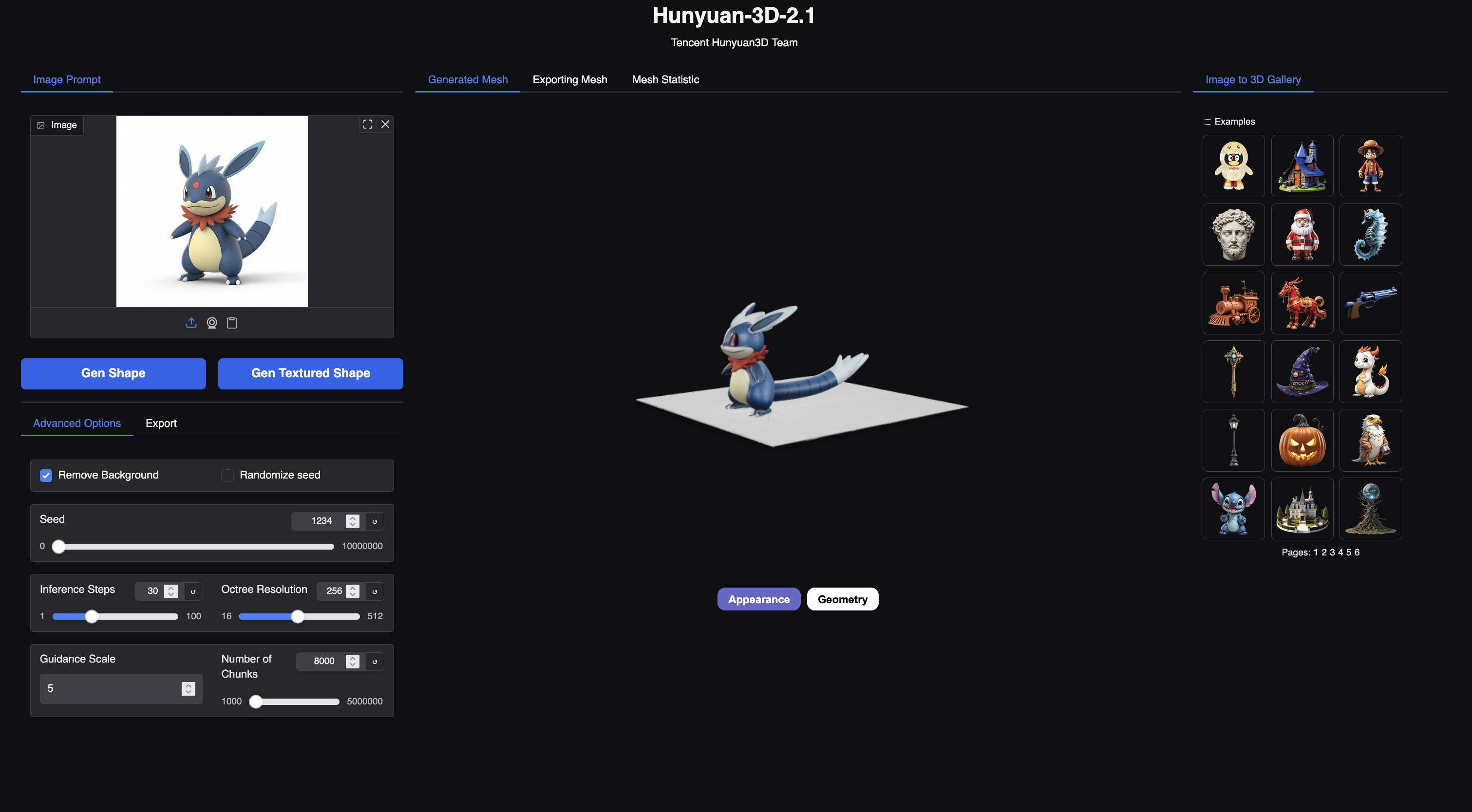

From here, we are left with the web GUI shown above. Upload an image of your choice, or select one from the gallery on the right, and adjust any of the advanced options as needed. We recommend unchecking the randomize seed option for controllability and increasing the number of inference steps for a clearer output.

For image selection, we recommend clear images of 3D-style figures with no background. Blank, black backgrounds worked best in our experimentation. We generated our examples with Imagen 4 and Flux.1 [dev], using prompts with tags like “blank black background, 3D style”. If you submit a high-quality image, the pipeline will produce an accurate 3D representation of its shape and texture. We can then transform and download the asset in the format of our choice, including glb, ply, stl, and obj.
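After downloading an asset, a quick look at its size and extent can confirm the export worked before opening it in a 3D tool. The sketch below reads the plain-text `.obj` format using only the standard library. The function name is our own, and it handles only the `v` (vertex) and `f` (face) records of the Wavefront OBJ format, ignoring everything else.

```python
def summarize_obj(obj_text):
    """Count vertices and faces and compute the bounding box of Wavefront OBJ text."""
    vertices = []
    face_count = 0
    for line in obj_text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            # Geometric vertex: "v x y z [w]"; only x, y, z are used here.
            vertices.append(tuple(float(value) for value in parts[1:4]))
        elif parts[0] == "f":
            face_count += 1
    if vertices:
        bbox_min = tuple(min(v[i] for v in vertices) for i in range(3))
        bbox_max = tuple(max(v[i] for v in vertices) for i in range(3))
    else:
        bbox_min = bbox_max = None
    return {
        "vertices": len(vertices),
        "faces": face_count,
        "bbox_min": bbox_min,
        "bbox_max": bbox_max,
    }
```

Reading a file is then a matter of `summarize_obj(open("asset.obj").read())`, where `asset.obj` is a placeholder name. A bounding box that is much longer along one axis than the input image suggests can hint at stretched geometry worth inspecting.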

The limitations of this process are twofold: the accuracy of the representation models and the inability to model 2D objects. The assets we produced were excellent in terms of frontal capture, but they often had issues with the sides that were not visible in the original input. For example, we can see the extremely extended tail and ground platform that were captured in the example shown above. As for 2D-style images, we found the model needs rounded features and edges to accurately project the image into 3D, and these features are inherently missing from 2D drawings or animations. We recommend sticking to “3D style” images, such as renders or photographs, for Hunyuan3D 2.1.

Closing Thoughts

Altogether, Hunyuan3D 2.1 is the most powerful AI tool for creating 3D assets on the fly that we have seen. With it, we can create hundreds of 3D assets in a matter of hours, a process that would have taken days without it. We recommend getting started by generating your own 2D images with the help of these tutorials on Flux and Wan.

Beyond single assets, projects like HunyuanWorld Mirror make it possible to step into and navigate 3D worlds built from just images. We look forward to seeing how this technology continues to advance.
