By Adrien Payong and Shaoni Mukherjee

Introduction

Artificial intelligence has evolved from rule-based automation to the development of adaptive and flexible systems that can learn, reason, and engage in conversation. While impressive, today’s most advanced language models and intelligent agents lack one key ingredient of human intelligence: the ability to remember specific personal experiences. That’s where episodic memory for AI comes in. It’s an emerging field working to establish a new generation of context-aware and continuously learning agents.

Humans use their memories of past experiences to plan future actions. Episodic AI agents should be able to do the same by storing, retrieving, and learning from their unique experiences. In this article, we’ll explore what episodic memory means for AI agents, how it differs from other types of memory, why it’s so important, how it works, and some of the challenges ahead.

Key Takeaways

- Episodic memory enables artificial agents to remember and retrieve specific events from their past experiences, making them more context-aware and better able to learn from their own history. It provides detailed contextual information, while semantic memory maintains general facts.

- Episodic memory enables cumulative learning, better decision-making, improved personalization, and increased efficiency.

- It is often implemented in AI systems using dedicated memory modules, vector databases, and retrieval algorithms, and can be structured as a time-indexed log, key-value pairs, or graphs.

- Episodic memory raises new challenges related to accuracy, scalability, privacy, and system complexity that must be addressed to ensure safe and effective AI systems.

What Is Episodic Memory in AI?

Episodic memory is an agent’s ability to store and recall specific events or experiences it has encountered during its operation. It works much like an internal “diary” made up of discrete events that the agent has personally experienced.

For example, if a user tells a virtual assistant to cancel their subscription because its price increased, an episodic memory records that event: what the user asked the agent to do (cancel the subscription) and why (the price increase). The agent can then recall it later. Episodic memories are event-based and contextual (tied to when and why something happened).

Types of Memory in AI Agents

To see where episodic memory fits in the cognitive architecture of artificial agents, let’s compare major memory types:

| Memory Type | What It Stores | Example Usage in AI |
|---|---|---|
| Short-Term (Working) | Immediate context and recent information. | The last few user queries in a chatbot’s conversation (within the context window of the model). |
| Episodic Memory | Specific past events and their context (temporal, spatial, causal). | Remembering that “User X asked for tech support on Monday and was given solution Y,” and using that history to follow up next time. |
| Semantic Memory | General facts, concepts, and world knowledge. | Knowing that Paris is the capital of France or understanding domain rules (without any personal experience attached). |
| Procedural Memory | Skills and procedures (how to perform tasks). | Knowing how to execute a sequence like logging into an email server and sending an email, learned through practice or programming. |
| (Optional) Emotional | Preferences or affective associations (user-specific nuances). | Recalling that a user responded positively to a friendly tone last week, and adjusting the style accordingly in future replies. |

Semantic memory stores general factual knowledge, while episodic memory contains personal experiences with contextual information. Short-term memory is temporary (equivalent to the model’s context window), and procedural memory encodes learned skills or actions.

Why Episodic Memory Matters in AI Agents

Most AI models (such as standard chatbots or game-playing agents) only have access to their pre-trained knowledge and short-term context. Once a conversation or episode ends, all information about it is lost. Episodic memory breaks this limitation by enabling an agent to remember and learn from experience. Here are the main reasons why episodic memory is important:

- Learning from Experience: Episodic memory allows cumulative learning by AI agents. Because past episodes are stored and consulted at inference time, agents can keep improving without retraining the underlying model.

- Improved Decision-Making & Planning: It can provide valuable hindsight as context for an agent’s deliberations. A decision-making AI agent with episodic memory can make better informed plans if it can recall situations similar to the current one.

- Personalization and Contextual Continuity: Episodic memory can be used to personalize AI assistants or chatbots. It can also provide long-term context across interactions by remembering a user’s preferences, past questions, and previous interactions.

- Efficiency and Adaptability: Memory enables agents to become more efficient as they explore and interact with the world. Agents can remember and reuse their episodic memories instead of recalculating or re-learning knowledge.

- Towards Human-Like Intelligence: Episodic memory forms the basis for autobiographical knowledge. It is required for human-level identity, creativity, and flexible reasoning. It’s also considered a step towards artificial general intelligence, since agents with such capabilities can analogize from their own “lived” experiences.

How Episodic Memory Works in AI Agents

Episodic memory for an AI agent is usually implemented as a memory module connected to the agent’s decision-making logic.

Memory Storage

Each time a significant event occurs, the agent creates a new entry in memory. Each memory entry may include metadata (such as a timestamp, the entities involved, the result, and potentially an embedding for similarity search).
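As a minimal sketch of what such an entry could look like, the snippet below models an episode as a small record with the metadata mentioned above. The class names (`Episode`, `EpisodeLog`) and field names are illustrative assumptions, not part of any specific framework, and the store is a plain in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Episode:
    """One episodic memory entry (field names are illustrative assumptions)."""
    text: str                                          # natural-language summary of the event
    timestamp: datetime                                # when the event happened
    entities: List[str] = field(default_factory=list)  # who or what was involved
    outcome: str = ""                                  # result of the event
    embedding: Optional[List[float]] = None            # optional vector for similarity search


class EpisodeLog:
    """Hypothetical append-only, in-memory episode store."""

    def __init__(self) -> None:
        self.episodes: List[Episode] = []

    def record(self, text: str, entities: List[str], outcome: str) -> Episode:
        # Stamp the event with the current UTC time and append it to the log
        episode = Episode(
            text=text,
            timestamp=datetime.now(timezone.utc),
            entities=entities,
            outcome=outcome,
        )
        self.episodes.append(episode)
        return episode


log = EpisodeLog()
entry = log.record(
    text="User asked to cancel their subscription after a price increase.",
    entities=["user_x", "subscription"],
    outcome="cancelled",
)
```

In a real system the `embedding` field would be filled in by an embedding model, and the list would be replaced by a database or vector store.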

Memory Retrieval

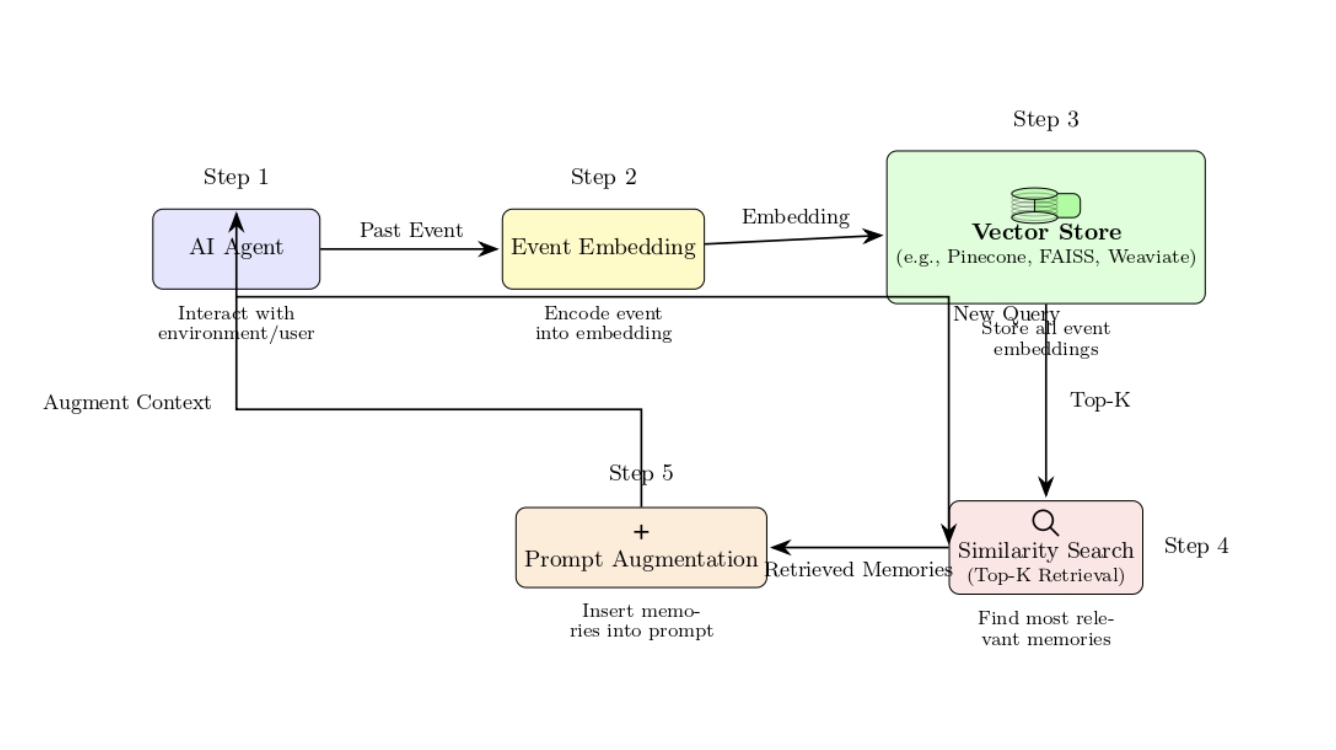

Whenever the agent is presented with new input, it queries its episodic memory. This is typically done through semantic search. The new query is encoded into a vector representation, and this vector is used to search the memory store for similar vectors (past events that are similar to the current context). The best-matching episodes are retrieved and “injected into” the agent’s reasoning process.
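The similarity search described above can be sketched in a few lines. This example assumes cosine similarity as the scoring function and uses hand-written three-dimensional vectors in place of real embeddings. The function names are hypothetical, and a production system would delegate this step to a vector database.

```python
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_episodes(
    query_vec: List[float],
    memory: List[Tuple[str, List[float]]],
    k: int = 2,
) -> List[Tuple[float, str]]:
    """Return the k (score, episode_text) pairs most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in memory]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy vectors standing in for real embeddings (assumption for illustration)
memory = [
    ("User cancelled subscription after price increase", [0.9, 0.1, 0.0]),
    ("User asked about the weather in Paris", [0.0, 0.2, 0.9]),
    ("User complained about a billing error", [0.8, 0.3, 0.1]),
]
query = [1.0, 0.2, 0.0]  # pretend embedding of a question about charges
results = top_k_episodes(query, memory, k=2)
```

With these toy vectors, the two billing-related episodes rank above the unrelated weather question.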

Integration with Agent Reasoning

Once relevant episodic memories are retrieved, they must influence the agent’s output. In practice, there are a few ways this integration happens:

- Context Augmentation: The retrieved memory text is prepended or embedded into the model’s prompt/context window. This way, the model “sees” those past details when generating a response. The context window is extended through selective recall.

- Memory-augmented Models: Some models incorporate a dedicated module (such as a differentiable memory network, a knowledge base, etc.) to store and access information. For example, the agent’s policy/decision function may explicitly call a memory read function when it reaches a certain state.

- Planner and Tool Use: In more complex agent systems (e.g., autonomous task-solving agents), a planner component might decide when to consult memory. For instance, an agent could have a high-level loop: “If the current goal is similar to a past goal, recall that episode’s solution and re-use it.” Memory could be viewed as an additional tool/database that the agent has access to as part of its reasoning chain.
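To make the first option concrete, here is a minimal sketch of context augmentation. It assumes the retrieved episodes arrive as plain strings ordered from most to least relevant, and it uses a character budget as a rough stand-in for a token limit. The function name, prompt wording, and budget value are illustrative assumptions.

```python
from typing import List


def compose_prompt(retrieved: List[str], user_input: str, max_memory_chars: int = 500) -> str:
    """Prepend retrieved episodes to the user input, within a character budget."""
    lines = []
    used = 0
    for episode in retrieved:  # assumed to be ordered most relevant first
        if used + len(episode) > max_memory_chars:
            break  # stop once the memory budget would be exceeded
        lines.append(f"- {episode}")
        used += len(episode)
    memory_block = "\n".join(lines) if lines else "- (no relevant memories)"
    return (
        "Relevant past interactions:\n"
        f"{memory_block}\n\n"
        f"Current request: {user_input}\n"
    )


prompt = compose_prompt(
    ["User cancelled their subscription after a price increase."],
    "Can I get a discount if I come back?",
)
```

Capping the memory block keeps selective recall from crowding out the current request in the context window.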

In practical applications, episodic memory is often materialized as a database or vector store. A large number of modern AI agents store embeddings of past interactions in external vector databases (Pinecone, FAISS, Weaviate, etc.) and then use a similarity search to pull in the top k most relevant memory entries to include in the prompt.

Some systems follow this pattern:

- Auto-GPT initially relied on short-term memory, which was limited by context length. Long-term memory through vector search was introduced later.

- The Teenage-AGI agent explicitly stores its chain of thought and results in a memory database. When starting a new task, it queries the database to recall earlier reasoning steps.

By storing episodic memory as an external knowledge base, an agent can scale to hundreds/thousands of past episodes without running out of context, as it only fetches relevant ones.

Pseudocode: storing and retrieving episodic memory

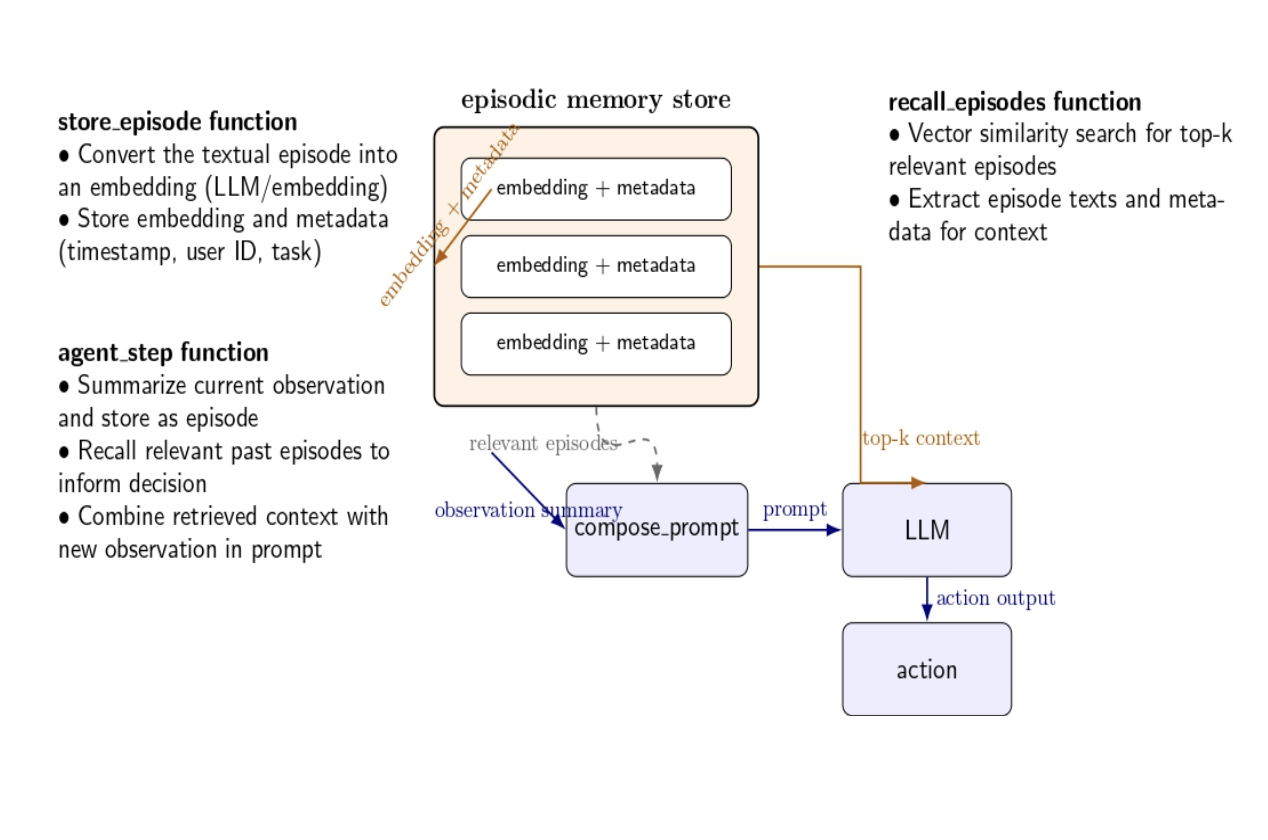

The pseudocode below describes an episode logging and retrieval workflow using embeddings. It can be tailored to other agent frameworks.

```python
# NOTE: embed_text, summarise_observation, compose_prompt, current_time,
# current_task, llm, and episodic_memory_store are placeholders that the
# surrounding agent framework is expected to provide.

# Function to store an episode in a vector store
def store_episode(episode_text: str, metadata: dict, vector_store):
    # Convert the textual episode into an embedding using an LLM or embedding model
    embedding = embed_text(episode_text)
    # Store the embedding, the original text, and metadata (e.g., timestamp, user ID, task)
    vector_store.add(embedding, episode_text, metadata)


# Function to retrieve relevant past episodes
def recall_episodes(query: str, vector_store, top_k=5):
    query_embedding = embed_text(query)
    # Perform vector similarity search to retrieve top_k relevant episodes
    results = vector_store.search(query_embedding, k=top_k)
    # Extract episode texts and metadata for context
    return [(item.text, item.metadata) for item in results]


# During agent interaction
def agent_step(observation):
    # Summarise the current observation
    episode_text = summarise_observation(observation)
    # Recall relevant past episodes before storing the new one,
    # so the search does not simply return the current episode
    retrieved = recall_episodes(episode_text, episodic_memory_store)
    # Store the summary as a new episode
    metadata = {"timestamp": current_time(), "task": current_task()}
    store_episode(episode_text, metadata, episodic_memory_store)
    # Combine retrieved context with the new observation in the prompt
    prompt = compose_prompt(retrieved, observation)
    # Query the LLM and produce action
    response = llm.generate(prompt)
    return response
```

Note that this pseudocode highlights both the write (storing episodes) and read (retrieving episodes) operations. Agents commonly summarise episodes with natural language summarisation before storage to reduce the storage footprint. Retrieval is typically based on vector similarity or graph traversal to find episodes relevant to the current context.

Memory Organization

Episodic memory is usually organized in a data structure that makes retrieval efficient. Popular techniques include:

- Time-indexed logs: Storing episodes in the order they occurred in time (helpful for replay or for timeline-style analysis).

- Key-value memories: Indexing into a memory with a key (embedding or some symbolic key, such as an event type or ID) for fast retrieval from memory.

- Graph-based memories: Representing episodic memories as a graph, where events are stored as nodes and connected with edges by relationships between them (sequence in time, involved common entities, etc.). Graph memories can be useful for retrieving related events and reasoning over them (e.g., a knowledge graph that represents events).
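The three layouts above can be sketched side by side over the same toy data. This is an illustration under simplifying assumptions: timestamps are plain integers, episodes are tuples, and the “graph” is just an index from entities to episode IDs. All names are hypothetical.

```python
from bisect import bisect_left
from collections import defaultdict

# Each episode: (timestamp, episode_id, text, entities), already sorted by timestamp
episodes = [
    (1, "e1", "User X asked for tech support", {"user_x"}),
    (2, "e2", "Agent proposed solution Y", {"user_x", "solution_y"}),
    (5, "e3", "User Z asked about billing", {"user_z"}),
]

# 1. Time-indexed log: binary-search the sorted timestamps to slice by time
timestamps = [ts for ts, *_ in episodes]


def episodes_since(start_ts):
    """All episodes whose timestamp is at or after start_ts."""
    return episodes[bisect_left(timestamps, start_ts):]


# 2. Key-value memory: look up an episode directly by a symbolic key (its ID)
by_id = {eid: text for _, eid, text, _ in episodes}

# 3. Graph-based memory: connect episodes that share an entity
by_entity = defaultdict(set)
for _, eid, _, entities in episodes:
    for entity in entities:
        by_entity[entity].add(eid)


def related(eid):
    """IDs of episodes that share at least one entity with the given episode."""
    entities = next(ents for _, other, _, ents in episodes if other == eid)
    linked = set().union(*(by_entity[e] for e in entities))
    return linked - {eid}
```

For example, `episodes_since(2)` returns the last two episodes, `by_id["e3"]` fetches one episode directly, and `related("e1")` finds the follow-up episode that involves the same user.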

Maintaining and Pruning Memory

As the episodic store grows, the agent also needs to decide what to remember and what to forget. Memory management strategies weigh the importance of different events. These could include:

- Relevance-based retention: Keep only episodes with high-impact results. (E.g., trivial user interactions may be pruned or archived.)

- Summarization: Fully store recent episodes, but compress longer-term memories into summaries. E.g., a chat session from a year ago might be compressed into a brief note of key points.

- Time-based decay: Memories can be given an “age score” and phased out as they grow old, unless they are frequently accessed.

- User control & safety: In particular for personal AI assistants, the user should be able to delete or review any memories that have been stored.
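One way to combine relevance, age, and access frequency is a single retention score, sketched below. The exponential half-life, the logarithmic usage bonus, and the 0.1 weight are illustrative assumptions rather than established defaults, and the function names are hypothetical.

```python
import math
from typing import Dict, List


def retention_score(age_days: float, access_count: int, importance: float,
                    half_life_days: float = 30.0) -> float:
    """Higher score means more worth keeping. All weights are illustrative."""
    # Recency halves every half_life_days
    recency = math.exp(-math.log(2) * age_days / half_life_days)
    # Frequently accessed memories get a bonus with diminishing returns
    usage = math.log1p(access_count)
    return importance * recency + 0.1 * usage


def prune(memories: List[Dict], keep: int) -> List[Dict]:
    """Keep the `keep` highest-scoring memories, best first."""
    ranked = sorted(
        memories,
        key=lambda m: retention_score(m["age_days"], m["access_count"], m["importance"]),
        reverse=True,
    )
    return ranked[:keep]


memories = [
    {"id": "greeting", "age_days": 90, "access_count": 0, "importance": 0.1},
    {"id": "cancelled_subscription", "age_days": 90, "access_count": 12, "importance": 0.9},
    {"id": "recent_question", "age_days": 1, "access_count": 1, "importance": 0.5},
]
kept = prune(memories, keep=2)
```

Here the old, trivial greeting is dropped, while the equally old but important and frequently accessed cancellation survives. Instead of deleting low-scoring entries outright, a system could archive or summarize them.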

Building episodic memory requires software architecture (memory modules that handle storage and retrieval) and algorithmic intelligence (identifying which memories to retrieve in which situations). There’s fast-moving research in this area, with new frameworks and techniques being proposed to endow AI agents with stronger memory.

Applications and Use Cases of Episodic Memory

Episodic memory enables a host of applications and use cases for AI agents. It makes agents more capable and practical in scenarios involving long-term understanding and adaptation. Some examples of episodic memory applications and areas of use include:

| Application/Use Case | Description & Benefits |
|---|---|
| Personalized Virtual Assistants | Episodic memory can be used in digital assistants (e.g., customer support, productivity) to recall preferences, history, and context between sessions. This enables tailored recommendations (e.g., remembering a user’s preferred window seat or type of hotel) and a service bespoke to the user (much as a human assistant learns and remembers a client’s habits). |
| Continuous Learning Agents | Agents such as tutors or human resources onboarding assistants use episodic memory to engage in lifelong learning. They adapt what and how they teach in each new session based on what happened in previous ones, which lets them avoid repeating approaches that did not work. Because important past experiences are explicitly stored and available, episodic memory helps agents generalize knowledge and mitigate “catastrophic forgetting”. |
| Enhanced Reinforcement Learning | In robotics and gaming, episodic memory can be used by intelligent agents to recall successful strategies or important mistakes. For instance, a robot vacuum cleaner may remember the layout of a home and which areas are more likely to be dirty. That way, it can clean more efficiently in the future. |
| Complex Task Automation (Agent Workflows) | Episodic memory is useful for LLM agents that have to perform operations on emails, calendars, or projects, because they must remember the actions they’ve taken in the past to prevent repeating the same mistakes (e.g., double-booking a meeting). Logs of decisions and their results can be maintained in episodic memory, making the agent’s behavior auditable and explainable. |
| Collaboration and Multi-Agent Systems | Episodic memory can preserve a shared context among multiple agents or human-AI teams. Agents can maintain a consistent view by synchronizing their knowledge base (e.g., exchanging episodic memories in the form of map sharing in robotics). |
| Domain-Specific Expert Systems | In domains such as medicine, law, or customer support, episodic memory can be used by agents to learn and retrieve anonymized case-based knowledge (symptoms, diagnostics, resolutions, etc.). When a new case occurs, the agent can retrieve similar episodes to inform the current problem-solving. |

Limitations and Challenges

Episodic memory offers huge potential, but it also presents several challenges and pitfalls. Keep the following in mind when designing memory-augmented agents:

| Challenge | Summary |
|---|---|
| Memory Accuracy & Relevance | Ensuring retrieved memories are accurate and relevant is difficult. Stale or out-of-context episodes may lead to wrong outputs. Agents need robust retrieval, continual updating, and validation mechanisms. |
| Scalability & Performance | Memory data can grow indefinitely, causing slow retrieval and increased costs. Optimizations like compression and indexing are essential for fast, efficient access at scale. |
| Knowledge Retention vs. Forgetting | Too much memory can store irrelevant or sensitive data, raising privacy/security concerns. Agents need memory governance, deletion, and anonymization features to avoid issues. |
| Consistency & Alignment | Past wrong or biased memories may bias agent decisions. Agents must be tested to ensure learning aligns with user intent and ethics, especially in high-stakes scenarios. |
| Complexity of Implementation | Episodic memory increases system and debugging complexity. Poor design can cause unpredictable behavior. Managing triggers, retrieval, and updates adds development challenges. |
| Alternatives & Limitations | Longer context windows or fine-tuning models help, but have trade-offs. Episodic memory is more interpretable, but not a complete solution. Hybrid approaches are emerging. |

FAQ SECTION

What is episodic memory in AI?

Episodic memory in the context of artificial intelligence is a mechanism through which an agent stores and recalls experiences or events it has encountered, along with contextual information (such as time, location, and results).

How is episodic memory different from semantic memory in agents?

Episodic memory contains information about specific contextual events that the agent has experienced (e.g., “I booked a flight to Paris for User X last month”). Semantic memory is a compilation of facts and knowledge that does not relate to a specific event (e.g., “Paris is the capital of France”).

How do reinforcement learning agents use episodic memory?

Episodic memory in reinforcement learning agents enables them to recall specific events or episodes, including a sequence of actions, states, and rewards experienced in the past. This way, the agent can remember successful or unsuccessful experiences and use this information to make more informed decisions when encountering similar situations in the future.

Is episodic memory used in large language models?

Most LLMs, such as GPT-3 or GPT-4, do not have an episodic memory that persists between sessions. However, developers can augment LLMs with external memory modules that store past user interactions and let the model refer to them later.

What are common tools to implement episodic memory?

Popular tools include vector databases (Pinecone, FAISS, Weaviate, etc.) to store and retrieve experience embeddings, graph databases to store relationships between events, frameworks such as LangChain for building memories into LLM agents, and custom-built memory modules for specific applications.

Conclusion

Episodic memory is a cornerstone of intelligent, adaptable agents. By endowing agents with the ability to remember, retrieve, and learn from their own unique experiences, we can move from static, one-size-fits-all automation to systems that personalize, adapt, and improve over time, much as humans do. Many technical and ethical challenges remain, and ongoing research and development are working to address them. As AI agents become increasingly context-aware and capable of lifelong learning, episodic memory will form a building block for the next generation of trustworthy, effective, and human-like artificial intelligence. Scalable, high-performance infrastructure like Gradient GPU Droplets can help developers train, deploy, and iterate on memory-enabled AI systems.

About the author(s)

I am a skilled AI consultant and technical writer with over four years of experience. I have a master’s degree in AI and have written innovative articles that provide developers and researchers with actionable insights. As a thought leader, I specialize in simplifying complex AI concepts through practical content, positioning myself as a trusted voice in the tech community.

With a strong background in data science and over six years of experience, I am passionate about creating in-depth content on technologies. Currently focused on AI, machine learning, and GPU computing, working on topics ranging from deep learning frameworks to optimizing GPU-based workloads.
