
By John Andersen and Vinayak Baranwal

This tutorial is the final tutorial in a three-part series on workload identity federation:

- Part 1: Architecture

- Part 2: Deployment and Configuration

- Part 3: Usage from Droplets and GitHub Actions (this post)

Now that we’ve deployed and configured our OAuth application, we can create a new Droplet that will receive a workload identity token. We’ll exchange that token for database connection credentials and a Spaces bucket access key. Finally, we’ll add a role and policy that allow the same exchange to happen from a GitHub Actions workflow in a repository of our choosing.

Key Takeaways

- Use Droplet-issued OIDC tokens to securely exchange for database connection strings and Spaces access keys.

- Enforce role-based, policy-scoped access with least privilege.

- Run the same token exchange securely from GitHub Actions using the `id-token: write` permission.

- Tokens are short-lived (5–15 min) and automatically rotated for improved security.

Droplet Creation and Token Provisioning

We create a new Droplet that will receive a workload identity token by running `doctl compute droplet create` with `--api-url` set to our deployed application. The Droplet’s role is selected with its `oidc-sub:` tag, and the OIDC subject is automatically prefixed with the Auth Context. In the following example, the Droplet will be issued a token with this subject:

`actx:f81d4fae-7dec-11d0-a765-00a0c91e6bf6:role:ex-database-and-spaces-keys-access`

Here, `f81d4fae-7dec-11d0-a765-00a0c91e6bf6` is an example team UUID. The actual value is the UUID of the team under which the Droplet was created.

```bash
doctl \
  --api-url "https://<deployment-name>.ondigitalocean.app" \
  compute droplet create \
  --region sfo2 \
  --size s-1vcpu-1gb \
  --image ubuntu-24-04-x64 \
  --tag-names "oidc-sub:role:ex-database-and-spaces-keys-access" \
  test-droplet-0001
```

Creation of a Droplet with the `ex-database-and-spaces-keys-access` role
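In the example above, the subject appears to be derived from the Droplet’s `oidc-sub:` tag: the `oidc-sub:` prefix is dropped and `actx:<team UUID>:` is prepended. The following sketch reproduces that mapping under exactly that assumption; `subject_from_tag` is a hypothetical helper, not part of the PoC.

```bash
# Hypothetical helper (not part of the PoC): derive the OIDC subject a
# Droplet would receive from its team UUID and its "oidc-sub:" tag, assuming
# the proxy drops the "oidc-sub:" prefix and prepends "actx:<team-uuid>:".
subject_from_tag() {
  local team_uuid="$1" tag="$2" prefix="oidc-sub:"
  case "$tag" in
    "$prefix"*) ;;
    *)
      echo "tag does not start with ${prefix}" >&2
      return 1
      ;;
  esac
  # Strip the literal prefix and prepend the Auth Context.
  printf 'actx:%s:%s\n' "$team_uuid" "${tag#"$prefix"}"
}

# Prints actx:f81d4fae-7dec-11d0-a765-00a0c91e6bf6:role:ex-database-and-spaces-keys-access
subject_from_tag "f81d4fae-7dec-11d0-a765-00a0c91e6bf6" \
  "oidc-sub:role:ex-database-and-spaces-keys-access"
```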

Token Usage from Droplet

From the Droplet, we use the workload identity token provisioned by our server to call the API paths on the proxy that our Droplet’s role and policy allow.

First, we read the relevant values from files created by our provisioning flow: the URL of our PoC, the team UUID, and the workload identity token.

```bash
URL=$(cat /root/secrets/digitalocean.com/serviceaccount/base_url)
TEAM_UUID=$(cat /root/secrets/digitalocean.com/serviceaccount/team_uuid)
ID_TOKEN=$(cat /root/secrets/digitalocean.com/serviceaccount/token)
```
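Before exchanging the token, it can help to inspect its claims (for example `sub`, `aud`, and `exp`). OIDC ID tokens are JWTs, so assuming the PoC’s token follows that format, its payload can be decoded locally. The hypothetical `jwt_payload` helper below only decodes; it does not verify the signature, so it is suitable for debugging, not for authorization decisions.

```bash
# Hypothetical debugging helper: print the decoded claims of a JWT without
# verifying its signature. Assumes a compact JWT of the form
# header.payload.signature, each part base64url-encoded without padding.
jwt_payload() {
  local payload
  # Keep only the second dot-separated segment and map base64url to base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url omits '=' padding; restore it so base64 -d accepts the input.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 -d
}
```

For example, `jwt_payload "${ID_TOKEN}" | jq .` prints the token’s claims as formatted JSON.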

Per our `ex-database-and-spaces-keys-access` role and policy, we then exchange the workload identity token for a `database-and-spaces-keys-access` role token with a lifetime (`ttl`) of 300 seconds.

```bash
SUBJECT="actx:${TEAM_UUID}:role:database-and-spaces-keys-access"

TOKEN=$(curl -sf \
  -H "Authorization: Bearer ${ID_TOKEN}" \
  -d@<(jq -n -c \
    --arg aud "api://DigitalOcean?actx=${TEAM_UUID}" \
    --arg sub "${SUBJECT}" \
    --arg ttl 300 \
    '{aud: $aud, sub: $sub, ttl: ($ttl | fromjson)}') \
  "${URL}/v1/oidc/issue" \
  | jq -r .token)
```

We can then use the `database-and-spaces-keys-access` role token to retrieve database connection strings, either for a single database by UUID or for every database with a given tag.

```bash
# Fetch the connection URI of a single database by its UUID.
DATABASE_UUID="9cc10173-e9ea-4176-9dbc-a4cee4c4ff30"

curl -sf \
  -H "Authorization: Bearer ${TOKEN}" \
  "${URL}/v2/databases/${DATABASE_UUID}" \
  | jq -r .database.connection.uri

# Fetch the connection URIs of all databases with a given tag.
TAG_NAME="my-tag"

curl -sf \
  -H "Authorization: Bearer ${TOKEN}" \
  "${URL}/v2/databases?tag_name=${TAG_NAME}" \
  | jq -r '.databases[].connection.uri'
```
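Connection URIs retrieved this way embed the database password. If a script needs to log which database it is using, a hypothetical `redact_uri` helper like the following can mask the password first. It assumes a `scheme://user:password@host...` URI in which any `@` inside the password is percent-encoded.

```bash
# Hypothetical helper: mask the password in a connection URI of the form
# scheme://user:password@host:port/db?params before printing it.
# Assumes any '@' inside the password is percent-encoded.
redact_uri() {
  local uri="$1" scheme rest userinfo hostpart
  scheme=${uri%%://*}
  rest=${uri#*://}
  # No userinfo section: nothing to redact.
  case "$rest" in
    *@*) ;;
    *) printf '%s\n' "$uri"; return 0 ;;
  esac
  userinfo=${rest%%@*}
  hostpart=${rest#*@}
  # Keep the user name, replace the password with asterisks.
  printf '%s://%s:***@%s\n' "$scheme" "${userinfo%%:*}" "$hostpart"
}
```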

We can also use the role token to create new Spaces access keys. Here we request a read key for bucket `111`.

```bash
curl -sf \
  -X POST \
  -H "Authorization: Bearer ${TOKEN}" \
  -H "Content-Type: application/json" \
  -d@<(jq -n -c \
    --arg name "bucket-111-read-token-droplet" \
    --arg bucket "111" \
    --arg perm "read" \
    '{name: $name, grants: [{"bucket": $bucket, "permission": $perm}]}') \
  "${URL}/v2/spaces/keys" \
  | jq
```

If we pass parameters that the relevant policies do not allow, the API call fails. The following call is rejected by the policy attached to the `database-and-spaces-keys-access` role because it requests a read key for bucket `222`, which is not in the policy’s allowlist. The `-v` flag shows the HTTP exchange, including the error status.

```bash
curl -vf \
  -X POST \
  -H "Authorization: Bearer ${TOKEN}" \
  -H "Content-Type: application/json" \
  -d@<(jq -n -c \
    --arg name "bucket-222-read-token-droplet" \
    --arg bucket "222" \
    --arg perm "read" \
    '{name: $name, grants: [{"bucket": $bucket, "permission": $perm}]}') \
  "${URL}/v2/spaces/keys" \
  | jq
```
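`curl -f` makes a rejected call fail, but it discards the response body. As a sketch, the hypothetical helpers below capture the HTTP status with `curl -w '%{http_code}'` and treat any non-2xx response as a failure, printing whatever body the proxy returned. The exact status code the proxy uses for policy denials is not stated here, so the check is deliberately generic.

```bash
# Hypothetical helpers: report the HTTP status of a proxy call instead of
# relying on curl -f, so requests rejected by a policy (any non-2xx
# response) produce a clear error in scripts.
is_success() {
  # Succeeds for any 2xx status code.
  [ "$1" -ge 200 ] && [ "$1" -lt 300 ]
}

proxy_post() {
  # $1: full proxy URL, $2: bearer token, $3: JSON request body.
  local url="$1" token="$2" body="$3" tmp status
  tmp=$(mktemp) || return 1
  status=$(curl -s -o "$tmp" -w '%{http_code}' -X POST \
    -H "Authorization: Bearer ${token}" \
    -H "Content-Type: application/json" \
    -d "$body" "$url")
  # Print the response body so error details from the proxy are visible.
  cat "$tmp"
  rm -f "$tmp"
  if ! is_success "$status"; then
    echo "POST ${url} failed with HTTP ${status}" >&2
    return 1
  fi
}
```

For example, `proxy_post "${URL}/v2/spaces/keys" "${TOKEN}" '{"name":"bucket-222-read-token-droplet","grants":[{"bucket":"222","permission":"read"}]}'` should exit with a non-zero status, because bucket `222` is outside the allowlist.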

This PoC uses a confidential OAuth client implementing the Authorization Code Flow (client secret, no PKCE). Tokens are short-lived (5–15 min) and automatically rotated. Token exchanges are permitted only where a policy explicitly allows them, which enforces least-privilege access.
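Because exchanged tokens expire quickly (the examples request a `ttl` of 300 seconds), a long-running script on the Droplet must exchange the workload identity token again before the role token lapses. A minimal sketch of that decision, assuming the script records the issue time itself; `token_needs_refresh` and its 30-second default margin are hypothetical choices:

```bash
# Hypothetical helper: decide whether a cached role token should be exchanged
# again. Arguments: issue time (epoch seconds), TTL in seconds (the examples
# request 300), current time, and an optional safety margin (default 30 s).
token_needs_refresh() {
  local issued_at="$1" ttl="$2" now="$3" margin="${4:-30}"
  # Refresh once fewer than $margin seconds of validity remain.
  [ "$now" -ge $(( issued_at + ttl - margin )) ]
}

# Usage sketch:
#   ISSUED_AT=$(date +%s)   # record when the token was exchanged
#   if token_needs_refresh "$ISSUED_AT" 300 "$(date +%s)"; then
#     : # repeat the /v1/oidc/issue exchange here
#   fi
```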

GitHub Actions Usage

As an example, suppose a workload needs to run database migrations during a release process. The release workflow therefore needs access to the database URI.

We define a policy that allows issuing a `database-and-spaces-keys-access` role token.

Create `policies/ex-gha-readwrite.hcl`:

```hcl
path "/v1/oidc/issue" {
  capabilities = ["create"]
  allowed_parameters = {
    "aud" = "api://DigitalOcean?actx={actx}"
    "sub" = "actx:{actx}:role:database-and-spaces-keys-access"
    "ttl" = 300
  }
}
```
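The `{actx}` placeholder in `allowed_parameters` stands for the caller’s Auth Context. Assuming it is a plain textual placeholder that the proxy fills in with the team UUID (the PoC’s real matching logic may differ), the literal values a request must carry can be computed as in this sketch with the hypothetical helper `expand_actx`:

```bash
# Hypothetical helper: replace every "{actx}" placeholder in a role or policy
# value with a concrete Auth Context (here, the team UUID). Assumes {actx} is
# a plain textual placeholder; the PoC's real matching may differ.
expand_actx() {
  local rest="$1" actx="$2" placeholder="{actx}" out="" head
  while :; do
    case "$rest" in
      *"$placeholder"*)
        # Text before the first placeholder, then the substituted value.
        head=${rest%%"$placeholder"*}
        out="${out}${head}${actx}"
        rest=${rest#*"$placeholder"}
        ;;
      *) break ;;
    esac
  done
  printf '%s\n' "${out}${rest}"
}

# Prints api://DigitalOcean?actx=f81d4fae-7dec-11d0-a765-00a0c91e6bf6
expand_actx "api://DigitalOcean?actx={actx}" "f81d4fae-7dec-11d0-a765-00a0c91e6bf6"
# Prints actx:f81d4fae-7dec-11d0-a765-00a0c91e6bf6:role:database-and-spaces-keys-access
expand_actx "actx:{actx}:role:database-and-spaces-keys-access" "f81d4fae-7dec-11d0-a765-00a0c91e6bf6"
```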

And we assign the policy to a role for OIDC tokens created for a given GitHub Actions workflow.

Create `gha-roles/ex-gha-readwrite.hcl`:

```hcl
role "ex-gha-readwrite" {
  iss              = "https://token.actions.githubusercontent.com"
  aud              = "api://DigitalOcean?actx={actx}"
  sub              = "repo:org/repo:ref:refs/heads/main"
  policies         = ["ex-gha-readwrite"]
  job_workflow_ref = "org/repo/.github/workflows/do-wid.yml@refs/heads/main"
}
```
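The role pins the token’s issuer, subject, and `job_workflow_ref`. For workflows that don’t use a deployment environment, GitHub’s default `sub` claim for a branch push has the form `repo:<owner>/<repo>:ref:refs/heads/<branch>`, so runs on other branches or from other workflow files should not match. A minimal sketch of these checks, assuming exact string comparison; the hypothetical `gha_role_matches` mirrors the example values:

```bash
# Hypothetical check mirroring the ex-gha-readwrite role: the issuer, subject,
# and job_workflow_ref claims of a GitHub OIDC token must equal the pinned
# values exactly. Exact string comparison is an assumption about the PoC.
gha_role_matches() {
  local iss="$1" sub="$2" job_workflow_ref="$3"
  [ "$iss" = "https://token.actions.githubusercontent.com" ] &&
    [ "$sub" = "repo:org/repo:ref:refs/heads/main" ] &&
    [ "$job_workflow_ref" = "org/repo/.github/workflows/do-wid.yml@refs/heads/main" ]
}
```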

Commit and push the files to apply the new role and policy.

```bash
git add .
git commit -sm "feat: configure access from gha"
git push
```

Now create a GitHub Actions workflow in your repository that uses the DigitalOcean workload identity API proxy. In the following example, the OIDC token’s audience carries the DigitalOcean team UUID we’re authenticating to. This lets our API proxy look up the RBAC configuration associated with our team and unlock the team token for use with the upstream DigitalOcean API, provided each request passes validation against the applicable policies.

Create `.github/workflows/do-wid.yml`:

```yaml
name: DO Workload API

on:
  push:
    branches:
      - main
    paths:
      - '.github/workflows/do-wid.yml'

jobs:
  migrations:
    runs-on: ubuntu-latest
    permissions:
      id-token: write
    env:
      DO_BASE_URL: 'https://<deployment-name>.ondigitalocean.app'
      DO_TEAM_UUID: 'f81d4fae-7dec-11d0-a765-00a0c91e6bf6'
      DO_DATABASE_UUID: '9cc10173-e9ea-4176-9dbc-a4cee4c4ff30'
    steps:
      - name: Get GitHub OIDC Token
        id: token
        uses: actions/github-script@v6
        with:
          script: |
            const token = await core.getIDToken(
              'api://DigitalOcean?actx=${{ env.DO_TEAM_UUID }}'
            );
            core.setOutput('oidc', token);

      - name: Get data-readwrite token
        id: data-readwrite
        env:
          SUBJECT: "actx:${{ env.DO_TEAM_UUID }}:role:database-and-spaces-keys-access"
          GH_OIDC_TOKEN: ${{ steps.token.outputs.oidc }}
        run: |
          set -eo pipefail
          TOKEN=$(curl -sf \
            -H "Authorization: Bearer ${GH_OIDC_TOKEN}" \
            -d@<(jq -n -c \
              --arg aud "api://DigitalOcean?actx=${DO_TEAM_UUID}" \
              --arg sub "${SUBJECT}" \
              --arg ttl 300 \
              '{aud: $aud, sub: $sub, ttl: ($ttl | fromjson)}') \
            "${DO_BASE_URL}/v1/oidc/issue" \
            | jq -r .token)
          echo "::add-mask::${TOKEN}"
          echo "oidc=${TOKEN}" >> "${GITHUB_OUTPUT}"

      - name: Get database connection details
        id: db
        env:
          DO_OIDC_TOKEN: ${{ steps.data-readwrite.outputs.oidc }}
        run: |
          set -eo pipefail
          DB_URI=$(curl -sfH "Authorization: Bearer ${DO_OIDC_TOKEN}" \
            "${DO_BASE_URL}/v2/databases/${DO_DATABASE_UUID}" \
            | jq -r .database.connection.uri)
          echo "::add-mask::${DB_URI}"
          echo "uri=${DB_URI}" >> "${GITHUB_OUTPUT}"
```
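In this workflow, the proxy has to recover the team UUID from the audience (`api://DigitalOcean?actx=...`) before it can find the team’s roles and policies. The following sketch shows one way to parse it, assuming `actx` is an ordinary query parameter; `actx_from_aud` is hypothetical and not the PoC’s own parser. The same shape also covers ATProto audiences of the form `api://ATProto?actx=did:plc:...`.

```bash
# Hypothetical helper: extract the Auth Context from an audience such as
# api://DigitalOcean?actx=<team-uuid>. Only the actx query parameter is
# handled; any parameters that follow it are ignored.
actx_from_aud() {
  local aud="$1" value
  case "$aud" in
    *"?actx="*|*"&actx="*) ;;
    *)
      echo "audience has no actx parameter" >&2
      return 1
      ;;
  esac
  value=${aud#*actx=}
  # Drop any query parameters that follow actx.
  printf '%s\n' "${value%%&*}"
}
```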

Further Applications

Congratulations! You’ve successfully deployed, configured, and used the workload identity reverse proxy PoC to enable Droplets and GitHub Actions to access DigitalOcean API resources. However, the secretless fun doesn’t stop there!

In the age of dynamic workflows (AI agents), we don’t always want to hand out access tokens that allow write or destructive operations. Unfortunately, upstream APIs don’t always offer that fine-grained control. The MCP specification includes OAuth support; however, that’s only useful within end-user-driven environments. The workload identity reverse proxy we’ve covered in this series is an interesting pattern to explore for headless AI agents. When deployed, it provides OAuth login capabilities and RBAC configuration to centrally manage the use of access tokens and the forwarding of requests to upstream APIs with API-specific tokens.

GitHub Copilot coding agent is an example of an environment that could benefit from a workload identity reverse proxy. Instead of configuring MCP servers with manually provisioned API keys, the reverse proxy’s FQDN is used in place of those services’ APIs. Token exchange then happens for each upstream, similarly to the GitHub Copilot coding agent Azure MCP configuration example: the workload’s OIDC token is exchanged for the scoped token we’ve been using to make requests to specific paths protected by the reverse proxy. This dynamically allocated scoped token replaces manually provisioned API tokens.

Headless AI agents can thereby operate autonomously within the bounds of the policies associated with the roles of the exchanged token. This enforces the principle of least privilege across upstream APIs, including the many MCP servers that lack native support for fine-grained controls or for workload identity authentication and authorization.

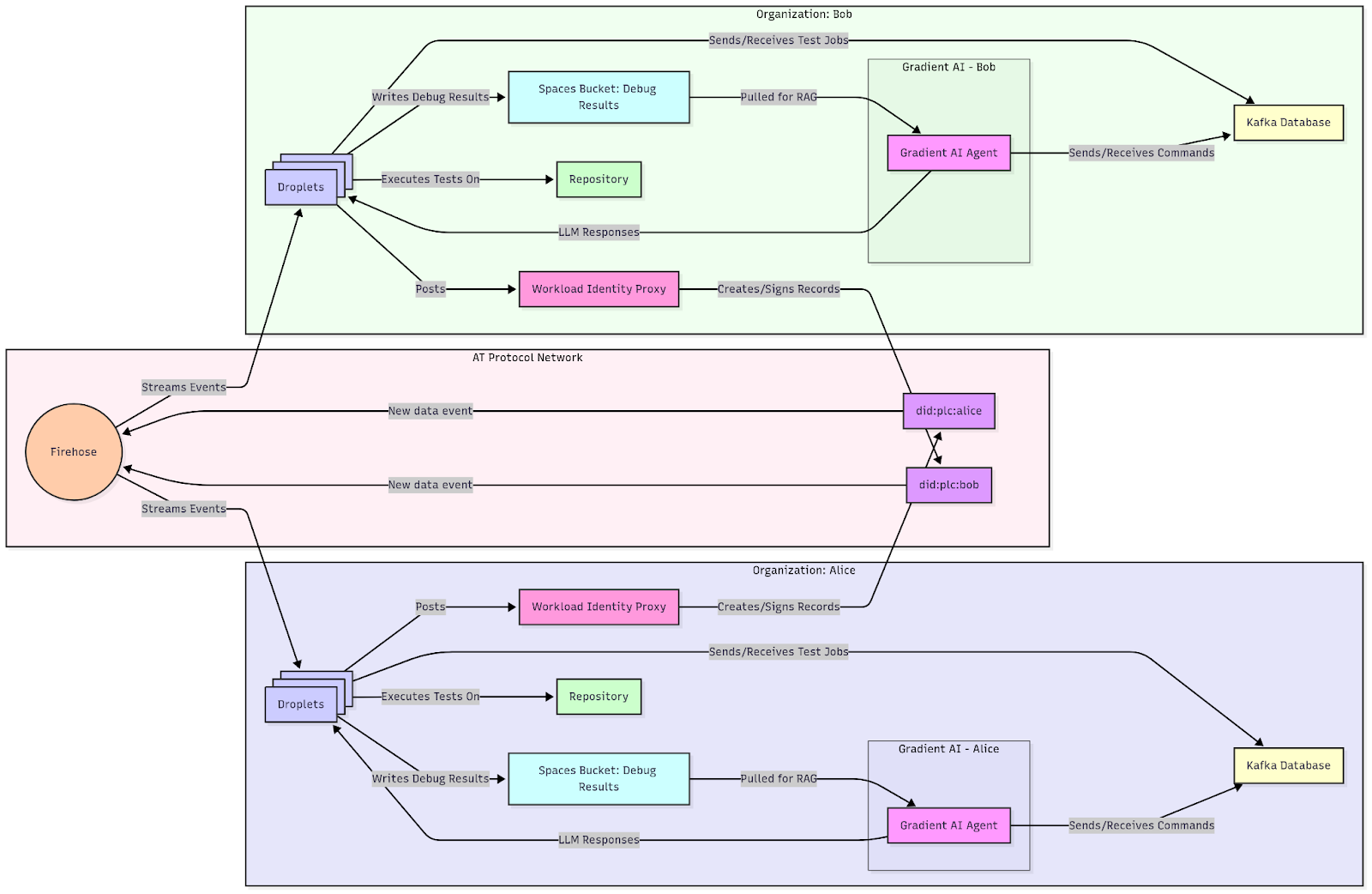

One way to maximize the usefulness of this pattern is to apply it to headless AI agent swarms. We could combine prior work such as Seamless LangChain Integration with DigitalOcean Gradient™ AI Platform and LangGraph Multi-Agent Swarm to create a dynamic swarm of agents backed by elastic, heterogeneous compute (DigitalOcean Droplets and GitHub Actions). A workload identity reverse proxy could also manage resources per role context: scoping resource usage and spend, and disallowing requests or reaping resources when a role’s context has exceeded its limits.

Agents within the same trust boundary could easily communicate through scoped resources within their team, such as databases and Spaces buckets.

Agents or agent swarms within different trust boundaries (such as VPCs or across organizational boundaries) may wish to communicate over digital trust infrastructure such as the Authenticated Transfer Protocol (aka ATProto).

Cross Organization Agent Collaboration using ATProto

To enable such a flow, we’d provision a DID:PLC for each distinct trust boundary and enable token exchange in the corresponding roles and policies.

Create `droplet-roles/ex-database-and-spaces-keys-access.hcl`:

```hcl
role "ex-database-and-spaces-keys-access" {
  aud      = "api://DigitalOcean?actx={actx}"
  sub      = "actx:{actx}:role:ex-database-and-spaces-keys-access"
  policies = ["ex-database-and-spaces-keys-access", "ex-atproto"]
}
```

Create `policies/ex-atproto.hcl`:

```hcl
path "/v1/oidc/issue" {
  capabilities = ["create"]
  allowed_parameters = {
    "aud" = "api://ATProto?actx=did:plc:*"
    "sub" = "actx:{actx}:role:atproto-write"
    "ttl" = 300
  }
}
```
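The `did:plc:*` wildcard lets the Droplet request ATProto tokens for any DID:PLC. Assuming a trailing `*` means “any suffix” (an assumption about the PoC’s wildcard semantics), the sketch below shows that check, comparing the rest of the value, including `?`, literally. Because a bare prefix match would also accept malformed suffixes, it adds a separate format check for did:plc identifiers (24 characters from the base32 alphabet `a-z2-7`). Both helper names are hypothetical.

```bash
# Hypothetical check: a value ending in "*" in allowed_parameters matches any
# requested value that starts with the literal text before the "*"; any
# other value must match exactly.
aud_allowed() {
  local requested="$1" allowed="$2" prefix
  case "$allowed" in
    *\*)
      prefix=${allowed%\*}
      # The literal prefix (including "?") must match; anything may follow.
      case "$requested" in
        "$prefix"*) return 0 ;;
      esac
      return 1
      ;;
  esac
  [ "$requested" = "$allowed" ]
}

# Hypothetical stricter check: a did:plc identifier is "did:plc:" followed by
# 24 characters from the base32 alphabet a-z, 2-7.
is_did_plc() {
  printf '%s\n' "$1" | grep -Eq '^did:plc:[a-z2-7]{24}$'
}
```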

We would then perform the OAuth web flow for each DID:PLC and configure roles and policies appropriately. Because we’re switching from the DigitalOcean API context to the ATProto API context, the role that allows issuance of ATProto access tokens must grant them only to tokens from our team’s UUID. We enforce this by including the source Auth Context, `actx:f81d4fae-7dec-11d0-a765-00a0c91e6bf6`, in the role’s allowed subject.

Create `roles/atproto-write.hcl`:

```hcl
role "atproto-write" {
  aud      = "api://ATProto?actx={actx}"
  sub      = "actx:f81d4fae-7dec-11d0-a765-00a0c91e6bf6:role:atproto-write"
  policies = ["atproto-write"]
}
```

Create `policies/atproto-write.hcl`:

```hcl
path "/xrpc/com.atproto.repo.createRecord" {
  capabilities = ["create"]
}
```
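The `atproto-write` role pins the source team’s Auth Context in its subject. A minimal sketch of that check, assuming subjects always have the form `actx:<actx>:role:<role>`; the helpers `subject_actx` and `subject_in_team` are hypothetical:

```bash
# Hypothetical helper: extract <actx> from a subject of the form
# actx:<actx>:role:<role>; fails for subjects of any other shape.
subject_actx() {
  local rest
  case "$1" in
    actx:*:role:*) ;;
    *) return 1 ;;
  esac
  rest=${1#actx:}
  printf '%s\n' "${rest%%:role:*}"
}

# Hypothetical check: succeed only if subject $1 carries Auth Context $2,
# e.g. the source team's UUID required by the atproto-write role.
subject_in_team() {
  [ -n "$2" ] && [ "$(subject_actx "$1")" = "$2" ]
}
```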

Finally, from the Droplet, we perform the token exchange and post a message across trust boundaries. ATProto is a good choice for this because agents within other trust boundaries can listen for new data events via the firehose or Jetstream.

```bash
DID_PLC="did:plc:ewvi7nxzyoun6zhxrhs64oiz"
SUBJECT="actx:${TEAM_UUID}:role:atproto-write"

# Exchange the workload identity token for an atproto-write role token
# scoped to the target DID:PLC.
TOKEN=$(curl -sf \
  -H "Authorization: Bearer ${ID_TOKEN}" \
  -d@<(jq -n -c \
    --arg aud "api://ATProto?actx=${DID_PLC}" \
    --arg sub "${SUBJECT}" \
    --arg ttl 300 \
    '{aud: $aud, sub: $sub, ttl: ($ttl | fromjson)}') \
  "${URL}/v1/oidc/issue" \
  | jq -r .token)

# Post a record to the DID's repository through the proxy.
curl -sf \
  -X POST \
  -H "Authorization: Bearer ${TOKEN}" \
  -H "Content-Type: application/json" \
  -d@<(jq -n -c \
    --arg repo "${DID_PLC}" \
    --arg text "Hello World" \
    --arg createdAt "$(date -u +"%Y-%m-%dT%H:%M:%S.%3NZ")" \
    '{repo: $repo, collection: "app.bsky.feed.post",
      record: {"$type": "app.bsky.feed.post", text: $text, createdAt: $createdAt}}') \
  "${URL}/xrpc/com.atproto.repo.createRecord" | jq
```

As we move into a new paradigm of software development with AI agents by our side, we hope that DigitalOcean will be the platform you and your agents choose for elastic compute as they work together across organizations and open source projects, spinning up Droplets for development and testing.

Frequently Asked Questions (FAQ)

What is workload identity federation and why use OAuth with DigitalOcean Droplets?

Workload identity federation enables trusted workloads (such as Droplets or CI jobs) to securely obtain and exchange short-lived tokens for API access, without embedding long-lived credentials. Using OAuth and OIDC for federation with DigitalOcean Droplets ensures fine-grained, auditable, and automatable access control, leveraging role-based policies and short-lived tokens that improve security and support least-privilege access.

How does token exchange work in this PoC for DigitalOcean resources?

The Proof of Concept (PoC) issues each Droplet or workload an OIDC token. The workload exchanges this token with the deployed API proxy for a role token, which it then uses to obtain scoped resources such as database credentials or Spaces keys, according to RBAC policies defined in HCL. Each token has limited scope and lifetime, reducing risk in case of exposure.

Can GitHub Actions workflows also use the same identity federation?

Yes, GitHub Actions can be configured to use OpenID Connect (OIDC) tokens as part of a workflow. When properly configured with DigitalOcean’s workload identity API proxy, a GitHub Actions workflow can perform secure token exchanges, subject to role and policy restrictions, just like a Droplet. This approach avoids hard-coding credentials in CI/CD pipelines.

What are the security best practices implemented by this solution?

This guide’s approach implements several security best practices:

- All access is governed by RBAC policies that strictly limit actions and parameters.

- Tokens are short-lived (5–15 minutes), reducing the risk from compromised tokens.

- Token exchanges are auditable and performed only via explicit role and policy assignments.

- No long-lived static secrets or credentials are stored in Droplets or workflows.

How do I create and assign new roles and policies?

You can define new policies in HCL files and associate them with roles, specifying allowed actions and parameters. Assign these roles to Droplets (by tag) or to GitHub Actions workflows (by subject/audience and workflow reference). All changes should be version-controlled and applied by pushing to your configuration repository.

What are the prerequisites for deploying this workload identity solution on DigitalOcean?

- A deployed OAuth-based API proxy on DigitalOcean App Platform, as described in Part 2.

- An OAuth application registered in the DigitalOcean Control Panel, with its Client ID and Secret configured as encrypted environment variables in your deployment.

- jq and curl tools installed on Droplets or in automation environments for token handling.

- For GitHub Actions: a workflow with `id-token: write` permissions and a proper workflow role configuration.

Can I limit which Droplets or workflows can exchange tokens for certain permissions?

Yes, access control is granular and based on role and policy mappings. For example, only Droplets with a specific tag or GitHub Actions workflows with a specific job_workflow_ref and subject can perform token exchanges for certain resources or actions. Adjust the policy and role files to set precise boundaries.

What happens if a token exchange attempt violates policy or RBAC rules?

The API proxy denies the request and the downstream API call fails. Only exchanges conforming to the explicitly defined policies (parameters, audiences, subjects) will succeed, providing defense-in-depth against privilege escalation or misconfiguration.

Where can I find the code and examples for this PoC?

Full source code and step-by-step deployment instructions are available at https://github.com/digitalocean-labs/droplet-oidc-poc. Example policy and role files, as well as GitHub Actions workflow YAML, are included in the repository.

Who should use this workload identity federation pattern?

This pattern is ideal for teams or organizations running workloads across Droplets, CI/CD pipelines, or other compute resources needing secure, rotated, and auditable API access to DigitalOcean services without distributing shared secrets or static credentials.

If you have further questions about implementing workload identity federation on DigitalOcean, refer to the official OAuth documentation or open an issue in the GitHub repo.


About the author(s)

Security engineering and secure by-default support for engineering teams.

Building future-ready infrastructure with Linux, Cloud, and DevOps. Full Stack Developer & System Administrator. Technical Writer @ DigitalOcean | GitHub Contributor | Passionate about Docker, PostgreSQL, and Open Source | Exploring NLP & AI-TensorFlow | Nailed over 50+ deployments across production environments.
