Lambda Labs was founded in 2012 with a vision to make access to computation as effortless and ubiquitous as electricity. AI has been Lambda’s sole focus since its founding, initially centered on building deep learning workstations and servers before expanding into cloud infrastructure. The company built its reputation by offering straightforward GPU rentals with competitive pricing and a developer-friendly platform that supports popular machine learning frameworks and tools.

Although Lambda Labs was early to the market, numerous alternatives now offer similar capabilities, competitive pricing models, and specialized features tailored to different use cases. This article examines the top Lambda Labs alternatives worth considering, such as DigitalOcean’s Gradient™ AI GPU Droplets, comparing their pricing, technical capabilities, and features to help you select the right GPU cloud provider for your business.

Key takeaways:

- There are three main types of Lambda Labs alternatives: cost-saving platforms like Vast.ai and Runpod, which offer significant discounts through shared resources and spot pricing; major cloud services like AWS and Azure, which provide full enterprise support and compliance; and machine learning-focused providers like DigitalOcean Gradient AI GPU Droplets, designed explicitly for AI development workflows.
- GPU pricing varies widely, from $1.87/hour for basic H100 access on marketplaces to over $49/hour for enterprise HGX configurations, which are typically priced as multi-GPU nodes rather than per GPU. Always check for hidden fees, such as data transfer costs and minimum usage requirements, which can make seemingly cheap options expensive.
- Choose based on your needs: use budget marketplaces for experiments and learning, pick established cloud providers for business-critical work that needs reliability, and consider AI-specialized platforms if you want the most straightforward setup for machine learning projects.

What matters when choosing a GPU cloud provider?

Before discussing specific providers, consider what makes or breaks your experience with GPU cloud hosting platforms.

- GPU selection and availability matter more than you think. Everyone wants H100s, but if you’re doing inference work, you might be perfectly fine with RTX 4090s at a fraction of the cost. The key is having options and being able to spin up instances when you need them.
- Pricing structure goes beyond the headline hourly rate. Hidden data transfer fees, minimum commitments, and complex billing can turn a seemingly cheap option into a budget nightmare. The best providers are upfront about all costs.
- Pre-built environments can save hours or days of setup time. Nobody wants to spend their weekend configuring CUDA drivers and framework compatibility when they could be training models instead.
- Support and reliability become critical when things go wrong. Having real humans who understand GPU workloads can make all the difference when troubleshooting complex issues. Response times, technical expertise, and access to knowledgeable engineers should factor into your decision just as much as raw performance specs.
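To make the point about headline rates concrete, here is a minimal Python sketch that estimates a total bill from an hourly rate, a data transfer fee, and a minimum commitment. The `Quote` class, the `estimate_cost` function, and every number in it are hypothetical and chosen only for illustration; they do not describe any provider in this article.

```python
from dataclasses import dataclass


@dataclass
class Quote:
    """One provider's pricing terms. All values used below are hypothetical."""
    hourly_rate: float          # USD per GPU per hour (the headline rate)
    egress_fee_per_gb: float    # USD per GB of outbound data transfer
    minimum_hours: float = 0.0  # minimum billable GPU-hours, if any


def estimate_cost(quote: Quote, gpus: int, hours: float, egress_gb: float) -> float:
    """Return the estimated bill in USD for one job."""
    # A minimum commitment means you pay for at least `minimum_hours` GPU-hours.
    gpu_hours = max(gpus * hours, quote.minimum_hours)
    compute = gpu_hours * quote.hourly_rate
    transfer = egress_gb * quote.egress_fee_per_gb
    return round(compute + transfer, 2)


# Hypothetical quotes: a low headline rate with extra fees vs. a higher flat rate.
cheap_headline = Quote(hourly_rate=1.90, egress_fee_per_gb=0.09, minimum_hours=100)
flat_rate = Quote(hourly_rate=2.10, egress_fee_per_gb=0.0)

job = dict(gpus=1, hours=40, egress_gb=500)
cheap_total = estimate_cost(cheap_headline, **job)
flat_total = estimate_cost(flat_rate, **job)
print(f"low headline rate: ${cheap_total:.2f}, flat rate: ${flat_total:.2f}")
```

With these made-up inputs, the quote with the lower hourly rate ends up costing more, because the minimum commitment and transfer fee dominate a short job. Substituting a provider's published terms gives a fairer comparison than the hourly rate alone.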

Top Lambda Labs Alternatives

With those considerations in mind, here are ten Lambda Labs alternatives to explore:

1. DigitalOcean Gradient™ AI GPU Droplets

DigitalOcean’s Gradient AI GPU Droplets provide straightforward GPU infrastructure for digital-native enterprises. They come with ready-to-use machine learning environments and Kubernetes integration, simplifying infrastructure complexity while maintaining high performance for AI workloads. This platform is ideal for forward-thinking organizations that require immediate GPU access with minimal setup, supporting containerized deployment workflows using Docker and Kubernetes.

Key features:

- Broad selection of GPU choices, including NVIDIA RTX 4000, A40, A100, H100, and H200 configurations, to accommodate various workload needs.
- Pre-installed PyTorch, TensorFlow, and Jupyter environments with CUDA 11.8+ for immediate use, saving configuration time.
- DigitalOcean Kubernetes (DOKS) featuring GPU node pools and automatic scaling capabilities.
- H100: $1.99/GPU/hour
- H200: $3.44/GPU/hour
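For the containerized workflow described above, a GPU workload on Kubernetes is usually scheduled by requesting a GPU resource in the pod spec. The sketch below builds such a manifest in Python and prints it as JSON. It assumes the cluster exposes GPUs under the `nvidia.com/gpu` resource name used by the NVIDIA device plugin; the pod name, container image, and `train.py` command are hypothetical placeholders, and some clusters also require a toleration for tainted GPU nodes, which is not shown here.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that requests `gpus` NVIDIA GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,                     # hypothetical image
                    "command": ["python", "train.py"],  # hypothetical entry point
                    # GPUs are requested via limits; the resource name assumes
                    # the NVIDIA device plugin is running on the cluster.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


manifest = gpu_pod_manifest("train-job", "registry.example.com/trainer:latest")
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied directly once the placeholders are replaced.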

2. Runpod

Runpod is a budget-friendly GPU cloud solution designed for AI developers who prioritize cost-effectiveness without sacrificing essential functionality. It offers a unique dual-pricing model, including spot instances with cost savings, and a serverless architecture that ensures automatic resource scaling. With its globally distributed infrastructure, the platform guarantees consistent performance and accessibility for users worldwide.

Key features:

- Up to 90% cost reduction on Spot GPU instances via intelligent automated bidding.
- Serverless GPU functions with sub-3-second cold start times for A100s.
- Docker template library with Stable Diffusion, LLaMA, and 50+ pre-configured AI models.
- The community marketplace offers over 1,000 user-contributed, peer-reviewed container images.
- H100: $2.59/hr
- A100: $1.19/hr
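Spot discounts are quoted as "up to" figures, and interrupted jobs have to redo some work, so the realized saving is usually smaller than the headline number. The sketch below shows the arithmetic under stated assumptions: it treats the $1.19/hr A100 rate above as the on-demand baseline, and the 50% discount and 20% rework overhead are hypothetical values, not Runpod figures.

```python
def on_demand_cost(rate: float, hours: float) -> float:
    """Cost of running the whole job at the on-demand rate."""
    return rate * hours


def spot_cost(rate: float, discount: float, hours: float, rework_fraction: float) -> float:
    """Cost on spot capacity, including extra hours spent redoing interrupted work."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    spot_rate = rate * (1 - discount)
    billed_hours = hours * (1 + rework_fraction)
    return spot_rate * billed_hours


A100_RATE = 1.19   # USD/hour, from the list above (assumed to be on-demand)
JOB_HOURS = 100    # hypothetical job length

on_demand_total = on_demand_cost(A100_RATE, JOB_HOURS)
spot_total = spot_cost(A100_RATE, discount=0.5, hours=JOB_HOURS, rework_fraction=0.2)
saving = 1 - spot_total / on_demand_total

print(f"on-demand: ${on_demand_total:.2f}")
print(f"spot:      ${spot_total:.2f} ({saving:.0%} saved)")
```

In this example a 50% discount yields a 40% saving once rework is counted. Checkpointing frequently lowers the rework fraction and brings the realized saving closer to the quoted discount.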

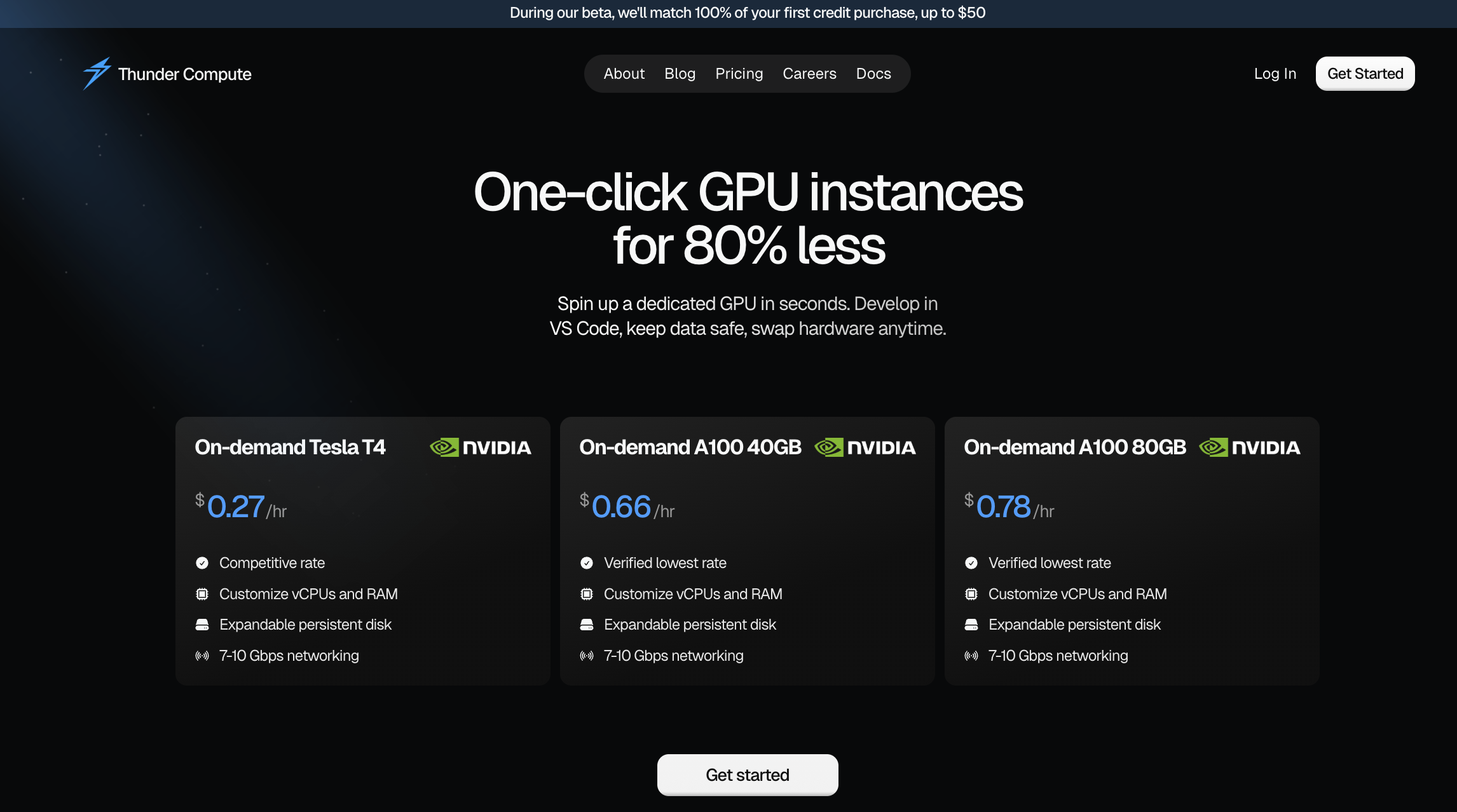

3. Thunder Compute

Thunder Compute is a developer-focused GPU cloud platform designed to simplify access to high-performance computing while maintaining competitive pricing and solid performance. Built with an emphasis on user experience and streamlined workflows, Thunder Compute bridges the gap between complex enterprise solutions and basic cloud offerings. It is ideal for AI practitioners who need powerful GPU resources without administrative overhead.

Key features:

- Intuitive interface designed for AI/ML developers with simplified resource provisioning and management workflows.
- High-performance GPU configurations, including modern NVIDIA architectures optimized for training and inference workloads.
- Pre-configured environments and templates for popular AI frameworks, including PyTorch, TensorFlow, and Jupyter notebooks.
- Flexible scaling options allow resource adjustment based on computational demands and project requirements.
- On-demand A100 (80GB): $0.78/hour
- On-demand H100: $1.89/hour

4. Amazon EC2 G5 Instances

AWS offers one of the most comprehensive GPU portfolios among cloud providers, ranging from high-memory training configurations to cost-effective inference on its EC2 G5 instances, which use NVIDIA A10G GPUs. This breadth comes with premium pricing but high flexibility for diverse AI workloads.

Key features:

- 8x H100 configurations, offered on EC2 P5 instances rather than G5, with 640 GB of HBM3 memory in total for the most demanding training workloads.
- Advanced 3200 Gbps EFA networking infrastructure optimized for large-scale distributed training operations.
- Intelligent Spot fleet optimization with automatic failover and smart bidding algorithms to reduce costs.
- Deep AWS ecosystem integration spanning S3 storage, SageMaker ML platform, and Lambda serverless functions.
- On-demand pricing available

5. Nebius AI Cloud

Nebius AI Cloud is a GPU cloud platform built specifically for AI and machine learning workloads. It aims to deliver enterprise-grade GPU resources without the complexity typically associated with traditional hyperscale providers. Nebius is an ideal solution for organizations seeking dedicated AI infrastructure with simplified management and competitive pricing.

Key features:

- Specialized AI-focused infrastructure explicitly optimized for machine learning and deep learning workloads.
- High-performance GPU configurations, including the latest NVIDIA architectures for training and inference operations.
- Simplified deployment and management interface designed specifically for AI/ML practitioners and data scientists.
- Enterprise-grade security and compliance features help ensure data protection and adherence to regulatory requirements.
- H100: $2.00/hour
- H200: $2.30/hour

6. Vultr Cloud GPU

Vultr Cloud GPU strips away enterprise complexity while maintaining strong performance, creating an ideal solution for developers seeking powerful GPU access without administrative overhead. This straightforward approach focuses on core functionality and transparent pricing, making it perfect for teams concentrating on AI development rather than infrastructure management. With rapid deployment and scalable resources, developers can spin up GPU instances in minutes and adjust capacity as project demands evolve.

Key features:

- High-performance NVMe storage is standard across all GPU instances for optimal data throughput.
- Global edge network spanning 25+ locations, providing low-latency inference deployment worldwide.
- Transparent hourly billing structure with no hidden fees or complex pricing tiers to navigate.
- One-click deployment templates for popular AI frameworks, including PyTorch, TensorFlow, and specialized applications.
- H100: $2.99/GPU/hour
- A100: $2.80/hour

7. TensorDock Cloud GPU

TensorDock’s cloud GPU platform is well-suited for cost-sensitive projects with moderate computational demands, making it an attractive option for academic researchers and independent machine learning engineers. It offers a flexible, on-demand GPU environment that allows users to tailor their resource allocation precisely across GPU, RAM, and vCPU configurations without long-term commitments or imposed quotas. The platform’s competitive hourly rates and pay-as-you-go model enable users to experiment with various GPU configurations while maintaining strict budget control.

Key features:

- A selection of 44 GPU models across various performance tiers and price points.
- Global network spanning over 100 locations for optimal latency and availability.
- No quotas on GPU usage, providing unlimited scaling flexibility for varying workloads.
- H100: $2.25/hour

8. Vast.ai

Vast.ai operates as a decentralized GPU marketplace, connecting users directly with individual hardware owners worldwide in an Airbnb-style model. While this peer-to-peer approach introduces variability in reliability, it offers substantial cost savings for budget-conscious projects and experimental workloads that can tolerate some uncertainty. The platform provides an extensive selection of hardware, complete with detailed specifications for each instance, enabling users to filter and compare GPU options according to their specific computational requirements.

Key features:

- Direct connections to individual GPU owners globally, maximizing potential cost savings.
- Flexible rental periods ranging from minutes to months without restrictive minimum commitments.
- Community-driven reliability ratings and host verification systems for informed decision-making.
- H100 SXM: $1.87/hr (P25, the 25th-percentile marketplace price)
- H200: $2.35/hr (P25)

9. CoreWeave GPU Compute

CoreWeave GPU Compute delivers enterprise-grade GPU infrastructure through a Kubernetes-native platform designed for demanding AI and machine learning workloads. The platform is built on bare-metal infrastructure with direct hardware access, providing the performance and flexibility that containerized applications require. This cloud-native approach makes CoreWeave ideal for teams already invested in modern development practices and container orchestration workflows.

Key features:

- High-performance networking with ultra-low latency connections optimized for distributed training and multi-node GPU clusters.
- Kubernetes-native architecture that integrates with existing container workflows and orchestration tools.
- Bare-metal GPU instances offer direct hardware access for maximum performance, eliminating virtualization overhead.
- Flexible deployment options supporting both on-demand and reserved capacity for cost optimization at scale.
- On-demand HGX H100: $49.24/hour
- On-demand HGX H200: $50.44/hour

If you need more powerful, large-scale GPU clusters beyond what Lambda Labs offers, explore our comprehensive guide to CoreWeave alternatives for cloud GPU. We compare enterprise-focused providers offering advanced networking, multi-GPU setups, and infrastructure designed for demanding AI workloads at scale.

10. Voltage Park

Voltage Park delivers cutting-edge GPU infrastructure engineered explicitly for demanding AI and machine learning workloads. Built with enterprise-grade hardware and optimized for large-scale training operations, Voltage Park caters to organizations prioritizing computational power and consistent performance over cost optimization, making it ideal for research institutions and enterprises with mission-critical AI applications. The platform’s high-performance networking and dedicated support infrastructure help ensure minimal downtime and maximum throughput for complex, resource-intensive computational tasks.

Key features:

- High-performance GPU clusters featuring the latest NVIDIA architectures optimized for intensive training and inference workloads.
- Specialized networking and storage solutions designed to eliminate bottlenecks in distributed training scenarios.
- Optimized software stack and drivers specifically tuned for AI/ML frameworks and applications.
- On-demand H100: $1.99/hour
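The H100 rates quoted for the providers above can be put side by side with a few lines of Python. This is only a sketch: it treats every quoted rate as a per-GPU price, which the listings do not always state. It also assumes that CoreWeave's HGX H100 price covers an 8-GPU node, which is an assumption made here for normalization and is not stated in this article. AWS is omitted because no rate is listed. Prices change often, so check each provider's pricing page before relying on these numbers.

```python
# H100 rates in USD/hour as quoted in this article (assumed to be per GPU).
H100_RATES = {
    "DigitalOcean": 1.99,
    "Runpod": 2.59,
    "Thunder Compute": 1.89,
    "Nebius": 2.00,
    "Vultr": 2.99,
    "TensorDock": 2.25,
    "Vast.ai (P25)": 1.87,
    "Voltage Park": 1.99,
}

# CoreWeave quotes an HGX node price; 8 GPUs per node is an assumption.
COREWEAVE_NODE_RATE = 49.24
ASSUMED_GPUS_PER_NODE = 8
H100_RATES["CoreWeave (per GPU, assumed)"] = COREWEAVE_NODE_RATE / ASSUMED_GPUS_PER_NODE

# Sort from cheapest to most expensive per GPU-hour.
ranked = sorted(H100_RATES.items(), key=lambda item: item[1])

for provider, rate in ranked:
    print(f"{provider:<30} ${rate:.3f}/GPU/hour")
```

Normalizing to a per-GPU rate shows that the $49.24 figure is not directly comparable to the single-GPU prices. A ranking like this also ignores storage, networking, and reliability differences, so treat it as a starting point only.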


Lambda Labs alternatives FAQs

What are the best Lambda Labs alternatives in 2025?

The best alternatives depend on your specific needs. DigitalOcean Gradient AI GPU Droplets provide an excellent developer experience, offering competitive H100 pricing at $1.99/hour, while combining intuitive cloud infrastructure management with powerful GPU capabilities tailored for AI/ML workloads. The platform integrates seamlessly with DigitalOcean’s broader ecosystem of services like Droplets, Spaces, and Kubernetes, enabling developers to build comprehensive machine learning pipelines within a familiar environment.

Which Lambda Labs competitors offer cheaper GPU pricing?

DigitalOcean Gradient stands out with H100 GPUs at $1.99/hour, offering exceptional value through transparent, predictable pricing without hidden fees or complex tier structures. The platform combines competitive rates with enterprise-grade reliability and the developer-friendly experience DigitalOcean is known for, making it ideal for teams seeking both affordability and consistent performance.

Do Lambda Labs alternatives provide scalable GPU clusters?

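Yes, several alternatives offer clustering capabilities. DigitalOcean offers GPU node pools with automatic scaling through its Kubernetes service, CoreWeave provides a Kubernetes-native platform with networking optimized for multi-node GPU clusters, and Voltage Park offers high-performance GPU clusters built for large-scale training.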

Which Lambda Labs alternatives include pre-configured ML frameworks?

DigitalOcean Gradient AI GPU Droplets include PyTorch, TensorFlow, and Jupyter, with CUDA 11.8 or later pre-installed for immediate use, eliminating the time-consuming setup process typically associated with GPU computing environments. These ready-to-use configurations enable developers to start training models within minutes of provisioning, with all dependencies, drivers, and frameworks optimized for NVIDIA GPU acceleration and regularly maintained by DigitalOcean to support compatibility and security.
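On any pre-configured image, a quick sanity check confirms that the drivers and frameworks are actually visible before you start a long training run. The following sketch is a generic check and not a DigitalOcean tool: the `check_gpu_environment` function is a hypothetical helper, and it only reports what it can detect on the machine where it runs. PyTorch is imported lazily, so the script still runs where PyTorch is not installed.

```python
import importlib.util
import shutil


def check_gpu_environment() -> dict:
    """Report whether common GPU tooling is visible on this machine."""
    report = {
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "tensorflow_installed": importlib.util.find_spec("tensorflow") is not None,
        "cuda_available": None,  # filled in only if PyTorch is importable
        "cuda_version": None,    # CUDA version PyTorch was built against
    }
    if report["torch_installed"]:
        import torch  # lazy import so the check works without PyTorch

        report["cuda_available"] = torch.cuda.is_available()
        report["cuda_version"] = torch.version.cuda
    return report


report = check_gpu_environment()
for key, value in report.items():
    print(f"{key}: {value}")
```

If `cuda_available` is `False` on a GPU instance, the usual suspects are a driver and CUDA version mismatch or a CPU-only framework build.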

Accelerate your AI projects with DigitalOcean Gradient™ AI GPU Droplets.

Accelerate your AI/ML, deep learning, high-performance computing, and data analytics tasks with DigitalOcean Gradient™ AI GPU Droplets. Scale on demand, manage costs, and deliver actionable insights with ease. Zero to GPU in just 2 clicks with simple, powerful virtual machines designed for developers, startups, and innovators who need high-performance computing without complexity.

Key features:

- Powered by NVIDIA H100, H200, RTX 6000 Ada, L40S, and AMD MI300X GPUs
- Save up to 75% vs. hyperscalers for the same on-demand GPUs
- Flexible configurations from single-GPU to 8-GPU setups
- Pre-installed Python and Deep Learning software packages
- High-performance local boot and scratch disks included
- HIPAA-eligible and SOC 2 compliant with enterprise-grade SLAs

Sign up today and unlock the possibilities of DigitalOcean Gradient™ AI GPU Droplets. For custom solutions, larger GPU allocations, or reserved instances, contact our sales team to learn how DigitalOcean can power your most demanding AI/ML workloads.

About the author

Surbhi is a Technical Writer at DigitalOcean with over 5 years of expertise in cloud computing, artificial intelligence, and machine learning documentation. She blends her writing skills with technical knowledge to create accessible guides that help emerging technologists master complex concepts.

