When ChatGPT emerged in late 2022, OpenAI took an early lead in consumer AI. But Google, the company whose researchers invented the transformer architecture behind modern language models, wasn’t about to sit on the sidelines. After launching its AI assistant as Bard in 2023, Google rebranded and upgraded it as Gemini, drawing on decades of search expertise and its ecosystem of productivity tools.

Today, these two platforms have diverged, each developing different strengths and capabilities. Both have proven their worth beyond casual conversation. ChatGPT continues to push boundaries with advanced reasoning and creative abilities. Gemini offers deep integration with Google’s productivity suite, pulling from Gmail, Drive, and YouTube while maintaining the context of your actual digital workflow.

Read on for a breakdown of ChatGPT vs Gemini, focusing on the differences between pricing tiers, model capabilities, and practical use cases.

Key takeaways:

- Gemini is designed for multimodal, research-focused workflows. It can analyze text, images, video, and audio simultaneously. Its deep integration with Google Workspace enables teams to summarize meeting notes, draft context-aware replies, and extract actionable insights directly from Docs, Gmail, and Sheets.
- Gemini offers real-time data access through Google Search, prioritizing accuracy, long-context reasoning, and source citation, making it well suited to analytical, regulatory, or compliance-intensive tasks.
- ChatGPT excels in conversational AI and creative content generation, offering a highly intuitive interface for non-technical users and Codex for technical teams to write, debug, and document code efficiently. It also enables the creation of custom GPTs, which are personalized chatbots tailored for specific tasks, such as customer support, data analysis, or content review.
- ChatGPT offers broader accessibility across over 100 countries and regions. Its advanced multilingual and multimodal capabilities for text, voice, image, and video make it suitable for distributed or international teams collaborating across languages and time zones.

What is Gemini?

Gemini is Google’s AI assistant. It replaced Bard in February 2024 and runs on Google’s Gemini family of models, first announced in December 2023. The change was more than a rebranding: Gemini is more capable than Bard and is designed to be multimodal. It can process text, images, video, and audio as inputs and generate text and image outputs.

Gemini’s deep integration with Google’s ecosystem connects it to information from Google Search, Gmail, and Drive in real time. For example, you can ask Gemini to analyze data from Google Sheets while pulling context from a related document in Drive, all within a single conversation.

Gemini is available in two versions: a free tier and Gemini Advanced (a paid subscription offering access to more powerful models and additional features).

Gemini features

Gemini’s features are built around its deep integration with Google’s ecosystem, allowing users to work across multiple Google services within a single AI-powered interface.

1. NotebookLM

NotebookLM turns large collections of information into easy-to-understand, AI-assisted notebooks. Upload text documents, articles, research papers, whitepapers, and meeting notes, and transform them into AI-generated, podcast-style discussions. According to Google’s latest updates, the platform now supports over 50 languages for its audio overviews.

With this one simple tool, you can manage all your documents and get actionable insights within minutes. For example, you can provide a YouTube link, and NotebookLM will extract speaker quotes with timestamps, making them useful for research. Another use case: students can upload a mix of sources in different languages, such as a Portuguese documentary, a Spanish research paper, and English study reports, and generate an audio overview in their preferred language.

NotebookLM uses retrieval-augmented generation (RAG) to ground its responses exclusively in your uploaded documents, pulling directly from your sources rather than generating information from its general training data.
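To make the grounding idea concrete, here is a toy sketch of the retrieve-then-generate pattern. It is not NotebookLM’s implementation: it assumes simple word-overlap scoring in place of the embedding search a production RAG system would typically use, and the names `retrieve`, `build_grounded_prompt`, and `SAMPLE_SOURCES` are hypothetical. The key design point is the final step: if nothing relevant is retrieved, the sketch declines to answer instead of falling back on model knowledge.

```python
import re
from collections import Counter
from typing import Optional

# Hypothetical stopword list so that words like "the" do not count as matches.
STOPWORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "the", "to", "what", "when"}


def tokenize(text: str) -> Counter:
    """Lowercase, split on non-alphanumerics, and count the non-stopword terms."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def retrieve(query: str, sources: dict, k: int = 2) -> list:
    """Return up to k (name, passage) pairs ranked by term overlap with the query."""
    q_terms = tokenize(query)
    scored = []
    for name, passage in sources.items():
        overlap = sum((q_terms & tokenize(passage)).values())
        if overlap > 0:
            scored.append((overlap, name, passage))
    scored.sort(key=lambda item: (-item[0], item[1]))  # best match first, ties by name
    return [(name, passage) for _, name, passage in scored[:k]]


def build_grounded_prompt(query: str, sources: dict, k: int = 2) -> Optional[str]:
    """Build a prompt that restricts the model to the retrieved passages."""
    hits = retrieve(query, sources, k)
    if not hits:
        return None  # nothing relevant in the uploaded sources: decline rather than guess
    context = "\n".join(f"[{name}] {passage}" for name, passage in hits)
    return (
        "Answer using ONLY the sources below and cite them by name.\n"
        f"{context}\n\nQuestion: {query}"
    )


# Hypothetical uploaded documents.
SAMPLE_SOURCES = {
    "meeting-notes": "The launch date moved to March after the security review.",
    "roadmap": "Q2 focuses on mobile onboarding and billing.",
    "faq": "Refunds are processed within five business days.",
}

if __name__ == "__main__":
    print(build_grounded_prompt("When is the launch date?", SAMPLE_SOURCES))
```

Here only the meeting notes share terms with the question, so they are the only passage placed in the prompt; a question about the weather retrieves nothing and returns `None`.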

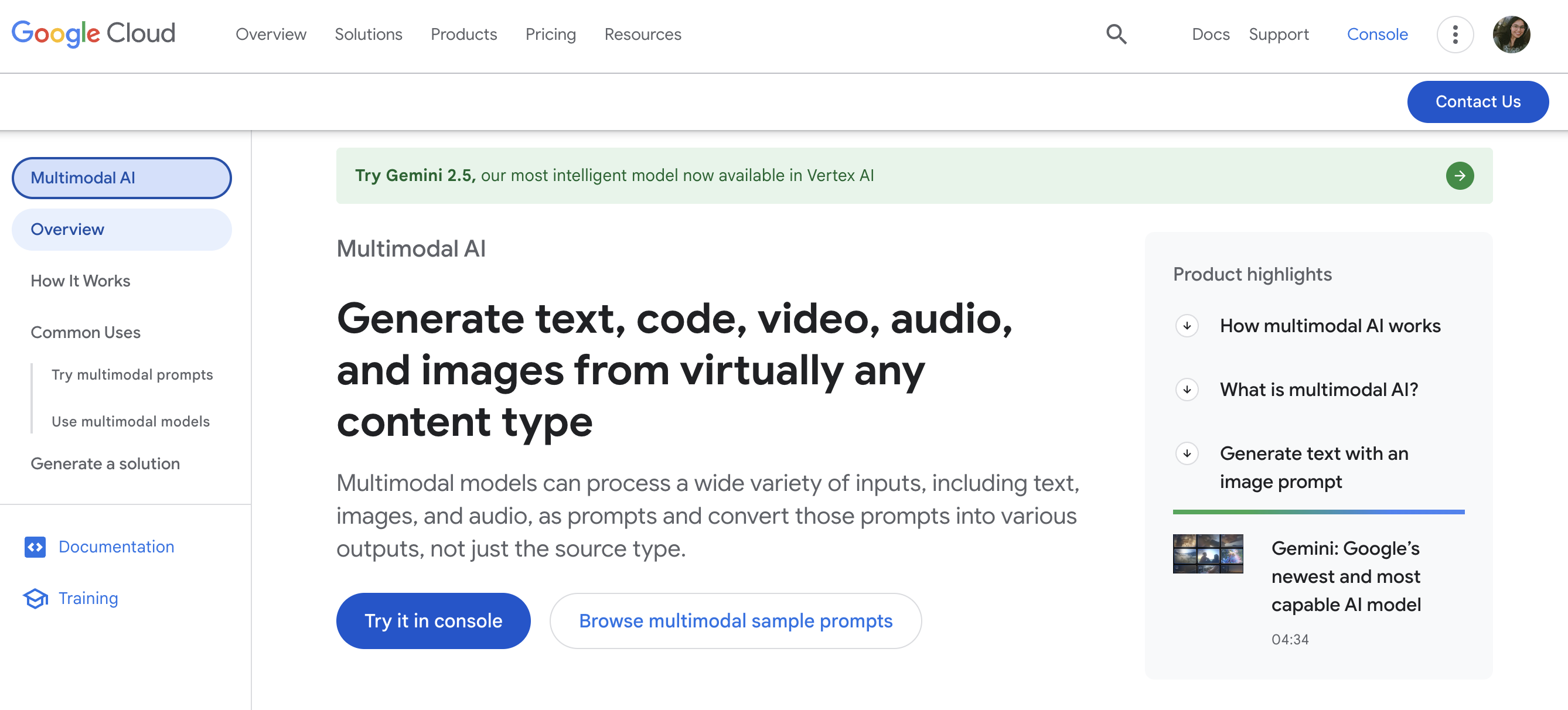

2. Multimodal reasoning

Multimodal reasoning enables Gemini to process and combine images, text, video, and audio within a single workflow. For example, upload a product design mockup (image), a recorded client feedback session (audio), and a market research report (text). Gemini can then analyze how the visual elements align with the spoken concerns and written data, identifying connections such as a UI element in the mockup that the client criticized in the recording and that contradicts best practices in the report.

This capability becomes even more powerful when used in conjunction with Vertex AI, Google’s enterprise AI platform, where developers can build custom applications that use Gemini’s multimodal reasoning.

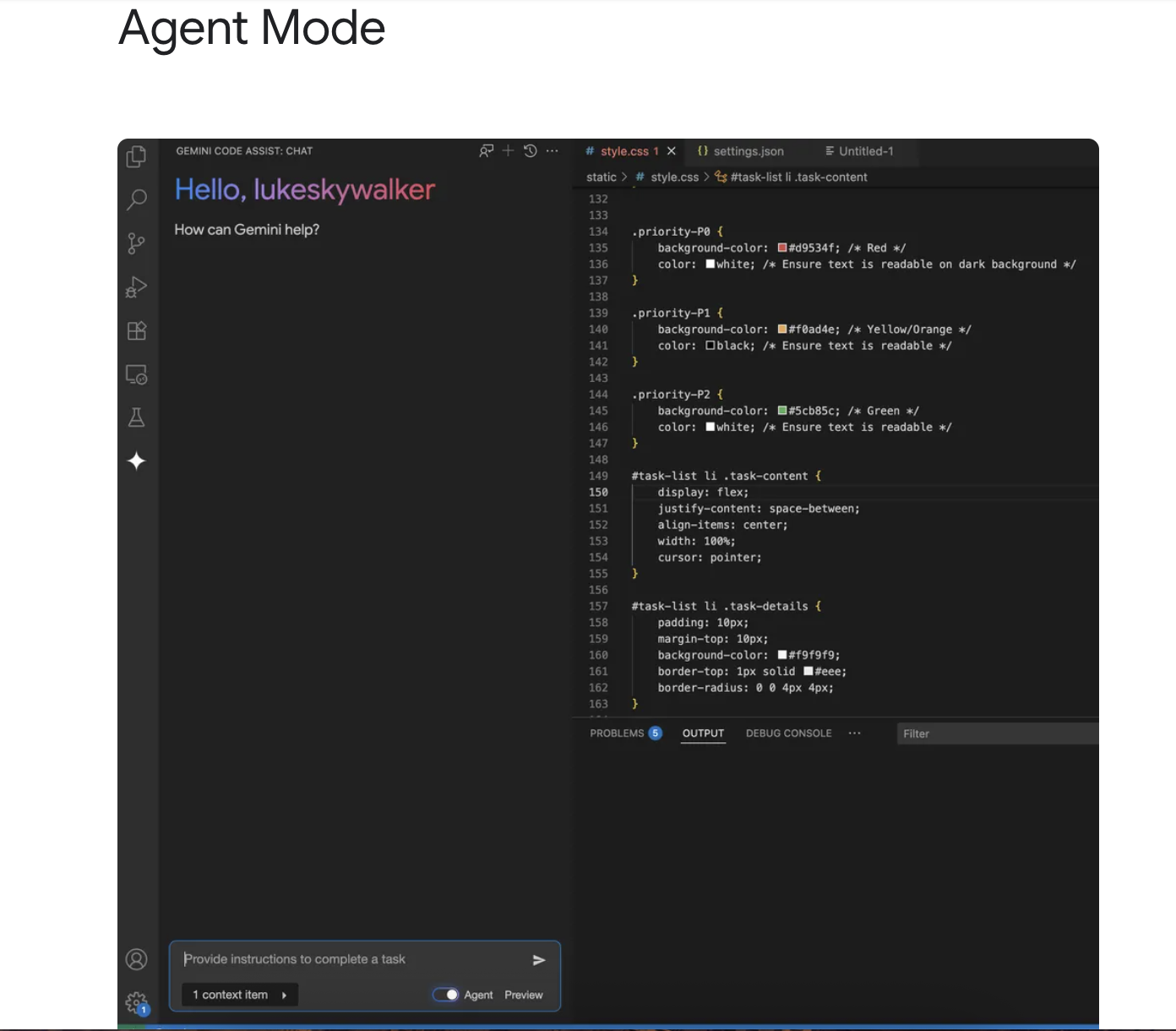

3. Agent mode

Agent mode lets Gemini carry out multi-step, structured tasks by connecting to workflows and APIs. It can handle everything from basic workflows (such as sending emails and organizing information) to more technical tasks (like data analysis and document processing) without requiring you to switch between multiple tools.

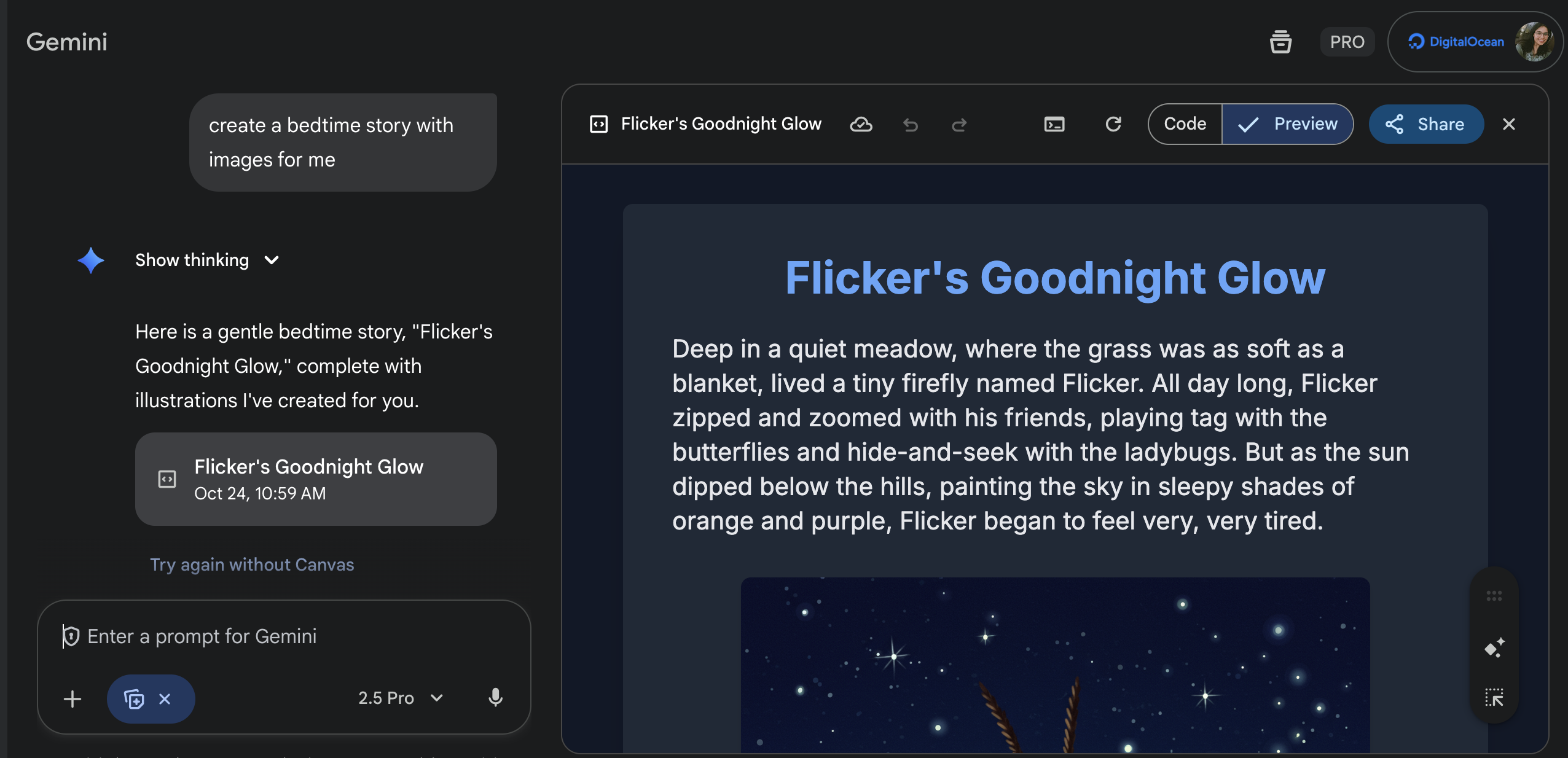

4. Canvas

Use Canvas to brainstorm ideas, create images, documents, and presentations, or write code in a dedicated side-by-side workspace. Unlike standard chat, where outputs appear inline with the conversation, Canvas opens your project in a separate panel, letting you preview the AI’s edits in real time while keeping your chat history. You can highlight specific sections for targeted revisions, adjust document length or reading level with one click, and iterate on the same project without losing earlier versions in a long scroll of messages.

5. Nano Banana

Gemini Nano Banana (also known as Gemini 2.5 Flash Image) is Google’s most efficient AI image model. It supports blending multiple images into a single scene, maintaining subject and character consistency across edits, and applying detailed changes, such as clothing, background, or style shifts, with simple natural-language prompts. The model is designed for accurate prompt following and creative flexibility. Nano Banana also lets users generate or remix visuals, ranging from realistic portraits to imaginative fantasy scenes, all within the Gemini app.

With the release of Gemini 3, Google has significantly extended its multimodal AI capabilities, and these enhancements also impact the image-generation and editing workflows supported by Nano Banana. According to Google, Gemini 3 delivers “state-of-the-art reasoning and multimodality” with the ability to analyze text, video, audio, and images in unified workflows.

Gemini Nano Banana offers features like:

- Precise, context-aware image editing and generation
- Multi-image blending for realistic visual composition
- Interpretation of detailed prompts with high visual and stylistic accuracy

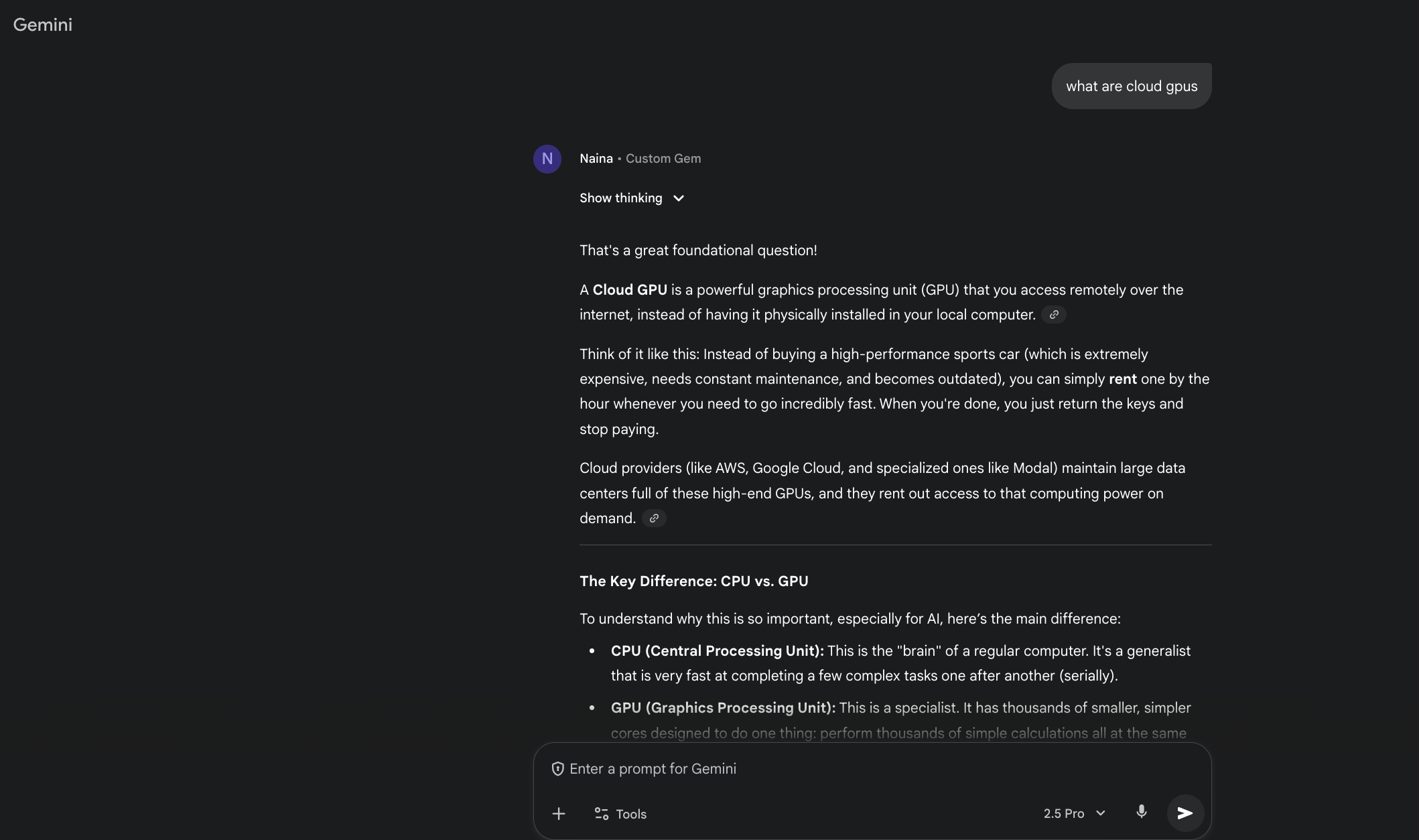

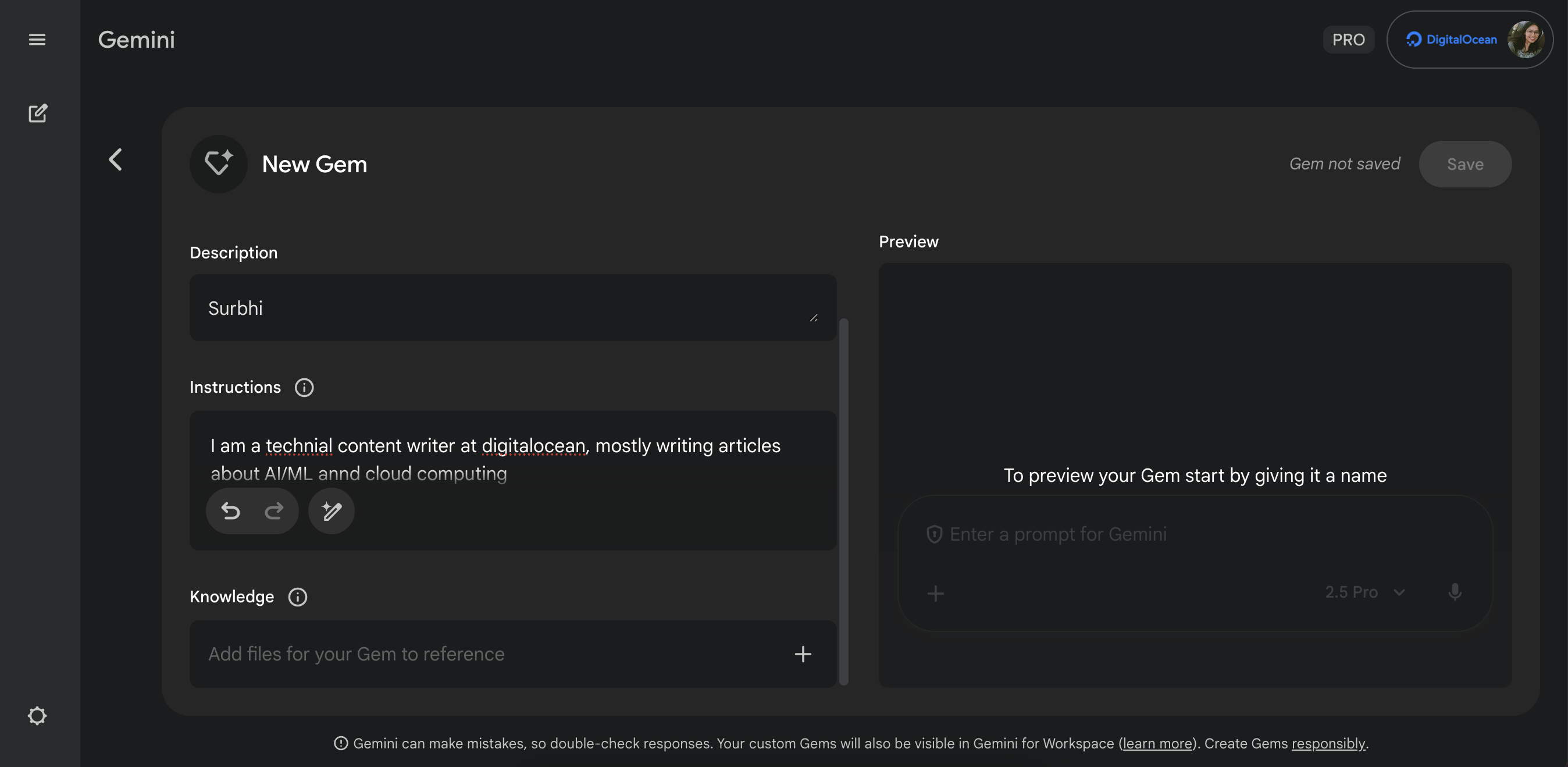

6. Gems

Gems are customizable versions of Gemini that you can create for specific tasks—like a personal writing coach, coding mentor, or brainstorming partner. If you’re familiar with OpenAI’s GPTs, Gems work on a similar principle: you define a Gem’s personality, expertise, and instructions, and it maintains that specialized behavior across conversations.

However, there are key differences. While GPTs can integrate external tools, APIs, and knowledge bases through actions, Gems focus on instruction-based customization and integrate directly with Google’s ecosystem, meaning your Gem can access your Gmail, Drive, or real-time web search without additional setup.

Public GPTs are listed in OpenAI’s GPT Store, where anyone can discover and use them. In contrast, Gems are primarily personal tools (although Google has indicated that sharing capabilities may be expanded in the future). Additionally, Gems are available to all Gemini Advanced subscribers, whereas creating custom GPTs requires a paid ChatGPT plan such as Plus.

Gemini models

Google offers several Gemini models, each optimized for specific use cases:

- Gemini 2.5 Pro: A top-tier reasoning model available through Google One AI Premium, offering enhanced reasoning and multimodal capabilities with a context window of up to 1 million tokens. The large context window allows you to input entire codebases, research papers, or hours of transcripts in a single session. This helps you analyze complex data relationships, debug multi-file projects, or extract insights from long documents without losing context.
- Gemini 2.5 Flash Image (Nano Banana): Google’s specialized image generation and editing model, which has gained attention as a potential “Photoshop alternative” for AI-powered visual work. It excels at maintaining visual consistency when editing photos of people and pets, letting you change outfits while preserving likenesses, blend multiple photos, apply artistic styles, or restore old images without expensive design software.
- Gemini 2.5 Flash-Lite: Google’s ultra-fast, cost-efficient model built for high-volume AI workloads. It delivers the core capabilities of the Gemini 2.5 family, including tool use (search, code execution), multimodal input (text, image, audio, video, PDF), and a 1 million-token context window, while being optimized for low latency and lower cost. With better performance than its predecessor (2.0 Flash-Lite) on coding, math, and science benchmarks, it’s ideal for tasks such as classification, translation, intelligent routing, and other high-throughput applications.
- Gemini 3 Pro: Google’s most advanced multimodal model to date. It supports text, image, video, audio, and PDF inputs, with a 1 million-token context window and a 64k-token output limit. It introduces a thinking level parameter to balance reasoning depth, latency, and cost.
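Context-window sizes are easier to reason about with a quick estimate of whether your material fits. The sketch below assumes a rough heuristic of about four characters per token for English text; that is not Gemini’s actual tokenizer, and counts for code or non-English text can differ substantially, so use the model API’s own token-counting method when precision matters. The function names are illustrative.

```python
import math

# Assumption: ~4 characters per token is a common rule of thumb for English prose.
# Real tokenizers differ, especially for source code and non-English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by CHARS_PER_TOKEN, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents: list, context_window: int) -> tuple:
    """Estimate total input tokens and check them against a model's context window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total, total <= context_window


if __name__ == "__main__":
    codebase = ["x" * 3_000_000]  # stand-in for roughly 3 MB of text
    print(fits_in_context(codebase, context_window=1_000_000))  # 1M-token window
    print(fits_in_context(codebase, context_window=32_000))     # 32k-token window
```

Under this heuristic, about 3 MB of text comes to roughly 750,000 tokens: within a 1 million-token window, but far beyond a 32,000-token window such as the one on Gemini’s free tier.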

Gemini pricing

Gemini is available through a free tier, a consumer subscription, and Google Workspace plans:

- Free tier with generous usage limits: 5 prompts per day with Gemini 2.5 Pro and a 32,000-token context window, up to 100 image generations or edits per day, 5 Deep Research reports per month, and up to 20 audio overviews.
- Gemini Advanced: $19.99/month, bundled with Google One AI Premium. It includes 100 daily prompts with a 1M-token context window, 1,000 daily image generations, NotebookLM Plus, integration across Gmail, Drive, Docs, Sheets, Slides, and Meet, 2 TB of storage, and Google Home Premium Standard.
- Google Workspace: included in Google Workspace plans starting at $7.20/user/month (billed annually).
- Enterprise: features are built into existing Google Workspace Business and Enterprise plans, offering enterprise-grade data security (customer data is never used for training without explicit consent), admin controls to manage or turn off Gemini features, and automatic application of existing Workspace data security and sovereignty controls.

What is ChatGPT?

ChatGPT is a conversational AI platform built on OpenAI’s models. Launched in November 2022, it gained a massive user base almost overnight. It is built on the GPT (Generative Pre-trained Transformer) architecture, which enables it to understand queries and generate human-like responses across a wide range of topics.

The latest model, GPT-5, is a unified multimodal system that handles text, images, and audio in real-time, offering faster reasoning, richer context understanding, and enhanced creative and analytical capabilities. Text-based conversations remain ChatGPT’s core strength, but users can now engage more effectively with ChatGPT through visual and audio inputs.

ChatGPT features

ChatGPT continues to evolve as a versatile AI assistant, integrating multimodal capabilities and creative tools that extend beyond text-based interactions. From generating detailed images and videos to interpreting complex documents and data, its expanding feature set supports a wide range of professional and creative workflows.

1. Sora 2

Sora 2 is OpenAI’s video generation model, available through the Sora app, which also serves as a feed for discovering and remixing videos. It generates realistic short videos, with synchronized audio, from text prompts and supports content remixing, storyboarding, and creative edits. With the cameo feature, users can insert themselves or others (with permission) into videos, producing highly personalized content.

2. Codex

Codex is ChatGPT’s software engineering assistant, designed for cloud-based collaboration and coding support. It can answer detailed questions about a codebase, run code snippets, debug errors, or even draft pull requests. For developers handling large projects, Codex acts like a virtual pair programmer, offering suggestions and optimizing workflow efficiency.

3. DALL-E 3

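DALL-E 3 is OpenAI’s AI image generation model and was integrated directly into ChatGPT (since March 2025, ChatGPT’s default image generation has used GPT-4o’s native image model). Compared with earlier versions, DALL-E 3 understands nuanced prompts and follows instructions much more closely.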

However, it still struggles with:

- Text rendering: it often produces spelling errors in images
- Negative prompts: asking it to exclude something often makes it fixate on that element

It can create or modify high-quality images from prompts, supporting in-painting (selecting and modifying specific areas of an existing image), style adjustments, and scene customization. Users can produce visuals for presentations, social media, or creative projects, turning abstract ideas into polished, professional-grade images.

4. Agent mode

In agent mode, ChatGPT executes complex, multi-step tasks autonomously. Unlike standard ChatGPT, which answers questions, agent mode actively engages with websites, clicking, typing, and navigating to complete real-world tasks, such as building financial models, comparing travel options, or creating executive dashboards. Users maintain control through permission requests and can view a live activity log of the AI’s actions.

In the official video from OpenAI, you can see how agent mode operates in real time. The demo begins with the user enabling agent mode and giving a task, such as planning a trip. The agent then navigates across websites, gathers and analyzes data, and even fills in forms, all within a secure, user-approved workflow. Throughout the process, it pauses at checkpoints for permission before logging in or submitting any data, giving the user transparency and control. Finally, the agent delivers a finished output, such as a presentation, spreadsheet, or summary, along with an activity log showing every action it performed.
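The checkpoint pattern in that demo (act on low-risk steps, pause for approval before sensitive ones, and record everything) can be illustrated with a small sketch. This is not OpenAI’s implementation or API: the `Step` and `AgentRun` classes and the set of “sensitive” action names are hypothetical, and the sketch only records actions instead of driving a real browser.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical action types that require explicit user approval before running.
SENSITIVE_ACTIONS = {"login", "submit_form", "purchase"}


@dataclass
class Step:
    action: str  # e.g. "open", "search", "submit_form"
    target: str  # what the action applies to


@dataclass
class AgentRun:
    approve: Callable[[Step], bool]  # asks the user; returns True to proceed
    log: List[str] = field(default_factory=list)  # activity log shown to the user

    def execute(self, plan: List[Step]) -> bool:
        """Run steps in order, pausing at sensitive ones; return True if every step ran."""
        for step in plan:
            if step.action in SENSITIVE_ACTIONS and not self.approve(step):
                self.log.append(f"stopped before {step.action} on {step.target}: not approved")
                return False
            # A real agent would perform the browser action here; this sketch only logs it.
            self.log.append(f"{step.action}: {step.target}")
        self.log.append("done")
        return True


if __name__ == "__main__":
    plan = [
        Step("open", "airline.example"),
        Step("search", "flights SFO to JFK"),
        Step("submit_form", "booking form"),
    ]
    run = AgentRun(approve=lambda step: False)  # the user declines every checkpoint
    print(run.execute(plan))
    print("\n".join(run.log))
```

Keeping the approval callback separate from the step logic means the same plan can run with an interactive prompt, an allowlist, or a test stub, while the log always records exactly where the run stopped.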

5. Whisper

Whisper is OpenAI’s speech recognition model, integrated into ChatGPT for voice conversations. It converts speech to text, supporting voice interactions and transcription tasks. Reported benchmark figures favor Whisper on accuracy: with a median word error rate (WER) of 8.06%, it is roughly twice as accurate as Google Speech-to-Text (16-20% WER) and Amazon Transcribe (18-22% WER).
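Word error rate counts the word-level edits needed to turn a model’s transcript into a reference transcript, normalized by the reference length: WER = (S + D + I) / N, where S, D, and I are substitutions, deletions, and insertions, and N is the number of words in the reference. A minimal sketch using the standard edit-distance dynamic program, assuming simple lowercase whitespace tokenization with no punctuation normalization (benchmark suites usually normalize text more carefully), looks like this:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution_cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,  # deletion
                dist[i][j - 1] + 1,  # insertion
                dist[i - 1][j - 1] + substitution_cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "the quick brown fox jumps over the lazy dog"
    hyp = "the quick brown fox jumped over a lazy dog"
    print(f"{word_error_rate(ref, hyp):.1%}")
```

Two substituted words out of nine gives a WER of about 22.2%. At an 8.06% WER, a transcript averages roughly 8 word errors per 100 reference words.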

6. GPTs

OpenAI’s GPTs let users build domain-specific assistants for tasks such as financial analysis, coding, or marketing. Creators can upload files, set instructions, and enable capabilities such as web browsing and code interpretation. Free users can explore and use public GPTs, but creating GPTs requires a paid plan, and paid plans also raise the limits on advanced features like deep research and agent mode.

Google’s Gemini Gems offer similar functionality, allowing users to design custom assistants with instructions and file uploads, integrated with Google Workspace tools. In essence, both platforms enable tailored AI workflows—GPTs focus on customization and integrations, while Gems emphasize productivity within Google’s ecosystem.

7. Atlas

Atlas, OpenAI’s AI-powered browser, embeds ChatGPT directly into your browsing experience, allowing it to see what you’re viewing and take action across the web. It can navigate websites, fill out forms, add items to carts, and automate multi-step workflows, such as researching products or compiling team briefs. With agent mode, Atlas handles complex tasks autonomously—opening tabs, reading documents, and completing actions—while you maintain oversight and control.

In OpenAI’s official video, Atlas is shown navigating websites, summarizing content, and requesting user approval before taking actions, illustrating how it merges conversational intelligence with browsing.

8. Deep research

Deep research enables you to conduct in-depth analyses in ChatGPT: you provide a complex research prompt, and it gathers, analyzes, and synthesizes web data, documents, and PDFs, then produces a detailed, cited report. According to OpenAI, it “conducts multi-step research on the internet for complex tasks” and “accomplishes in tens of minutes what would take a human many hours.”

In this official video, you’ll see the system clarify the prompt (asking follow-up questions), browse web pages, review documents and PDFs, build tables and charts, and finally produce a formatted report with citations, demonstrating how in-depth research moves beyond surface-level responses to deliver curated, traceable insights.

ChatGPT models

OpenAI has released several model generations, each more capable than the last:

- GPT-4.1: Released April 14, 2025, this model family represents a major leap in coding ability, instruction following, and long-context comprehension. The full GPT-4.1 model supports up to 1 million tokens of context and sets new benchmarks on developer-centric tasks (e.g., scoring 54.6% on SWE-bench Verified, a 21-point gain over GPT-4o).
- GPT-5: Released in August 2025, a unified model designed for advanced reasoning and faster response times. Its launch drew some controversy, with early users criticizing its tone as colder and less engaging than GPT-4o’s. OpenAI has since adjusted it based on user feedback, aiming for a warmer, more balanced personality and stable reasoning performance. GPT-5 now powers ChatGPT by default across tiers, combining responsiveness with deep contextual understanding.
- GPT-5 Mini: A compact, cost-efficient variant of GPT-5 built for lighter, high-volume workloads. It retains the core reasoning and instruction-following abilities of the flagship model but is optimized for faster responses and reduced API costs. With strong performance in summarization, classification, and conversational tasks, GPT-5 Mini is ideal for developers and teams seeking a balance of speed, quality, and affordability in production-scale applications.
- GPT-5 Nano: The smallest and fastest model in the GPT-5 lineup, designed for ultra-low latency and cost-sensitive scenarios. It powers use cases such as real-time chatbots, quick text generation, and large-scale automation, where responsiveness matters more than deep reasoning. Despite its lightweight architecture, GPT-5 Nano maintains compatibility with GPT-5’s safety and instruction-tuning frameworks, making it a reliable choice for streamlined AI deployments.

ChatGPT pricing

ChatGPT pricing follows a tiered subscription model:

- Free tier with limited features: limited access to deep research, memory, and context; lower message and upload limits; and slower image generation.
- ChatGPT Plus: $20/month for GPT-5 access with higher usage limits, such as 25 deep research reports and 40 agent mode messages per month, expanded messaging and uploads, and better image generation.
- ChatGPT Pro: $200/month for the highest usage limits, including effectively unlimited GPT-5 access, access to GPT-5 Pro, and priority access.
- Enterprise plans with dedicated support.

When to use Gemini vs ChatGPT

Both Gemini and ChatGPT now offer advanced multimodal AI capabilities, including the ability to handle text, images, audio, and even video. However, their design philosophies and ecosystems serve different needs. The guidance below outlines where each tool works best.

Gemini use cases

Gemini’s family of models supports a broad spectrum of applications across industries, ranging from reasoning and coding to image generation, data analysis, and workflow automation. With multimodal understanding and long-context processing, Gemini adapts to creative, technical, and enterprise environments alike.

For multimodal content analysis

Gemini 2.5 Pro and Gemini 2.5 Flash offer advanced cross-media reasoning across text, images, audio, video, and code within a single chat exchange. These models enable users to analyze complex datasets, for example, combining performance dashboards, screenshots, and customer feedback, to generate cohesive summaries and insights.

Gemini 2.5 Pro focuses on high-accuracy reasoning, long-context understanding (up to a million tokens), and deep analysis, while Gemini 2.5 Flash prioritizes speed and efficiency for real-time or large-scale use cases. Together, they deliver multimodal intelligence for research, product development, and enterprise analytics.

With the introduction of Gemini 3, Google extends these capabilities with stronger multimodal grounding, broader tool use, and more advanced reasoning controls such as configurable thinking levels for balancing depth and latency. Gemini 3 is optimized for enterprise-grade analysis across long documents, complex media, and multi-step workflows, building on the strengths of the 2.5 family while delivering higher accuracy, stability, and output quality.

For current information

Gemini is invaluable for fact-checking, market research, or tracking technology updates in real-time. For instance, if a product manager is researching evolving AI pricing models, Gemini can surface the latest vendor documentation. However, both Gemini and ChatGPT can still misinterpret or hallucinate information, so any AI-sourced insights should be cross-verified before publication or decision-making.

For Google Workspace integration

At DigitalOcean, we use Google Workspace tools daily, including Gmail, Docs, Sheets, and Meet. Gemini integrates natively across all of them, with basic use already included in Google Workspace plans. During meetings, Gemini summarizes notes, identifies key decisions, and suggests next-step actions directly in Docs. In Gmail, it drafts context-aware responses, and in Sheets, it automates data interpretation.

This embedded experience makes it a natural, AI-enabled extension of our workflow. ChatGPT can also connect with Google Workspace through tools like Zapier, Make, and browser extensions, enabling automated drafting, task management, and data analysis.

For accuracy

Gemini’s long context window (up to 1 million tokens on models such as Gemini 2.5 Pro and Gemini 3 Pro) allows it to process lengthy codebases, research papers, or policy documents while maintaining coherence. For data analysts, tools like NotebookLM help generate first-draft reports, risk assessments, or technical summaries, especially when working with structured datasets or internal documents. However, while its source-linking and grounded reasoning make it more reliable than many AI tools on the market, it’s not infallible. Like all AI systems, it can misinterpret or omit nuances, so human expertise and review remain essential before using its output for regulated, compliance, or high-stakes documentation.

ChatGPT use cases

ChatGPT’s conversational and analytical capabilities make it effective across various contexts, including creative, professional, and educational settings. Its integration with tools like image generation, code interpretation, and file analysis expands its role from a writing assistant to a multifunctional AI collaborator.

For creative and conversational tasks

ChatGPT (GPT-5 and GPT-4o) excels at generating natural, engaging, and contextually rich text. It’s ideal for content creation, brainstorming, and storytelling, from drafting blog posts and ad copy to designing onboarding materials. It’s best used as a starting point for ideation and drafting, with human review and refinement ensuring the final output aligns with your brand voice and creative goals.

For customization

ChatGPT enables users to create custom GPTs: domain-specific assistants configured for their individual workflows. For example, a developer could create a GPT that automates API documentation generation, and a marketer could build a GPT that surfaces competitor trends. These custom assistants can access uploaded files and configured tools, though direct API calls and complex integrations typically require the more advanced Assistants API. GPTs are great for low-code customization, while the Assistants API enables deeper, programmatic integration.

For team collaboration across skill levels

ChatGPT’s intuitive conversational interface makes it accessible for both non-technical team members and technical users. Codex, the cloud-based coding assistant, helps with understanding, refactoring, and generating code across large projects. This blend of accessibility and technical depth makes ChatGPT a versatile tool for teams working on shared projects or problem-solving tasks.

For global availability

Both ChatGPT and Gemini are widely available worldwide, although their coverage differs slightly. ChatGPT is officially supported in over 100 countries and continues expanding its multilingual capabilities for text, voice, image, and video through tools like Sora and Whisper. Gemini, meanwhile, is more tightly integrated within the Google ecosystem, offering broader availability in regions where Google services are dominant but limited access in countries with restricted Google infrastructure or data regulations.

Gemini vs ChatGPT FAQs

What is the difference between Gemini and ChatGPT?

Gemini is Google’s AI assistant, deeply integrated with Gmail, Drive, Calendar, and Search to work within your existing Google ecosystem. ChatGPT is OpenAI’s conversational AI platform, known for advanced reasoning capabilities, creative problem-solving, and tools like custom GPTs and the Atlas browser for web-based automation.

Which AI is more multimodal: Gemini or ChatGPT?

Gemini has the edge for multimodal tasks. Built from the ground up to handle text, images, video, and audio together, Gemini can analyze complex visual content, reason over video, and comprehend documents with mixed media. ChatGPT also accepts images, files, and voice input, but its native support for analyzing video within a conversation is more limited than Gemini’s.

How do Gemini and ChatGPT pricing plans compare?

Gemini and ChatGPT pricing for entry-level premium plans is nearly identical: Gemini Advanced costs $19.99 per month, while ChatGPT Plus costs $20 per month. However, Gemini offers more value for Google Workspace users (starting at $7.20/user/month, included in the Workspace plan) and provides a more generous free tier. ChatGPT also offers a Pro plan at $200 per month that unlocks the highest usage limits, advanced models such as GPT-5 Pro, expanded deep research and agent mode, and priority access. Google’s closest counterpart to that tier is its higher-priced Google AI Ultra plan rather than Gemini Advanced.

Which is better for enterprise: Gemini or ChatGPT?

The decision between Gemini and ChatGPT for your business ultimately depends on your existing infrastructure and requirements. Gemini integrates naturally with Google Workspace and offers Gems for customizable workflows within that ecosystem. ChatGPT, on the other hand, supports broader third-party integrations and GPTs that connect with external APIs and tools, making it more versatile for teams operating across multiple platforms.

Does Gemini outperform ChatGPT in reasoning tasks?

Gemini’s and ChatGPT’s reasoning capabilities differ by task type. Gemini excels in analytical reasoning, research tasks, and technical accuracy, leveraging real-time data access and providing source citations with NotebookLM. ChatGPT excels in creative reasoning, conversational problem-solving, and handling ambiguous requests. For structured analytical work, Gemini typically wins; for creative and conversational reasoning, ChatGPT has the advantage.

Which offers better memory context: Gemini or ChatGPT?

ChatGPT maintains both short-term and long-term memory, granting ongoing access to past interactions to adapt responses and provide more personalized assistance. Users have control to edit or delete saved information.

Gemini offers similar memory capabilities for Advanced subscribers, with user-controlled information management. Google is also testing “personal context” (referred to as “pcontext”), which would draw on insights from Gmail, Photos, Calendar, Search, and YouTube, potentially offering deeper personalization than ChatGPT’s more siloed approach.

Build with DigitalOcean’s Gradient™ Platform

DigitalOcean Gradient™ Platform makes it easier to build and deploy AI agents without managing complex infrastructure. Build custom, fully-managed agents backed by the world’s most powerful LLMs from Anthropic, DeepSeek, Meta, Mistral, and OpenAI. From customer-facing chatbots to complex, multi-agent workflows, integrate agentic AI with your application in hours with transparent, usage-based billing and no infrastructure management required.

Key features:

- Serverless inference with leading LLMs and simple API integration
- RAG workflows with knowledge bases for fine-tuned retrieval
- Function calling capabilities for real-time information access
- Multi-agent crews and agent routing for complex tasks
- Guardrails for content moderation and sensitive data detection
- Embeddable chatbot snippets for easy website integration
- Versioning and rollback capabilities for safe experimentation

Get started with DigitalOcean Gradient™ Platform for access to everything you need to build, run, and manage the next big thing.

About the author

Surbhi is a Technical Writer at DigitalOcean with over 5 years of expertise in cloud computing, artificial intelligence, and machine learning documentation. She blends her writing skills with technical knowledge to create accessible guides that help emerging technologists master complex concepts.
