Has your social media feed been flooded with celebrity selfies, quirky 3D figurines, and vintage saree trends? Much of this wave’s contributions come from Nano Banana, Google’s viral AI image generation and editing tool that’s part of the Gemini 2.5 Flash Image model. Between its launch on August 26 and September 9, 2025, Google’s Gemini app gained over 23 million new users, with people generating more than 500 million images in just two weeks.

This surge pushed Gemini to the number one spot on the App Store and Google Play. But is Nano Banana just fueling views, trends, or does it have broader, practical applications? In this article we answer all your questions about Nano Banana, its features, use cases, and limitations.

💡Key takeaways:

-

Nano Banana aims to maintain the consistency of a subject across edits and different styles.

-

Images generated from Nano Banana can be used for prototyping, entertainment, marketing, and education.

-

Limitations include limited language support, image resolution, and usage quotas.

What is Nano Banana?

Nano Banana is the codename for the latest update to Google’s Gemini 2.5 Flash image generation model. Users can create new visuals from text prompts, refine, remix, edit existing images, and merge multiple images into a single composition with natural language instructions.

Based on early tests and user reviews, Nano Banana performs well when editing existing images while preserving subject consistency and detail far better than many older tools. In direct comparisons with Midjourney, it has produced photorealistic scenes that more closely match prompt instructions for lighting, textures, and atmosphere.

Key features:

-

Maintain a subject’s look and feel across multiple images and contexts.

-

Use natural language to target specific elements in an image, like backgrounds, objects, colors, or textures.

-

Uses built-in knowledge of the real world for complex, multi-step instructions.

-

Starter apps in Google AI Studio make it easy to test product mockups, character consistency, and editing workflows.

-

Uses ‘SynthID watermarking’ so that all outputs include an invisible watermark for ethical traceability and authenticity.

💡Learn how to turn text prompts into animated videos by pairing Google’s Nano Banana image model with Stability AI’s image-to-video pipeline on a DigitalOcean GPU Droplet ⬇️

Nano Banana pricing

Nano Banana is available through both a free tier and a paid tier. Google provides free access via AI Studio and the Gemini API, giving users a daily quota of image generations for experimentation and light use. For higher-volume or production workloads, paid pricing applies.

The model is billed at $30 per 1,000,000 output tokens, with each image accounting for 1290 output tokens ($0.039 per image). Paid access is available through the Gemini API, AI Studio, and Vertex AI for enterprise use.

-

Feeling overwhelmed by the pricing and complexity of hyperscalers? Dive into our in‑depth comparison of AWS, Azure, and GCP to demystify how these giants differ in cost, services, and performance, and why exploring alternatives like DigitalOcean could be your smartest move.

-

Explore our detailed guide on the top 7 Kubernetes platforms that rival GKE. Compare features, pricing, and ease of use to find the best fit for your workloads.

How to use Nano Banana?

You can start with Nano Banana in two ways: Through Gemini interface, or Google’s AI Studio (suitable for experimenting purposes), integrating Nano Banana into applications via the Gemini API (for developers), or through Vertex AI (for enterprise).

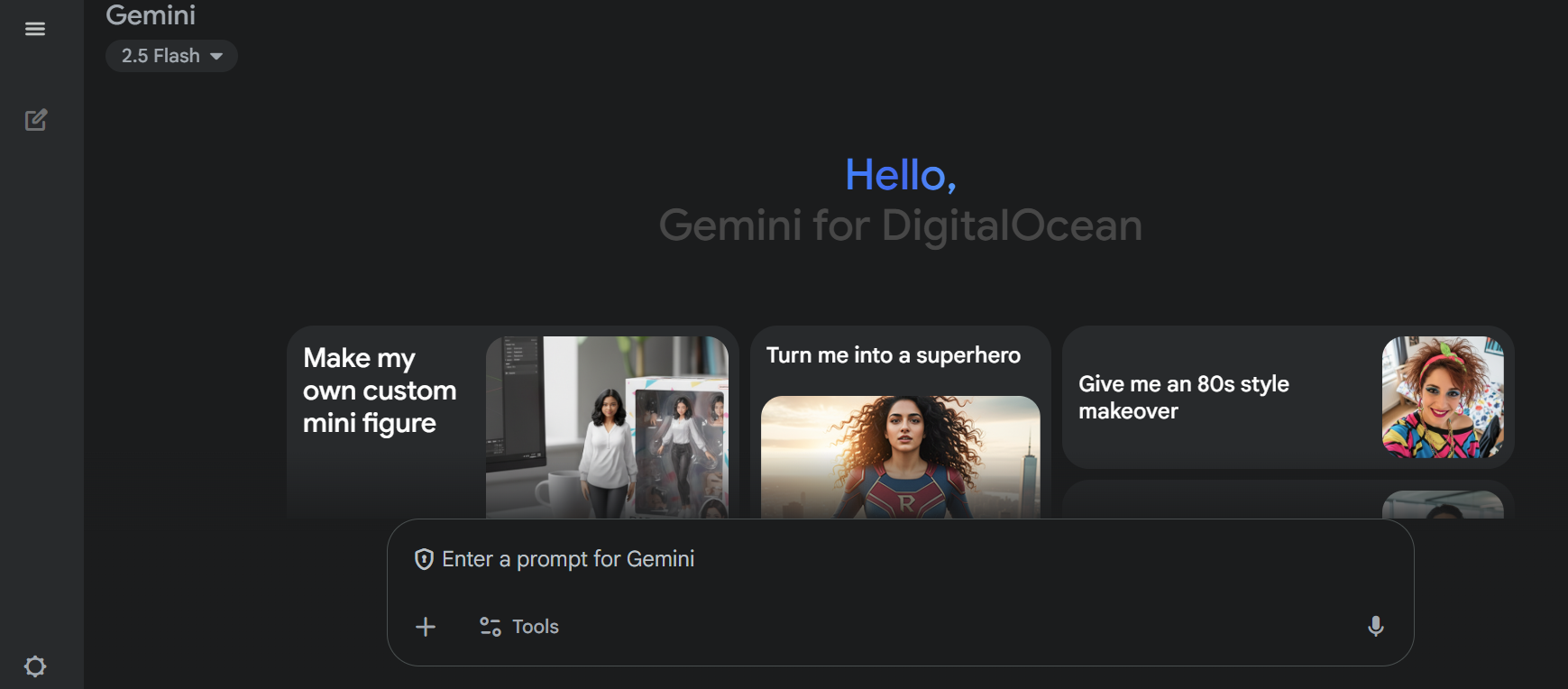

Nano Banana via Gemini

- Sign in to Google Gemini (available in mobile app also)

-

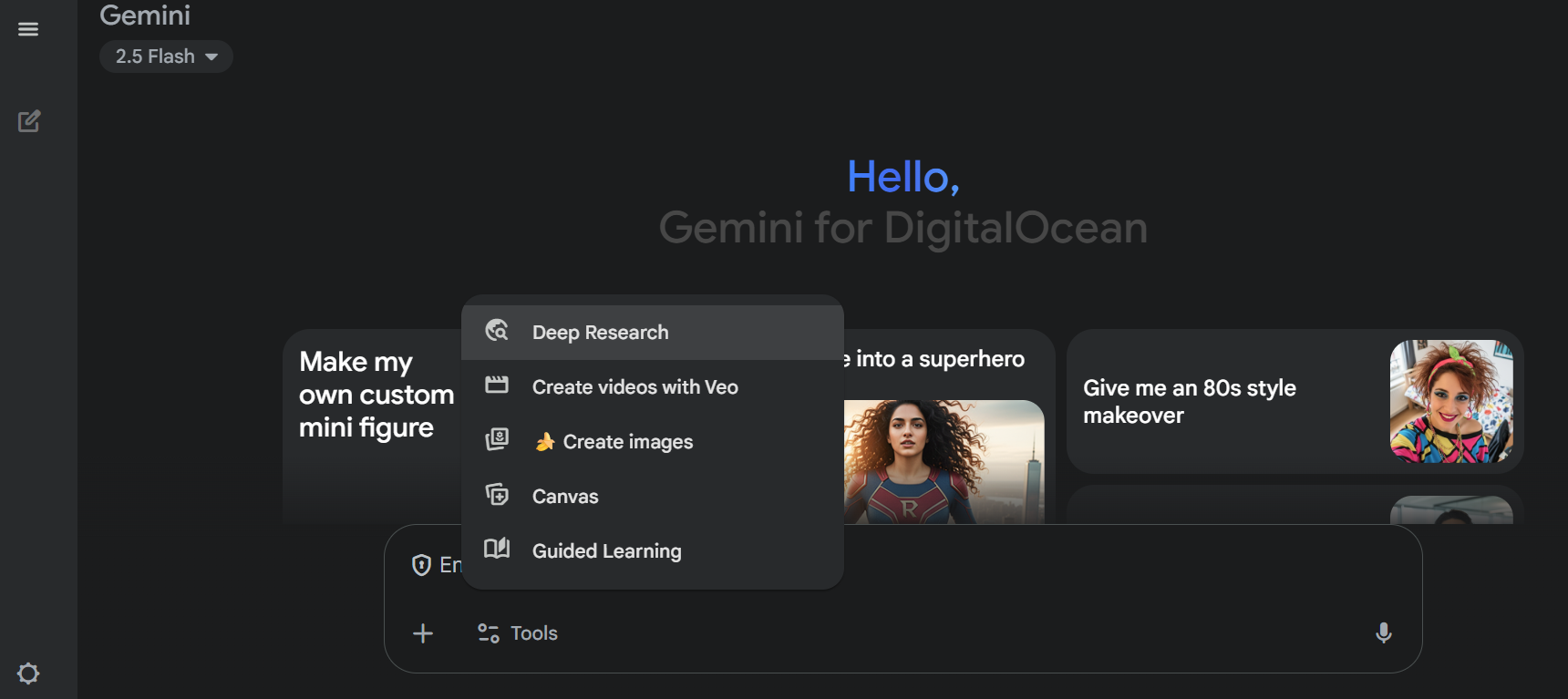

From the tools section, select “Nano Banana Create images”

-

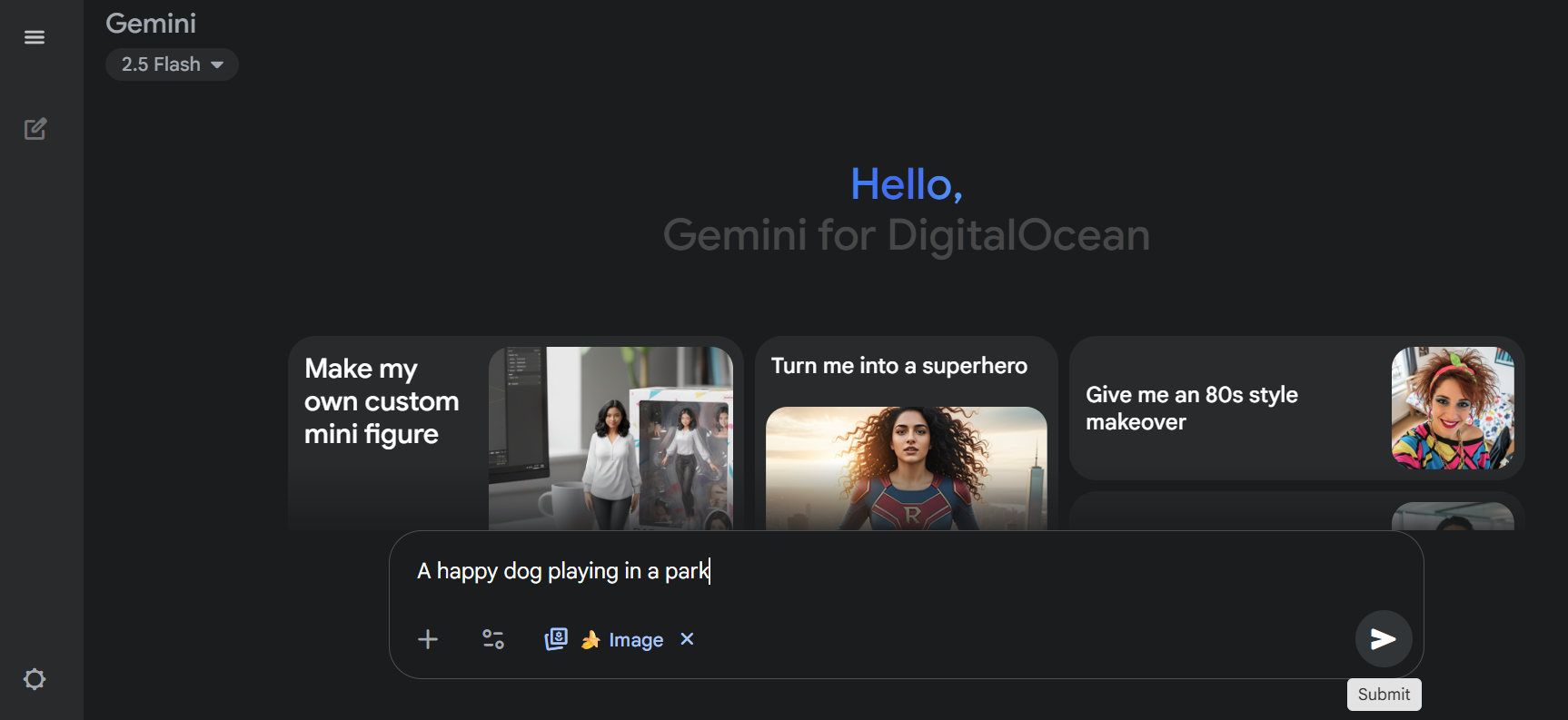

Write your prompt with details like subject, mood, lighting, and intended use (e.g., “social banner” or “product mockup”) and then click the “Submit” icon at the right end. To edit or merge multiple images use the “+” button, upload your images, and give detailed specific prompts.

-

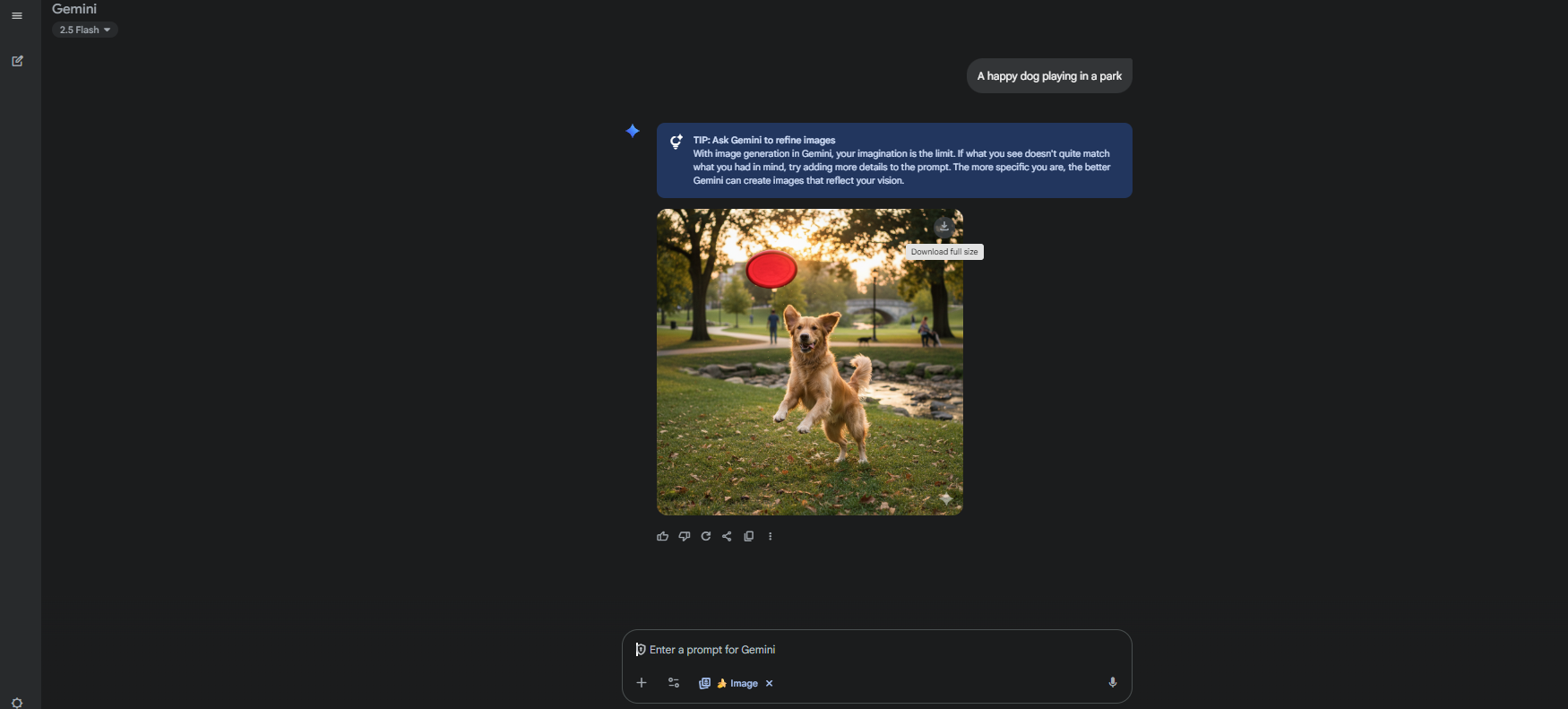

Wait for a few seconds as the model generates an image. Refine iteratively by adjusting prompts or re-uploading inputs to guide the model toward the desired result.

-

Download your image to use in your project.

Nano Banana via Google AI Studio

- Sign in to Google AI Studio.

-

Click “Try Nano Banana”

-

Enter a text prompt.

-

Click “Run” to see your image output and download the image if needed.

Nano Banana via Gemini API

-

Use Gemini API to set up authentication and install the Gemini SDK or use REST endpoints.

-

Choose the flash-image model models/gemini-2.5-flash-image-preview.

-

Send requests with either: Text-only prompts for text-to-image, or text + image for editing, multiple images + text for compositing.

-

Parse the image output (PNG up to 1024×1024 px).

Nano Banana prompting best practices

Structured prompts with specific factors like mood, layout, and lighting help you get the most out of Nano Banana. Good prompts help the model understand what you want, and how you want it. Below are some strategies that you can try for image generation. Google has shared image generation guidelines on how to craft effective prompts for Nano Banana, and we have summarized the most important points.

Note: Generative AI models can produce varied results for the same prompts depending on context, phrasing, or input data. Your outputs may differ from the samples shown here.

1. Use descriptive prompts to define context

Instead of throwing in a few disconnected words, writing a full description that paints a scene will yield better outputs. The model responds better to natural, descriptive language because it uses text embeddings to capture relationships between objects, lighting, and mood. Include purpose (e.g., social banner, print logo) so composition and legibility match the final use.

When you describe a setting in sentences, the model’s attention mechanisms can align tokens (e.g., “dog,” “mahogany table,” “soft ambient lighting”) with specific visual features during the denoising process in diffusion. This makes it easier to generate a coherent image where layout, shadows, and textures blend with each other naturally, instead of appearing as isolated or mismatched elements.

| Example prompt | Output | Description |

|---|---|---|

| A car going down a road |  |

Less effective prompt which just specifies the basics without details. |

| A classic red sports car driving along a coastal road at sunset along the hillside. A woman wearing a colorful scarf drives, and a happy dog sits in the passenger seat. The mood is joyful, cinematic, and warm, with soft golden light. |  |

More detailed prompt with elements, background, mood, and lighting. |

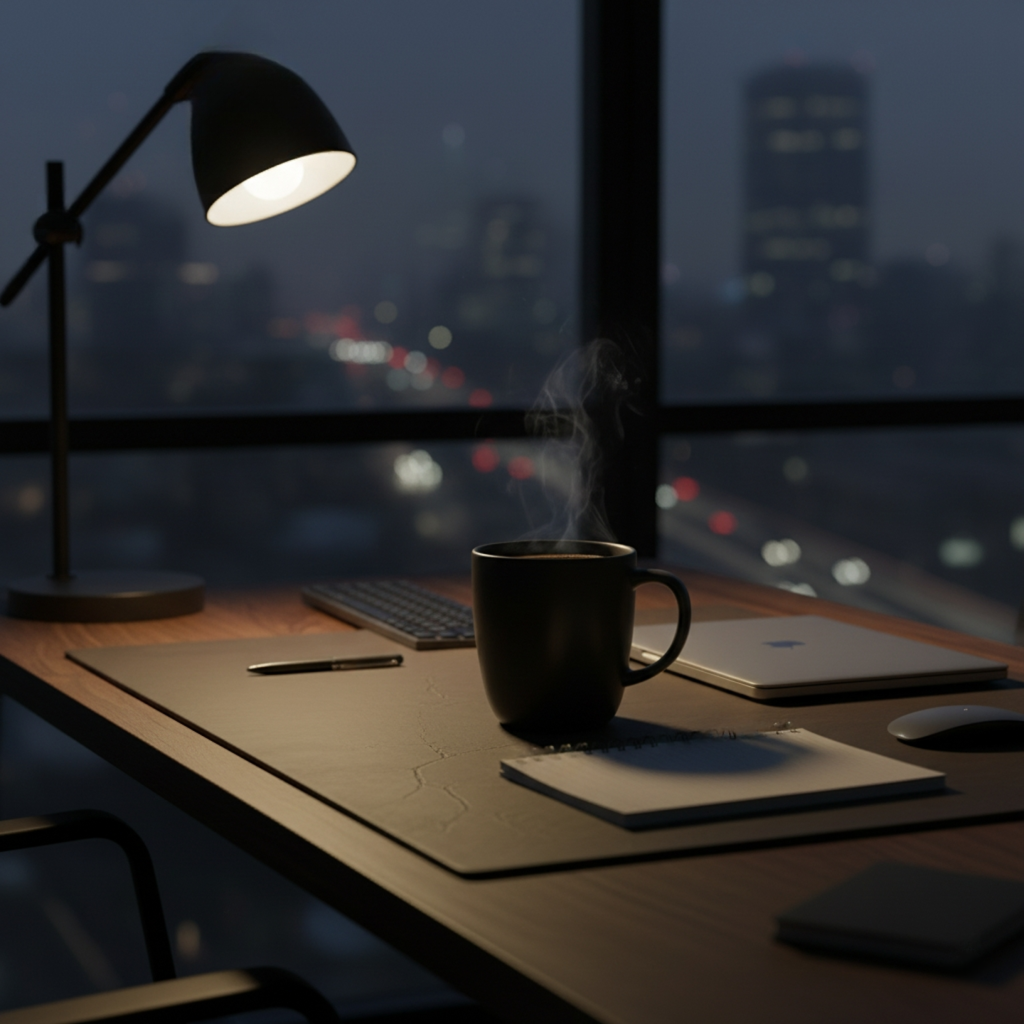

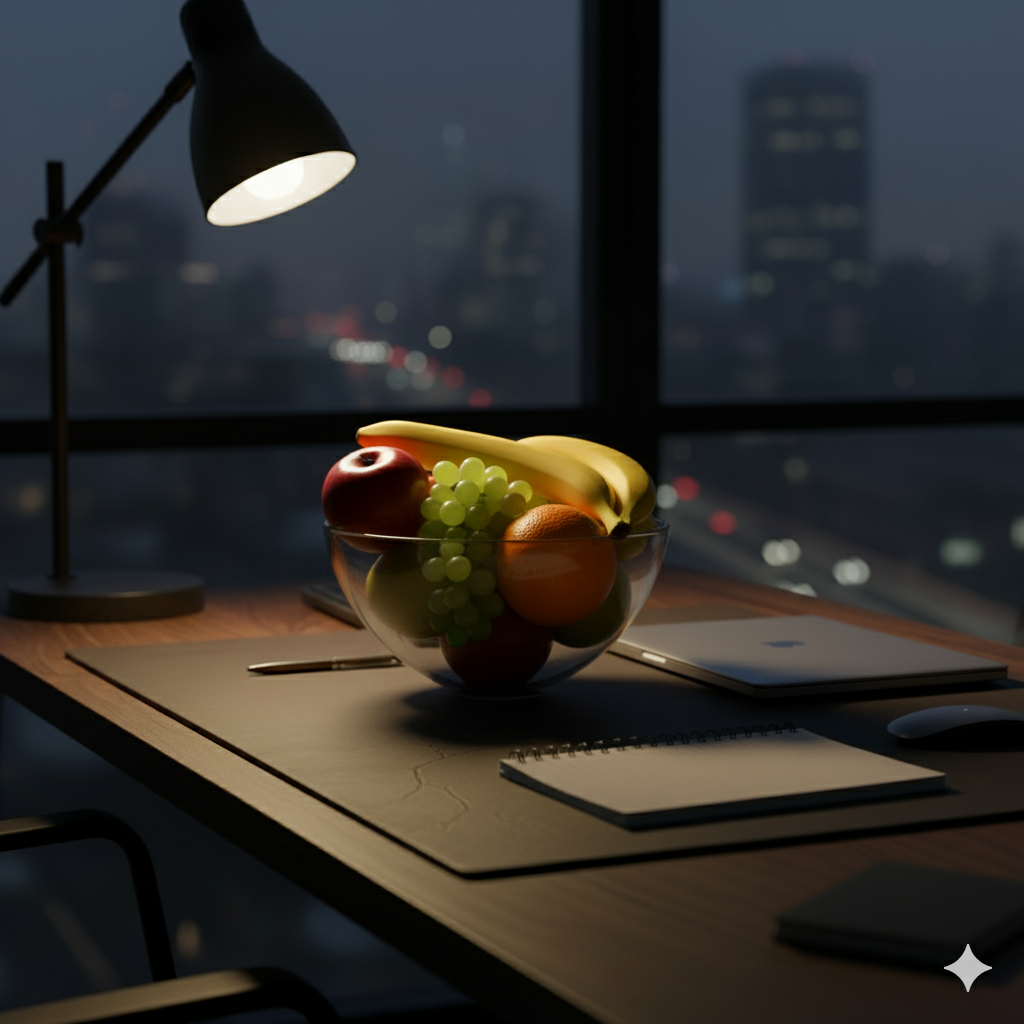

2. Provide multiple images

Whether you’re editing existing images or combining multiple inputs, giving the model visual references and clear instructions helps it stay consistent. Editing mode doesn’t regenerate the entire image. It identifies the region of interest from the prompt that you provide. For example if you want to edit “the sofa in a living room photo”, it only re-synthesizes pixels in that region. The rest of the image stays untouched, so edits look more natural and preserve the original context.

When you give multiple images as input you can merge elements or styles from two or more images into a single output guided by your prompt. For combining multiple images, include all relevant inputs so the model can align styles and composition.

| Example prompt | Output | Description |

|---|---|---|

Editing: Using the attached photo of my desk, replace the coffee mug with a healthy fruit bowl, keeping the same lighting and shadows. |

|

Edits a part of the image and preserves the rest. |

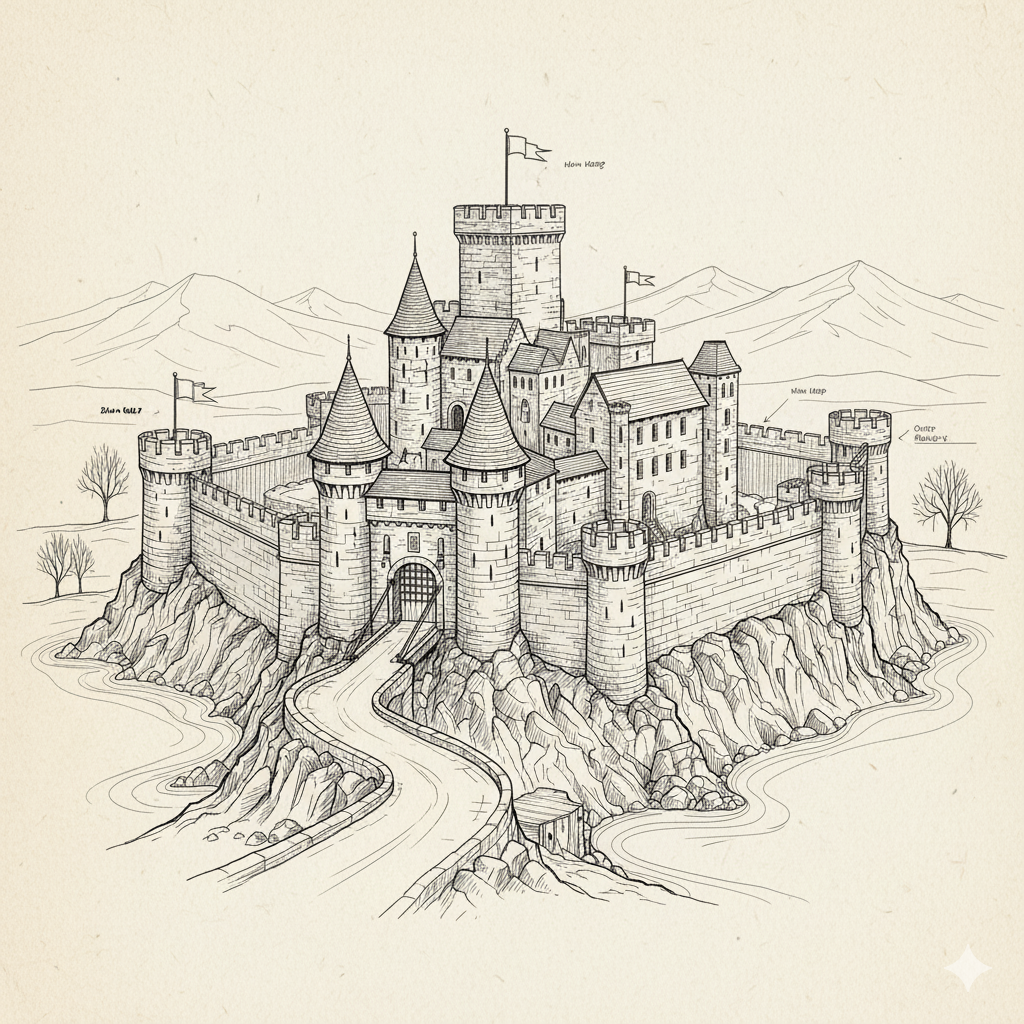

Multi-image inputs: Blend the provided sketch of a castle with the attached photo of a dense forest so the castle appears within the towering trees.   |

|

Combines both the images smoothly. |

-

Learn how to build a full-featured AI image prompt builder app in Python & Streamlit with user-friendly UI to craft, randomize, and export prompts for image generation workflows.

-

Explore how Fooocus simplifies the Stable Diffusion pipeline using premium tools like ControlNet, LoRAs, and built-in optimizations to generate sharper, more detailed AI images.

-

Discover how NVIDIA’s Sana model pushes limits with up to 4096×4096 resolution and sampling techniques for high-quality, fast text-to-image synthesis.

3. Avoid contradicting prompts and use semantic negative prompts

Conflicting details confuse the model and might lead to AI hallucinations. Make sure your description forms a consistent scene instead of mixing incompatible instructions. Google also recommends using “semantic negative prompts”. Instead of listing exclusions (like “no bikes”), describe the desired state in positive terms to avoid semantic conflicts.

| Example prompt | Output | Description |

|---|---|---|

| A place with no plants |  |

When there is only negation in the prompt the model focuses only on the negative aspect and generates an output. |

| A calm city with buildings, and roads without plants. |  |

The prompt still contains “without plants”, but the difference is that the emphasis is on the positive state of the output street (“calm city with buildings, and roads”) rather than describing only the negative objects. |

4. Take an iterative approach to conversational prompting

Prompting when treated as a process of refinement provides desired outputs. You can start simple, check the output, and then add details or adjust what isn’t working. This iterative, conversational approach helps guide the model step by step, rather than overwhelming it with conflicting or overly complex instructions in a single attempt.

| Example prompt | Output | Description |

|---|---|---|

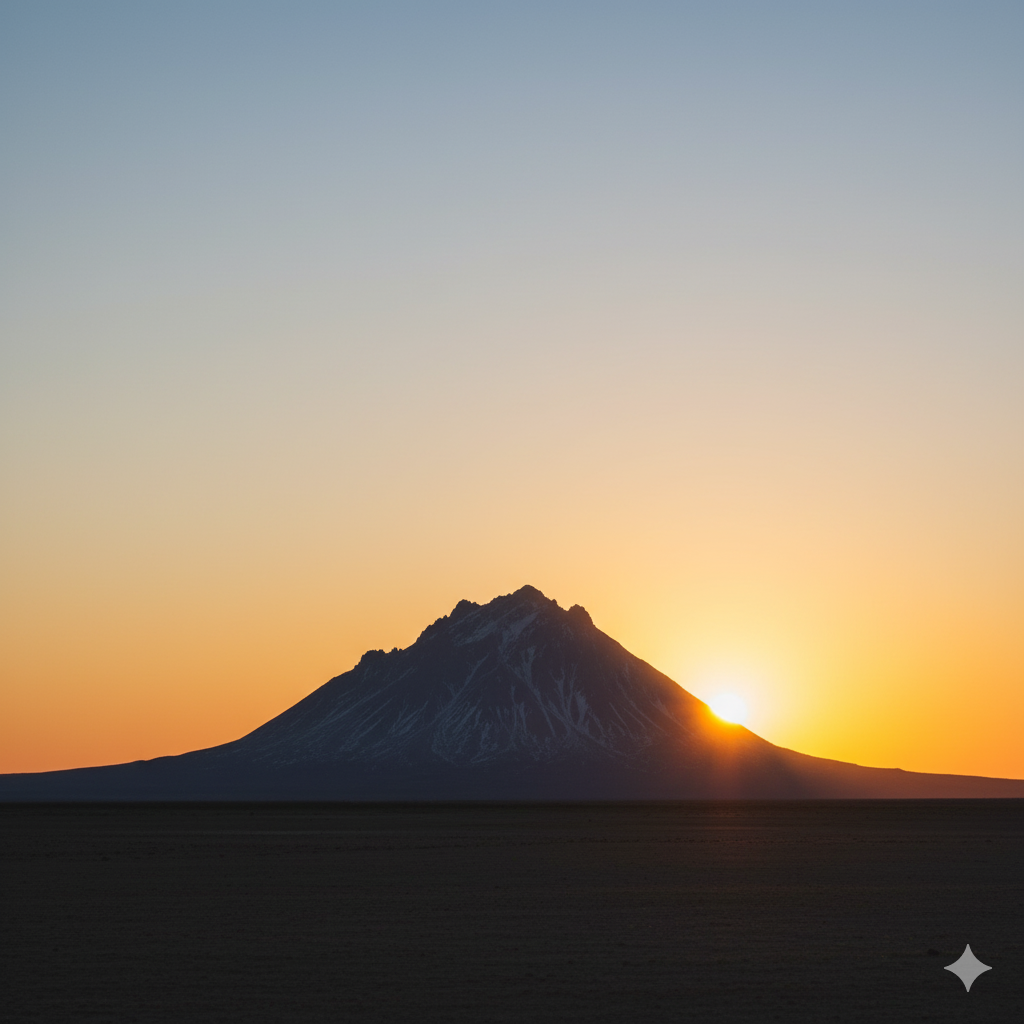

| A mountain with sunrise |  |

Basic prompt |

| Change this mountain to a snow-capped mountain peak glowing in the first light of sunrise, with rolling mist in the valley below. |  |

Adds atmosphere to the basic details |

| To this snow-capped mountain peak, add the first light of sunrise, with rolling mist in the valley below, pine forests stretching into the horizon, and a flock of birds silhouetted against the golden sky. |  |

Refined output with detail and motion. |

💡Whether you’re a beginner or a seasoned expert, our AI/ML articles help you learn, refine your knowledge, and stay ahead in the field.

Nano Banana use cases

Nano Banana’s blend of text-to-image generation, editing, and composition helps designers across industries. From retail to media, the model’s ability to merge inputs, preserve consistency, and apply targeted edits makes it a flexible tool for real-world workflows.

1. Prototyping

Interior designers can use Nano Banana to simulate different room layouts, wall colors, or lighting, while consumer product brands can test variations of sneakers, electronics, or packaging in multiple styles and textures. The model can also be used to generate 3D figurine concepts, which might help designers preview collectible models or character prototypes without physical molding.

For example, one user demonstrated how Nano Banana can prototype interior layouts by visualizing a bedroom with and without a curtain split to create a semi-separated office space.

2. Entertainment and media production

Writers, game designers, and content creators can integrate Nano Banana to visualize characters, environments, and storyboards. For instance, a game studio could generate concept art of a new fantasy world with consistent characters across multiple scenes. Paired with video models like Veo 3, Nano Banana can supply base images of characters, providing continuity across stitched clips.

Animator Billy Woodward shows how Nano Banana boosts character and scene consistency, creating an AI-driven animation from a single start frame with Seedance for motion.

-

Wan 2.1 displays open-source text-to-video and image-to-video foundational models for generation of realistic videos even with high motion scenes, running from small to large parameter sizes.

-

Learn how to deploy HunyuanVideo, a 13B-parameter open-source text-to-video model on DigitalOcean GPU Droplets, with complete setup steps, model architecture insights, and video generation tips.

3. Retail industry

E-commerce businesses need large catalogs of product imagery with a consistent brand look. Nano Banana can edit base photos to produce variations, changing colors, removing backgrounds, or placing items in different contexts without repeated photoshoots. For customers, online platforms can plug-in virtual try-on experiences, where user photos are combined with product images to show how clothing, accessories, or footwear might look in real life.

A brand designer used Nano Banana to test her own designs in different lights, and settings.

4. Brand campaigns

Ad teams can generate product images targeted to specific audiences. A skincare brand, for instance, might prompt Nano Banana to create lifestyle photos in different seasonal settings for marketing their products. The ability to control mood, composition, and text overlays makes it suitable for trying and testing campaign visuals at scale.

An AI creator uses Nano Banana for “Ads 2.0” to generate slogans, taglines, and visuals for 25+ brand-new apps.

💡 Looking for innovative ways to run your campaigns? Discover AI marketing tools that simplify content creation, ad optimization, and customer engagement.

Limitations of Nano Banana

While Nano Banana brings image generation and editing features, it also has limitations. Understanding these boundaries helps set realistic expectations for real-world use cases.

Limited language support

Nano Banana works best in English, Spanish (Mexico), Japanese, Simplified Chinese, and Hindi. Prompts in other languages may still function but often with reduced accuracy or consistency. This makes multilingual deployments less predictable, and industries targeting diverse audiences might need to validate outputs carefully.

Resolution constraints

Image outputs are limited to PNG files up to 1024 px. While this limit is suitable for digital displays and prototyping, this resolution is too small for high-quality print or large-scale visuals. Upscaling tools can help, but may introduce blurring or artifacts, which adds extra work for design teams.

Lack of production stability

The model is still in a preview phase, meaning it is experimental and subject to change. Features may be updated, removed, or behave inconsistently across sessions. For production use, this instability might pose risks to long-term reliability and makes Nano Banana better suited for testing and exploration at this stage.

Input support constraints

Nano Banana only works with text and image inputs, so media like audio or video is not supported. It cannot generate video directly, workflows that require video output need to integrate additional models or tools with Nano Banana.

Quotas and usage limits

Usage is capped by quotas, token counts, and rate limits. Large or frequent requests may hit these thresholds which might lead to delays or throttling. Developers need to plan around these constraints, splitting complex prompts into smaller chunks or monitoring quota usage to prevent workflow disruptions.

References

Nano Banana FAQs

Is Nano Banana free to use?

Yes, Nano Banana (Gemini 2.5 Flash Image) is available in AI Studio and Gemini API with a free tier, giving users a daily quota of image generations for experimentation and light use. For higher workloads or production deployments, paid pricing applies at about $0.039 per image.

What’s the difference between Imagen and Nano Banana?

Imagen and Nano Banana (Gemini 2.5 Flash Image) are both AI image generation models available through the Gemini, but they serve different purposes.

-

Imagen is Google’s production-ready text-to-image model. It is known for photorealism, sharp detail, typography, and artistic styles, and is suitable for tasks like brand visuals, product designs, or high-quality creative work. Pricing is usage-based and varies depending on the specific model used within Google Cloud’s Vertex AI platform.

-

Nano Banana is an update to Gemini AI image model. It is still in preview and focuses on flexibility and editing workflows. It supports multi-turn conversational edits, semantic editing refinements, and multi-image composition. Pricing follows a token model ($0.039 per image at 1024×1024 px).

What kinds of inputs does Nano Banana support?

Nano Banana currently supports text prompts and image inputs. You can use it for text-to-image generation, editing an existing image, or combining multiple images with a prompt. It does not support audio, video, or cross-media inputs at this time.

Can Nano Banana be used for commercial projects?

Yes, Nano Banana can be used for commercial projects through the Gemini API or Vertex AI. However, since it’s still in a preview phase, Google notes that features may change, and developers should test reliability before using it in production at scale.

Accelerate your AI projects with DigitalOcean Gradient™ AI Droplets

Unlock the power of GPUs for your AI and machine learning projects. DigitalOcean GPU Droplets offer on-demand access to high-performance computing resources, enabling developers, startups, and innovators to train models, process large datasets, and scale AI projects without complexity or upfront investments.

Key features:

-

Flexible configurations from single-GPU to 8-GPU setups

-

Pre-installed Python and Deep Learning software packages

-

High-performance local boot and scratch disks included

Sign up today and unlock the possibilities of GPU Droplets. For custom solutions, larger GPU allocations, or reserved instances, contact our sales team to learn how DigitalOcean can power your most demanding AI/ML workloads.

About the author

Sujatha R is a Technical Writer at DigitalOcean. She has over 10+ years of experience creating clear and engaging technical documentation, specializing in cloud computing, artificial intelligence, and machine learning. ✍️ She combines her technical expertise with a passion for technology that helps developers and tech enthusiasts uncover the cloud’s complexity.

- Table of contents

Get started for free

Sign up and get $200 in credit for your first 60 days with DigitalOcean.*

*This promotional offer applies to new accounts only.