NLP vs NLU: Key Differences and How They Work Together

By Jess Lulka

Content Marketing Manager


When you think of computers and language, programming might come to mind, where you use specific languages like Python, JavaScript, C++, or Java to write instructions that tell the system what to do and how to respond to commands. These programming languages provide the structured syntax and vocabulary needed to communicate with computers effectively.

But with AI, that’s changing. We’re in a new era where conversing with computers is much more human. You can now interact with computer systems by asking questions or posing search queries like you would a colleague or friend, and get a conversational response back.

The technologies behind these capabilities—natural language processing (NLP) and natural language understanding (NLU)—are subcategories of AI and machine learning, helping machines better understand language and interact with humans in a friendlier (and less technical) way. Let’s examine NLP vs. NLU, their commonalities and differences, common use cases, and considerations.

Key takeaways:

- Natural Language Processing (NLP) is the broad field of AI focused on how machines understand and generate human language, while Natural Language Understanding (NLU) is a subset of NLP dealing specifically with comprehension of meaning and intent.
- NLP covers tasks like tokenization, translation, and language generation, whereas NLU zeroes in on interpreting context, sentiment, and intent (for example, understanding what a user is really asking for, despite variations in phrasing).
- In practice, NLU is one component of an NLP system. A virtual assistant, for instance, uses NLU to interpret your question (what you mean) and other NLP components, such as natural language generation, to formulate a useful response. Both are essential and complementary in effective language-based AI applications.

What is natural language processing?

Natural language processing is a subfield of AI that helps computers process, analyze, and generate human language. It combines computational linguistics with machine learning (today, most often deep learning) to analyze text and spoken language. As models are trained on more data, they produce more refined responses. Its main use cases include text classification, semantic analysis, natural language generation, and translation.

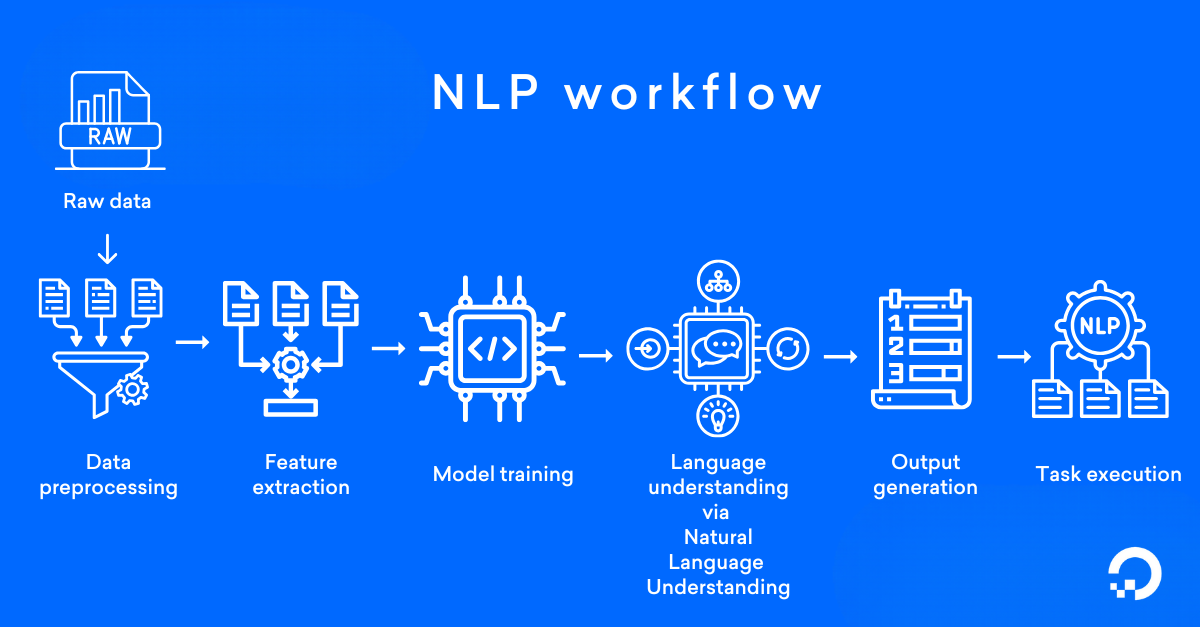

Implementing natural language processing requires data collection, data pre-processing, and model training. The pre-processing phase uses several techniques (tokenization, stemming, lemmatization, and stop word removal) to clean and normalize raw text so that it is useful for training.
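The pre-processing steps above can be sketched in plain Python. This is a minimal illustration built on stated assumptions. The stop word list, the suffix-stripping stemmer, and the lemma table are tiny hypothetical stand-ins. They are not the Porter stemmer or the curated resources that libraries like NLTK and spaCy provide.

```python
import re

# Hypothetical, deliberately tiny stop word list (real lists are much longer).
STOP_WORDS = {"a", "an", "the", "is", "are", "to", "of", "and", "on", "at", "in", "for"}

# Suffixes removed by this toy stemmer, checked in order. This is NOT the
# Porter algorithm; it only shows the idea of collapsing word variants.
SUFFIXES = ("ing", "ed", "es", "s")

# Hypothetical lemma table: lemmatization maps irregular forms to a
# dictionary form using a lexicon rather than suffix rules.
LEMMAS = {"went": "go", "mice": "mouse", "better": "good"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop very common words that carry little meaning on their own."""
    return [t for t in tokens if t not in STOP_WORDS]


def lemmatize(token: str) -> str:
    """Return the dictionary form if the lexicon knows the word."""
    return LEMMAS.get(token, token)


def stem(token: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least three letters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Tokenize, remove stop words, then lemmatize known words or stem the rest."""
    tokens = remove_stop_words(tokenize(text))
    return [lemmatize(t) if t in LEMMAS else stem(t) for t in tokens]


print(preprocess("The users are booking tickets for the concerts"))  # ['user', 'book', 'ticket', 'concert']
print(preprocess("The mice went home"))  # ['mouse', 'go', 'home']
```

Stemming is cheaper but cruder: this stemmer turns "news" into "new". That trade-off is why many pipelines prefer lemmatization when accuracy matters.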

Examples of natural language processing tools include spaCy, Hugging Face Transformers, the Natural Language Toolkit (NLTK), and IBM Watson.

What is natural language understanding?

Natural language understanding is a subgroup of natural language processing focused on enabling computers to comprehend human language as it is naturally spoken or written.

Unlike broader natural language processing, NLU specifically deals with the interpretation and meaning extraction aspects, using sophisticated algorithms to help machines grasp semantic nuances, identify contextual elements, recognize intent, and draw meaningful insights from unstructured text. This technology forms the foundation for systems that can truly interact with humans on their linguistic terms rather than requiring structured commands.

Tools that you can use for natural language understanding include Rasa NLU and Snips NLU. You can also use the general-purpose NLP tools mentioned above.

How does natural language understanding work?

NLU uses algorithms to help machines interpret language, derive meaning, identify context, and draw insights. To do this, it maps text or speech input onto a structured ontology: a data model of semantic and pragmatic definitions.

The ontology defines the properties of concepts and categories and the relationships between them, organizing them around a specific domain. With these structures, a system can recognize, for example, that “diamond” can be a card suit, a baseball term, or a jewel.
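The sketch below shows how domain structures can help pick the right sense of “diamond.” It scores each sense by how many of its related concepts appear in the surrounding sentence. This overlap heuristic is a simplified take on the Lesk algorithm, swapped in for illustration. The ontology entries and the `disambiguate` function are hypothetical. Production NLU systems typically rely on trained models rather than hand-built word lists.

```python
import re
from typing import Optional

# Hypothetical mini-ontology: each sense of "diamond" belongs to a domain
# (kept for readability, not used in scoring) and lists concepts that
# tend to appear near it.
ONTOLOGY = {
    "diamond": {
        "card_suit": {"domain": "card games",
                      "related": {"card", "cards", "deck", "poker", "hearts", "spades", "clubs", "ace"}},
        "baseball_field": {"domain": "sports",
                           "related": {"baseball", "pitcher", "infield", "bases", "inning", "batter"}},
        "jewel": {"domain": "jewelry",
                  "related": {"ring", "carat", "necklace", "gold", "engagement", "jeweler"}},
    }
}


def disambiguate(word: str, sentence: str) -> Optional[str]:
    """Return the sense whose related concepts overlap most with the sentence."""
    senses = ONTOLOGY.get(word)
    if senses is None:
        return None
    context = set(re.findall(r"[a-z]+", sentence.lower())) - {word}
    scores = {name: len(info["related"] & context) for name, info in senses.items()}
    best = max(scores, key=scores.get)
    # With no overlapping context at all, refuse to guess.
    return best if scores[best] > 0 else None


print(disambiguate("diamond", "The pitcher walked back across the diamond after the inning"))  # baseball_field
print(disambiguate("diamond", "She wore a diamond ring on a thin gold chain"))  # jewel
```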

With the structured ontology in place, NLU starts the intent and entity recognition process. Intent recognition identifies the user’s objective, often alongside their sentiment. Entity recognition singles out the entities (named or numeric) in a phrase or data set and then analyzes them for more information. To do this, NLU first breaks the language down into individual units called tokens, which are usually words or parts of words.

The NLU model then processes these tokens to determine their parts of speech and the intent behind them. It first identifies each word’s part of speech, then re-analyzes the word for alternative meanings and for how it fits in the sentence relative to the other words.

For example, if you typed “attend a concert at the Seattle Symphony on August 22nd” into an NLU-enabled search engine, the model would break it down as:

- Concert tickets [need]
- Available seats [intent]
- Seattle Symphony [location]
- August 22 [date]

This analysis identifies the need, location, intent, and date of the query. The search program would then produce search results for the Seattle Symphony website and show available seats for purchase for any concert on August 22nd.
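Here is a heavily simplified, rule-based version of that breakdown in Python. The venue list, the keyword rule, and the `parse_query` function are hypothetical. A real NLU engine would learn intents and entities from training data instead of relying on hand-written patterns like these.

```python
import re
from typing import Optional

# Hypothetical gazetteer of venue names the system already knows about.
KNOWN_VENUES = ["Seattle Symphony", "Benaroya Hall"]

# Matches dates such as "August 22" or "August 22nd".
MONTHS = "January|February|March|April|May|June|July|August|September|October|November|December"
DATE_PATTERN = re.compile(rf"\b({MONTHS})\s+(\d{{1,2}})(?:st|nd|rd|th)?\b")

# Hypothetical rule: if every keyword appears, assign this need and intent.
INTENT_RULES = [
    (("attend", "concert"), "concert tickets", "available seats"),
]


def parse_query(query: str) -> dict[str, Optional[str]]:
    """Extract need, intent, location, and date slots from a search query."""
    lowered = query.lower()
    result: dict[str, Optional[str]] = {"need": None, "intent": None, "location": None, "date": None}

    for keywords, need, intent in INTENT_RULES:
        if all(keyword in lowered for keyword in keywords):
            result["need"], result["intent"] = need, intent
            break

    for venue in KNOWN_VENUES:
        if venue.lower() in lowered:
            result["location"] = venue
            break

    match = DATE_PATTERN.search(query)
    if match:
        # Normalize "22nd" to "22" so downstream systems get a clean date.
        result["date"] = f"{match.group(1)} {int(match.group(2))}"

    return result


print(parse_query("attend a concert at the Seattle Symphony on August 22nd"))
# {'need': 'concert tickets', 'intent': 'available seats', 'location': 'Seattle Symphony', 'date': 'August 22'}
```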

NLP, NLU, and NLG at a glance

Natural language processing and natural language understanding have a strong overlap—they are both subsets of AI that deal with processing and comprehending human language. Both rely on machine learning algorithms and data training to complete their main objectives.

Where the two diverge is what they specifically do to help machines work with language.

NLP makes language readable for machines and processes the initial data with tokenization, entity recognition, syntactic analysis, and parsing. NLU facilitates language comprehension with text categorization, sentiment analysis, semantic parsing, and intent analysis. Here’s how the two compare side-by-side:

| Feature | Natural Language Processing | Natural Language Understanding |
|---|---|---|
| Focus | Language data processing and analysis for fundamental language processing | Language input interpretation for human-like language comprehension |
| Input | Text or speech data | Text or speech data |
| Output | Structured language data (tokens, tags, parse trees) | Interpreted meaning (intents, entities, sentiment) |
| Techniques and Processing | Rule-based text generation, parsing, tokenization, and parts-of-speech tagging | Advanced language comprehension, word dependency identification, intent analysis, and sentiment identification |
| Use Cases | Text analysis, language translation, and smart assistants | Sentiment analysis and speech recognition |
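The division in the table can be made more concrete with a toy sentiment analyzer split into two steps. The first is a processing step (tokenization). The second is an understanding step that scores polarity, with simple negation handling. The word scores and negator list are hypothetical placeholders. Lexicon-based tools such as NLTK’s VADER analyzer use far larger, carefully tuned lexicons and more rules.

```python
import re

# Hypothetical sentiment lexicon: positive scores for positive words,
# negative scores for negative ones.
LEXICON = {"great": 2, "love": 2, "good": 1, "slow": -1, "bad": -2, "terrible": -3}
NEGATORS = {"not", "never", "no", "isn't", "don't", "wasn't"}


def tokenize(text: str) -> list[str]:
    """Processing step: turn raw text into lowercase tokens (apostrophes kept)."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text: str) -> str:
    """Understanding step: sum word scores, flipping the next scored word after a negator."""
    score, negate = 0, False
    for token in tokenize(text):
        if token in NEGATORS:
            negate = True
        elif token in LEXICON:
            score += -LEXICON[token] if negate else LEXICON[token]
            negate = False
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The checkout was not bad at all"))  # positive
print(sentiment("Support isn't good and the app is slow"))  # negative
```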

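Even with different objectives, NLP and NLU work together to help machines process and understand text- or voice-based inputs. NLP first converts the raw input into structured data such as tokens and parse trees, and NLU then interprets the meaning and intent behind it.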

Once this process completes, the system uses natural language generation (NLG) to craft a response that sounds more human-like or conversational. To do this, NLG models run through content analysis, data understanding, document structuring, sentence aggregation, grammatical structuring, and language presentation to return a response that makes sense to a human user in the system’s desired tone.
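A template-based generator is the simplest way to see several of these stages in action. The sketch below is a hypothetical example and does not reflect how most production NLG works today, since modern systems often use large language models. It selects the facts worth mentioning, aggregates several seating sections into one phrase, and realizes a grammatical sentence with correct singular and plural forms. The slot names and seat data are assumptions for illustration.

```python
def join_phrases(items: list[str]) -> str:
    """Aggregate items into one readable phrase: 'a', 'a and b', 'a, b and c'."""
    if len(items) == 1:
        return items[0]
    return ", ".join(items[:-1]) + " and " + items[-1]


def generate_response(slots: dict[str, str], seats: dict[str, int]) -> str:
    """Turn structured slots plus looked-up seat counts into a reply."""
    venue, date = slots["location"], slots["date"]

    # Content selection: only sections that actually have seats are worth mentioning.
    open_sections = sorted(name for name, count in seats.items() if count > 0)
    if not open_sections:
        return f"Sorry, there are no seats left at {venue} on {date}."

    # Aggregation: combine sections into one phrase instead of one sentence each.
    total = sum(seats[name] for name in open_sections)
    section_phrase = join_phrases(open_sections)

    # Realization: fill a template with grammatically agreeing words.
    seat_word = "seat" if total == 1 else "seats"
    section_word = "section" if len(open_sections) == 1 else "sections"
    return (f"Good news: {venue} has {total} {seat_word} available on {date} "
            f"in the {section_phrase} {section_word}.")


slots = {"location": "Seattle Symphony", "date": "August 22"}
print(generate_response(slots, {"orchestra": 12, "balcony": 4, "box": 0}))
# Good news: Seattle Symphony has 16 seats available on August 22 in the balcony and orchestra sections.
```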

Use cases for NLP and NLU

NLP and NLU often work together in a range of AI productivity tools to digest human language and gain information about user needs and intent. Because natural language processing simply focuses on what was said, it requires NLU to help the system understand what was meant and provide a better grasp of human-based language.

The most common use cases for these two technologies are:

Chatbots

Chatbots are a big part of customer service. They allow you to interact with brands to get support or complete certain tasks, such as scheduling a meeting, requesting a product demonstration, or retrieving account information and documents. NLP allows chatbots to scan prompts, ascertain specific keywords, and then provide a previously programmed response (via a decision tree or “If This Then That” programming).
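The keyword-and-rules approach described above fits in a few lines of code. The rules, responses, and `keyword_bot` function are hypothetical. The point is that the bot only recognizes the exact words it was programmed for.

```python
import re

# Hypothetical rules checked in order, like branches of a decision tree:
# if every keyword is present, send the pre-written response.
RULES = [
    (("schedule", "meeting"), "Sure. Which day works best for your meeting?"),
    (("demo",), "I can set up a product demonstration. What's your email address?"),
    (("invoice",), "You can download invoices from the Billing page."),
]
FALLBACK = "Sorry, I didn't understand. Please choose one of: meetings, demos, invoices."


def keyword_bot(message: str) -> str:
    """Return the first rule's response whose keywords all appear in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keywords, response in RULES:
        if all(keyword in words for keyword in keywords):
            return response
    return FALLBACK


print(keyword_bot("Can I schedule a meeting for Tuesday?"))  # matches the first rule
print(keyword_bot("Could we set up a call?"))  # same goal, but falls back
```

The second message asks for the same thing as the first. Because it shares none of the programmed keywords, the bot falls back to a menu. That brittleness is what NLU-based intent recognition aims to reduce.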

Chatbots have evolved and now use NLU for better intent recognition and a more human-like customer experience. Instead of having a user select a topic from a menu or knowledge base, the chatbot can accept free-form prompts. The NLU model can then figure out what you are asking, discern any sentiment or emotion, and act accordingly. It also understands slang and casual conversation much better than keyword-based NLP, which makes interactions feel more natural. Some chatbots can also recall past conversations or user preferences and incorporate them into later responses.
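One simple way to approximate intent recognition is to compare a message against example phrasings of each intent, pick the closest match, and fall back when nothing is similar enough. The sketch below uses bag-of-words cosine similarity as a stand-in. The intents, example utterances, and 0.3 threshold are hypothetical. Real NLU frameworks such as Rasa typically train classifiers, often on learned embeddings. That training is what lets them cope with slang and paraphrases that share no words with the examples, which this sketch cannot do.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical example utterances for each intent.
TRAINING = {
    "schedule_meeting": ["schedule a meeting", "can we set up a call", "book some time to talk"],
    "request_demo": ["show me a demo", "i want to see the product in action", "product walkthrough please"],
    "get_invoice": ["where is my invoice", "download my bill", "i need last month's receipt"],
}


def vectorize(text: str) -> Counter:
    """Represent text as a bag of lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify_intent(message: str, threshold: float = 0.3) -> tuple[Optional[str], float]:
    """Return (best intent, score), or (None, score) if no example is close enough."""
    query = vectorize(message)
    best_intent, best_score = None, 0.0
    for intent, examples in TRAINING.items():
        for example in examples:
            score = cosine(query, vectorize(example))
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        return None, best_score
    return best_intent, best_score


print(classify_intent("Could we set up a quick call tomorrow?"))  # schedule_meeting, score about 0.72
print(classify_intent("What's the weather like?"))  # None, best score about 0.18
```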

Voice assistants

Voice assistants are another prime example of NLP and NLU working together. NLP figures out what is said, and NLU deciphers any specific tone of voice, sentiment, or additional context. All of this is done in seconds, and then NLG formulates and provides a response.

Challenges and errors of NLP and NLU

As much as NLP and NLU models have evolved over time, understanding human language still presents challenges. These models are limited in how well they can handle the complexities of language, and they are only as capable as their training data. The main challenges for natural language applications include:

Ambiguity in human language

Even with lots of data and model training, NLP and NLU will still face challenges with human language comprehension. This is due to words and sentences having multiple meanings, the use of idioms and figurative language, and cultural and social influences on word choice, grammar, and syntax.

Researchers and developers must also account for the evolution of language as new word uses, slang, and algospeak appear over time. You could theoretically address this through extensive model training. However, that would require substantial and diverse training data drawn from an extremely wide variety of language communities, and such data is not always available.

False positives and data inaccuracies

In NLP and NLU, false positives are cases where the system assigns a label or category that does not apply. Examples include tagging a word as the wrong kind of entity or flagging harmless text as sensitive information. A system might incorrectly identify “Apple” as a company name in the phrase “Apple pie is my favorite dessert,” thinking the user is talking about the company and not the fruit.

Such errors lead to inaccurate data and are often tied to out-of-vocabulary phrases, poor generalization about specific words or topics, and other system limitations. To address this issue and improve accuracy and reliability, you can use probabilistic models (which allow for uncertainty quantification), confidence scores, threshold tuning, and ensemble methods.
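Confidence scores and threshold tuning can be illustrated with a small labeled validation set. The predictions, confidence values, and candidate thresholds in this sketch are all made up for illustration. Recall is measured only over the correct predictions the model made. That is a simplification, since a full evaluation would also count entities the model missed entirely. The idea is to discard low-confidence predictions and pick the cutoff with the best balance of precision and recall (F1).

```python
from typing import NamedTuple


class Prediction(NamedTuple):
    text: str
    label: str
    confidence: float
    correct: bool  # would come from human labels in a real validation set


# Hypothetical entity predictions on a small labeled validation set.
VALIDATION = [
    Prediction("Apple", "ORG", 0.55, False),      # "Apple pie is my favorite dessert"
    Prediction("Apple", "ORG", 0.97, True),       # "Apple released a new phone"
    Prediction("Seattle", "LOC", 0.93, True),
    Prediction("Amazon", "ORG", 0.62, False),     # "the Amazon rainforest"
    Prediction("Jess", "PERSON", 0.88, True),
    Prediction("August", "PERSON", 0.40, False),  # the month, not a name
]


def filter_predictions(preds: list[Prediction], threshold: float) -> list[Prediction]:
    """Keep only predictions whose confidence meets the threshold."""
    return [p for p in preds if p.confidence >= threshold]


def precision_recall(preds: list[Prediction], threshold: float) -> tuple[float, float]:
    """Precision of kept predictions, and the share of correct predictions kept."""
    kept = filter_predictions(preds, threshold)
    true_positives = sum(p.correct for p in kept)
    total_correct = sum(p.correct for p in preds)
    precision = true_positives / len(kept) if kept else 1.0
    recall = true_positives / total_correct if total_correct else 0.0
    return precision, recall


def f1_at(preds: list[Prediction], threshold: float) -> float:
    """Harmonic mean of precision and recall at a given threshold."""
    precision, recall = precision_recall(preds, threshold)
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def tune_threshold(preds: list[Prediction], candidates: list[float]) -> float:
    """Choose the candidate threshold with the highest F1 score."""
    return max(candidates, key=lambda t: f1_at(preds, t))


best = tune_threshold(VALIDATION, [0.3, 0.5, 0.6, 0.7, 0.9])
print(best)  # 0.7
print([p.text for p in filter_predictions(VALIDATION, best)])  # ['Apple', 'Seattle', 'Jess']
```

Raising the threshold removes the low-confidence “Apple pie” false positive, at the risk of dropping some correct entities. In practice, you would tune the cutoff on held-out data that reflects your real traffic.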

Resources

- Optimizing Natural Language Processing Models Using Backtracking Algorithms: A Systematic Approach
- How To Perform Sentiment Analysis in Python 3 Using the Natural Language Toolkit (NLTK)

NLP vs. NLU FAQs

What is the main difference between NLP and NLU?

NLP (Natural Language Processing) is the broader field that encompasses all computational techniques for processing human language, including text analysis, language generation, and speech recognition. NLU (Natural Language Understanding) is a specialized subset of NLP that focuses specifically on comprehending the meaning, intent, and context behind human language inputs.

How do NLP and NLU work together in AI applications?

NLP handles the technical processing of language data (tokenization, parsing, and syntactic analysis), while NLU interprets the semantic meaning and user intent from that processed data. Together, they enable AI systems not just to process text mechanically but to understand what users actually want, making applications like chatbots and virtual assistants more effective and contextually aware.

What are common applications where NLP and NLU are used together?

Voice assistants like Siri and Alexa use NLP to convert speech to text and NLU to understand user commands and provide relevant responses. Customer service chatbots employ both technologies to parse customer inquiries (NLP) and determine the appropriate support action or response (NLU), creating more natural and helpful interactions.

Why is understanding the distinction important for businesses implementing AI?

Understanding the difference helps businesses choose the right technology for their specific needs: simple text processing might only require NLP tools, while applications requiring intent recognition need robust NLU capabilities. This knowledge also helps in evaluating AI vendors and solutions, ensuring businesses invest in technology that can actually understand customer needs rather than just processing text superficially.

Build with DigitalOcean’s Gradient Platform

DigitalOcean Gradient Platform makes it easier to build and deploy AI agents without managing complex infrastructure. Build custom, fully-managed agents backed by the world’s most powerful LLMs from Anthropic, DeepSeek, Meta, Mistral, and OpenAI. From customer-facing chatbots to complex, multi-agent workflows, integrate agentic AI with your application in hours with transparent, usage-based billing and no infrastructure management required.

Key features:

- Serverless inference with leading LLMs and simple API integration
- RAG workflows with knowledge bases for fine-tuned retrieval
- Function calling capabilities for real-time information access
- Multi-agent crews and agent routing for complex tasks
- Guardrails for content moderation and sensitive data detection
- Embeddable chatbot snippets for easy website integration
- Versioning and rollback capabilities for safe experimentation

Get started with DigitalOcean Gradient Platform for access to everything you need to build, run, and manage the next big thing.

About the author

Jess Lulka is a Content Marketing Manager at DigitalOcean. She has over 10 years of B2B technical content experience and has written about observability, data centers, IoT, server virtualization, and design engineering. Before DigitalOcean, she worked at Chronosphere, Informa TechTarget, and Digital Engineering. She is based in Seattle and enjoys pub trivia, travel, and reading.
