Note: Pricing and features are accurate as of September 12, 2025, and are subject to change.

Behind every AI project, whether it’s a chatbot answering user queries, an image generator creating lifelike art, or a recommendation engine predicting your next favorite song, there’s a graphics processing unit (GPU) working at scale. These processors perform the massively parallel computations needed to train and run models with billions of parameters, providing the raw computational power to process large datasets and execute complex algorithms.

But GPUs like NVIDIA’s H100 or AMD’s MI300X can be costly and scarce, and setting up dedicated, networked bare-metal GPU servers involves high capital costs and complex infrastructure management. These considerations put ownership out of reach for many teams. That’s why GPU rental platforms have become an attractive option: they offer more flexibility and on-demand access to high-performance hardware. Read on to explore how these platforms work, what they cost, and how to choose the right setup for your AI projects.

💡Key takeaways:

- Owning enterprise-grade GPUs can be impractical due to their high purchase cost and infrastructure overhead. GPU rental services address this challenge by making advanced compute resources available on a flexible, pay-as-you-go basis, removing the need for upfront investment.

- Platforms like DigitalOcean, Jarvis Labs, SaladCloud, Runpod, Hyperstack, Vast.ai, and Qubrid AI rent GPUs on demand for training, fine-tuning, or running AI models at scale.

- Rental GPU pricing depends on hardware type, usage model, and platform design.

What is GPU rental?

GPU rental is the process of accessing high-performance GPUs through cloud providers (centralized data centers offering virtualized GPU resources) or hosting providers (platforms that aggregate GPUs from data centers or individuals) on a pay-as-you-go or subscription basis, instead of purchasing and maintaining the hardware yourself.

In this model, GPU resources are allocated virtually so that developers, researchers, and organizations can run computationally intensive tasks, like training large language models (LLMs) or running deep learning inference. These instances are provisioned on demand and integrated with supporting infrastructure such as CPU cores, memory, storage, and networking. Depending on the platform, users can:

- Choose GPU types (e.g., NVIDIA H100, A100, RTX 4090, or legacy cards like V100 and T4).

- Scale by renting multiple GPUs for distributed training or high-throughput inference.

- Pay flexibly via hourly, usage-based, or marketplace-driven pricing models.

💡Working on an innovative AI or ML project? DigitalOcean GPU Droplets offer scalable computing power on demand, perfect for training models, processing large datasets, and handling complex neural networks.

Spin up a GPU Droplet today and experience the future of AI infrastructure without the complexity or large upfront investments.

Factors to consider while choosing GPUs for rental

When renting GPUs for AI or high-performance computing projects, you need to balance technical performance, cost, and workload requirements. The right GPU depends on your model size, precision requirements, and how it integrates with your infrastructure.

1. GPU architecture

Different GPU architectures are optimized for specific workloads. For example, NVIDIA Hopper (H100) and Ampere (A100) GPUs include Tensor Cores for FP16/BF16 mixed-precision training (Hopper adds FP8 support) and offer high memory bandwidth, making them suitable for training LLMs. In contrast, consumer-grade GPUs like the RTX 5090 can be more cost-effective for smaller fine-tuning or inference tasks. Check FLOPS (floating-point operations per second), CUDA core count, and Tensor Core support to make sure the GPU matches your workload.
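As a minimal sketch of that check, the function below maps an NVIDIA GPU’s CUDA compute capability to the Tensor Core precisions it supports. The thresholds are a simplified assumption drawn from NVIDIA’s architecture generations (Volta 7.0, Turing 7.5, Ampere 8.0/8.6, Ada Lovelace 8.9, Hopper 9.0) and do not model newer formats such as Blackwell’s FP4; confirm against NVIDIA’s documentation for your exact card. On a rented NVIDIA instance with PyTorch installed, `torch.cuda.get_device_capability()` returns the `(major, minor)` tuple to pass in.

```python
def tensor_core_precisions(major: int, minor: int) -> set[str]:
    """Simplified map from CUDA compute capability to Tensor Core precisions."""
    cc = (major, minor)
    precisions = set()
    if cc >= (7, 0):   # Volta: first-generation Tensor Cores (FP16)
        precisions.add("fp16")
    if cc >= (7, 5):   # Turing: adds INT8 inference
        precisions.add("int8")
    if cc >= (8, 0):   # Ampere: adds BF16 and TF32
        precisions.update({"bf16", "tf32"})
    if cc >= (8, 9):   # Ada Lovelace and Hopper: add FP8
        precisions.add("fp8")
    return precisions


if __name__ == "__main__":
    # Compute capabilities of a few commonly rented cards.
    gpus = {"T4": (7, 5), "A100": (8, 0), "L40S": (8, 9), "H100": (9, 0)}
    for name, cc in gpus.items():
        print(name, sorted(tensor_core_precisions(*cc)))
```

If your training recipe depends on FP8, this kind of check rules out an A100 before you pay for it.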

2. Memory capacity

VRAM size determines whether your model fits on a single GPU or requires model parallelism. For instance, an 80GB H100 can hold the FP16 weights (about 2 bytes per parameter) of a model with tens of billions of parameters, while a 16GB T4 quickly runs out of memory for anything beyond small-scale inference. Memory bandwidth also affects data transfer speed; GPUs with higher bandwidth (e.g., the A100 80GB, with about 2 TB/s) can reduce training bottlenecks.
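A back-of-the-envelope calculation makes this concrete: model weights need roughly parameter count × bytes per parameter, and full fine-tuning with the Adam optimizer in mixed precision is commonly estimated at about 16 bytes per parameter before activations. The sketch below encodes those rules of thumb; the 20% headroom reserved for activations, KV cache, and CUDA context is an assumption, and real usage varies with batch size, sequence length, and framework.

```python
# Approximate bytes per parameter for model weights at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1, "int4": 0.5}


def weights_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def full_finetune_gb(num_params: float) -> float:
    """Rule of thumb for mixed-precision Adam: ~16 bytes/param
    (FP16 weights + FP16 grads + FP32 master weights + two FP32 moments),
    excluding activations."""
    return num_params * 16 / 1e9


def fits(required_gb: float, vram_gb: float, headroom: float = 0.2) -> bool:
    """Check against usable VRAM after reserving an assumed 20% headroom."""
    return required_gb <= vram_gb * (1 - headroom)


if __name__ == "__main__":
    params = 7e9  # a 7B-parameter model
    need = weights_gb(params, "fp16")
    print(f"FP16 weights: {need:.1f} GB")
    print("Fits on 80 GB H100:", fits(need, 80))
    print("Fits on 16 GB T4:  ", fits(need, 16))
    print(f"Full fine-tune:    {full_finetune_gb(params):.0f} GB")
```

Under these assumptions, a 7B model’s FP16 weights (about 14 GB) fit comfortably on an 80 GB H100 but exceed the usable budget of a 16 GB T4, and full fine-tuning the same model (about 112 GB) outgrows a single H100, which is why PEFT methods or multi-GPU setups are common.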

3. Cost optimization

GPU rental platforms offer on-demand, spot/marketplace, or committed-use pricing. If your workload is experimental and can tolerate interruptions, spot pricing may be cost-efficient; if it is predictable, reserved instances can reduce long-term costs.
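One way to decide between on-demand and committed pricing is to compute the break-even utilization. A commitment bills every hour of the term whether or not the GPU is busy, while on-demand bills only the hours you use, so the commitment wins once utilization exceeds committed rate ÷ on-demand rate. The sketch below assumes a 12-month term of 8,760 hours, and the $3.00 and $2.00 rates are hypothetical placeholders, not any provider’s prices.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year


def break_even_utilization(on_demand_rate: float, committed_rate: float) -> float:
    """Fraction of the term a GPU must be busy for a commitment to cost less."""
    return committed_rate / on_demand_rate


def yearly_costs(on_demand_rate: float, committed_rate: float, utilization: float) -> dict:
    """Compare one GPU's yearly cost under each model at a utilization between 0 and 1."""
    used_hours = HOURS_PER_YEAR * utilization
    return {
        "on_demand": on_demand_rate * used_hours,      # billed only for hours used
        "committed": committed_rate * HOURS_PER_YEAR,  # billed for every hour of the term
    }


if __name__ == "__main__":
    on_demand, committed = 3.00, 2.00  # hypothetical $/GPU/hour
    print(f"Break-even utilization: {break_even_utilization(on_demand, committed):.0%}")
    for u in (0.3, 0.6, 0.9):
        costs = yearly_costs(on_demand, committed, u)
        cheaper = min(costs, key=costs.get)
        print(f"{u:.0%} busy: on-demand ${costs['on_demand']:,.0f}, "
              f"committed ${costs['committed']:,.0f} -> {cheaper} is cheaper")
```

With these placeholder rates, paying on demand is cheaper below about 67% utilization, and the commitment saves money above it.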

💡

- 7 Strategies for effective GPU cost optimization: Actionable tactics for reducing GPU expenses through smart usage patterns and infrastructure choices.

- Want to fine-tune LLMs affordably? Use DigitalOcean GPU Droplets with PEFT techniques like LoRA/QLoRA, quantization, and other cost-saving strategies.

4. Scalability

Large AI models require distributed training across multiple GPUs. Platforms like DigitalOcean provide GPU Droplets in multi-GPU configurations for parallelized training with frameworks like PyTorch Distributed Data Parallel (DDP) or DeepSpeed. For training massive models, like a 400B+ parameter LLM, make sure your setup includes high-bandwidth interconnects such as NVLink or InfiniBand, which are more common on HPC-focused providers.
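To see why interconnect bandwidth matters, consider gradient synchronization. In data-parallel training such as DDP, every GPU exchanges gradients each step, commonly via a ring all-reduce in which each GPU sends and receives roughly 2(N-1)/N times the gradient size. The sketch below estimates that time for a given link bandwidth; it ignores latency, topology, and overlap with computation, and the bandwidth figures are placeholders, not measurements of any provider’s hardware.

```python
def ring_allreduce_bytes(gradient_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends (and receives) during one ring all-reduce."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    return 2 * (num_gpus - 1) / num_gpus * gradient_bytes


def sync_seconds(num_params: float, num_gpus: int, link_gb_per_s: float,
                 bytes_per_grad: int = 2) -> float:
    """Idealized per-step gradient sync time; ignores latency and compute overlap."""
    gradient_bytes = num_params * bytes_per_grad  # 2 bytes per FP16/BF16 gradient
    return ring_allreduce_bytes(gradient_bytes, num_gpus) / (link_gb_per_s * 1e9)


if __name__ == "__main__":
    # Placeholder per-GPU link bandwidths in GB/s, chosen only to show the scale.
    for label, bw in [("slower link", 25), ("faster link", 400)]:
        t = sync_seconds(7e9, num_gpus=8, link_gb_per_s=bw)
        print(f"7B params, 8 GPUs, {label} ({bw} GB/s): ~{t:.2f} s per step")
```

Because the synchronized volume grows with model size, a faster link cuts this per-step overhead proportionally. In practice, DDP overlaps gradient communication with the backward pass, so real overhead is usually lower than this estimate, but the scaling trend is the same.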

5. Software ecosystem

Check whether the rented GPU environment includes AI/ML-ready images with preinstalled CUDA, cuDNN, PyTorch, or TensorFlow. If your workload needs specialized libraries, like TensorRT for inference or Ray for distributed computing, make sure the platform supports them without manual installation overhead. For example, DigitalOcean GPU Droplets ship with NVIDIA drivers and preconfigured AI/ML-ready images, which saves setup time.
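A quick way to verify an image before starting a long job is to check for the driver tooling and the libraries you expect. The sketch below uses only the Python standard library; the package list is an example to adjust for your workload, and on AMD GPUs you would look for `rocm-smi` instead of `nvidia-smi`.

```python
import importlib.util
import shutil

# Example packages to look for; edit this list for your own workload.
PACKAGES = ["torch", "tensorflow", "tensorrt", "ray"]


def check_environment(packages=PACKAGES) -> dict[str, bool]:
    """Report whether the NVIDIA driver CLI and each Python package are present."""
    report = {"nvidia-smi": shutil.which("nvidia-smi") is not None}
    for name in packages:
        # find_spec returns None when a top-level package is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for item, present in check_environment().items():
        print(f"{item:12s} {'found' if present else 'MISSING'}")
```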

7 GPU rental platforms

GPU rental platforms vary in how they provide access to high-performance hardware. Some operate as large cloud providers with managed infrastructure, while others function as marketplaces that aggregate GPUs from data centers or individuals. We will look at seven platforms, their key features, and how their pricing models compare.

1. DigitalOcean Gradient™ GPU Droplets

DigitalOcean’s Gradient GPU Droplets are virtual machines equipped with NVIDIA or AMD GPUs, available without the upfront costs of hardware ownership. With GPU Droplets, customers can rent anything from a single GPU to a multi-GPU setup and scale resources to match training or inference requirements. Because they come with storage options and prebuilt AI/ML environments, they reduce the overhead of configuring rented GPUs, making them suitable for both experimental and production workloads.

Key features:

- Rent from one to eight GPUs within a GPU Droplet, suitable for both lightweight experiments and distributed training workloads.

- Offers separate storage tiers: a boot disk for persistent system data and a scratch disk for temporary, high-speed data staging, useful for handling large-scale model data.

- Comes with preinstalled NVIDIA drivers, CUDA libraries, and deep learning frameworks. Users can also deploy Hugging Face models instantly via Gradient™ AI 1-Click Models, simplifying environment setup.

With a 12-month commitment, an 8× H100 configuration is priced at $1.99/GPU/hour, 8× MI325X at $1.69/GPU/hour, and 8× MI300X at $1.49/GPU/hour. On-demand pricing starts at $0.76/hour for the RTX 4000 Ada, $1.57/hour for the RTX 6000 Ada and L40S, $1.99/hour for a single AMD MI300X, $3.39/hour for a single H100, and $3.44/hour for the H200.
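Per-GPU hourly rates are easy to misread as per-machine prices. The sketch below turns a per-GPU rate into node-hour and annual figures, using the 12-month 8× H100 rate quoted above as input; it assumes the commitment bills all 8,760 hours of the year and ignores storage, bandwidth, and taxes.

```python
HOURS_PER_YEAR = 24 * 365  # assumes every hour of a 365-day year is billed


def node_hourly(rate_per_gpu_hour: float, num_gpus: int) -> float:
    """Hourly price for the whole machine, given a per-GPU rate."""
    return rate_per_gpu_hour * num_gpus


def term_cost(rate_per_gpu_hour: float, num_gpus: int, hours: float) -> float:
    """Total spend over a billing term (storage, bandwidth, and taxes excluded)."""
    return node_hourly(rate_per_gpu_hour, num_gpus) * hours


if __name__ == "__main__":
    rate = 1.99  # $/GPU/hour for 8x H100 on a 12-month commitment, as quoted above
    print(f"Per node-hour: ${node_hourly(rate, 8):,.2f}")
    print(f"Per year:      ${term_cost(rate, 8, HOURS_PER_YEAR):,.2f}")
```

At that rate, one 8-GPU node works out to about $15.92 per hour, or just under $140,000 over a year of continuous billing.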

💡Whether you’re a beginner or a seasoned expert, our AI/ML articles help you learn, refine your knowledge, and stay ahead in the field.

2. Jarvis Labs

Jarvis Labs is a GPU cloud service that offers users access to NVIDIA GPUs through a straightforward wallet-based system. It provides a browser-accessible environment and supports both Jupyter notebooks and SSH. As an India-based provider, the company currently offers GPU rentals in India and Finland, with more regions coming soon.

Key features:

- Users can pause, resume, or delete GPU instances with a few clicks. When resuming, they can even switch GPU types to better match the task at hand.

- Offers launch templates with preloaded environments, such as the Automatic1111 Stable Diffusion web UI, along with support for customizable setups for AI/ML research and development.

- Users prepay into a wallet rather than committing to long-term contracts.

H100 and A100 GPUs are priced at $2.99/hr and $1.29/hr, respectively, and the RTX 5000 starts at around $0.39/hr.

3. Salad

SaladCloud is a distributed GPU cloud platform that aggregates underutilized consumer-grade GPUs from individuals, such as gamers and small-scale contributors, into a scalable compute network. Users deploy GPU-accelerated workloads like AI inference, data pipelines, or batch processing in containerized environments, paying only for active runtime with no cold-start charges. SaladCloud has over 1 million geo-distributed nodes across 180+ countries. The platform holds SOC 2 certification and uses isolation methods with redundant security measures to safeguard environments and data.

Key features:

- Workloads are deployed as fully managed, Docker-style containers, using the Salad Container Engine (SCE) for deployment, scaling, and orchestration across a decentralized GPU network.

- SaladCloud can offer new consumer GPU hardware (e.g., RTX 5090, 5080) soon after release, because individual contributors often adopt these cards early.

- Users are billed only once their container is running; initial setup phases (e.g., downloading container images) are free.

The A100 is available at around $0.40–$0.50/hr, depending on the card variant.

4. Runpod

Runpod lets users rent GPU compute resources on demand rather than owning physical hardware. It supports AI, machine learning, and HPC workloads by offering GPU access across 31 global regions. The platform provides templates, built-in developer tools, API integrations, and a CLI for AI/ML workflows.

Key features:

- Includes both dedicated GPU Pods for full control and serverless options for inference workloads, designed to reduce idle compute costs.

- Allows users to deploy anything from a single GPU to large clusters.

- Users can choose from cards like the L4, RTX 4090, and A40 up to high-end models such as the H100, H200, and AMD MI300X, depending on the scale and type of compute task.

On-demand rates for H100 configurations range from $1.99 to $2.69/hour.

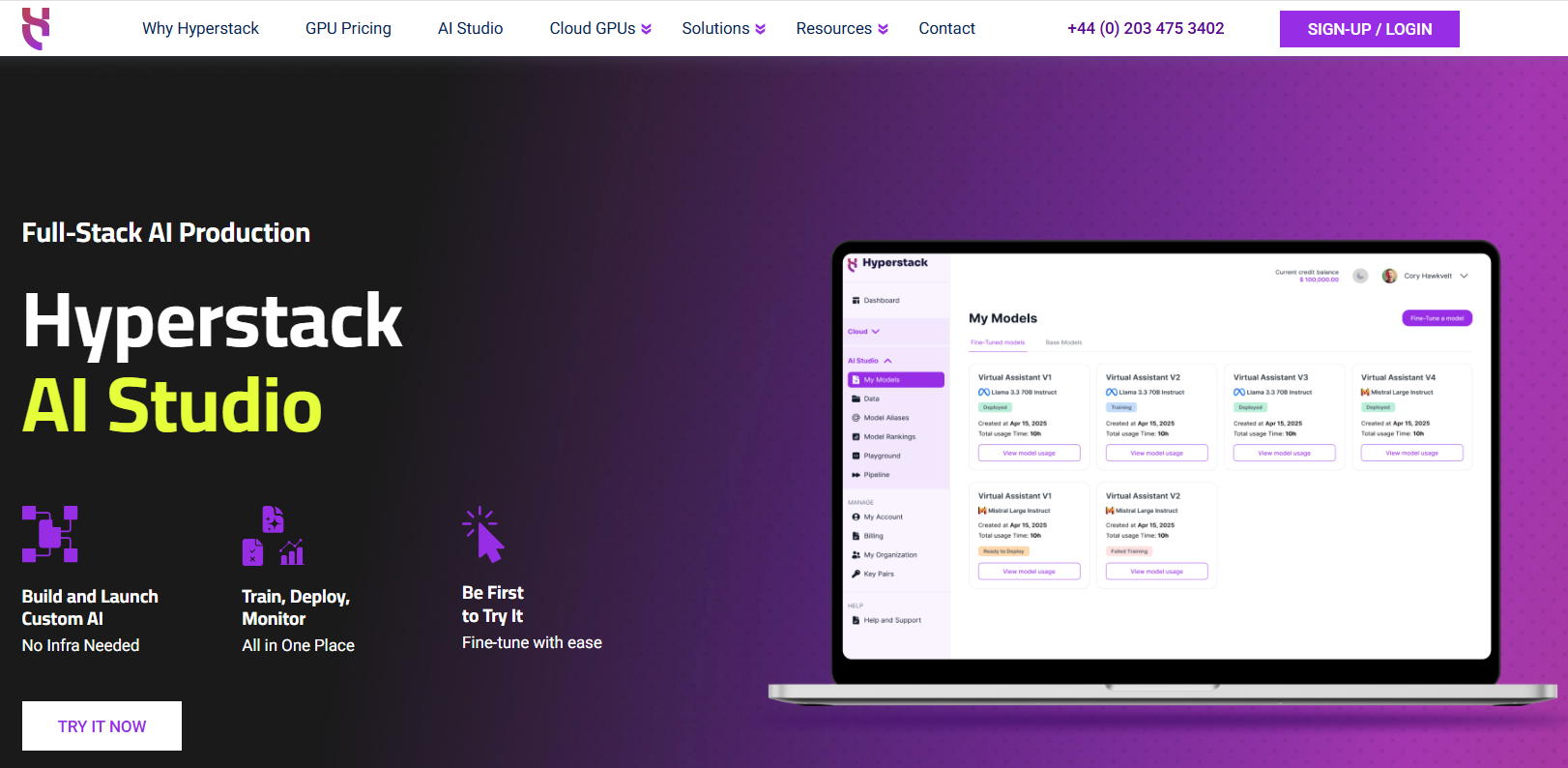

5. Hyperstack

Hyperstack offers on-demand access to enterprise-grade NVIDIA data center GPUs and RTX-series cards on a pay-as-you-go basis. Users can launch GPU-backed virtual machines or containers with per-minute billing, and can reserve capacity for lower rates. For simulation and visualization workflows, Hyperstack supports GPUs like the RTX A6000 and A40 for real-time rendering, physics-based simulations, and large-scale 3D modeling.
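Billing granularity matters most for short or bursty jobs. The sketch below compares per-minute billing with hypothetical whole-hour rounding for the same runtime; the $2.00/hour rate and the rounding rule are illustrative assumptions, not Hyperstack’s published terms.

```python
import math


def billed_cost(rate_per_hour: float, runtime_minutes: float, increment_minutes: int) -> float:
    """Cost when runtime is rounded up to the next billing increment."""
    increments = math.ceil(runtime_minutes / increment_minutes)
    return rate_per_hour * increments * increment_minutes / 60


if __name__ == "__main__":
    rate = 2.00  # hypothetical $/hour
    for minutes in (10, 61, 90):
        per_minute = billed_cost(rate, minutes, increment_minutes=1)
        per_hour = billed_cost(rate, minutes, increment_minutes=60)
        print(f"{minutes:>3} min job: per-minute ${per_minute:.2f}, hourly rounding ${per_hour:.2f}")
```

A 61-minute job, for example, costs about $2.03 with per-minute billing but $4.00 if usage were rounded up to whole hours.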

Key features:

- Offers options from A100 (PCIe, SXM, NVLink) to H100 (SXM, NVLink, PCIe).

- Provides high-speed networking, storage, role-based access control (RBAC), one-click deployment, and API/SDK support.

- Runs in data centers powered by 100% renewable energy, which may appeal to environmentally conscious teams.

H100 GPUs range from $1.90 to $2.40 per hour based on the configuration. A100 GPUs cost between $1.35 and $1.60 per hour for on-demand use.

6. Vast.ai

Vast.ai, founded in 2018, is a cloud-based GPU marketplace that connects users seeking AI/ML compute with hosts who rent out their own hardware by running Vast’s hosting software. Users search for and rent machines through a web interface, while hosts are responsible for hardware setup, driver installation, and listing their machines.

Key features:

- Two rental modes: on-demand, which runs continuously at a fixed price set by the host; and interruptible, which uses a bidding system where the highest bid wins, but jobs can be paused if outbid or if higher-priority rentals begin.

- Offers a filter for sensitive or production workloads that limits choices to verified hosts in data centers with ISO 27001 or Uptime Institute Tier 3/4 certifications.

- The marketplace includes offerings from professional data centers, individuals, and smaller-scale hosts.
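Because interruptible rentals can be paused when outbid, long jobs should save progress regularly and resume from the latest checkpoint. The sketch below shows that pattern with a JSON file standing in for real model and optimizer state (in PyTorch you would typically use `torch.save` and `torch.load`); the training step and the simulated interruption are placeholders.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename it over the old checkpoint in one step."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # a crash mid-write leaves the previous checkpoint intact


def load_checkpoint(path: str) -> dict:
    """Resume from the saved state, or start fresh if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def train(path: str, total_steps: int, checkpoint_every: int = 100,
          stop_after: Optional[int] = None) -> dict:
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        state["loss"] = 1.0 / (step + 1)  # placeholder for a real training step
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
        if stop_after is not None and state["step"] >= stop_after:
            break  # simulates the instance being paused mid-run
    return state
```

If a run is interrupted at step 250 with checkpoints every 100 steps, calling `train` again resumes from step 200, so at most one checkpoint interval of work is lost.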

Vast.ai shows pricing as “$/hr, P25,” meaning the 25th percentile: 25% of listings are cheaper and 75% are more expensive. This gives a realistic baseline that isn’t skewed by outliers. A100 GPUs start at around $0.61/hr, and H100 GPUs cost $1.80/hr.
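To see why a percentile is a steadier baseline than an average, the sketch below computes a nearest-rank 25th percentile over a hypothetical set of hourly listings that includes one outlier. Vast.ai’s exact percentile method isn’t described here, so nearest-rank is an assumption.

```python
import math


def percentile_nearest_rank(values: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest value with at least p% of values <= it."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical hourly listings for one GPU model, including one $6.00 outlier.
    listings = [1.65, 1.72, 1.80, 1.85, 1.95, 2.10, 2.40, 6.00]
    print("P25: ", percentile_nearest_rank(listings, 25))
    print("Mean:", round(sum(listings) / len(listings), 2))
```

In this example the P25 is $1.72/hr, while the mean is pulled up to about $2.43/hr by the single outlier.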

💡Looking for a simplified marketplace solution? DigitalOcean Marketplace provides preconfigured, 1-Click Apps, Kubernetes tools, SaaS add-ons, and AI models, for developers to deploy software and AI environments.

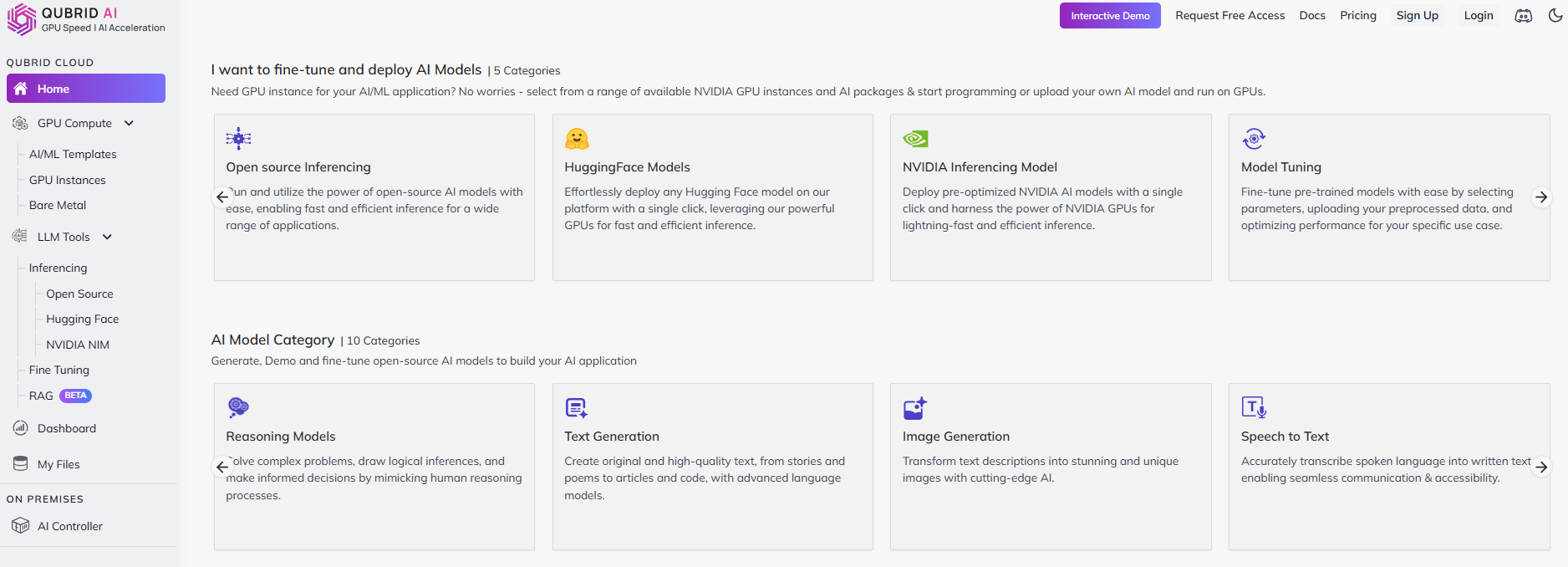

7. Qubrid AI

Qubrid AI is a hybrid GPU-as-a-service platform that lets users deploy NVIDIA GPU compute on demand or through reservation-based plans ranging from hourly to annual commitments. It provides both virtual machine and bare-metal server options, with hybrid deployment models covering cloud-based, on-premises, and appliance scenarios. The platform also focuses on developer experience, offering workflow tools and template-based environment setup.

Key features:

- Offers ready-to-use templates for frameworks and tools such as TensorFlow, PyTorch, ComfyUI, and Langflow.

- Lets users access GPU instances via SSH, Jupyter notebooks, or Visual Studio Code, depending on their workflow preferences.

- When launching GPU instances, users can set automatic shutdown times to prevent idle compute waste.
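If a provider doesn’t offer scheduled shutdowns, a similar safeguard can be scripted on the instance. This sketch polls GPU utilization with `nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits` and returns once every GPU has stayed below a threshold for a full window of samples. The 5% threshold, 10-sample window, and 60-second interval are assumptions, and the actual shutdown step is left to your provider’s API or CLI.

```python
import subprocess
import time
from collections import deque


def read_gpu_utilization() -> list[int]:
    """Current utilization (%) of each GPU, one value per line from nvidia-smi."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=utilization.gpu", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [int(value) for value in out.split()]


def is_idle(samples, threshold_pct: int = 5, window: int = 10) -> bool:
    """True if the last `window` samples all show every GPU below threshold_pct."""
    recent = list(samples)[-window:]
    return len(recent) == window and all(max(sample) < threshold_pct for sample in recent)


def wait_until_idle(poll_seconds: int = 60, window: int = 10) -> None:
    """Block until the GPUs have been idle for `window` consecutive polls."""
    history = deque(maxlen=window)
    while True:
        history.append(read_gpu_utilization())
        if is_idle(history, window=window):
            return
        time.sleep(poll_seconds)
```

Calling `wait_until_idle()` and then triggering your provider’s shutdown or snapshot mechanism approximates an automatic idle shutdown; the functions themselves only detect idleness.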

The H100 (80 GB) costs $2.73/hr, and the A100 (80 GB) costs $1.69/hr.


Renting GPUs FAQs

What platform is most cost-effective for long-term AI training projects?

The most cost-effective option for long-term AI training depends on how you balance price, reliability, and flexibility. In general, reserved or committed-usage plans provide predictable savings over time, while spot or interruptible rentals are cheaper per hour but can be preempted, which costs time unless you checkpoint regularly. For workloads that run continuously for weeks or months, a service that combines stable GPU access with flexible pricing usually offers the best value. For example, with DigitalOcean GPU Droplets you can scale training environments quickly while keeping costs transparent and manageable.

What are the hidden costs in GPU rental pricing?

Watch for egress and data-transfer fees, inter-region traffic, storage (object/block) and request costs, public IPs, VPN or interconnect charges, and support plans. If you move large amounts of data or run long jobs, these can exceed the cost of the GPU time itself.
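A simple monthly estimate shows how data transfer can overtake GPU spend. All rates and usage figures in this sketch, including the free egress allowance, are hypothetical placeholders to replace with your provider’s published pricing.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    gpu_hours: float
    egress_gb: float   # data transferred out of the provider's network
    storage_gb: float  # average volume or object storage kept for the month


@dataclass
class Rates:
    # Hypothetical prices; replace with your provider's published rates.
    gpu_per_hour: float
    egress_per_gb: float
    free_egress_gb: float
    storage_per_gb_month: float


def monthly_bill(usage: MonthlyUsage, rates: Rates) -> dict:
    """Break a month's spend into GPU, egress, and storage line items."""
    billable_egress = max(0.0, usage.egress_gb - rates.free_egress_gb)
    bill = {
        "gpu": usage.gpu_hours * rates.gpu_per_hour,
        "egress": billable_egress * rates.egress_per_gb,
        "storage": usage.storage_gb * rates.storage_per_gb_month,
    }
    bill["total"] = sum(bill.values())  # summed before "total" is added
    return bill


if __name__ == "__main__":
    usage = MonthlyUsage(gpu_hours=200, egress_gb=20_000, storage_gb=5_000)
    rates = Rates(gpu_per_hour=2.00, egress_per_gb=0.05,
                  free_egress_gb=1_000, storage_per_gb_month=0.02)
    for item, cost in monthly_bill(usage, rates).items():
        print(f"{item:8s} ${cost:,.2f}")
```

With these placeholder numbers, 200 GPU-hours cost $400 while moving 20 TB off the platform costs $950, so egress dominates the bill.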

Do GPU rental providers support open-source model marketplaces (Hugging Face, Replicate, etc.)?

Yes, most GPU rental providers integrate with open-source ecosystems to reduce setup friction. This makes it easier for users to experiment with community models or deploy custom fine-tuned variants without manually building environments.

- Hugging Face: Many platforms offer preloaded Hugging Face libraries, so you can spin up an instance and pull models and datasets directly.

- Replicate & Modal: Some providers offer APIs or templates for deploying models from these platforms.

- Custom containers: Most GPU rental providers let you bring your own Docker containers with preinstalled frameworks and integrations for open-source model marketplaces. For instance, DigitalOcean supports custom containers through App Platform, where you can deploy images from Docker Hub, GitHub Container Registry, or DigitalOcean’s own container registry.

Which GPUs are best for inference vs. fine-tuning workloads?

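For inference workloads, the best choice is GPUs that deliver high memory bandwidth and low latency, such as the NVIDIA L40S, A10G, or RTX 4090. A single 24 GB card such as the A10G or RTX 4090 comfortably serves a 7B model in FP16 (about 14 GB of weights), and the 48 GB L40S can hold a 13B model in FP16. Larger models, up to around 70B parameters, need INT8 or 4-bit quantization, multiple GPUs, or both.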

Fine-tuning places heavier demands on GPU resources, requiring more VRAM and stability over long training jobs. Enterprise-grade GPUs like the NVIDIA A100 80GB, H100, or AMD MI300X are preferred, especially when scaling across multiple GPUs, handling checkpointing, and supporting distributed training.

Accelerate your AI projects with DigitalOcean Gradient™ AI Droplets

Unlock the power of GPUs for your AI and machine learning projects. DigitalOcean GPU Droplets offer on-demand access to high-performance computing resources, enabling developers, startups, and innovators to train models, process large datasets, and scale AI projects without complexity or upfront investments.

Key features:

- Flexible configurations from single-GPU to 8-GPU setups

- Preinstalled Python and deep learning software packages

- High-performance local boot and scratch disks included

Sign up today and unlock the possibilities of GPU Droplets. For custom solutions, larger GPU allocations, or reserved instances, contact our sales team to learn how DigitalOcean can power your most demanding AI/ML workloads.

About the author

Sujatha R is a Technical Writer at DigitalOcean. She has more than 10 years of experience creating clear and engaging technical documentation, specializing in cloud computing, artificial intelligence, and machine learning. ✍️ She combines her technical expertise with a passion for technology to help developers and tech enthusiasts navigate the cloud’s complexity.

